ewg4 - Richard ('Dick') Hudson


Richard Hudson, 1990, English Word Grammar (Blackwell)

Chapter 4

LINGUISTIC AND NON-LINGUISTIC CONCEPTS

4.1 GENERAL

When language is studied as a mental phenomenon - as it is in WG (Word Grammar) - the question arises how it is

related to other kinds of mental phenomena. What is the relation between what we know about words, and what we know

about people, places, the weather, social behaviour (other than speaking), and so on? It goes without saying that there

are ways of passing between the two kinds of knowledge, when understanding and producing speech; so there must be

some kind of connection between what we know, for instance, about the word CAT and whatever encyclopedic facts we

know about cats. If there were no such connection, it would not be possible to infer that a thing that is referred to by CAT

is likely to have whiskers, to like milk, and so on. This much is agreed. Beyond this there is considerable disagreement,

regarding both the nature of these connections and the general properties of language compared with other types of

knowledge.

On the one hand, there are those who are convinced that language is unique, both in its structure and in the way we

process it. This view is particularly closely associated with Fodor (1983), who believes that language constitutes an 'input

module' which converts the speech that we hear into a representation in terms of an abstract conceptual system (the

'language of thought') to which quite different types of mental processes can be applied in order to derive information. The

linguistic system itself is not formulated in terms of the 'language of thought', nor is it processed by the same inference

machinery. A similar view, though regarding knowledge rather than processing, has for long been espoused by Chomsky,

and is widely accepted by his supporters. The introductory remarks to Newmeyer (1988:4) are typical:

Much of the evidence for [the popularity of generative grammar] must be credited to the massive evidence that has

accumulated in the past decade for the idea of an autonomous linguistic competence, that is, for the existence of a

grammatical system whose primitive terms and principles are not artifacts of a system that encompasses both human

language and other human faculties or abilities.

These ideas of Chomsky's and Fodor's represent a particularly clear version of a view which is in fact typical of

twentieth-century structural linguistics. I think it would be fair to say that most linguists have emphasized the distinctness

of the linguistic system, and have consequently assumed that the system has boundaries - including one boundary, at the

'signal' side, between phonetics and phonology, and another at the 'message' side, between semantics and pragmatics

(or perhaps more helpfully, between linguistic and encyclopedic information). I think it is also true that linguists who have

assumed the existence of these boundaries have still not been able to produce satisfactory criteria for locating them, so

they remain a source of problems rather than of explanations.

The two boundaries just mentioned are not the only ones that ought to exist if language is distinct, but which are

problematic; the same is true, for example, when we consider knowledge about matters of style or other sociolinguistic

matters (e.g. the difference between TRY and ATTEMPT, or between PRETTY and BONNY). Are the facts about these

differences part of competence or not? Some linguists are quite sure that they are not (e.g. Smith and Wilson 1979: 37),

but this is only because they can see that such knowledge is like other kinds of social knowledge, in contrast with

linguistic competence which they assume to be unique. Similar problems arise in the uncertain border area between

syntax and discourse (e.g. are the two clauses of I'll tell you one thing - I won't do that again in a hurry! put together by

rules of grammar or by pragmatic principles that govern discourse?).

The alternative to the dominant idea of structuralism is the view that language is a particular case of more general types

of knowledge, which shares the properties of other types while also having some distinct properties of its own. The most

obvious of these properties is that knowledge of language is knowledge about words, but this is just a matter of definition:

if some bit of knowledge was not about words, we should not call it 'knowledge of language'. However it is a very

interesting research question whether there are any properties which correlate with this one, without following logically

from it. It is certain that in some respects knowledge of language is unique, in that it seems to draw on a vocabulary of

analytical categories that do not apply outside language - categories like 'preposition', 'direct object', or 'affix'. However

these differences do not support a general claim that language is a unique system, in the sense of the view described

above, because they coexist with a great number of similarities between linguistic and other kinds of knowledge.

The similarities between language and non-language have been emphasized in a number of linguistic traditions. One of

the best known is Pike's attempt (1967) to produce a 'Unified Theory of the Structure of Human Behavior'. It is true that

this theory was about behaviour rather than about thought, so it did not include any subtheories about how experience is

processed, but at least the structures proposed could be taken as models for human thought about human behaviour.

More explicitly mental are the theories which I referred to in chapter 1 under the slogan 'cognitivism', all of which

emphasize similarities rather than differences between linguistic and non-linguistic knowledge. As I explained there, WG

is part of that tradition.

The choice between these two approaches is important because it affects the kinds of explanation

which are available for facts about language. If language is a particular case of more general mental phenomena, then

language is as it is because thought in general is as it is; so the best explanation for some general fact F about language

is that F is a particular case of some more general fact still which is true of thought in general. In the other tradition such

explanations are obviously excluded in principle, which leaves only two possible kinds of explanation. One is the

functional kind - F is true of language because of the special circumstances under which language is used and the

functions it has to fulfil. I have no quarrel with functional explanations, but at least some such explanations are sufficiently

general to apply to systems other than language. The other kind of explanation is in terms of arbitrary genetic

programming; but since the genes are an inscrutable 'black box' this is no explanation at all.

How can we decide between these two families of theories? No doubt the choice is made by some individuals on an a

priori basis, but it should be possible to investigate it empirically. As far as language structure is concerned, what

similarities are there between the structures found in language and those found outside language? And for language

processing similar questions can be asked.

The trouble is, of course, that one first has to develop, or choose, a theory of language, and the answer will vary

dramatically according to which theory one takes as the basis of the comparison: one theory makes language look very

different from anything one can imagine outside language, while another makes it look very similar. Theories can be

distinguished empirically and it would be comforting to believe that the facts of language, on their own, will eventually

eliminate all but one linguistic theory, independently of the comparison between linguistic and non-linguistic knowledge.

This may in fact be true, but meanwhile we are left with a plethora of theoretical alternatives and no easy empirical means

for choosing among them. To make matters worse, theories are different because they rest on different premises, and one

of the most important of these differences is precisely the question we want to investigate: how much similarity is

assumed between language and non-language. It is clear, then, that the particular theory of language that we choose is

crucial if we want to explore the relations between language and non-language.

The most promising research strategy seems to be one which aims directly at throwing light on the relations between

linguistic and non-linguistic knowledge. The question is whether the two kinds of knowledge are in fact similar, so we need

to find out whether it is possible to construct a single overarching theory capable of accommodating both. What this

means is that the linguistic theory should be developed with the explicit purpose of maximizing the similarities to

non-linguistic knowledge, while also respecting the known facts about language. The result could be a theory in which the

similarities to non-linguistic knowledge are nil, or negligible; if so, then one possible conclusion is that the research has

lacked imagination, but if this can be excluded, the important conclusion is that linguistic knowledge really is unique. A

more positive outcome would obviously prove the existence of important similarities between the two kinds of knowledge.

This research plan may seem obvious, but it contrasts sharply with the strategy underlying most of linguistics. As

mentioned earlier, most linguists take it for granted that language is unique, so they feel entitled to develop theories of

language structure which would be hard to apply to anything other than language - classic examples being

transformational grammar and the X-bar theory of syntax. This is a great pity, because the work is often of very high

quality, when judged in its own terms. However good it may be, such work actually tells us nothing whatsoever about the

relations between linguistic and non-linguistic knowledge, because all it demonstrates is that it is possible to produce a

theory of language which makes them look different. It does not prove that it is impossible to produce one according to

which they are similar. Admittedly, no research ever could prove this once and for all because it is always possible that

some as yet unknown theory could be developed; but the failure of serious and extensive research along the lines I have

suggested would be enough to convince most of us that language really was unique.

It is for these reasons that WG maximizes the similarities between linguistic and non-linguistic knowledge. What has

emerged so far seems to support the 'cognitivist' view that language really is similar to non-language, but it is possible that

so-far unnoticed fundamental differences will become apparent. If so, they will certainly be reported as discoveries of

great importance and interest. Meanwhile I think we can confidently conclude that at least some parts of language are

definitely not unique.

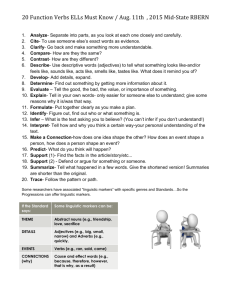

4.2 PROCESSING

One of the most obvious and striking similarities between language and non-language is that knowledge is exploited in

both cases by means of default inheritance. The principles of default inheritance outlined in chapter 3 can be illustrated

equally easily in relation to linguistic and to non-linguistic data.

Let us start with a linguistic example from chapter 3, relating to some hypothetical sentence in which the first two words,

w1 and w2, are respectively Smudge and either purrs or purred.

[1] type of referent of subject of PURR = cat.

[2] w2 isa PURR.

[3] subject of w2 = w1.

[4] w1 isa SMUDGE.

[5] referent of SMUDGE = Smudge.

From these five facts we can infer, by 'derivation', at least one other fact:

[6] type of Smudge = cat.

Now consider the following non-linguistic facts, expressed in the same format but this time referring to two concepts c1 and c2.

[7] nationality of composer of prelude of 'Fidelio' = German.

[8] c2 isa 'Fidelio'.

[9] prelude of c2 = c1.

[10] c1 isa 'Leonora 3'.

[11] composer of 'Leonora 3' = Beethoven.

We can now derive fact [12], by precisely the same principles as we applied in arriving at [6].

[12] nationality of Beethoven = German.
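The claim that [6] and [12] follow 'by precisely the same principles' can be made concrete with a small program. What follows is a minimal sketch, not WG's own formalism: it assumes that a fact such as 'type of referent of subject of PURR = cat' can be stored as a chain of relation names applied to one concept, and it reduces derivation to two rules, default inheritance down isa links and substitution of a known value for 'r of X'. The function name `derive` and the tuple encoding are hypothetical.

```python
from typing import Dict, Tuple

# A fact "r1 of r2 of ... of X = V" is stored as {((r1, r2, ...), X): V}.
# The last relation in the chain is the one applied to X first.
Key = Tuple[Tuple[str, ...], str]


def derive(facts: Dict[Key, str], isa: Dict[str, str]) -> Dict[Key, str]:
    """Close a set of facts under two rules until no new fact appears.

    1. Default inheritance: if X isa T, X inherits each fact stated for T,
       unless X already has a fact with the same relation chain.
    2. Substitution: from "chain of r of X = V" and "r of X = Y",
       derive "chain of Y = V".
    """
    known = dict(facts)
    while True:
        new: Dict[Key, str] = {}
        for (chain, concept), value in known.items():
            # Rule 1: copy a fact about a type down to its instances.
            for instance, supertype in isa.items():
                if supertype == concept and (chain, instance) not in known:
                    new.setdefault((chain, instance), value)
            # Rule 2: replace the innermost "r of X" by its known value.
            if len(chain) > 1:
                filler = known.get(((chain[-1],), concept))
                if filler is not None and (chain[:-1], filler) not in known:
                    new.setdefault((chain[:-1], filler), value)
        if not new:
            return known
        known.update(new)


# Facts [1]-[5]: the linguistic example.
words = derive(
    {
        (("type", "referent", "subject"), "PURR"): "cat",   # [1]
        (("subject",), "w2"): "w1",                          # [3]
        (("referent",), "SMUDGE"): "Smudge",                 # [5]
    },
    isa={"w2": "PURR", "w1": "SMUDGE"},                      # [2], [4]
)

# Facts [7]-[11]: the musical example, passed to the same function.
music = derive(
    {
        (("nationality", "composer", "prelude"), "Fidelio"): "German",  # [7]
        (("prelude",), "c2"): "c1",                                       # [9]
        (("composer",), "Leonora 3"): "Beethoven",                        # [11]
    },
    isa={"c2": "Fidelio", "c1": "Leonora 3"},                            # [8], [10]
)

print(words[(("type",), "Smudge")])            # cat     (fact [6])
print(music[(("nationality",), "Beethoven")])  # German  (fact [12])
```

Nothing in `derive` knows whether a concept is a word or an opera, which is the similarity being illustrated. The sketch takes the isa links as given (in the text they are set up by the Best Fit Principle) and does not handle conflicting defaults.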

The relevance of default inheritance to both kinds of knowledge is probably sufficiently obvious to need no further

comment. Another point of similarity in processing lies in the way in which new experience is linked to existing knowledge,

in such a way as to allow inheritance to apply. In both cases the linkage seems to be done by the same Best Fit

Principle that we discussed in section 3.6.

Though it is a major mystery precisely how the Best Fit Principle works, it seems clear that the same principles apply to

linguistic and to non-linguistic experience. Take, for example, the string of sounds which could equally well be interpreted

as a realization of either of the sentences in

(1)

a Let senders pay.

b Let's end his pay.

The total string of sounds is the piece of experience to be understood, but we clearly understand it cumulatively, as we

receive it, rather than waiting till 'the end' (whatever that might mean). We may assume, for simplicity, that the uttered

sounds are completely regular - i.e. that their pronunciations match perfectly the normal pronunciations of the stored

words - but even so mental activity must go on before the understander arrives at a single interpretation. At least two

possible segmentations have to be considered in tandem until one of them can be eliminated, and it seems unlikely that

this can take place until several words with some content have been found. It is hard to reconcile this kind of problem with

the Fodorian view of language as an input module in which 'decoding is only a necessary (and totally automatic) preliminary' to

'inferring what your interlocutor intended to communicate' (Smith 1989: 9).
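The need to keep both segmentations of (1) alive, sound by sound, can be illustrated with a small incremental segmenter. This is a sketch under loud assumptions: the 'sounds' are a rough ASCII spelling invented for the purpose (with his in its unstressed form), the six-word lexicon is hypothetical, and only knowledge of stored words is consulted, so the program can show that the ambiguity persists but cannot resolve it.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical mini-lexicon: word -> rough ASCII pronunciation (not a real
# transcription scheme). "his" is given its unstressed form, without /h/.
LEXICON: Dict[str, str] = {
    "let": "let",
    "let's": "lets",
    "senders": "sendiz",
    "end": "end",
    "his": "iz",
    "pay": "pei",
}

# A hypothesis pairs the words recognized so far with sounds not yet assigned.
Hypothesis = Tuple[Tuple[str, ...], str]


def segment_incrementally(sounds: str, lexicon: Dict[str, str]):
    """Consume one sound at a time, keeping every segmentation that is still
    compatible with the lexicon; return the complete segmentations and the
    number of hypotheses alive after each sound."""
    by_pron: Dict[str, List[str]] = {}
    for word, pron in lexicon.items():
        by_pron.setdefault(pron, []).append(word)

    live: Set[Hypothesis] = {((), "")}
    live_counts: List[int] = []
    for sound in sounds:
        next_live: Set[Hypothesis] = set()
        for words, pending in live:
            candidate = pending + sound
            # Stay inside the current word if a longer stored form could still match.
            if any(p.startswith(candidate) and p != candidate for p in by_pron):
                next_live.add((words, candidate))
            # Close the current word if the pending sounds match a stored form.
            for word in by_pron.get(candidate, []):
                next_live.add((words + (word,), ""))
        live = next_live  # hypotheses that fit neither case are dropped here
        live_counts.append(len(live))

    complete = sorted(words for words, pending in live if pending == "")
    return complete, live_counts


parses, counts = segment_incrementally("letsendizpei", LEXICON)
print(parses)  # [('let', 'senders', 'pay'), ("let's", 'end', 'his', 'pay')]
print(counts)  # [1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2]
```

From the third sound onwards two hypotheses run in tandem, and lexical knowledge alone never eliminates either; choosing between them needs the wider knowledge discussed in the following paragraphs.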

The understanding of speech shows considerable similarities to the interpretation of other kinds of perceived input,

including vision, though no doubt there are also important differences. Most obviously we could draw a parallel with

reading, and more particularly with reading hand-written texts where rather similar problems of segmentation can arise.

Further afield are commonplace visual scenes, which can often be interpreted in different ways; for example, does that

brightly coloured thing belong to the plant on the left, in which case it must be a flower, or is it a leaf belonging to the bush

on the right?

The overall generalization seems to be that in all these cases we interpret our experience in such a way as to maximize

the coherence of our knowledge about the world - in other words, in such a way as to make sense of the experience, as

part of our total experience of the world. We do not restrict ourselves to some kind of immediate, very easily accessible,

context and evaluate alternatives only in relation to that. For instance, in processing speech we might restrict our attention

to the words in the lexicon, and prefer the interpretation which maximizes similarities between the experienced sounds E

and some word or words in the lexicon; but if we did this we would never be able to choose between homonyms.

Nor do we restrict attention to the purely linguistic context (however this might be defined), regardless of the wider

context of the current discourse, our knowledge of the world, and so on. To do this would clearly simplify the job of

processing but it would mean that we could never prefer a grammatically deviant interpretation to a well-formed one on

the grounds that the former made better total sense. For example, suppose a foreigner says (2).

(2) All the sheep is mine.

It is easy to imagine a situation in which a number of sheep are in view, and no single sheep has been picked out (less

still a single sheep corpse which might explain a non-count interpretation of sheep). In that case we would surely prefer to

take is as a mistake for are, rather than blindly follow the grammar and conclude that he was talking grammatical

nonsense. A similar explanation is needed for the way in which we cope, as hearers, with slips of the tongue produced by

native speakers.

Two consequences follow from these rather obvious observations about the processing of speech. One is that speech

is processed in similar ways to other kinds of experience, since it seems clear that we take account of our total knowledge

when processing visual experience too. To continue the previous example, let us assume that the ambiguous object is

yellow, and that the plant to the left has red flowers. Suppose further that we know, of plants, that if a plant has flowers,

they are typically all of the same colour. If we restricted ourselves to this knowledge, we should have to reject the analysis

in which the ambiguous object was a flower on the plant to the left. But suppose we also know that a small child has been

going round this garden with a yellow paint-spray, colouring one flower on each plant - and that we cannot see any other

yellow flower. This extra knowledge not only allows us, but forces us, to accept an 'ungrammatical' analysis of the

ambiguous object, just as in the example (2).

The second conclusion is that there are important similarities, and interactions, among the processes by which different

kinds of information are deduced about an object such as a perceived string of sounds. In particular there can be no

important discontinuity between the 'strictly linguistic' processing, which yields a linguistic structure, and the inference

mechanisms which apply to this output and yield its 'content' and the general message.

In this respect, then, the view of processing outlined above contrasts with that of Fodor (1983) and theories inspired by

Fodor, notably Relevance Theory (Sperber and Wilson 1986, 1987). As I said earlier, the main point of Fodor's claim is

that language is an 'input-module', which means that the linguistic processing of speech is done by a highly specialized

decoding mechanism that is blind to any information other than the purely linguistic. Because of this inability to use other

kinds of information, the decoder is said to be 'information-encapsulated'. The output of this mechanism is a

representation of a message, to which a completely different set of mental operations can apply - the inference rules of

general intelligence, which can take account of general knowledge.

This model has proved popular with psycholinguists, though (according to Tanenhaus 1988) less so with psychologists.

Various kinds of experimental evidence have been adduced in support of it, surveyed in Frazier 1988 and Carston 1988.

As Carston points out, one of the shortcomings of this evidence is that much of it involves the subjects' ability to read,

rather than to process spoken language, so to the extent that it supports any kind of modular approach, the module

concerned is one that deals with reading. This conclusion is hard to reconcile with the general innatist position of Fodor

and his followers, since it is hard to take seriously the idea that we are genetically programmed for reading (a skill that

only a minority of mankind possesses, and which has only been around in any form for a few thousand years).

Another rather obvious weakness is that if the decoder cannot refer to information outside itself, then the whole of the

grammar (including the lexicon) must be inside the decoder; but if that is so, what happens when information flows in the

opposite direction? What does the encoder - the mechanism for speaking - refer to? These two very general worries about

the Fodor model suggest that the psycholinguistic evidence needs a different interpretation.

An alternative which is compatible with the claims of WG is based on the following general principle, which we can call

the Principle of Universal Relevance.

The Universal Relevance Principle

All information becomes available as soon as it becomes relevant.

This is of course the theory which was once standard among psycholinguists, and which is widely believed to have been

refuted by experimental evidence - what Carston calls the 'fall-out' theory.

One example of supposedly refuting evidence is research by Swinney, Seidenberg and others which is quoted in

Frazier (1988: 21) and which showed that when we hear a word (in a sentence), we immediately access all its possible

meanings, even if most of these meanings are obviously incompatible with the current context. From this they conclude

that contextual information is not available to the processor, on the grounds that if it had been available, it would have

been used to guide the search for meanings. However, there is an alternative explanation which is much simpler:

contextual information is used by the Best Fit Principle, to choose the interpretation which fits the context best, and can

only be applied after all the candidates have been mustered. It goes without saying that a choice cannot be made before

the range of alternatives is known, so the results found in these experiments are exactly as one might expect.

Frazier (1988: 24) suggests that the choice among alternative syntactic analyses is made very differently, namely in

sequence rather than in parallel. According to the evidence she quotes, we start with the most likely interpretation (e.g.

one in which a prepositional phrase is attached to the nearest potential head). The experimental results on which this

conclusion is based have more recently been questioned by Altmann and Steedman (1988), who conclude that what

Swinney and others showed for lexical choices is also true of syntactic choices.

If this is generally so, then we can assume that whenever choices are available, whether they are lexical or syntactic,

they are considered in parallel until any of them can be eliminated as poor candidates by the Best Fit Principle. This

conclusion seems to fit with the one reached by Frazier (1988: 29) at the end of her survey, that the Fodor view does not

account adequately for the choice among alternative syntactic analyses in those cases where the first choice turns out to

be the wrong one.

It follows from the Principle of Universal Relevance that information is applied as soon as possible, which leads to the

view that parsing is 'incremental' - in other words, that we build up a complete sentence structure at all levels, one word

at a time, rather than waiting till the end of the sentence or clause before mapping a surface string onto deeper levels of

structure. This view has been defended for some time by Marslen-Wilson and his colleagues (e.g. Marslen-Wilson 1975a,

b), but now seems to be widely accepted among psycholinguists (Frazier 1988, Altmann and Steedman 1988). According

to our general principle of Universal Relevance there is no need to assume any smallest unit for processing, such as the

word; and this is surely plausible, given the evidence that listeners can identify a word before it is complete (Flores

d'Arcais 1988). Thus, we predict that a hearer will apply knowledge about which words exist as soon as any word starts to

be processed, but of course at this stage there is normally no way in which contextual expectations about meaning or

syntactic structure can be applied to this particular word, given that very few sentential contexts are sufficiently restrictive

to allow either the meaning or the syntactic properties of the next word to be predicted.
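The observation that a word can be identified before it is complete can be sketched as a shrinking set of candidates, in the spirit of Marslen-Wilson's cohort model. Assumptions: letters stand in for sounds, the five-word lexicon is hypothetical, and only knowledge of which words exist is used, which is the kind of knowledge the paragraph above expects to be applicable at the start of a word.

```python
from typing import List, Optional, Tuple

# Hypothetical lexicon; spelling stands in for pronunciation.
LEXICON: List[str] = ["elegant", "element", "elephant", "elevator", "cat"]


def cohort_trace(word: str, lexicon: List[str]) -> List[Tuple[str, List[str]]]:
    """After each successive segment, list the stored words still compatible with the input."""
    return [
        (word[:i], [w for w in lexicon if w.startswith(word[:i])])
        for i in range(1, len(word) + 1)
    ]


def recognition_point(word: str, lexicon: List[str]) -> Optional[int]:
    """Number of segments heard before only `word` remains, or None if it never
    becomes unique (e.g. when it is the beginning of a longer stored word)."""
    for heard, (_, cohort) in enumerate(cohort_trace(word, lexicon), start=1):
        if cohort == [word]:
            return heard
    return None


for prefix, cohort in cohort_trace("elephant", LEXICON)[:4]:
    print(prefix, cohort)
print(recognition_point("elephant", LEXICON), "of", len("elephant"))  # 4 of 8
```

In this toy lexicon elephant is identified after four of its eight segments. A word that begins a longer stored word (cat beside a hypothetical catalogue) gets `None`, since it cannot be identified from its own segments before the next sounds arrive.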

One important issue in theorizing about speech processing which seems to have received remarkably little attention

from psycholinguists is the question of segmentation - how do we locate word-boundaries in strings that could be

segmented in different ways, such as our example above (Let senders pay versus Let's end his pay). The Principle of

Universal Relevance suggests a considerable amount of feed-back between different levels of analysis in such cases, but

not only in a handful of specially ambiguous cases; it is normal for the phonetic stream to be continuous and to lack clear

signals for word boundaries, so we must assume that feed-back is a normal part of speech processing - and not just a

special guessing strategy applied, perhaps consciously, only when the normal machinery fails.

As we have seen, the choice between the modular and non-modular views of processing is reminiscent of the old debate

about whether rule-ordering is extrinsic or intrinsic. Extrinsic ordering involves stipulated restrictions. One way of imposing

an order on rules is to divide them into a number of distinct classes, and to order these classes (e.g. rules were classified

as 'lexical rules', 'lexical-insertion rules', 'transformations', and so on; transformations were classified as 'pre-cyclic', 'cyclic'

or 'post-cyclic'). In this approach, two things need to be stipulated: the class-membership of each rule, and the order in

which different classes of rule apply. The 'intrinsic' alternative was to allow rules to apply in any order at all, i.e. whenever

they became relevant. Since some rules 'feed' other rules it follows that some orderings will not be possible, but of course

these restrictions need not be stipulated either in the grammar or in the general theory. The Fodor theory of language as

an input-system claims that there is extrinsic, module-based, ordering of operations in processing, while the Principle of

Universal Relevance claims that all ordering is intrinsic.
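The contrast between stipulated and intrinsic ordering can be shown with two toy rules. Both rules and the form 'in+possible' are hypothetical simplifications, chosen only because one rule feeds the other: erasing the morpheme boundary '+' creates the 'np' sequence that the assimilation rule needs. Under extrinsic ordering each rule applies once in a listed order, so the listing matters; under intrinsic ordering any rule applies whenever its input is present, and the feeding order emerges without being stated.

```python
from typing import List, Tuple

Rule = Tuple[str, str]  # (pattern, replacement), applied with str.replace

BOUNDARY_ERASURE: Rule = ("+", "")   # hypothetical: delete the morpheme boundary
ASSIMILATION: Rule = ("np", "mp")    # hypothetical: n becomes m before p


def apply_extrinsic(form: str, ordered_rules: List[Rule]) -> str:
    """Each rule gets exactly one chance, in the stipulated order."""
    for pattern, replacement in ordered_rules:
        form = form.replace(pattern, replacement)
    return form


def apply_intrinsic(form: str, rules: List[Rule]) -> str:
    """Apply any rule whose pattern is present, repeatedly, until none applies.
    This toy loop assumes the rules cannot undo each other's output."""
    changed = True
    while changed:
        changed = False
        for pattern, replacement in rules:
            if pattern in form:
                form = form.replace(pattern, replacement)
                changed = True
    return form


for rules in ([BOUNDARY_ERASURE, ASSIMILATION], [ASSIMILATION, BOUNDARY_ERASURE]):
    print(apply_extrinsic("in+possible", rules), apply_intrinsic("in+possible", rules))
# impossible impossible
# inpossible impossible
```

The second line shows the difference: extrinsic application gives the wrong result unless the order is stipulated correctly, whereas intrinsic application reaches 'impossible' from either listing. The sketch ignores bleeding and competing rules.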

I should emphasize that I am not arguing that all processing, including semantic and pragmatic processing, is a matter

of 'decoding' (or 'encoding'), an automatic mapping algorithm. On the contrary, I agree entirely with Fodor, Sperber and

Wilson and their colleagues that this view is inappropriate for higher-level operations, which must be much more

intelligent. What I am arguing is essentially that all processing, at all levels, is intelligent, and follows much the same lines;

in other words, the 'coding' model is wrong at all levels.

Thus if Sperber and Wilson's 'Principle of Relevance' applies to the choice between alternative messages, it also

applies to the choice between alternative syntactic (or other linguistic) analyses. An important consequence of the

Principle of Relevance is that a hearer prefers an analysis which leads to the maximum number of new inferences. It is

easy to see how this helps us to choose, say, between different meanings of the word pen in the sentence We need a

new pen, according to whether we are discussing writing or pigs (Carston 1988). Suppose we are watching some pigs on

the rampage; if we take pen in the sense 'enclosure for animals', it leads to a variety of inferences, such as that the

reason why the pigs are loose is because their pen is old, but taking pen in the sense 'writing instrument' leads nowhere.

Much the same seems to happen when we choose between syntactic analyses. E.g. suppose we are processing It will

sink, and we are currently dealing with sink, which could be either a noun or a verb. We know that will allows a verb after

it, as its complement, so if we take sink as a verb, we allow ourselves to infer that sink is the complement of will; but if we

take it as a noun we cannot infer anything. It seems likely, then, that Sperber and Wilson's Principle of Relevance, when

taken in conjunction with our Principle of Universal Relevance, applies much more widely than has been claimed so far.
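The selection criterion described above, preferring the analysis that licenses the most new inferences, can be sketched with forward chaining over a handful of rules. Everything in the rule sets below is a hypothetical toy encoding of the two examples in the text (pen among loose pigs, and sink after will); the point is only that one selection procedure can serve both the lexical and the syntactic choice, with all candidates mustered before any is chosen.

```python
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (premises, conclusion)


def new_inferences(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Forward-chain to a fixed point and return only the conclusions not already given."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known - facts


def most_relevant(candidates: List[str], context: Set[str], rules: List[Rule]) -> str:
    """Keep every candidate analysis in play, then prefer the one adding most inferences."""
    return max(candidates, key=lambda c: len(new_inferences(context | {c}, rules)))


# Lexical choice: PEN while watching pigs on the rampage (toy rules).
pen_rules: List[Rule] = [
    (frozenset({"PEN = enclosure", "pigs are loose"}), "the pigs got out of their pen"),
    (frozenset({"the pigs got out of their pen", "we need a new pen"}),
     "the pigs are loose because their pen is old"),
]
pen_context = {"pigs are loose", "we need a new pen"}
print(most_relevant(["PEN = writing instrument", "PEN = enclosure"], pen_context, pen_rules))
# PEN = enclosure

# Syntactic choice: SINK after WILL (toy rule: WILL allows a verb as complement).
sink_rules: List[Rule] = [
    (frozenset({"previous word is WILL", "SINK is a verb"}), "SINK is the complement of WILL"),
]
print(most_relevant(["SINK is a noun", "SINK is a verb"], {"previous word is WILL"}, sink_rules))
# SINK is a verb
```

Counting inferences is only a crude stand-in for relevance; ties are resolved by `max` in favour of the first candidate listed, which a fuller account would need to replace.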

These remarks about processing are obviously programmatic and tentative, and certainly don't constitute anything

approaching a theory of how we understand speech (less still, of how we produce it). I must emphasize in particular what I

said earlier about the Best Fit Principle: it is anything but clear how any system can apply this principle as efficiently as the

human mind seems to. Moreover, as John Fletcher has pointed out to me, it seems clear that there is no one way of

processing linguistic input which we all apply all of the time - e.g. some people (professional linguists, actors, etc.)

sometimes pay more attention to linguistic form than is normal for other people. And it is certain that we process speech

differently from writing, for a large number of very well-known reasons.

As I have already pointed out, at the heart of the dispute about modularity is a disagreement about how language and

linguistic behaviour can be explained. Since modularity emphasizes the discontinuities between language and

non-language, all explanations must be in terms of principles which are stipulated specifically for language. The

non-modular view is more optimistic. Those of us who take this position hope that it will be possible to analyse all the

properties of language as examples of more general principles and processes applied under the particular circumstances

of language. In this view, nothing needs to be stipulated for language, so the total theory of cognition is more

parsimonious, and more explanatory. Of course it remains to be seen which of these approaches is right - or indeed,

whether either of them is right, given that one likely outcome of the debate is a compromise position in which some

properties of language are unique, but others are not.

4.3 WORDS AS ACTIONS

One commonplace of linguistics is that speech is primary. Another is that words are actions, rather than static 'things'. It

follows that when we discuss words, we are in the first instance concerned with spoken words, rather than with written

ones, which are permanent marks on a page rather than 'actions' (in any normal sense of this word). It is clear that an

uttered word is an action - it takes place in time, is performed by some person, requires physical movement (of the vocal

organs), etc. However it is equally clear that this fact must be part of our knowledge about words, so if some object was

not an action we would not call it a word. Moreover we know about particular word-types what kind of action they are - so

the word-type CAT is an action whose first part is an instance of /k/ (which in turn is the name of a kind of action, defined

phonetically). In other words, we know that word-types are particular kinds of action just as we know that running, walking,

skipping and hopping are types of action.

Various consequences follow from these rather mundane observations. One is that if the concept 'word' is a sub-case of

the more general concept 'action', then they must be related in an isa hierarchy in which 'action' dominates 'word'. Now we

have already seen 'word' at the top of one hierarchy (see figure 3.1), the hierarchy of word-types which

included concepts such as 'noun', 'common' and 'CAT'. It seems impossible to avoid the conclusion that this hierarchy

must be part of the larger hierarchy in which 'word' is dominated by 'action'. (We shall see shortly that there are further

concepts in between 'word' and 'action', and others above 'action'; for the present we can ignore these other concepts.)

But if that is the case, grammar itself is formally a part of a much larger conceptual structure, and all word-type concepts

are particular cases of concepts that have nothing inherently to do with language (such as 'action').

One interesting consequence of this conclusion is that words must inherit the properties of the more general concepts

above them in the hierarchy. For example, if we know that the typical action has an actor, then it follows that the same is

true of every word-type, from the most general, 'word', to particular lexical items like CAT. The actor of a word is clearly

another way of referring to the speaker, so the notion 'speaker of X', where X is some word-type, is now available for use

in facts about X. The same does not seem to be true of any other theory of language structure, but it is in fact an essential

ingredient of any adequate theory, as we shall see below.
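The inheritance step can be pictured with a minimal Python sketch. It assumes a toy representation (the `Concept` class and its `isa` and `props` fields are hypothetical, not WG notation) in which each concept has a single isa parent and a property is found by climbing the hierarchy until some concept states it. Because 'word' sits below 'action', CAT inherits the fact that it has an actor without that fact being stated anywhere in the grammar.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Concept:
    """A node in a toy isa hierarchy (hypothetical encoding, not WG notation)."""
    name: str
    isa: Optional["Concept"] = None            # single isa parent, a simplification
    props: dict = field(default_factory=dict)  # facts stated at this node

    def get(self, attr):
        """Return attr from the nearest node at or above this one that states it."""
        node = self
        while node is not None:
            if attr in node.props:
                return node.props[attr]
            node = node.isa
        return None


# 'action' is a non-linguistic concept: the typical action has an actor.
action = Concept("action", props={"has_actor": True})
# The actor of a word is its speaker (stated once, at 'word').
word = Concept("word", isa=action, props={"actor_role": "speaker"})
noun = Concept("noun", isa=word)
common = Concept("common", isa=noun)
cat = Concept("CAT", isa=common, props={"structure": "/kat/"})

print(cat.get("has_actor"), cat.get("actor_role"), cat.get("structure"))
# True speaker /kat/
```

Real WG networks allow multiple inheritance and overriding of defaults; the single-parent lookup here only illustrates the direction in which properties flow.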

Returning to the position of the word-type hierarchy below 'action', we seem to have some support for the general claim

of WG, which is that language is just a special case of more general conceptual structures. If a language consisted of

nothing but word-types, then someone who wanted to preserve the boundaries round language could at least say that it is

formally identifiable, in this view of the structure of knowledge, as an isa hierarchy whose top is the concept 'word'.

However, this will not do because it takes no account of the many relational concepts which form part of any grammar,

and which we shall discuss in chapter 5 - notions like 'subject', 'structure', 'referent' and so on. A relation is not an instance

of an action, but of a type of relation, so relational concepts of language must be part of a different hierarchy from the

word-types. The next chapter will explore this hierarchy, but for the present it is enough to agree that the notion 'subject' is

part of a grammar, but that it is not a sub-case of 'action'. If this is so, then we can conclude that different parts of the

grammar are in different more general hierarchies - which means that, formally speaking, there is no single sub-network

which could be referred to as 'language'. If we want to identify a language, or the general notion 'language', then it must

be a set consisting of the hierarchy dominated by 'word', plus one or more hierarchies of relational concepts.

Let us now return to the consequences of being able to refer to relational concepts such as the 'actor' (i.e. speaker) of

a word. What other such concepts can we inherit from 'action'? One is the concept 'time', because an action takes place in

time. Another is 'place', on the assumption that the typical action happens in a particular place. A third is 'purpose',

because an action (unlike a simple 'event') is purposeful. And a fourth is 'structure', because an action has an internal

structure which changes through time. We shall now review reasons why every one of these inherited properties must be

referred to in the grammar. Let us start with the notions 'structure' and 'purpose'. The structure of a (spoken) word is its

phonological and/or morphological composition; so the structure of CAT is /kat/. Its purpose is its (typical) illocutionary

force; so arguably the purpose of an imperative verb is the event referred to by the verb (Hudson 1984: 189), and when I

say Come in! this refers to the event 'you will come in' and my purpose is precisely that this event should happen. If this

analysis is correct, then the grammar must be able to refer to the notion 'purpose of X', where X is a word-type.

The other notions, 'place' and 'time', are both essential for deictic semantics, as is the notion 'actor' introduced earlier.

The time and place of a word are the time and place at which the word occurs - i.e. at which it is uttered. Now that we can

refer to these notions directly in the grammar, deictic categories are extremely easy to treat because their referents can

be identified with the values of the relational variables - the time or place of utterance. For example, 'time of NOW' is the

name of whatever the time is when this word is uttered - which is of course precisely the same as the referent of NOW, so

this can simply be identified as 'time of NOW'. The fact concerned is given in [13].

[13] referent of NOW = time of it.

Suppose some word-token is called w5, and it is an instance of NOW; and suppose the time of w5 is 12.20 on

12 December 1989. By the normal rules of derivation given in chapter 3, it follows that the referent of w5 is also 12.20 on

12 December 1989. Similar remarks apply to the meanings of the words HERE and ME, whose referents are respectively

their place and their speaker.
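The derivation for w5 can be mimicked with a small Python sketch. The dictionary representation of a token, the rule table `DEICTIC_RULES` and the function `referent` are hypothetical illustrations; the place and speaker values are invented purely for the example. Each rule simply identifies the referent with one of the token's own relational properties, as [13] does for NOW.

```python
from datetime import datetime

# Hypothetical rule table: each deictic word-type computes its referent from
# a property of the token itself.
# [13] referent of NOW = time of it; likewise HERE -> place, ME -> speaker.
DEICTIC_RULES = {
    "NOW": lambda token: token["time"],
    "HERE": lambda token: token["place"],
    "ME": lambda token: token["speaker"],
}


def referent(token):
    """Derive a token's referent by applying the rule for its word-type."""
    return DEICTIC_RULES[token["type"]](token)


w5 = {
    "type": "NOW",
    "time": datetime(1989, 12, 12, 12, 20),
    "place": "London",   # illustrative value only
    "speaker": "Fred",   # illustrative value only
}
print(referent(w5))  # 1989-12-12 12:20:00
```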

Another use for notions like 'time' and 'actor' is in stating facts of a sociolinguistic type (Hudson 1980c, 1986b, 1987b).

Some greetings are time-bound - e.g. GOOD MORNING is used in the morning - and some word-types are used only

when the social relations between the speaker and addressee (a notion we shall derive below) satisfy certain conditions - e.g. French TU is used only to refer to an addressee who is relatively close to the speaker, or subordinate. These

restrictions can be stored as facts about the (typical) use of the word-types concerned, and this knowledge can be

exploited in the usual way by the inference mechanism - e.g. if A calls B tu, then B can work out that in A's view, B is

either subordinate to A or close to A.

A third role that the notion 'time' can play in the grammar is in formalizing word-order rules, which are in effect

restrictions on the relative times of co-occurring words. Take a simple rule like [14], for instance.

[14] pre-dependent of word precedes it.

This can be taken as an abbreviation for a fact which refers explicitly to the times of the words concerned:

[15] time of pre-dependent of word precedes time of it.

It is common in work on the semantics of time to represent times by numbers which increase as time passes, which

allows the relation 'precedes' to be replaced by the simpler notion 'is less than': $\mathrm{time}_i$ precedes $\mathrm{time}_j$ if $i < j$. WG uses a

similar convention in naming word-tokens, calling each one 'word N', where N increases from the beginning to the end of

the sentence. If we follow the simple convention of identifying the number which names the word's time with the one in the

word's own name, it becomes very easy to test, in a principled way, which of two words precedes the other, by comparing

their names.
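The naming convention can be illustrated in Python. The helper names `time_of`, `precedes` and `satisfies_rule_15` are hypothetical, as is the toy dependency table; the only assumption carried over from the text is that the number in a token's name doubles as its time, so rule [15] reduces to an integer comparison.

```python
def time_of(token_name: str) -> int:
    """Extract N from a token name of the form 'word N'; N doubles as its time."""
    return int(token_name.split()[1])


def precedes(a: str, b: str) -> bool:
    return time_of(a) < time_of(b)


# Hypothetical analysis of 'Fred drinks wine': word 1 is a pre-dependent of word 2.
pre_dependents = {"word 2": ["word 1"]}


def satisfies_rule_15(head: str) -> bool:
    """[15] time of pre-dependent of word precedes time of it."""
    return all(precedes(dep, head) for dep in pre_dependents.get(head, []))


print(satisfies_rule_15("word 2"))  # True
```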

But more importantly, the link between word-order and relative times is another example of the general claim that the

properties of grammar are particular cases of properties of knowledge in general. In this case, word-order rules turn out to

be particular cases of facts about the relative time of events, such as are found in discussions of tense.

Perhaps somewhat surprisingly, then, we have found some important consequences for the theory of grammar which

arise from the rather banal observations about words being actions with which we started.

4.4 ACTIONS AS WORDS

One objection which is sometimes raised to the general idea of a word-based grammar is that the notion 'word' is too

vague to play such a critical role in a theory. This criticism seems to rest on two premises: the lack of a clear definition of

the notion, and the existence of ambiguous cases.

The first premise is irrelevant to our present undertaking, because the notion 'definition' does not apply to any of the

concepts that we have invoked. Instead of offering a definition, we offer typical properties against which the properties of

any potential instance may be matched, bearing in mind the possibility that any property may in fact be overridden in an

exceptional case. In section 3.6 we rejected the Aristotelian view of categories as having necessary and sufficient

conditions which can be taken as their 'defining' characteristics. This principle applies to 'word' as much as to any other

concept, so there will be no attempt to define this concept. However the empirical content of the theory is not undermined

by this lack of definition, because I shall list some of the properties of typical words, the claim being that these properties

all typically apply to the same range of objects. This claim is testable (given a reasonable interpretation of 'typically').

The existence of ambiguous cases is no more worrying than the lack of a definition. For example, one might ask how

many words there are in the expression hard-wearing. The writing system reflects the difficulty of answering this question,

because the hyphen itself represents a link which is closer than that indicated by an ordinary word-space, but less close

than that indicated by the absence of either. Indeed, it is also permissible (so one dictionary tells us) to write hard wearing,

without a hyphen, and a different dictionary gives only hardwearing, without either of the other two options. In other words,

English speakers cannot decide how to write this expression, and presumably the reason for this is that in some respects

it behaves like a single word, and in others like two separate words. But this does not show that the concept 'word' is

either psychologically unreal or illegitimate in theoretical linguistics. All it shows is that like other concepts it has the

properties of a prototype - some instances of it are clearer than others. The only problem for the analyst is an excess of

options: since the analysis is so uncertain, it makes very little difference whether we take hard wearing as one word or

two, so we cannot be wrong either way.

What then are the properties of words? The typical word has at least the following properties (a fuller list is given in the

appendix to Hudson 1989a).

1 It has an internal structure consisting of a sequence of speech sounds, at least one of which is a vowel.

2 It is an instance of one of the 'major' word-types (noun, verb, ad-word, conjunction).

3 It has at least one 'companion' (a notion to be explained in the next section), and each companion is itself a word.

4 One of its companions is its head.

5 It has a meaning, consisting of a sense and a referent.

6 It has (i.e. 'belongs to') a language.

This list refers to other notions, e.g. 'speech sound' and 'language', which in turn have a collection of typical properties.

Some of these bring us back to the notion 'word', but this does not involve circularity because they each have other

properties too, which keep their content independent of that of 'word'.
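Matching against typical properties, rather than testing a definition, can be sketched in Python. This is a deliberately crude illustration: the property names, the `typicality` function and the simple proportion it returns are assumptions made here, not part of WG's Best Fit Principle. The point is only that a candidate lacking some properties still counts as a word, just a less 'good' one. The values for HELLO follow the discussion below (no referent, perhaps no companions, hence no head).

```python
# The six typical properties listed above, as named checks (hypothetical encoding).
TYPICAL_WORD_PROPERTIES = [
    "has_vowel_structure", "major_word_type", "has_companion",
    "has_head", "has_sense_and_referent", "has_language",
]


def typicality(candidate: dict) -> float:
    """Fraction of typical properties the candidate has.

    No single property is necessary: a candidate lacking some of them is
    still a possible word, just a less typical one.
    """
    matched = sum(1 for p in TYPICAL_WORD_PROPERTIES if candidate.get(p, False))
    return matched / len(TYPICAL_WORD_PROPERTIES)


cat = dict.fromkeys(TYPICAL_WORD_PROPERTIES, True)
hello = dict(cat, has_sense_and_referent=False, has_companion=False, has_head=False)
print(typicality(cat), typicality(hello))  # 1.0 0.5
```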

A word like the English CAT is an unproblematic, clear example of a word, but it is not hard to find words that are not

very 'good' words, in the sense that they lack some of these properties. For instance, HELLO has no referent, and

perhaps no companion words (unless one treats oh as a companion in Oh, hello!). One of the most interesting cases is

the English transitive verb GO/noise, as in (3), which we discussed in section 3.2 (see also Hudson 1985).

(3) The train whistle goes [speaker hoots].

Younger speakers of British English seem increasingly to use GO transitively with reported speech, an innovation which

was noted some time ago in the USA (Partee 1973, Schourupp 1982):

(4) He went 'What do you mean?'.

However for most older speakers I think the rule is that transitive GO can be followed only by a sound, made by the

speaker, which is not a word. For words, of course, the verb to use is SAY. Since GO has a special meaning when it is

followed by a sound, there must be a distinct concept with these two properties, which in other respects (e.g. for

morphology) is an instance of GO. This is what we call 'GO/noise'.

What is interesting about GO/noise is that it has a companion which is specifically not a word. This is not only atypical

for words, but deeply problematic for any theory of grammar which relies on there being a clear boundary round language,

such that linguistic objects can combine with each other but not with anything outside language. It is very hard to see how

most of the alternatives to WG could accommodate sentences like (3), or the 'subcategorization' facts about GO/noise.

The WG analysis for GO/noise is quite straightforward. We assume first that the noise is a complement of the verb, and

secondly that the verb's meaning is defined as a kind of action which is the same as the noise; thus the meaning of went

in He went [wolf-whistle] is 'the action of doing a wolf-whistle at some time in the past'. Here are some of the relevant

rules, which should be self-explanatory although they refer to some ideas which we have not yet touched upon.

[16a] GO/noise isa GO.

[16b] GO/noise has [1-1] complement.

[16c] NOT: type of complement of GO/noise = word.

[16d] NOT: sense of GO/noise = movement.

[16e] sense of GO/noise = type of complement of it.
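Rules [16b] to [16e] can be read as checks on a token's complements, as in the following Python sketch. The `Token` class, its `is_word` flag and the function `go_noise_sense` are hypothetical; [16a] (inheritance of morphology from GO) is not modelled. The sketch shows the feature that makes GO/noise awkward for a bounded view of language: the subcategorization check must refer to something that is not a word.

```python
from dataclasses import dataclass


@dataclass
class Token:
    form: str
    is_word: bool  # False for a noise such as a wolf-whistle


def go_noise_sense(complements: list) -> str:
    """Check a GO/noise token against [16b]-[16e] and return its sense.

    [16b] exactly one complement; [16c] the complement is not a word;
    [16d]/[16e] the sense is not 'movement' but the type of the complement.
    """
    if len(complements) != 1:
        raise ValueError("GO/noise requires exactly one complement")
    (comp,) = complements
    if comp.is_word:
        raise ValueError("the complement of GO/noise must not be a word")
    return f"action of type {comp.form}"  # the noise defines the verb's meaning


print(go_noise_sense([Token("[wolf-whistle]", is_word=False)]))
# action of type [wolf-whistle]
```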

One theory which does have an explicit place for such sentences is Langacker's Cognitive Grammar, so it is interesting

to compare the analysis given here with the one in Langacker (1987: 61, 80). According to Langacker's analysis, the noise

has a meaning, namely itself, in contrast with our analysis in which it has no meaning, but defines the meaning of

GO/noise. One objection to Langacker's treatment is that it implies that some noises that can be used after GO/noise do

have a 'meaning' in other contexts - e.g. a wolf-whistle is a kind of mating call (or some such notion). What happens to this

meaning if the wolf-whistle means itself? Another objection is that if the noise has a meaning, then this ought to be

available for ordinary anaphoric pronouns (e.g. IT), but we cannot say things like He went [sound of car starting up], *and

then he went it again.

Another suggestion for analysing GO/noise is in Partee (1973). According to this analysis, He went [noise] is the surface

structure corresponding to a deeper structure He went like this: [noise], where [noise] is not part of the sentence any more

than a gesture would be. The obvious objection to this suggestion is that it misses the main problem, namely how to state

the subcategorization facts about GO/noise. The fact is that the complement of GO/noise is obligatory, so the grammar - more specifically the part of the grammar which is responsible for the various properties of GO/noise - must say not only

that this verb requires a complement, but also what kind of complement it requires. And the problem is that this statement

will necessarily refer to non-linguistic concepts such as 'noise' or 'action' - and certainly to the non-linguistic concept 'not a

word'.

The conclusion of this discussion is not that the noises after GO/noise are a kind of untypical word but rather that

GO/noise itself is an untypical word. It is untypical in that it combines, in a rule-governed way, with non-words, whereas

the typical word combines only with other words. We shall discuss another construction in which words and non-words

combine in section 11.8, where we shall again see that the boundaries between language and non-language are much

more permeable than is generally assumed.

4.5 COMPANIONS

I said above that the typical word has one or more 'companions', each of which is itself a word. As may be guessed,

'companion' is a relational concept, and as the ordinary English word COMPANION suggests, it is a significant relation

between co-occurring elements of similar type. X's companion on a journey is another person who is not just going in the

same direction as X, in the same vehicle as X, but who is also linked to X by other bonds - friendship, common goals, or

whatever. In the same way we can distinguish between two kinds of relations between co-occurring words. Take a simple

example like tall, very thin, men. There are three pairs of words here which are significantly related: {tall, men}, {thin, men}

and {very, thin}. The significance of these relations comes from the fact that each relation is controlled, and permitted, by

some fact in the grammar. The members of each of these pairs are companions of one another. In contrast the relation

between tall and very is not an instance of a relation mentioned in the grammar, so it is not significant and does not count

as a companion relation.
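The distinction between significant and merely co-occurring pairs in tall, very thin, men can be made concrete in Python. The set `licensed` stands in for the facts of the grammar and is an illustrative assumption; the function name `companions` is likewise hypothetical.

```python
from itertools import combinations

words = ["tall", "very", "thin", "men"]

# Pairs licensed by some fact in the grammar (illustrative encoding),
# stored as unordered pairs.
licensed = {frozenset(p) for p in [("tall", "men"), ("thin", "men"), ("very", "thin")]}


def companions(w):
    """Words linked to w by a licensed (significant) relation."""
    return sorted(x for x in words if frozenset((w, x)) in licensed)


# Co-occurring pairs with no licensing fact: not companions.
unrelated = [p for p in combinations(words, 2) if frozenset(p) not in licensed]
print(companions("men"))  # ['tall', 'thin']
print(unrelated)          # [('tall', 'very'), ('tall', 'thin'), ('very', 'men')]
```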

We shall develop the notion 'companion' in chapter 5. The question to be addressed here is about the connection

between the companion relations that are found among words and relations that are found outside language. What I shall

try to show, in a very informal way, is that there are examples of companion relations outside language as well. The

obvious place to look for such relations is in cases to which we would apply words like COMPANION or ACCOMPANY.

For example, consider the meaning of (5).

(5) Fred accompanied Mary.

If we say (5) we must be referring to two distinct but linked actions: Fred going somewhere, and Mary going to the same

place. We could not use this sentence to refer to anything other than actions; for example if Fred and Mary shared the

same taste in music, or were sitting together, we could not express this linkage by means of (5). The semantic structure

for (5) must therefore distinguish two distinct activities, each with its own actor, and must define the relation between

these two actions along the following lines: 'There was an action A1 done by Fred and another action A2 done by Mary

such that A1 "accompanied" A2.' What is slightly odd about this paraphrase is the use of ACCOMPANY for events rather

than for the people involved in the events, but a precedent exists in sentences like The collapse of the podium was

accompanied by laughter. This is how we shall use the term 'companion', as a technical term.

Suppose, then, that we classify the relation between linked actions of the kind described in (5) as 'companion', so that

each action is said to be a companion of the other. There are some similarities between these linked actions and linked

words, notably the fact that their co-occurrence is not just coincidental, but purposeful and controlled (e.g. we may

assume cultural restrictions on who should accompany who). In addition there is the fact that in (5) both actions are

closely linked in time, and also the fact that the linked actions are of the same type (e.g. if Mary travelled by car, so did

Fred). Indeed, (5) can only be used to refer to a limited range of action-types: journeying, or making music. However the

differences are also striking: linked words have the same actor, but linked actions describable by (5) have distinct actors;

and linked words necessarily occur at different times, whereas the actions in (5) must occur simultaneously.

In order to find a closer parallel with word-word links, we must look at a different kind of non-linguistic situation, where

one person carries out a sequence of actions. Consider the overall activity of making a phone call. This can be described

in terms of a series of steps, each carried out by the same person:

1 lift the receiver; 2 dial the number; 3 speak; 4 replace the receiver.

It seems reasonable to see the third step, speaking, as the main one, because this is the purpose of the whole activity.

This step is linked by rules and purposes to each of the other steps, including step 1, although this is in fact separated

from it by step 2. Technology apart, the ordering of steps 1 and 2 is free, as neither is a logical prerequisite for the other - it is possible to imagine a telephone system in which you dial before picking up the receiver (or indeed, in which there is

no receiver to pick up, but a fixed microphone).

There are similarities between the linkage of events in the telephone scene and the links that we noted in connection

with sentence (5) - close connection in time, a unifying purpose, and the fact that at least one element in each action must

be the same (the direction of the journey in the case of 'accompany', the actor in the telephone case). These similarities

are sufficient to justify bringing them together under the common term 'companion'; so this term now applies not only to

the relation between the two actions implied by ACCOMPANY but also to the relation between speaking on the telephone

and picking up the receiver, and the other links in the telephone scene.

The relevance of the telephone example lies in the fact that its structure is rather similar to the structure of a sentence

like (6).

(6) Fred never drinks wine.

Here too we can identify four actions, each of which is a word, and one of these actions stands out as the 'main' one,

namely drinks. (We shall see below that a sentence has a single word as its 'root', and that this word is normally a finite

verb.) Each of the other actions is related directly to the main one - this time by a grammatical rule - and the similarity

even extends to the fact that there are two actions before the main one and one after. Moreover, the ordering of the two

subordinate actions before the main one is not free, though in principle it could have been. The ordering is fixed in the

telephone case by a (logically arbitrary) decision about how to build telephone hardware, while in the sentence it is fixed

by an equally arbitrary rule of English grammar which prevents NEVER from immediately preceding the subject (contrast

*Never Fred drinks wine with Sometimes Fred drinks wine).
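The shared structure of the telephone scene and sentence (6) can be sketched in Python: one main element, the others linked directly to it, two before and one after, plus an arbitrary ordering restriction. The list encoding, the use of positions as times and the function `violates_never_rule` are illustrative assumptions, not a statement of the grammar rule itself.

```python
# Hypothetical encoding of (6) 'Fred never drinks wine'. Positions double as
# times, and every other word is treated as a companion of the root.
sentence = ["Fred", "never", "drinks", "wine"]
root = sentence.index("drinks")
companions_of_root = [i for i in range(len(sentence)) if i != root]

before = [sentence[i] for i in companions_of_root if i < root]
after = [sentence[i] for i in companions_of_root if i > root]


def violates_never_rule(words, subject_index):
    """Illustrative check: NEVER may not immediately precede the subject."""
    return subject_index > 0 and words[subject_index - 1].lower() == "never"


print(before, after)  # ['Fred', 'never'] ['wine']
print(violates_never_rule(sentence, 0))                                 # False
print(violates_never_rule(["Never", "Fred", "drinks", "wine"], 1))      # True
print(violates_never_rule(["Sometimes", "Fred", "drinks", "wine"], 1))  # False
```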

The similarities between the two cases are not limited to their abstract structures. The sentence is like telephoning in

that all the actions have the same actor (i.e. the same speaker), but it is also like the case where Fred accompanies Mary

because the linked actions have to be of the same type (namely, words). These similarities justify a further extension of

the relational category 'companion' to cover the relations between pairs of words in a sentence as well as the other two

kinds of case. We shall see in the next chapter that this category, when applied to language, covers quite a wide range of

syntagmatic relations, of which the most important is the relation of dependency, but for the present it is enough to have

established a connection between word-word relations and relations that involve actions of completely different types.

To the extent that this chain of arguments is successful, it leads to a rather surprising conclusion: that so-called

grammatical relations are in fact a sub-case of semantic relations. This conclusion rests on an assumption that we have

not yet laid out, namely that grammatical relations like 'subject' are a sub-case of dependency, which we have just seen to

be a sub-case of 'companion'. Now it should be clear that 'companion' will be needed in the semantic analysis of

sentences like (5), Fred accompanied Mary, as well as in the conceptual structure for the concept 'make a phone call' to

which the verb TELEPHONE is related. Therefore grammatical relations are a sub-case of a relation which is needed in

semantic analysis, and not only in semantic analysis but in general conceptual analysis of scenes such as making a

phone call. Once again, then, we find an intimate connection between linguistic and non-linguistic knowledge.
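The chain of reasoning (subject isa dependency, dependency isa companion) amounts to a transitive isa lookup over relational concepts, which the following Python sketch illustrates. The table `RELATION_ISA` is a simplified, single-parent assumption; in particular, placing 'companion' directly under 'relation' is an assumption made for the example.

```python
# Isa links among relational concepts (simplified, single-parent encoding).
RELATION_ISA = {
    "subject": "dependency",
    "dependency": "companion",
    "companion": "relation",  # assumed for illustration
}


def isa(concept, ancestor):
    """True if concept is ancestor or lies below it in the isa chain."""
    while concept is not None:
        if concept == ancestor:
            return True
        concept = RELATION_ISA.get(concept)
    return False


print(isa("subject", "companion"))  # True
```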

The comparison that we have just made between grammatical relations and extra-linguistic relations is similar in spirit to

the one in Lakoff (1987: 283), which has been developed by Deane (1989). The main difference is that Lakoff and Deane

assume a constituent-structure analysis of sentence structure, so they emphasize the similarities between sentence

structure and other part-whole structures, whereas I shall argue against constituency analysis. The general conclusion is

the same, however: grammatical relations can be taken as particular cases of more general types of relations, and some

of their detailed properties can be explained in these terms.

4.6 GRAMMARS AND ENCYCLOPEDIAS

If there were a boundary between linguistic and non-linguistic knowledge, then it would divide the notion 'meaning' into

two parts, one linguistic, the other non-linguistic. The assumption that such a division does indeed exist is very deeply

embedded into the tradition of linguistics, to the extent that many linguists find it hard to imagine any alternative (though to

be sure a few linguists have questioned the tradition - notably Bolinger 1965, Chafe 1971, Haiman 1980, Jackendoff 1983,

Langacker 1987, Lakoff 1987, Taylor 1989). For example, Emmorey and Fromkin (1988) describe the existence of this

distinction as 'unquestionable'. It is interesting to speculate about the reasons for this belief - for instance, it is quite

possible that some of its roots lie in the distinction that publishers used to make between dictionaries and encyclopedias

(Hudson 1989a). However it seems unlikely that the belief in this distinction could have arisen out of rational observation

of linguistic facts, because everyone concerned seems to agree that the boundary is extremely hard to locate in particular

cases.

Two different distinctions seem to be involved in this debate. One is the distinction between analytic and synthetic

truths - between inferences that necessarily follow from a sentence and those that probably follow, but need not. The

other is the contrast between definitional and incidental properties. Traditionally these questions have tended to be

identified, because definitional properties lead to analytic entailments, but they must be separate because at least some

linguists are willing to accept that the analytical entailments of a sentence need not provide definitions for the words in it

(Sperber and Wilson 1986). In this view, perhaps the only analytical property of a word like giraffe is 'animal of a certain

species', as all other properties may be negated, given a suitable context; but 'animal of a certain species' is clearly not a

definition of giraffe, as it does not distinguish giraffes from other animals.

This example is useful, because it illustrates a general property of the base-line in the debate. In general, if one person

claims that some word analytically entails some property P, someone else manages to construct a context in which the

word can be used correctly without entailing P. However there is often a core of properties which remain after this

process, such as 'animal of a certain species' in the case of giraffe. It seems that these properties are all related to the

position of the word's sense in the conceptual isa hierarchy: if the typical A isa B, then it is very hard to imagine an

instance of A which is not also an instance of B. We have already noticed this fact, and have suggested an explanation for

it in terms of the Best Fit Principle plus the principles of inheritance (see section 3.6).
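The asymmetry between overridable properties and a concept's place in the isa hierarchy can be sketched in Python. Everything here is an illustrative assumption: the dictionaries, the default properties for 'giraffe', the intermediate node 'thing', and the function `instance_of_giraffe`. Any stored default may be overridden by a particular case, but the isa links are structural, so an exceptional giraffe is still an animal.

```python
# Illustrative isa links and default properties for 'giraffe' (assumed values).
ISA = {"giraffe": "animal", "animal": "thing", "thing": "entity"}
GIRAFFE_DEFAULTS = {"neck": "long", "coat": "patterned", "habitat": "savannah"}


def ancestors(concept):
    """Follow isa links upwards; these links are not overridable."""
    chain = []
    while concept in ISA:
        concept = ISA[concept]
        chain.append(concept)
    return chain


def instance_of_giraffe(overrides):
    """Inherit the defaults, letting the particular case override any of them."""
    props = {**GIRAFFE_DEFAULTS, **overrides}
    return props, ancestors("giraffe")


# An exceptional giraffe: two defaults overridden, yet still below 'animal'.
props, chain = instance_of_giraffe({"coat": "white", "habitat": "zoo"})
print(props["coat"], chain)  # white ['animal', 'thing', 'entity']
```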

My general conclusion, then, is that there is in fact no boundary between 'strictly linguistic' meaning and the information

that we have about the concepts which words take as their meanings (their senses). Thus the sense of GIRAFFE is the

concept 'giraffe' - the same concept that we use in understanding our non-linguistic experience of giraffes - and in

principle all the properties of 'giraffe' are equally accessible when we hear or speak the word giraffe, though for the

reasons just given some of them may be more important, or more dependable, than others. I should like to stress that in

this view the sense of a word is simply a name, an atom, and not a 'frame' with an internal structure. This means that it

makes no difference how many properties the sense has - it takes no more memory space to hold the sense of a word like

BABY, about which we know a great deal, than that of a word like QUASAR, about which most of us know very little. Nor

is there any problem of circularity such as Langacker discusses (1987: 164) on the assumption that the concepts 'cat' and

'mouse' refer to one another, and that each concept is the sum total of all its properties.

An interesting consequence of the totally integrated view of our knowledge which I am proposing is that all concepts are

equally available as potential senses for words. One particular kind of concept is the kind found inside the grammar itself,

such as 'noun', 'subject' and 'CAT', which are of course used as the senses for metalinguistic terminology (Hudson

1989c). Thus the sense for NOUN is 'noun', and so on. This is interesting because it underlines the futility of trying to find

the boundary between 'the encyclopedia' and 'the grammar'. As we have already seen, to the extent that the grammar can

be identified it is a number of distinct segments of isa hierarchies (notably the hierarchy for word-types and the one for

grammatical relations). But we can now see that this collection of cognitive structures must be part of the 'encyclopedia', if

this is the repository for the information that we use in understanding words. But the whole point of the traditional

distinction between 'dictionary' meaning and 'encyclopedic' information was precisely to reinforce the supposed boundary

between the grammar (including the dictionary) and the rest of our knowledge; so our conclusion brings all this work to

nothing.

Lastly it is important to distinguish the position put forward here from that of Fodor and his followers (e.g. Fodor et al.

1980, Sperber and Wilson 1986), according to which the meaning of a word is distinct from the encyclopedic information

about it, but is also virtually empty. (As we saw above, the analytic content of a word consists of nothing but the place of

its sense in the isa hierarchy.) In particular, Fodor denies that any semantic decomposition of word meanings is

necessary, so the meaning of a word may be represented as a single unanalysable element; thus the meaning of giraffe is

'giraffe'.

This is similar to the WG view in that in both cases the word corresponds to just one 'sense' - i.e. the proposition which

links a lexical item to its meaning merely names the sense, rather than spelling out a 'content' for the sense in terms of

real-world properties. However it is important to stress that in WG a great deal of semantic information may need to be

referred to in the grammar, and that this information does involve semantic decomposition in the sense of an explicit

listing of properties of the sense.

To take a simple example, how else could one explain the structure of sentence (7)?

(7) Last Christmas, they put it in the cellar till the summer.

This is problematic for a non-compositional approach, because the meaning of put involves both an action ('they did X')

and a resulting state (the result of X = 'it was in the cellar'). The first time expression, last Christmas, defines the time of

the action, but the second one, till the summer, defines the length of the state. Semantic decomposition is needed in order

to provide a distinct element for each of these expressions to relate to, because these two kinds of time expression cannot

both relate to the same element. (Compare e.g. *Last Christmas they found it till the summer, and *Last Christmas they

stayed there till the summer.) We shall discuss semantic structures like these in more detail in section 7.6.
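The need for two distinct elements in the meaning of put can be shown with a small Python sketch. The `Action` and `State` classes and the routing function `attach` are hypothetical; in particular, sending any expression that starts with "till " to the resulting state is a crude stand-in for whatever the grammar actually says about duration adverbials. What the sketch makes visible is that the two time expressions need two different slots to fill.

```python
from dataclasses import dataclass


@dataclass
class State:
    description: str
    duration: str = ""  # filled by 'till ...' expressions


@dataclass
class Action:
    description: str
    result: State
    time: str = ""      # filled by point-time expressions such as 'last Christmas'


def attach(expr: str, action: Action) -> None:
    """Route a time expression to the element it can relate to (crude rule)."""
    if expr.startswith("till "):
        action.result.duration = expr  # durations relate to the resulting state
    else:
        action.time = expr             # point times locate the action itself


put = Action("they did X", result=State("it was in the cellar"))
attach("last Christmas", put)
attach("till the summer", put)
print(put.time, "|", put.result.duration)  # last Christmas | till the summer
```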

In conclusion, then, we have seen a number of serious problems that would arise if we tried to make a strict

distinction between 'linguistic' and 'encyclopedic' knowledge - not least of which is the problem of actually drawing the line

in particular cases. I know of no compensating benefits that are conferred by making this distinction.

4.7 TOWARDS A CONCEPTUAL HIERARCHY

According to WG, then, there is no boundary between linguistic and non-linguistic concepts, regardless of whether the linguistic concepts concerned are internal to the grammar (syntactic word-types or relations) or at the interface between linguistic and non-linguistic meaning. This conclusion has radical consequences for empirical research on language, because any concept referred to in the grammar will inherit properties from higher-level concepts which are clearly non-linguistic. We have already seen how this makes notions like 'actor' (i.e. 'speaker') and 'time' available in the grammar, but the point applies much more widely. At its most general, it means that any linguistic research must rest on an explicit set of assumptions about how at least the highest levels of conceptual structure are organized. This is obviously a source of difficulty for the practising linguist, and the best I can do at present is to guess at a reasonably plausible and coherent structure, with very little certainty that it is right, and indeed with very unclear ideas about how it could be tested for truth, or how I would justify it in comparison with the many other conceptual schemas that have been developed over the ages (going back at least to the eighteenth century).

In this section I shall lay out a system of concepts that seems compatible at least with the linguistic facts that I know of, and (at a lay level) with what I know of some non-linguistic concepts as well. For each concept I shall provide a brief and informal description of its 'content', i.e. of the properties that it has, and especially of those which are inherited by linguistic concepts that are instances of it. We start with a map of the territory, namely Fig 4.1.

Entity

This is the most general concept of all, and it is almost devoid of content. It does have one property, however, namely that of being in the mind of a particular person. For most purposes we can ignore this fact, because we are trying to model the knowledge of a single person, so the 'owner', or 'knower', stays constant. Let us call this person 'ego', following the practice of anthropologists. The property becomes highly relevant, however, if we want to model ego's model of someone else's knowledge, because this can be distinguished from ego's own knowledge in terms of who is its 'knower'.

FIGURE 4.1 The concepts above the main linguistic concepts (diagram not reproduced; its nodes are: entity, person, thing, relation, situation, theme, event, companion, action, dependent, set, communication, speech, word)

Let us assume that we can develop a system of knowledge representation in which any given concept may be relativized to a specified person, its 'knower', and that any concept which is not relativized in this way belongs to ego. This system should provide a basis for the analysis of propositional-attitude verbs such as KNOW, whose subject defines the knower for the situation defined by its complement. We shall also apply it more generally in the semantic analysis of mood, definiteness and some quantifiers (Hudson 1984: 180ff; see e.g. section 7.2 below). For example, the determiner SOME, as in Some linguist will solve this problem one day, indicates that the referent of the common-noun is known neither to the speaker nor to the addressee. This can be shown by suitable propositions saying that neither the speaker nor the addressee is the 'knower' of this referent.
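
The knower mechanism can be pictured as a default: a concept may carry a knower, and any concept without one belongs to ego. The Python sketch below is a toy illustration under that assumption only. The names `Concept`, `know` and `some_constraints`, and the tuple format of the propositions, are hypothetical and are not WG notation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

EGO = "ego"  # the person whose knowledge is being modelled

@dataclass(frozen=True)
class Concept:
    name: str
    knower: Optional[str] = None  # None: not relativized to any person

    def owner(self) -> str:
        # A concept that is not relativized to a knower belongs to ego.
        return self.knower if self.knower is not None else EGO

def know(subject: str, complement: Concept) -> Concept:
    # KNOW: the subject defines the knower of the situation defined by the
    # complement. A new concept is returned; the original is left untouched.
    return Concept(complement.name, knower=subject)

def some_constraints(referent: str, speaker: str,
                     addressee: str) -> List[Tuple[str, str, str]]:
    # SOME: propositions saying that neither the speaker nor the
    # addressee is the knower of the referent.
    return [("not-knower", speaker, referent),
            ("not-knower", addressee, referent)]

rain = Concept("it is raining")
print(rain.owner())                # ego
print(know("Fred", rain).owner())  # Fred
```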

It is worth explaining why the top-most node in the hierarchy is 'entity' rather than 'concept', as one might have expected given that the hierarchy is a 'conceptual' one. The reason has to do with inheritance. Suppose we have the sentence Fred ate an apple. Since both Fred and the apple are located in this hierarchy, they must both isa the top category. If this had been 'concept', we could have inferred that a concept ate a concept, which would surely be nonsense. In contrast, it is fine to infer that an entity ate an entity. This decision leaves 'concept' free to be used for a particular kind of entity, e.g. as the sense of the word CONCEPT. Moreover, we shall continue to use this term to refer to any category in our analysis, since this is a conceptual hierarchy. I hope this systematic ambiguity will not lead to any confusion.
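
The inheritance argument can be made concrete with a toy isa network. The fragment below is a hypothetical simplification of Fig 4.1: only 'entity', 'person' and 'thing' come from the text, while 'apple' and the instance labels are illustrative. The function `generalize` replaces each participant in Fred ate an apple with the top node it inherits from.

```python
from typing import Dict, Iterator

# Each node points to its immediate supertype (its "isa" link).
ISA: Dict[str, str] = {
    "person": "entity",
    "thing": "entity",
    "apple": "thing",
    "Fred": "person",    # an individual isa 'person'
    "apple-1": "apple",  # the particular apple that Fred ate
}

def ancestors(node: str) -> Iterator[str]:
    """Yield the supertypes of node, nearest first."""
    while node in ISA:
        node = ISA[node]
        yield node

def isa(node: str, category: str) -> bool:
    return node == category or category in ancestors(node)

def top(node: str) -> str:
    """The most general node that `node` inherits from."""
    result = node
    for result in ancestors(node):
        pass
    return result

def generalize(actor: str, patient: str) -> str:
    # Both participants isa the top node, so this inference is licensed.
    return f"'{top(actor)}' ate '{top(patient)}'"

print(generalize("Fred", "apple-1"))  # 'entity' ate 'entity'
```

Had the top node been named 'concept', the same walk would have licensed 'a concept ate a concept', which is the nonsensical inference the naming decision avoids.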

Person

Like most of the concepts immediately below 'entity' itself, the concept 'person' is only indirectly relevant to linguistic structures. It has two connections to them. One is that it is central to the classification system which is reflected in the meanings of words. For example, the English relative and interrogative pronoun system contains a special word used only to refer to humans (WHO), and there is no more general term which covers both humans and things (hence the need for who or what in some cases). These linguistic facts clearly conflict with what we learn from biology about humans being instances of the type 'animal'. As far as language is concerned, and presumably also as far as our folk taxonomy is concerned, humans are unique. It is interesting to speculate about how, and how far, we manage to integrate the scientific world-view into our conceptual structures.

The other connection between 'person' and language is that the participants in speech, the speaker and the addressee, are (typically) human, a fact which must be included in our model of knowledge. It seems self-evident that the concept 'human' is extremely rich in properties (bodily, emotional, social, etc.). Thus a great deal of information is inherited by any speaker or addressee.

Thing

There is even less to say about this concept than about 'person', except to point out that IT is used to refer to things. In contrast with the wealth of properties of the typical human, it is hard to think of any that might characterize the typical 'thing', except for the absence of human attributes. If there really are no shared properties to be inherited by things, it must follow that this concept is totally vacuous and should be removed. In that case all the concepts which would otherwise be immediately below it (such as 'situation' in our diagram) would take their place alongside 'person' etc. immediately below 'entity'. The meaning of IT would then be 'any entity which is not a person'.

Relation

The main property of a relation is, of course, that it is relational: it identifies one concept in relation to another. For example, 'subject of X' identifies one word in relation to another word, X. If this definition always picks out a unique concept, the relation is a 'function', in mathematical terms. This is true of many WG relations, but by no means all. For instance, more than one word may be describable as 'dependent of' some other word.

It should be noted that WG relational categories are truly relational, because they include the other relatum of the relation (i.e. 'X' in 'subject of X'). In this respect they contrast with the so-called functional categories of some other theories, in which the relation is defined but the other relatum is not. One thinks, for example, of the many 'functional' theories in which words or phrases are labelled simply 'subject' or 'object', and so on. The inclusion of the other relatum is important because it allows the identification to be made more precise without altering the relation itself. For instance, one could refer to the subject of some particular verb, or verb-type, simply by specifying the relatum more narrowly, using categories that are already available in the grammar. For example, the subject of a tensed verb could be distinguished from that of an infinitive as 'subject of tensed verb' versus 'subject of infinitive verb'. This facility greatly reduces the number of relational categories that need to be distinguished.
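
The two points about relations can be illustrated together: some relations are functions and some are not, and the relatum can be narrowed by category. The sketch below assumes a hypothetical, simplified analysis of Fred lets Mary eat apples, stored as (dependent, relation, head) triples. The labels 'object' and 'complement', and the category labels, are chosen for illustration only. This is not a full WG analysis.

```python
from typing import List, Set

# (dependent, relation, head); 'dependent' is the supertype of every relation.
LINKS = [
    ("Fred", "subject", "lets"),
    ("Mary", "object", "lets"),
    ("eat", "complement", "lets"),
    ("Mary", "subject", "eat"),
    ("apples", "object", "eat"),
]
CATEGORY = {"lets": "tensed verb", "eat": "infinitive verb"}

def related(relation: str, relatum: str) -> List[str]:
    """All words standing in `relation` to the word `relatum`."""
    return [dep for dep, rel, head in LINKS
            if head == relatum and relation in (rel, "dependent")]

def related_to_category(relation: str, category: str) -> Set[str]:
    """'<relation> of <category>': the relatum is narrowed by its category."""
    return {dep for dep, rel, head in LINKS
            if rel == relation and CATEGORY.get(head) == category}

print(related("subject", "lets"))    # ['Fred']  (unique: function-like)
print(related("dependent", "lets"))  # ['Fred', 'Mary', 'eat']  (not unique)
print(related_to_category("subject", "infinitive verb"))  # {'Mary'}
```

The relation 'subject' is never duplicated here. Only the relatum's description changes, which is what keeps the number of relational categories small.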

Set

A set has very special properties which distinguish it from other concepts, namely a membership, a membership-size (which is countable), and a Boolean relation (AND or OR), as explained in section 2.3. As far as language is concerned, three kinds of set need to be distinguished.

1 One is the kind represented most typically by plural nouns. For example, the sense of cats is a set, each of whose members is a cat. Such a set is homogeneous in that it has a 'set-model', and each of its members is an instance of the set-model. It is important to keep the notion 'instance of' clearly distinct from 'member of': each member is a member of the set but an instance of the set-model. It would be nonsense to say that it was an instance of the set, because a set has quite distinct properties from those of its members. For example, the set referred to by cats has the property of having a certain number of members, whereas each of its members has properties like having a tail and whiskers. We shall see that sets such as these are also needed for word-types other than nouns, notably for verbs.

2 Another kind of set is defined extensionally, by listing its members. This is the kind which is defined linguistically in coordinate structures. For instance, the expression Tom, Dick and Harry defines the set {&: Tom, Dick, Harry}. Such a set has no set-model, and need not be homogeneous.

3 The third type of set is lexicalized, and expressed by a 'collective noun' like FAMILY (or by a 'collective verb' such as SCATTER). Its members have more complex interrelations which are fixed by the properties of the collectivity concerned. The peculiarity of such sets is that they can also be treated as members of higher sets, so that FAMILY has a plural, families, which refers to a set of families.
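
The properties of sets, and the distinction between 'member of' and 'instance of', can be sketched as a small data type. This is an illustrative assumption rather than WG notation. `ConceptSet`, `INSTANCE_OF`, the `kind` field and the individual labels (cat-1, Ann, etc.) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, List, Optional

@dataclass
class ConceptSet:
    members: List[Any]
    boolean: str = "AND"         # the set's Boolean relation: AND or OR
    model: Optional[str] = None  # set-model; None for extensional sets
    kind: Optional[str] = None   # what the set itself is an instance of

    def __post_init__(self) -> None:
        if self.boolean not in ("AND", "OR"):
            raise ValueError("boolean must be 'AND' or 'OR'")

    @property
    def size(self) -> int:
        # Membership-size belongs to the set, not to its members.
        return len(self.members)

# Each cat is an instance of the set-model 'cat', never of the set itself.
INSTANCE_OF = {"cat-1": "cat", "cat-2": "cat", "cat-3": "cat"}

def instance_of(x: Any) -> Optional[str]:
    return x.kind if isinstance(x, ConceptSet) else INSTANCE_OF.get(x)

def homogeneous(s: ConceptSet) -> bool:
    return s.model is not None and all(
        instance_of(m) == s.model for m in s.members)

cats = ConceptSet(["cat-1", "cat-2", "cat-3"], model="cat")    # kind 1
tom_dick_harry = ConceptSet(["Tom", "Dick", "Harry"])          # kind 2
family_a = ConceptSet(["Ann", "Bob"], kind="family")           # kind 3
family_b = ConceptSet(["Cy", "Di", "Ed"], kind="family")
families = ConceptSet([family_a, family_b], model="family")    # set of sets

print(cats.size, homogeneous(cats), homogeneous(tom_dick_harry))  # 3 True False
```

A collective set such as `family_a` can itself be a member of a higher set. This is why `instance_of` looks at the set's own `kind` rather than at its members.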

Situation

The term 'situation' is taken from Situation Semantics (Barwise and Perry 1983), where it is used to describe a state of affairs in the world. The notion is the same as Halliday's 'process' (Halliday 1967, 1985), but 'situation' is the better term because the notion needs to cover not only events but also states, such as 'it is cold'. A situation is a kind of 'thing'. For example, it can be referred to not only by IT, but also by WHAT:

(8) Fred was late. It was most unusual.

(9) What happened? Oh, Fred came late.

Unlike other kinds of thing, however, it is time-bound, so it has a time (and is typically referred to by verbs, which are purpose-built for defining temporal relations); but of course the time may be either unknown or generalized ('any time'). It also typically has a cause, which may not be known either.

Event

An event is distinctive, in relation to other kinds of situation, in having an internal temporal structure; in other words, the situation alters with time. One particular case of this is the 'change', where the situation is presented as flipping instantaneously from one state to another (e.g. He reached the summit.), but the typical event has an ongoing internal structure where different things are happening at different stages. A word is a clear example, in spite of its brevity, as each phoneme represents a distinct action.

In addition to having an internal structure, a typical event also occurs at some particular place; in other words, it 'has' a place. This is important for language because some words (e.g. HERE) refer to this place.

Action

The sub-type of event called 'action' is the typically human one. It has an 'actor', with prototypical properties such as exercising control and supplying energy (for further properties see e.g. Keenan 1976, Dik 1978); and the typical actor is human. As we have seen, if the action is a word, then the actor is the speaker.
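
The way a word comes to have the speaker as its actor can be sketched as default inheritance down the isa chain. The sketch assumes the usual rule that a value stated on a more specific concept takes precedence over one inherited from a more general concept. The chain is a hypothetical simplification: any intermediate nodes between 'action' and 'word' in Fig 4.1 are skipped, and the property values are informal glosses of the text.

```python
from typing import Dict

ISA: Dict[str, str] = {"word": "action", "action": "event", "event": "situation"}

# Properties stated directly on each concept (informal glosses of the text).
PROPS: Dict[str, Dict[str, str]] = {
    "situation": {"time": "some time", "cause": "some cause"},
    "event": {"place": "some place"},
    "action": {"actor": "a typical human", "purpose": "what the actor intends"},
    "word": {"actor": "the speaker"},  # a more specific value for 'actor'
}

def properties(concept: str) -> Dict[str, str]:
    """Collect properties from `concept` upward; the nearest value wins."""
    result: Dict[str, str] = {}
    node = concept
    while True:
        for key, value in PROPS.get(node, {}).items():
            result.setdefault(key, value)
        if node not in ISA:
            return result
        node = ISA[node]

print(properties("word")["actor"])    # the speaker
print(properties("action")["actor"])  # a typical human
```

Besides its actor, a word also inherits a time, a place and a purpose from the more general concepts. In this sense notions like 'time' become available in the grammar.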

Furthermore, the actor typically has a purpose in carrying out the action, so we can say that the action itself has a purpose, which is understood to be what the actor intends. The purpose is another situation, distinct from the action itself but standing in a causal relation to it. The purpose of an action is a kind of cause, but in English it can easily be distinguished from other kinds of cause, because only purposes can be questioned by means of What ... for?

(10) What did Fred kick the cat for?

(11) ?What did it rain for?

Purposes are important linguistically for a number of reasons. One is that they define the normal course of events, which may or may not terminate. This explains, for example, the well-known paradox of sentences like (12) (Dowty 1979).

(12) He was drawing a circle.

This can only be used when he is in the middle of drawing a circle, but it is only when the drawing is complete that he can be said to have drawn a circle. The paradox disappears when we distinguish the activity itself (drawing) from its purpose (producing a circle). The purpose is valid right through the activity, even though it is not achieved until the end.
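
The resolution of the paradox, separating the activity from its purpose, can be illustrated with a toy model in which an action is divided into stages. The stage counts and the names `Action`, `in_middle` and `purpose_achieved` are hypothetical. The sketch only shows that the purpose is attached to the action throughout, while its achievement comes at the end.

```python
from dataclasses import dataclass

@dataclass
class Action:
    activity: str        # the activity itself, e.g. "drawing"
    purpose: str         # what it is meant to produce, e.g. "a circle"
    stages_needed: int   # hypothetical number of stages to achieve the purpose
    stages_done: int = 0

    def description(self) -> str:
        # The purpose is valid from the start, so it can be used to
        # describe the action at any stage.
        return f"{self.activity} {self.purpose}"

    def in_middle(self) -> bool:
        # The situation in which 'He was drawing a circle' is used.
        return 0 < self.stages_done < self.stages_needed

    def purpose_achieved(self) -> bool:
        # Only now can he be said to have drawn a circle.
        return self.stages_done >= self.stages_needed

a = Action("drawing", "a circle", stages_needed=10, stages_done=4)
print(a.description(), a.in_middle(), a.purpose_achieved())
# drawing a circle True False
a.stages_done = 10
print(a.in_middle(), a.purpose_achieved())  # False True
```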

Another contribution of purposes to linguistics is in the definition of some kinds of semantic structures. As I suggested earlier, it may be that the purpose of a typical imperative verb is the same as the situation to which it refers. Thus, if Work harder! refers to the situation in which, at some time in the future, you work harder, then this situation is also its purpose. It may be that other parts of illocutionary force could be defined in terms of purposes as well.