References and Bibliography


6.8

H. H. Rosenbrock, An automatic method for finding the greatest or least value of a function, Computer Journal, Vol. 3, No. 3, pp. 175-184, 1960.

6.9

S. S. Rao, Optimization: Theory and Applications, 2nd ed., Wiley Eastern, New Delhi, 1984.

6.10

W. Spendley, G. R. Hext, and F. R. Himsworth, Sequential application of simplex designs in optimization and evolutionary operation, Technometrics, Vol. 4, p. 441, 1962.

6.11

J. A. Nelder and R. Mead, A simplex method for function minimization, Computer Journal, Vol. 7, p. 308, 1965.

6.12

A. L. Cauchy, Méthode générale pour la résolution des systèmes d'équations simultanées, Comptes Rendus de l'Académie des Sciences, Paris, Vol. 25, pp. 536-538, 1847.

6.13

R. Fletcher and C. M. Reeves, Function minimization by conjugate gradients, Computer Journal, Vol. 7, No. 2, pp. 149-154, 1964.

6.14

M. R. Hestenes and E. Stiefel, Methods of Conjugate Gradients for Solving Linear Systems, Report 1659, National Bureau of Standards, Washington, DC, 1952.

6.15

D. Marquardt, An algorithm for least squares estimation of nonlinear parameters, SIAM Journal of Applied Mathematics, Vol. 11, No. 2, pp. 431-441, 1963.

6.16

C. G. Broyden, Quasi-Newton methods and their application to function minimization, Mathematics of Computation, Vol. 21, p. 368, 1967.

6.17

C. G. Broyden, J. E. Dennis, and J. J. Moré, On the local and superlinear convergence of quasi-Newton methods, Journal of the Institute of Mathematics and Its Applications, Vol. 12, p. 223, 1975.

6.18

H. Y. Huang, Unified approach to quadratically convergent algorithms for function minimization, Journal of Optimization Theory and Applications, Vol. 5, pp. 405-423, 1970.

6.19

J. E. Dennis, Jr., and J. J. Moré, Quasi-Newton methods, motivation and theory, SIAM Review, Vol. 19, No. 1, pp. 46-89, 1977.

6.20

W. C. Davidon, Variable Metric Method of Minimization, Report ANL-5990, Argonne National Laboratory, Argonne, IL, 1959.

6.21

R. Fletcher and M.J.D. Powell, A rapidly convergent descent method for minimization, Computer Journal, Vol. 6, No. 2, pp. 163-168, 1963.

6.22

C. G. Broyden, The convergence of a class of double-rank minimization algorithms, Parts I and II, Journal of the Institute of Mathematics and Its Applications, Vol. 6, pp. 76-90, 222-231, 1970.

6.23

R. Fletcher, A new approach to variable metric algorithms, Computer Journal, Vol. 13, pp. 317-322, 1970.

6.24

D. Goldfarb, A family of variable metric methods derived by variational means, Mathematics of Computation, Vol. 24, pp. 23-26, 1970.

6.25

D. F. Shanno, Conditioning of quasi-Newton methods for function minimization, Mathematics of Computation, Vol. 24, pp. 647-656, 1970.

6.26

M.J.D. Powell, An iterative method for finding stationary values of a function of several variables, Computer Journal, Vol. 5, pp. 147-151, 1962.

6.27

F. Freudenstein and B. Roth, Numerical solution of systems of nonlinear equations, Journal of the ACM, Vol. 10, No. 4, pp. 550-556, 1963.

6.28

M.J.D. Powell, A hybrid method for nonlinear equations, pp. 87-114 in Numerical Methods for Nonlinear Algebraic Equations, P. Rabinowitz, Ed., Gordon & Breach, New York, 1970.

6.29

J. J. Moré, B. S. Garbow, and K. E. Hillstrom, Testing unconstrained optimization software, ACM Transactions on Mathematical Software, Vol. 7, No. 1, pp. 17-41, 1981.

368

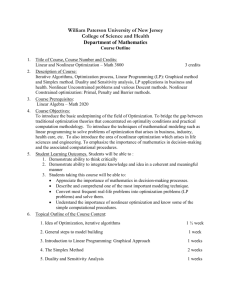

Nonlinear Programming II: Unconstrained Optimization Techniques

6.30

A. R. Colville, A Comparative Study of Nonlinear Programming Codes, Report 320-2949, IBM New York Scientific Center, 1968.

6.31

E. D. Eason and R. G. Fenton, A comparison of numerical optimization methods for engineering design, ASME Journal of Engineering Design, Vol. 96, pp. 196-200, 1974.

6.32

R.W.H. Sargent and D. J. Sebastian, Numerical experience with algorithms for unconstrained minimization, pp. 45-113 in Numerical Methods for Nonlinear Optimization, F. A. Lootsma, Ed., Academic Press, London, 1972.

6.33

D. F. Shanno, Recent advances in gradient based unconstrained optimization techniques for large problems, ASME Journal of Mechanisms, Transmissions, and Automation in Design, Vol. 105, pp. 155-159, 1983.

6.34

S. S. Rao, Mechanical Vibrations, 4th ed., Pearson Prentice Hall, Upper Saddle River, NJ, 2004.

6.35

R. T. Haftka and Z. Gürdal, Elements of Structural Optimization, 3rd ed., Kluwer Academic, Dordrecht, The Netherlands, 1992.

6.36

J. Kowalik and M. R. Osborne, Methods for Unconstrained Optimization Problems, American Elsevier, New York, 1968.

REVIEW QUESTIONS

6.1

State the necessary and sufficient conditions for the unconstrained minimum of a function.

6.2

Give three reasons why the study of unconstrained minimization methods is important.

6.3

What is the major difference between zeroth-, first-, and second-order methods?

6.4

What are the characteristics of a direct search method?

6.5

What is a descent method?

6.6

Define each term:

(a) Pattern directions

(b) Conjugate directions

(c) Simplex

(d) Gradient of a function

(e) Hessian matrix of a function

6.7

State the iterative approach used in unconstrained optimization.

6.8

What is quadratic convergence?

6.9

What is the difference between linear and superlinear convergence?

6.10

Define the condition number of a square matrix.

6.11

Why is the scaling of variables important?

6.12

What is the difference between random jumping and random walk methods?

6.13

Under what conditions are the processes of reflection, expansion, and contraction used in the simplex method?

6.14

When is the grid search method preferred in minimizing an unconstrained function?

6.15

Why is a quadratically convergent method considered to be superior for the minimization of a nonlinear function?


6.16

Why is Powell's method called a pattern search method?

6.17

What are the roles of univariate and pattern moves in Powell's method?

6.18

What is the univariate method?

6.19

Indicate a situation where a central difference formula is not as accurate as a forward difference formula.

6.20

Why is a central difference formula more expensive than a forward or backward difference formula in finding the gradient of a function?

6.21

What is the role of one-dimensional minimization methods in solving an unconstrained minimization problem?

6.22

State possible convergence criteria that can be used in direct search methods.

6.23

Why is the steepest descent method not efficient in practice, although the directions used are the best directions?

6.24

What are rank 1 and rank 2 updates?

6.25

How are the search directions generated in the Fletcher-Reeves method?

6.26

Give examples of methods that require n², n, and 1 one-dimensional minimizations for minimizing a quadratic in n variables.

6.27

What is the reason for possible divergence of Newton's method?

6.28

Why is a conjugate directions method preferred in solving a general nonlinear problem?

6.29

What is the difference between Newton and quasi-Newton methods?

6.30

What is the basic difference between DFP and BFGS methods?

6.31

Why are the search directions reset to the steepest descent directions periodically in the DFP method?

6.32

What is a metric? Why is the DFP method considered as a variable metric method?

6.33

Answer true or false:

(a) A conjugate gradient method can be called a conjugate directions method.

(b) A conjugate directions method can be called a conjugate gradient method.

(c) In the DFP method, the Hessian matrix is sequentially updated directly.

(d) In the BFGS method, the inverse of the Hessian matrix is sequentially updated.

(e) The Newton method requires the inversion of an n × n matrix in each iteration.

(f) The DFP method requires the inversion of an n × n matrix in each iteration.

(g) The steepest descent directions are the best possible directions.

(h) The central difference formula always gives a more accurate value of the gradient than does the forward or backward difference formula.

(i) Powell's method is a conjugate directions method.

(j) The univariate method is a conjugate directions method.
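Several of the questions above (6.19, 6.20, and 6.33(h)) concern finite-difference gradients. As a minimal sketch, the Python code below counts how many function evaluations the forward and central difference formulas use for a function of n variables. The test function `quadratic`, the step size `h`, and the helper class `CountingFunction` are assumptions chosen only for illustration; they do not come from the text.

```python
def forward_difference_gradient(f, x, h=1e-6):
    """Forward differences: one base evaluation plus one per variable."""
    f0 = f(x)
    grad = []
    for i in range(len(x)):
        xp = list(x)
        xp[i] += h
        grad.append((f(xp) - f0) / h)
    return grad


def central_difference_gradient(f, x, h=1e-6):
    """Central differences: two evaluations per variable."""
    grad = []
    for i in range(len(x)):
        xp = list(x)
        xm = list(x)
        xp[i] += h
        xm[i] -= h
        grad.append((f(xp) - f(xm)) / (2.0 * h))
    return grad


class CountingFunction:
    """Wraps a function and records how many times it is called."""

    def __init__(self, func):
        self.func = func
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.func(x)


def quadratic(x):
    # Hypothetical test function; its exact gradient is (2*x0 + 3*x1, 3*x0).
    return x[0] ** 2 + 3.0 * x[0] * x[1]


point = [1.0, 2.0]
fwd = CountingFunction(quadratic)
cen = CountingFunction(quadratic)
g_fwd = forward_difference_gradient(fwd, point)
g_cen = central_difference_gradient(cen, point)
print("forward:", fwd.calls, "evaluations,", g_fwd)
print("central:", cen.calls, "evaluations,", g_cen)
```

For this two-variable function the forward formula uses n + 1 evaluations and the central formula uses 2n. Which of the two is more accurate depends on the function and on the step size.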


PROBLEMS

6.1

A bar is subjected to an axial load, $P_0$, as shown in Fig. 6.17. By using a one-finite-element model, the axial displacement, $u(x)$, can be expressed as [6.1]

$$u(x) = \{N_1(x) \quad N_2(x)\} \begin{Bmatrix} u_1 \\ u_2 \end{Bmatrix}$$

where $N_i(x)$ are called the shape functions:

$$N_1(x) = 1 - \frac{x}{l}, \qquad N_2(x) = \frac{x}{l}$$

and $u_1$ and $u_2$ are the end displacements of the bar. The deflection of the bar at point Q can be found by minimizing the potential energy of the bar ($f$), which can be expressed as

$$f = \frac{1}{2} \int_0^l EA \left( \frac{\partial u}{\partial x} \right)^2 dx - P_0 u_2$$

where $E$ is Young's modulus and $A$ is the cross-sectional area of the bar. Formulate the optimization problem in terms of the variables $u_1$ and $u_2$ for the case $P_0 l/EA = 1$.
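One way to check a hand-derived formulation is to evaluate the potential energy numerically. The sketch below reads the problem's expressions as u(x) = N1(x) u1 + N2(x) u2 with N1 = 1 - x/l and N2 = x/l, and f as one half of the integral of EA (du/dx)^2 over the bar minus P0 u2. The values EA = l = P0 = 1 are an assumption that satisfies P0 l/EA = 1, and the function names, the midpoint rule, and the central-difference slope are illustrative choices, not part of the text.

```python
def displacement(x, u1, u2, length):
    """Axial displacement from the two linear shape functions."""
    n1 = 1.0 - x / length
    n2 = x / length
    return n1 * u1 + n2 * u2


def potential_energy(u1, u2, EA=1.0, length=1.0, P0=1.0, n=200):
    """Midpoint-rule estimate of f = 1/2 * int_0^l EA (du/dx)^2 dx - P0 * u2."""
    h = length / n
    eps = 1e-6 * length  # half-width used for the numerical slope
    strain_energy = 0.0
    for i in range(n):
        xm = (i + 0.5) * h
        # Central-difference estimate of du/dx at the midpoint of the cell.
        dudx = (displacement(xm + eps, u1, u2, length)
                - displacement(xm - eps, u1, u2, length)) / (2.0 * eps)
        strain_energy += 0.5 * EA * dudx ** 2 * h
    return strain_energy - P0 * u2


# Evaluate f at a few trial end displacements (assumed values).
for trial in [(0.0, 0.0), (0.0, 0.5), (0.0, 1.0), (1.0, 1.0)]:
    print(trial, potential_energy(*trial))
```

Comparing these values with a closed-form expression in u1 and u2 is a quick consistency check. Any boundary condition on u1 still has to be taken from Fig. 6.17.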

6.2

The natural frequencies of the tapered cantilever beam ($\omega$) shown in Fig. 6.18, based on the Rayleigh-Ritz method, can be found by minimizing the function [6.34]:

$$f(c_1, c_2) = \frac{\dfrac{Eh^3}{3l^3} \left( \dfrac{c_1^2}{4} + \dfrac{c_2^2}{10} + \dfrac{c_1 c_2}{5} \right)}{\rho h l \left( \dfrac{c_1^2}{30} + \dfrac{c_2^2}{280} + \dfrac{2 c_1 c_2}{105} \right)}$$

with respect to $c_1$ and $c_2$, where $f = \omega^2$, $E$ is Young's modulus, and $\rho$ is the density. Plot the graph of $3f\rho l^4/Eh^2$ in $(c_1, c_2)$ space and identify the values of $\omega_1$ and $\omega_2$.
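As a starting point for the plot, the sketch below evaluates the scaled quantity 3fρl⁴/(Eh²). It assumes the function reads f = [Eh³/(3l³)] (c1²/4 + c2²/10 + c1c2/5) / [ρhl (c1²/30 + c2²/280 + 2c1c2/105)], so that the scaled quantity reduces to the ratio of the two quadratic forms. The function names and the direction scan are illustrative assumptions. The scan exploits the fact that the ratio depends only on the direction of (c1, c2), not on its length.

```python
import math


def scaled_frequency_function(c1, c2):
    """Ratio of the two quadratic forms, i.e. 3*f*rho*l**4 / (E*h**2)."""
    numerator = c1 ** 2 / 4.0 + c2 ** 2 / 10.0 + c1 * c2 / 5.0
    denominator = c1 ** 2 / 30.0 + c2 ** 2 / 280.0 + 2.0 * c1 * c2 / 105.0
    return numerator / denominator


def scan_directions(samples=20000):
    """Evaluate the ratio along unit directions (cos t, sin t), 0 <= t < pi."""
    values = []
    for i in range(samples):
        theta = math.pi * i / samples
        values.append(scaled_frequency_function(math.cos(theta), math.sin(theta)))
    return min(values), max(values)


lowest, highest = scan_directions()
print("smallest scaled value:", lowest)
print("largest scaled value:", highest)
```

The smallest and largest values found by the scan bracket the range that the contour plot in (c1, c2) space should display. Relating them to the natural frequencies is left to the problem.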

6.3

Rayleigh's quotient corresponding to the three-degree-of-freedom spring-mass system shown in Fig. 6.19 is given by [6.34]

$$R(\mathbf{X}) = \frac{\mathbf{X}^T [K] \mathbf{X}}{\mathbf{X}^T [M] \mathbf{X}}$$

where

$$[K] = k \begin{bmatrix} 2 & -1 & 0 \\ -1 & 2 & -1 \\ 0 & -1 & 1 \end{bmatrix}, \qquad [M] = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}, \qquad \mathbf{X} = \begin{Bmatrix} x_1 \\ x_2 \\ x_3 \end{Bmatrix}$$

It is known that the fundamental natural frequency of vibration of the system can be found by minimizing $R(\mathbf{X})$. Derive the expression of $R(\mathbf{X})$ in terms of $x_1$, $x_2$, and $x_3$ and suggest a suitable method for minimizing the function $R(\mathbf{X})$.
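A derived expression for R(X) can be checked against a direct evaluation of the two quadratic forms. The sketch below assumes [K] = k [[2, -1, 0], [-1, 2, -1], [0, -1, 1]] and takes [M] as the 3 × 3 identity matrix, which is how the matrices are read here. The default k = 1 and the function names are illustrative assumptions.

```python
STIFFNESS = [[2.0, -1.0, 0.0],
             [-1.0, 2.0, -1.0],
             [0.0, -1.0, 1.0]]   # [K] / k, as read from the problem
MASS = [[1.0, 0.0, 0.0],
        [0.0, 1.0, 0.0],
        [0.0, 0.0, 1.0]]         # [M], assumed to be the identity


def quadratic_form(matrix, x):
    """Return x^T A x for a square matrix given as nested lists."""
    n = len(x)
    return sum(x[i] * matrix[i][j] * x[j] for i in range(n) for j in range(n))


def rayleigh_quotient(x, k=1.0):
    """R(X) = X^T [K] X / X^T [M] X; undefined for the zero vector."""
    return k * quadratic_form(STIFFNESS, x) / quadratic_form(MASS, x)


# Trial vectors chosen arbitrarily for illustration.
for trial in [[1.0, 0.0, 0.0], [1.0, 1.0, 1.0], [1.0, 2.0, 3.0]]:
    print(trial, rayleigh_quotient(trial))
```

Because R(X) is unchanged when X is multiplied by a nonzero constant, any minimization routine applied to it needs a normalization or a fixed component to avoid drifting along that scale direction.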

Figure 6.17 Bar subjected to an axial load.