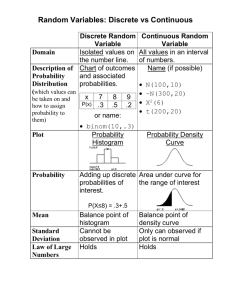

Continuous Probability Distributions

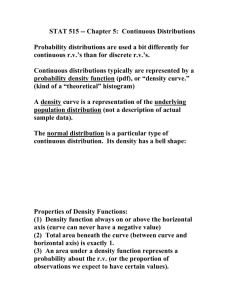

Continuous Random Variables

You’ve seen now how to handle a discrete random variable, by listing all its values along with their probabilities. But what if you’re dealing with a continuous random variable, like height or weight or duration (something measured) and you want to talk about the probability of the random variable taking on different values? Clearly you can’t just list all the possible values. You’d have to spend the rest of your life doing it, and even then you wouldn’t make a dent.

Say you were weighing something, and the random variable is the weight. Even if you could give a probability for, say, 42.783 and 42.784, you’d still have to list, for instance, 42.7835. Between any two rational numbers there is another one, and so on and so on. We say that these numbers are dense. We simply can’t list them all.

So with continuous random variables a whole different approach to probability is used. First, we don’t speak of the probability that the random variable takes on an individual value. Instead we deal with the probability that the random variable falls within a certain range of values. The probability that the variable takes on an individual value is 0. Nothing weighs 42.783 g on the nose, but there may be a positive probability that whatever it is we’re weighing will weigh between 42.783 g and 42.784 g.

And how do we find these probabilities of ranges of values? Not by adding up individual probabilities, as you can see, but by using a concept from calculus (don’t let that word scare you; you’ll find that it’s surprisingly easy to grasp): We look at what’s called the area under the curve.

Probability Density Functions (PDF)

Let me explain this using a really simple example. Let’s say that I arrive at work every morning between 7 a.m. and 9 a.m. I never come before 7 or after 9. It isn’t that I mostly arrive pretty near 8 a.m. I am as likely to arrive at 7:19 as at 7:58 as at 8:13 as at 8:45.
In mathematical terms, my arrival times are uniformly distributed from 7 a.m. to 9 a.m.

What fraction of the mornings will I arrive between 7:30 and 8? Another way to ask this question is: What is the probability that on a given morning I will arrive between 7:30 and 8? The answer is 1/4, or 0.25, or 25%. I arrive between 7 a.m. and 9 a.m. on 100% of the days, I’m equally likely to arrive at any time between 7 and 9, and the half hour from 7:30 to 8 is one-fourth of this two-hour interval. So the probability that I’ll arrive between 7:30 and 8 is one-fourth of 100%, or 25%.

I hope that seems obvious. But here’s how we’d do this using the probability distribution of a continuous random variable. Look at this graph:

The t-axis represents my time of arrival at work. The thick line, which we call a probability density function, shows how the probability of my arrival is spread over the possible times. Its height is 0 before 7 a.m. (the thick line coincides with the t-axis), shoots up to a certain level at 7 and maintains that level, and then drops back down to 0 at 9 a.m.

What is this certain level? To find that, think back to discrete probability distributions. There, the P(X)’s all had to add up to 1. One of the X values was sure to occur. It’s the same thing in the continuous case, only now we talk about the total area under the curve equaling 1. In fact, that’s part of the definition of a probability density function: the total area under it must equal 1.

In our case the area is a rectangle bounded by the vertical lines at 7 and at 9, the t-axis, and our probability density function. This area is 1. The area of a rectangle is the product of its height and its width. The width is 2 hours, from 7 to 9. So the height must be 1/2, or 0.5, because (1/2)*2 = 1.
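The height calculation can be checked numerically. The following is a minimal Python sketch, not part of the original notes: it assumes times are written as hours on a 24-hour clock, and the names `density` and `area_under_density` are made up for illustration. It picks the height that makes the rectangle's area 1 and then approximates the total area by adding up thin strips.

```python
# Arrival-time example; times are hours on a 24-hour clock (an assumption
# made for this sketch).
start, end = 7.0, 9.0          # arrivals happen between 7 a.m. and 9 a.m.

width = end - start            # width of the rectangle, in hours
height = 1.0 / width           # the height that makes the total area equal 1


def density(t):
    """Height of the probability density function at time t."""
    return height if start <= t <= end else 0.0


def area_under_density(lo, hi, steps=100_000):
    """Approximate the area under the density from lo to hi (midpoint rule)."""
    dt = (hi - lo) / steps
    return sum(density(lo + (i + 0.5) * dt) for i in range(steps)) * dt


# Integrate over a range wider than [7, 9]; the density is 0 outside it.
total_area = area_under_density(6.0, 10.0)

print(height)                  # 0.5
print(round(total_area, 3))    # 1.0
```

Adding up strips is overkill for a rectangle, but the same idea carries over to densities whose areas cannot be found with simple geometry.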
Finding Probability from a PDF

Now I’ll label the vertical axis with the 0.5 and shade in the area we’re interested in:

In this system, the probability that I’ll arrive between 7:30 and 8 is equal to the shaded area. It too is a rectangle, with width 1/2 (the half hour from 7:30 to 8) and height 1/2, so its area is (1/2)*(1/2) = 0.25. This is the same answer we got using common sense. I just wanted to illustrate the concept of the probability density function (pdf) and area under the curve, and especially to emphasize the defining characteristic of a pdf as having a total area underneath it (and above the horizontal axis) of 1.

Before we leave this example, let’s consider a trick question: what is the probability that I arrive at exactly the moment of 7:30? The answer, somewhat surprisingly, is zero, simply because there is no area to be spoken of under a single point. Of course, when we normally say we “arrive at 7:30”, we are really looking at the probability of arriving between 7:30 and 7:31 (otherwise, no one will arrive on time!), in which case the probability is (1/60)*(1/2) = 1/120.

Uniform Distribution

Now let’s approach what we just discussed using mathematical language. A continuous probability distribution with a PDF shaped like a rectangle has a name: uniform distribution. Here the word “uniform” refers to the fact that the function is a constant on a certain interval (7 a.m. to 9 a.m. in our case), and zero everywhere else. Using the language of functions, we can describe the PDF of the uniform distribution as:

$$f(x) = \begin{cases} \dfrac{1}{b - a} & \text{if } a \le x \le b \\ 0 & \text{otherwise} \end{cases}$$

where X is the random variable, a is the lower bound of the distribution’s range, and b is the upper bound of the distribution’s range. The interval [a, b] is also called the “support” of the PDF, i.e. where the function is positive. When a and b are given, we can also use the notation:

$$X \sim U(a, b)$$

The upper case U simply denotes that it is a Uniform Distribution.
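As a rough illustration of the definition above, here is a short Python sketch of the uniform PDF together with a helper that returns the probability of an interval as width times height. The function names `uniform_pdf` and `uniform_prob` are hypothetical, chosen for this sketch only, and arrival times are again assumed to be hours on a 24-hour clock.

```python
def uniform_pdf(x, a, b):
    """PDF of U(a, b): 1/(b - a) on [a, b], and 0 everywhere else."""
    if b <= a:
        raise ValueError("need a < b")
    return 1.0 / (b - a) if a <= x <= b else 0.0


def uniform_prob(lo, hi, a, b):
    """P(lo < X < hi) for X ~ U(a, b), computed as rectangle width * height."""
    if b <= a:
        raise ValueError("need a < b")
    # Only the part of (lo, hi) that lies inside [a, b] carries probability.
    overlap = max(0.0, min(hi, b) - max(lo, a))
    return overlap * (1.0 / (b - a))


# Arrival-time example, X ~ U(7, 9):
p_half_hour = uniform_prob(7.5, 8.0, 7.0, 9.0)            # between 7:30 and 8:00
p_exact = uniform_prob(7.5, 7.5, 7.0, 9.0)                # exactly 7:30
p_one_minute = uniform_prob(7.5, 7.5 + 1 / 60, 7.0, 9.0)  # 7:30 to 7:31

print(p_half_hour)               # 0.25
print(p_exact)                   # 0.0
print(round(p_one_minute, 5))    # 0.00833, i.e. about 1/120
```

Clipping the interval to [a, b] is a design choice of this sketch; it lets the helper accept ranges that extend past the support without returning a probability above 1.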
As we saw in the first example of arrival time, a Uniform distribution has the following properties:

1. It is a continuous distribution. The random variable X can take any value in an interval, not just a set of separate, discrete values. There also exist discrete uniform distributions, in which each of a finite number of values is equally likely, but this section deals only with the continuous type.
2. The probability density function is constant for all values of x between a and b. This means that intervals of equal width within that range are equally probable. In other words, no value in the range is favored over any other. This is the clearest indication that one is dealing with a Uniform distribution.
3. The total area underneath the probability density curve is equal to one. This is a property of all probability density curves, but for a Uniform distribution it means that the height of the curve f(x) needs to be the reciprocal of (b − a), the width of the interval.

Solving Problems related to Uniform Distributions

As we saw in the example of arrival time, the probability of a continuous random variable taking any single value d is always zero, i.e. $P(X = d) = 0$. This applies to Uniform Distributions, as they are continuous. Because of this, $P(X \le d)$ and $P(X < d)$ are always the same. This is because

$$P(X \le d) = P(X < d) + P(X = d).$$

But the probability of X being any single value on a continuous distribution is zero, so $P(X = d) = 0$ and therefore $P(X \le d) = P(X < d)$.

You probably noticed that this is quite different from the discrete distributions: if we toss a coin 4 times, and let X be the number of heads, then for sure $P(X \le 2) \ne P(X < 2)$. So it’s quite important to understand which kind of distribution you are applying to the problem at hand.

The probability of a continuous random variable falling within a range of values is generally nonzero, however.
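To make the discrete side of that contrast concrete, here is a small Python sketch of the coin example. It assumes a fair coin (the text does not say so explicitly) and uses the binomial formula for the number of heads; the helper name `heads_pmf` is made up for illustration.

```python
from math import comb


def heads_pmf(k, n=4, p=0.5):
    """P(X = k) heads in n tosses; a fair coin (p = 0.5) is an assumption."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


p_at_most_2 = sum(heads_pmf(k) for k in range(0, 3))    # P(X <= 2)
p_less_than_2 = sum(heads_pmf(k) for k in range(0, 2))  # P(X < 2)
p_exactly_2 = heads_pmf(2)                              # P(X = 2)

print(p_at_most_2)      # 0.6875  (11/16)
print(p_less_than_2)    # 0.3125  (5/16)
print(p_exactly_2)      # 0.375   (6/16)
```

The two probabilities differ by exactly P(X = 2), which is not zero for a discrete variable. For a continuous variable that single-value term vanishes, and only ranges of values carry probability.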
As with all distributions, these probabilities can be represented as various ratios of the area under the probability density function curve. Viewing the probabilities of certain relationships as ratios of areas is perhaps clearer than looking at formulas alone.

Suppose there exists a Uniform probability distribution function on the interval (c, d). The dark shaded region represents the probability that the random variable falls on the interval (e, f). It is given by the area of the darker shaded region:

$$P(e < X < f) = (f - e) \cdot \frac{1}{d - c} = \frac{f - e}{d - c}$$

Now, something a bit trickier that involves conditional probability: $P(X < f \mid X > e)$.

In this case the dark shaded region represents the probability that the random variable X is less than f given that X is greater than e. As we saw in the chapter on probability, P(A | B) can be calculated by using:

$$P(A \mid B) = \frac{P(A \text{ and } B)}{P(B)}$$

Replacing A and B with the events in the uniform distribution, the conditional probability $P(X < f \mid X > e)$ becomes the ratio between the dark shaded region and the lighter region:

$$P(X < f \mid X > e) = \frac{\text{Dark}}{\text{Light}} = \frac{(f - e) \cdot \frac{1}{d - c}}{(d - e) \cdot \frac{1}{d - c}} = \frac{f - e}{d - e}$$

Last example:

Here, the dark shaded region represents the probability that the random variable falls on the interval (f, g) given that it is known to be somewhere on the interval (e, h). Once more, this probability is found as the ratio of the darker region to the lighter region:

$$P(f < X < g \mid e < X < h) = \frac{\text{Dark}}{\text{Light}} = \frac{(g - f) \cdot \frac{1}{d - c}}{(h - e) \cdot \frac{1}{d - c}} = \frac{g - f}{h - e}$$

Notice that in the last two cases the “height” of the rectangle ends up canceling between the numerator and the denominator. So we end up comparing the width of the smaller interval to the width of the larger interval (the event that is “given”).

Mean and Standard Deviation of Uniform Distributions

Given the shape of the uniform distribution, it’s probably no surprise to you that the (population) mean of a uniform distribution, if $X \sim U(a, b)$, is just:

$$\mu = \frac{a + b}{2}$$

i.e. the mid-point of the interval.
The variance is a bit more complicated:

$$\sigma^2 = \frac{(b - a)^2}{12}$$

Hence the standard deviation is

$$\sigma = \sqrt{\frac{(b - a)^2}{12}} = \frac{b - a}{\sqrt{12}}.$$

We got pretty far by using middle school geometry. But to get more serious, we will need calculus to understand how to work with continuous distributions. If you have taken calculus, you will recognize that the probability under a continuous distribution curve is the definite integral that corresponds to the area. If you are itching to do some real math, here are a few more pieces of information that you need: the expected value (or mean) of a continuous distribution is defined as

$$\mu = \int_{-\infty}^{\infty} x \, f(x) \, dx.$$

The variance, on the other hand, is defined as

$$\sigma^2 = \int_{-\infty}^{\infty} (x - \mu)^2 f(x) \, dx.$$

Together with the PDF of the uniform distribution, you should be able to derive the mean and standard deviation formulas yourself.

Example

Problem 1: Suppose a local animal shelter has a room filled with cats between one and nine years old, and that the ages of these cats are uniformly distributed. If one were to pick up a cat, what is the probability that the cat picked up is between five and six years old?

You will probably want to draw the uniform distribution first. When you have the picture, it’s easy to find the area of the rectangle with (5, 6) as the base:

$$P(5 < X < 6) = \frac{6 - 5}{9 - 1} = \frac{1}{8} = 0.125$$

Problem 2: Given the same room full of cats as above, if one were to pick up a cat, what is the probability that the cat picked up is older than 3, given that its age is within 1 standard deviation of the mean?

We will apply the mean and standard deviation formulas given above:

$$\mu = \frac{1 + 9}{2} = 5, \qquad \sigma = \sqrt{\frac{(9 - 1)^2}{12}} \approx 2.3$$

“Within 1 standard deviation of the mean” can be stated as $5 - 2.3 < X < 5 + 2.3$. We are looking for a conditional probability: $P(X > 3 \mid 2.7 < X < 7.3)$. By dividing the smaller rectangle with (3, 7.3) as the base by the larger rectangle with (2.7, 7.3) as the base, we find the conditional probability:

$$P(X > 3 \mid 2.7 < X < 7.3) = \frac{P(3 < X < 7.3)}{P(2.7 < X < 7.3)} = \frac{7.3 - 3}{7.3 - 2.7} \approx 0.935$$
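Both problems can be double-checked with a few lines of Python. This is only a sketch under the assumptions of the example (cat ages uniform on 1 to 9 years); the helper names `uniform_mean`, `uniform_sd`, `interval_prob`, and `conditional_prob` are invented here and do not come from any library.

```python
from math import sqrt


def uniform_mean(a, b):
    """Mean of U(a, b): the midpoint of the interval."""
    return (a + b) / 2


def uniform_sd(a, b):
    """Standard deviation of U(a, b): (b - a) / sqrt(12)."""
    return (b - a) / sqrt(12)


def interval_prob(lo, hi, a, b):
    """P(lo < X < hi) for X ~ U(a, b), clipped to the support [a, b]."""
    overlap = max(0.0, min(hi, b) - max(lo, a))
    return overlap / (b - a)


def conditional_prob(lo, hi, given_lo, given_hi, a, b):
    """P(lo < X < hi | given_lo < X < given_hi) as a ratio of two areas."""
    joint = interval_prob(max(lo, given_lo), min(hi, given_hi), a, b)
    return joint / interval_prob(given_lo, given_hi, a, b)


a, b = 1, 9                      # cat ages in years, X ~ U(1, 9)

# Problem 1: P(5 < X < 6)
p1 = interval_prob(5, 6, a, b)

# Problem 2: P(X > 3 | within 1 standard deviation of the mean)
mu = uniform_mean(a, b)
sigma = uniform_sd(a, b)
s = round(sigma, 1)              # 2.3, rounded the same way as in the text
p2 = conditional_prob(3, b, mu - s, mu + s, a, b)

# Same calculation without rounding sigma first
p2_unrounded = conditional_prob(3, b, mu - sigma, mu + sigma, a, b)

print(p1)                        # 0.125
print(mu, round(sigma, 3))       # 5.0 2.309
print(round(p2, 3))              # 0.935
print(round(p2_unrounded, 3))    # 0.933
```

The small gap between 0.935 and 0.933 comes purely from rounding the standard deviation to 2.3 before forming the interval.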