Controlling Machines with Your Brain

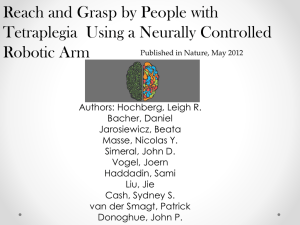


Behraam Baqai
WRIT 340
May 1, 2013

With recent advances in brain-machine interfaces, controlling computers or robotic implements with thoughts alone is now possible. Patients with neurological damage can be taught to move a mechanical arm connected to their brain through implanted electrodes. Engineers are already working toward wireless control and developing consumer products that connect to users' brains. The coming century will bring us closer to a future in which one merely needs to think about something to achieve it.

Your brain on machines

Wake up in the morning and think about a nice cup of coffee to ease you into the day: your neural implant sends a signal to your coffee maker to brew some right away. Biomedical engineers are working to make that futuristic dream a reality. Connecting the human brain with the vast resources of modern engineering know-how is bringing about exciting results in brain-machine interfaces. Researchers have developed systems that connect the brain's neurons to man-made devices, allowing users to perform functions just by thinking about them. Recent advances in neuroscience have allowed better characterization of neurons, the brain's main signaling cells, and a better understanding of how specific parts of the brain control different functions. Meanwhile, electronics research has reached the point where making extremely small components is commonplace. The convergence of these two fields in brain-computer interfaces (BCIs) has led to therapies for paralyzed patients and to consumer technologies alike, both of which tap into brain signals to control devices.

History of the Brain-Computer Interface

In the 1970s, the Brain Research Institute at UCLA began research into feeding electroencephalogram (EEG) signals to the newly developed computer, to see whether the two could communicate [1]. An EEG measures the electrical activity of the brain through external electrodes placed on the scalp.
These electrical signals are produced as neurons send impulses to one another to communicate [1]. In this respect the brain resembles a computer: both run on electrical signals, although the voltages in the brain are a few orders of magnitude smaller than those in computer circuits. The researchers evoked responses in test subjects by having them play a rudimentary computer game called "space war" [2]. Whenever subjects saw their own or an enemy spaceship explode, the EEG data recorded around that moment was entered into the system. Further experiments measured responses to physical stimuli, such as being tapped on the shoulder or seeing a flash of light [3]. The purpose of these experiments was to get a first look at what kinds of data could be measured from the brain, and to lay the groundwork for what was to come. Still, the technology of the day was not yet advanced enough for implanted devices, which could potentially read brain signals more accurately. Other groups have since found that the human brain transmits data at 3.5 bits/second [4]. For comparison, wired Ethernet communication in the 1970s achieved 2.94 megabits/second, and today's Ethernet achieves bitrates over 30,000 times greater [5]. By the late 1970s, the Laboratory of Neural Control, associated with the National Institutes of Health, used implanted circuitry in rhesus monkeys. The researchers trained the monkeys to fire specific groups of neurons and rewarded them with treats when they performed well [4]. During training, the monkeys performed specific arm movements that corresponded to a digital signal, and a panel lit up to show whether the action was correct. Implantable devices were finally approaching the precision, safety, and effectiveness needed to connect neural signals to responsive electronics.

Modern breakthroughs

In the last decade, major advances have been made in the BCI field.
Motor cortex neurons, the brain cells responsible for movement, have been used to generate control signals that operate artificial devices. With a little reward training, monkeys implanted with electrodes, in a setup similar to the one shown in Figure 1, can move computer cursors with their brains alone [6]. The monkeys watch the cursor move on a screen. This straightforward visual feedback is important because it lets the brain see what it is controlling. The monkeys can follow a generated path with a fairly high degree of precision. Visual and other sensory feedback, coupled with dynamically learning algorithms, compensates for inaccuracies inherent in the model and can extend the system beyond what was formerly possible for BCIs.

Source: JohnManuel/Wikimedia Commons http://upload.wikimedia.org/wikipedia/commons/f/fd/BrainGate.jpg
Figure 1. A conceptual illustration of a brain-machine interface.

Even more recently, in 2012, researchers at the University of Pittsburgh developed a robotic arm that could be controlled by paralyzed patients. Jan Scheuermann, who is paralyzed from the neck down, has a neurodegenerative disorder. It was diagnosed 13 years earlier, when the symptoms that led to her paralysis first emerged. Since then she had relied on traditional methods, and on her family's support, to get through daily life. As part of this study, however, she used the new robotic arm, which she affectionately named Hector (Figure 2), to move, grasp, and manipulate objects with a precision that had been impossible until now [7]. Moving at a speed matching that of an able-bodied person, Scheuermann controls every action with her thoughts, which are transmitted through implanted electrodes. During a demonstration for reporters she fed herself chocolate. She also happily drank a cup of coffee for the first time since her diagnosis, showing how quadriplegics could regain arm function at home once the system reaches the market [8].
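The cursor and arm control described above depends on an algorithm that turns motor cortex activity into a movement command. One classic textbook technique for this is the population vector. Each neuron is assumed to fire fastest for movements in its own "preferred direction", and the decoded movement is the sum of those directions, weighted by how strongly each neuron is firing.

The Python sketch below is a hypothetical illustration of that technique, not the decoder used in [6] or by the Pittsburgh team. The cosine tuning model, the firing-rate numbers, and the use of one simulated neuron for each of 96 channels are all assumptions.

```python
import math

# Hypothetical sketch of a population-vector decoder. Every number here
# (baseline rate, modulation depth, 96 channels) is an illustrative assumption.

BASELINE_HZ = 10.0  # assumed resting firing rate, spikes per second
DEPTH_HZ = 8.0      # assumed extra firing for movement in the preferred direction


def cosine_tuned_rate(direction, preferred):
    """Firing rate of a cosine-tuned neuron for a movement direction (radians)."""
    return BASELINE_HZ + DEPTH_HZ * math.cos(direction - preferred)


def population_vector(rates, preferred_dirs):
    """Decode a 2-D movement vector from a list of firing rates.

    Each neuron votes for its preferred direction, weighted by how far its
    rate sits above (positive weight) or below (negative weight) baseline.
    """
    x = y = 0.0
    for rate, pd in zip(rates, preferred_dirs):
        weight = (rate - BASELINE_HZ) / DEPTH_HZ
        x += weight * math.cos(pd)
        y += weight * math.sin(pd)
    # With evenly spaced preferred directions the sums equal (n/2)*cos and
    # (n/2)*sin of the true direction, so scaling by 2/n yields a unit vector.
    scale = 2.0 / len(rates)
    return x * scale, y * scale


if __name__ == "__main__":
    n_channels = 96  # one simulated neuron per channel (assumption)
    preferred = [2 * math.pi * i / n_channels for i in range(n_channels)]
    intended = math.radians(45)
    rates = [cosine_tuned_rate(intended, pd) for pd in preferred]
    vx, vy = population_vector(rates, preferred)
    print(round(math.degrees(math.atan2(vy, vx)), 1))  # prints 45.0
```

The simulated population is noise-free and its preferred directions are evenly spaced, so the decoder recovers the intended 45° direction. With real recordings the estimate is noisy, which is one reason the visual feedback and learning algorithms mentioned above matter.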
Source: http://www.popsci.com/technology/article/2012-12/brain-machine-interface-breakthroughs-enable-most-lifelike-mind-controlled-prosthetic-arm
Figure 2. Jan Scheuermann pointing at something using Hector, the robotic arm.

How Brain-Computer Interfaces work

These systems use a mathematical algorithm to convert the subject's brain signals into a usable form. The algorithm transforms the neural activity into the control signal that operates whatever machinery is being used [4]. Behind these advances lies the increasing sophistication of electrical connections to the brain. For example, Hector relies on some 96 electrical prongs in Scheuermann's brain, which allow her to control all of its degrees of motion [8]. The 0.25-inch by 0.25-inch electrode arrays are also used to train her brain to produce movement. Just as patients with spinal cord damage have to relearn how to walk, patients in these studies have to relearn how to control their movements. Scheuermann underwent a kind of rehabilitation in which her neurons had to perform actions they had not performed in 13 years, turning the thought of moving a limb into actual movement. The many small differences between the nuanced movements of a human limb and those of a robotic one take time to get used to, and it took many sessions for Scheuermann to master Hector. Emotionally meaningful actions, like reaching out and touching one's child, are now possible with such BCIs. Renewed satisfaction with life and a return to one's full potential can turn around the mental and emotional outlook of many of these patients. Other engineers are using functional magnetic resonance imaging (fMRI), which detects changes in blood flow, to see which brain regions become active when patients think about performing specific motor movements [9].
With this approach, researchers can identify the particular regions of the brain that control specific actions, and use that information to build better BCIs. The complex algorithms involved have already achieved a 91.6% success rate in translating brain signals into the intended movement [9]. The methods for transforming the brain's electrical signals into commands for the robotic arm are still being improved, though they are already among the most advanced parts of the system. At the moment, the time it takes to train the brain varies with the patient's level of function before the electrodes are implanted. Scheuermann took 2 weeks to make full use of all aspects of the hand. With further use her speed increased rapidly, and the researchers expect refinements to their algorithms to improve it further.

Upcoming technologies using brain signals

Another application of this neural research is in consumer products, such as computer peripherals that use external sensors similar to those of an EEG. One such device, the Neural Impulse Actuator (NIA) by OCZ Technology, correlates sensed brain signals with computer input such as mouse clicks and keyboard commands, as seen in Figure 3. It passes the incoming gesture and brain-frequency signals through a series of filters and converts them to digital form. The computer then processes these signals using OCZ's algorithms and translates them into clicks or keystrokes. Although the NIA takes facial expressions into account in addition to neural impulses, experienced users have been able to play Unreal Tournament 3 seamlessly and hands-free [10]. That said, the range of actions this device can substitute for is fairly limited compared with implant-based robotics. Still, consumers will enjoy the hands-free experience when they merely need to click or press a few buttons.
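OCZ's filtering algorithms are not published, so the following Python sketch only illustrates the general idea of turning a band of brain-signal frequencies into a click. It swaps in a standard technique, the Goertzel algorithm, which computes the strength of a single frequency bin of a sampled signal. Summing several bins gives the signal strength in a frequency band, and a threshold turns that into an on/off command.

The following settings are hypothetical choices, not the NIA's actual settings:

- a 256 Hz sampling rate;
- a one-second window;
- an 8 to 12 Hz band;
- a threshold of 5 (in arbitrary signal units).

```python
import math

# Hypothetical band-power "click" detector. Sampling rate, band and threshold
# are illustrative assumptions, not values used by the OCZ NIA.


def goertzel_power(samples, k):
    """Squared magnitude of DFT bin k of `samples`, via the Goertzel algorithm."""
    n = len(samples)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev * s_prev + s_prev2 * s_prev2 - coeff * s_prev * s_prev2


def band_amplitude(samples, sample_rate_hz, low_hz, high_hz):
    """Estimate the amplitude of the signal content between low_hz and high_hz."""
    n = len(samples)
    first_bin = math.ceil(low_hz * n / sample_rate_hz)
    last_bin = math.floor(high_hz * n / sample_rate_hz)
    total = sum(goertzel_power(samples, k) for k in range(first_bin, last_bin + 1))
    # Floating-point rounding can leave a tiny negative total for an empty band.
    total = max(total, 0.0)
    # A sine of amplitude A whose frequency falls exactly on one bin has
    # power (A * n / 2) ** 2, so this inverts that relationship.
    return 2.0 * math.sqrt(total) / n


def detect_click(samples, sample_rate_hz, low_hz=8, high_hz=12, threshold=5.0):
    """Return True (issue a click) when the band amplitude exceeds the threshold."""
    return band_amplitude(samples, sample_rate_hz, low_hz, high_hz) > threshold


if __name__ == "__main__":
    fs = 256                         # assumed samples per second
    t = [i / fs for i in range(fs)]  # one-second analysis window
    resting = [2.0 * math.sin(2 * math.pi * 20 * ti) for ti in t]   # energy outside the band
    focused = [10.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]  # strong in-band rhythm
    print(detect_click(resting, fs), detect_click(focused, fs))  # prints False True
```

Goertzel is used here instead of a full Fourier transform because a detector like this only needs a handful of frequency bins. Evaluating each bin costs one pass over the window.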
When it was demonstrated at CeBIT 2008 [10], the NIA was still a pre-release product. As one of the first consumer brain-interface devices, it has a lot of potential for further improvements in accuracy and depth of capability.

Source: http://hardwaregadget.blogosfere.it/2008/12/mouse-per-pc-i-suoi-primi-40-anni.html
Figure 3. A man using the NIA together with a mouse to control his actions.

The expanding reach of the brain to come

In the future, both implanted and external brain-machine interface devices will continue to improve. First, implants will become small enough to leave room for response mechanisms. For instance, an implant might monitor brain chemistry and brain waves and detect neurotransmitter levels that indicate depression. It could then activate a drug-release bay on the implant to counter the condition in real time. Implants will also integrate medical testing and diagnostics with onboard computing, supporting patients whose disabilities give them multiple risk factors for certain diseases. Feedback loops will be added to return sensory information to the brain as a robotic arm moves and interacts with objects. In the short term, rudimentary information such as roughness or smoothness will be fed back to the brain. In coming decades, engineers might be able to build sensing arms and even hands that could be reattached to the body itself. Imagine re-engineered limbs for a disabled veteran or a quadriplegic that can move as before and can also feel texture and temperature. In the short term, Wi-Fi links will remove the need for wired brain-to-robot connections and make devices more convenient. The resulting safety issues stem from transmitting and receiving wireless radio signals directly to and from the brain. Existing products similar to the OCZ NIA that transfer data over wireless protocols were found to be vulnerable to security exploits from day one [11].
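The attack described in [11] relied on the P300, a positive swing in the EEG. It appears roughly 300 ms after a person sees something meaningful to them, such as a digit of their own PIN. A single response is buried in noise, so an attack of this kind shows each stimulus many times and averages the recordings.

The Python sketch below simulates that process. The following are invented for illustration and are not values from [11]:

- the signal model;
- the 250 Hz sampling rate;
- the 250 to 500 ms scoring window;
- the noise level;
- the 40 repetitions.

```python
import math
import random
from statistics import mean

# Hypothetical simulation of P300-based guessing. All parameters are
# illustrative assumptions, not measurements from a real headset or study.

SAMPLE_RATE_HZ = 250
EPOCH_SAMPLES = 200          # 800 ms recorded after each stimulus
P300_WINDOW_MS = (250, 500)  # window in which the P300 is scored


def simulated_epoch(is_meaningful, rng):
    """One stimulus-locked recording: Gaussian noise, plus a bump near 300 ms
    when the stimulus means something to the user (amplitudes in microvolts)."""
    epoch = []
    for i in range(EPOCH_SAMPLES):
        t_ms = i * 1000 / SAMPLE_RATE_HZ
        bump = 5.0 * math.exp(-((t_ms - 300) / 60) ** 2) if is_meaningful else 0.0
        epoch.append(bump + rng.gauss(0.0, 10.0))
    return epoch


def p300_score(epochs):
    """Average repeated epochs sample by sample, then return the mean
    amplitude of the averaged waveform inside the P300 window."""
    averaged = [mean(samples) for samples in zip(*epochs)]
    start = int(P300_WINDOW_MS[0] * SAMPLE_RATE_HZ / 1000)
    stop = int(P300_WINDOW_MS[1] * SAMPLE_RATE_HZ / 1000)
    return mean(averaged[start:stop])


def guess_meaningful_stimulus(epochs_by_stimulus):
    """Pick the stimulus whose averaged response looks most like a P300."""
    return max(epochs_by_stimulus, key=lambda s: p300_score(epochs_by_stimulus[s]))


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    secret_digit = 7        # the digit the simulated user recognizes
    recordings = {
        digit: [simulated_epoch(digit == secret_digit, rng) for _ in range(40)]
        for digit in range(10)
    }
    print("best guess:", guess_meaningful_stimulus(recordings))
```

Averaging is what makes this work. The noise is uncorrelated from one repetition to the next, so it shrinks roughly with the square root of the number of repetitions. The stimulus-locked P300 bump does not shrink.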
A hacker could scan the brain waves for a "P300 response", which indicates that the user recognized something meaningful. The hacker could then use it to pinpoint sensitive data such as bank PINs or personal information. Neural implants will also need safeguards against malicious attacks on individuals' "brain Wi-Fi addresses". Consider the internet's existing security problems, along with its incomparable benefits, and extrapolate them to an internet connected to your brain. Attention to security details is paramount when developing these products. The eager market might prove ripe for harvesting sensitive data, and even for more serious harm to brain health. Extensive testing is needed to determine such effects on the body. Wireless communications have been studied extensively, but no consensus has been reached on the extent of the damage, if any, that they cause to the human body [12]. Regardless, further capabilities such as neural interfaces with the internet will raise ethical questions. These devices could give people unfair advantages in all aspects of life. Furthermore, not all economic groups will have access to implants and other augmentations. This could create a divergence in capabilities, and possibly in physical attributes, between social classes. Given enough time and many generations, the Homo sapiens sapiens population could split off a branch that requires substantial augmentation, as in some science fiction universes. The possibilities are both daunting and exciting, and society as a whole will have to discuss them at length.

In short, BCIs are going to drastically improve the quality of life for disabled people with access to this emerging technology. Engineering great advances in the connections from brain to computer and vice versa will take time, but the rewards for society will be worth the wait.

References

[1] MedlinePlus. (2013, January). EEG. U.S. National Library of Medicine. Bethesda, MD. [Online]. Available: http://www.nlm.nih.gov/medlineplus/ency/article/003931.htm
[2] J. J. Vidal. (1973, June). Towards Direct Brain-Computer Communication. Annual Review of Biophysics and Bioengineering [Online]. 2, 157-180. Available: http://www.annualreviews.org/doi/abs/10.1146/annurev.bb.02.060173.001105
[3] J. J. Vidal. (1977, May). Real-Time Detection of Brain Events in EEG. Proceedings of the IEEE [Online]. Available: http://www.cs.ucla.edu/~vidal/Real_Time_Detection.pdf
[4] E. M. Schmidt et al. (1978, September 1). Fine control of operantly conditioned firing patterns of cortical neurons. Experimental Neurology [Online]. 61(2), 349-369. Available: http://ac.els-cdn.com/0014488678902522/1-s2.0-0014488678902522-main.pdf?_tid=d1bd671e701e-11e2-99d8-00000aacb362&acdnat=1360129024_ad98bdc3e896067a940144941ea4f2ef
[5] R. Myslewski. (2013, February 9). Ethernet at 40: Its daddy reveals its turbulent youth [Online]. Available: http://www.theregister.co.uk/2013/02/09/metcalfe_on_ethernet/print.html
[6] Nature. (2002). Brain-machine interface: Instant neural control of a movement signal. Nature [Online]. 416, 141-142. Available: http://donoghue.neuro.brown.edu/library/images/briefcomm_files/DynaPage%281%29.html
[7] C. Dillow. (2012, December 17). Paralyzed Woman Can Eat A Chocolate Bar, With Graceful Mind-Controlled Prosthetic Arm. Popular Science [Online]. Available: http://www.popsci.com/technology/article/2012-12/brain-machine-interface-breakthroughs-enable-most-lifelike-mind-controlled-prosthetic-arm
[8] J. Gardner. (2012, December 18). Paralyzed Mom Controls Robotic Arm Using Her Thoughts. ABC News Medical Unit [Online]. Available: http://abcnews.go.com/blogs/health/2012/12/18/paralyzed-mom-controls-robotic-arm-using-her-thoughts/
[9] C. Wickham. (2012, December 17). Mind-controlled robotic arm has skill and speed of human limb. Reuters [Online]. Available: http://www.reuters.com/article/2012/12/17/us-science-prosthetics-mindcontrol-idUSBRE8BG01H20121217
[10] A. L. Shimpi. (2008, March 5). CeBIT 2008 Day 2 – OCZ's Mind Controllable Mouse, ASUS' HDMI Sound Card, Intel and more. AnandTech [Online]. Available: http://www.anandtech.com/show/2469/2
[11] S. Anthony. (2012, August 17). Hackers backdoor the human brain, successfully extract sensitive data [Online]. Available: http://www.extremetech.com/extreme/134682-hackers-backdoor-the-human-brain-successfully-extract-sensitive-data
[12] Industry Canada. Multimedia Services Station. (2012, August 31). Wireless Communications and Health – An Overview [Online]. Available: http://www.ic.gc.ca/eic/site/smt-gst.nsf/eng/sf09583.html