
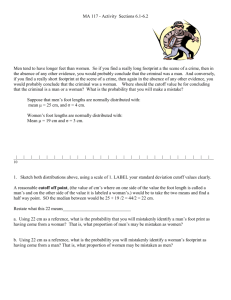

Regression Discontinuity

1. Basic RD equation:

   $y_i = \alpha + \tau D_i + \beta x_i + e_i$

   where x is the continuous assignment variable that determines the treatment and D is the binary treatment variable that "turns on" when x crosses some threshold c. RD analysis essentially uses individuals with x just below the cutoff c as a control group for those with x just above the cutoff.

2. The two main assumptions are:
   - Imprecise control over the treatment. This leads to a design where variation just around the threshold is as good as random. Individuals may have some influence over x and therefore D, but their control cannot be deterministic.
   - Continuity of the functions around the cutoff c. If there is a discontinuity in y around the cutoff that is due to some factor other than the treatment, the estimate will inappropriately attribute that discontinuity to the effect of the treatment.

3. RD analysis usually relies on a number of indirect methods to lend support to the above assumptions:
   - Document in detail the way the treatment is assigned and any potential for manipulation or precise control.
   - Examine the distribution of x for "heaping" or bunching just above or below the cutoff.
   - Examine the distribution of other covariates for discontinuities at the cutoff.
   - Report results excluding and including other covariates. Including covariates should not change the magnitude of the estimate of τ.
   - Report results looking at estimates with differences in y as well as levels; again, the magnitude of the estimate of τ should not be affected.
   - Estimate with a range of polynomial functions in x and D or with nonparametric estimates for windows around c. See below.

4. RD analysis is essentially looking at differences in average y around the cutoff c. If the effect of x on y is non-linear, misspecifying the functional form will lead to a biased estimate of the effect of the treatment. Researchers therefore use flexible functions to estimate the effect of D.
   This will produce more conservative estimates of τ, as differences in average y are more likely to be absorbed by the functional form (for example, an inflection point is less likely to be attributed to a break in the function).
   - One approach is to use a flexible polynomial function of x and D (with interaction terms included in that function). Researchers typically report results using several different functions to show that results are robust to higher-order terms.
   - A second method is to use nonparametric approaches that essentially produce smoothed estimates of the function on either side of the cutoff. Researchers typically report results using various sizes of the window (or bin) around the cutoff and different smoothing (kernel) estimators.

We have not discussed in this paper the issue of "fuzzy" regression discontinuity models, where the cutoff is not sharp, but you should know that there are additional methods to deal with that treatment design.

Blundell and Dias

"That's a general issue in causal inference: do you want a biased, assumption-laden estimate of the actual quantity of interest, or a crisp randomized estimate of something that's vaguely related that you happen to have an experiment (or natural experiment) on?" (Andrew Gelman)

Six methods reviewed (weaknesses also listed here)

1) Social experiment methods
   - closest to a clinical trial; "theory free"
   - experimental conditions not always met
     o those randomized in may decline, so this usually measures an "intent to treat" effect rather than the actual treatment effect
     o those randomized out may be discouraged and change behavior

2) Natural experiment methods
   - exploit randomization created by a naturally occurring event
   - difference-in-differences (DD) methods
   - measure the "average effect of treatment on the treated"
   - critical assumptions are
     o common time effect across groups
     o no systematic composition changes within groups

3) Discontinuity design (also called regression discontinuity)
   - exploits natural discontinuities in the rules
   - measures a local average treatment effect, but a different one than the IV estimator
   - relies heavily on the assumption of continuity

4) Matching methods
   - goal is to reproduce the treatment group among the non-treated
   - match on observable characteristics
   - need a clear understanding of the determinants of the assignment rule
   - data intensive

5) IV methods
   - rely on explicit exclusion restrictions: something excluded from the outcome equation but which determines the assignment rule
   - if treatment has heterogeneous effects, will identify the average treatment effect only under strong and implausible assumptions
   - do identify a local treatment effect, although again not necessarily the same local effect as the RD approach

6) Control function
   - closest to a structural approach; directly models the assignment rule to control for selection, i.e., directly characterizes the problem for individuals deciding on program participation
   - uses a full specification of the assignment rule that contains an instrument to derive a control function
   - the control function is included in the outcome equation
   - misspecification of the control function (behavioral relationship) will lead to biased estimates
   - this type of model is closely related to Heckman's selectivity estimator we discussed earlier

Blundell and Dias describe several different estimators of the effect of a "treatment": the population average treatment effect (ATE), the average treatment effect on the treated (ATT) and the related "intent to treat" effect, the local average treatment effect (LATE), and the marginal treatment effect (MTE). Explain what they mean by each of these. Which estimators identify which treatment effects?

All of these are related to the idea that policy may have heterogeneous effects (see pg 569).

Potential outcomes:

   $y_i^1 = \beta + \alpha_i + u_i$   for the treatment scenario
   $y_i^0 = \beta + u_i$   for the control scenario

$\alpha_i$ represents the treatment effect for individual i. Note that this is heterogeneous: the treatment effect varies across individuals.

The above is unobserved: both potential outcomes are never seen for the same individual.
What we do observe is

   $y_i = d_i y_i^1 + (1 - d_i) y_i^0$
   $y_i = \beta + \alpha_i d_i + u_i$

Note that this is very general.

How is d (treatment status) assigned? z are observable characteristics that determine treatment; v are unobservable characteristics that determine treatment.

ATE: average treatment effect

   $ATE = E(\alpha_i)$

   - Average effect in the population if the entire population were treated.
   - Assignment from the population is random.

ATT: average treatment effect on the treated

   - Assignment is based on $d_i^* = g(z_i, v_i)$, with $d_i = 1$ when $d_i^* \ge 0$. z are observed characteristics, v are unobserved.

   $ATT = E(\alpha_i \mid d_i = 1) = E(\alpha_i \mid g(z_i, v_i) \ge 0)$

   - Note that individuals in an RCT don't always participate even if assigned to the treatment group. Because those who don't sign up for treatment are different from those who do, the analysis includes everyone assigned to treatment and measures the treatment effect in that entire assigned group (the "intent to treat" effect).
   - Note: individuals in the control group may change behavior because they were randomized out.

LATE: local average treatment effect

   - Here we allow for the fact that the average treatment effect may vary across the distribution of z. Treatment may have heterogeneous effects depending on the value of z.
   - Local averages look at the effect on people who are non-participants under z* and participants under z**.
   - "Local" average means that the treatment effect is specific to the group in the "local" area of the variation.
     o For example, an IV estimate measures the effect of treatment on the outcome for individuals whose treatment status is sensitive to changes in the instrument (e.g., if the treatment is college attendance, the outcome is wages, and the instrument is distance to college, the IV estimate measures the effect of college attendance on wages for individuals whose education decisions are sensitive to how far they live from college).
     o In an RD design, the local effect is the effect on individuals whose assignment variable is close to the cutoff.

MTE: marginal treatment effect

   - The effect of treatment on individuals at the margin of participation, i.e., those just indifferent about participating at a given value of the unobservables.
   - Again, this will be different from the average treatment effect if treatment effects are not constant across the population.
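
As a rough illustration of why these treatment effects differ when effects are heterogeneous, the sketch below simulates a sharp RD design using the observed-outcome equation $y_i = \beta + \alpha_i d_i + u_i$ from these notes. It compares the ATE, the ATT, a naive treated-versus-untreated difference in means, and a local linear RD estimate with an interaction term. Everything numeric here is an assumption made for illustration and does not come from the notes or from Blundell and Dias: the cutoff c = 0.5, the form $\alpha_i = 1 + 2x_i$, the 0.8 slope of $u_i$ in x, the sample size, and the bandwidth h = 0.1.

```python
import numpy as np

rng = np.random.default_rng(0)

# --- Simulated data (every value below is an illustrative assumption) ---
n = 200_000
c = 0.5                                  # hypothetical cutoff
x = rng.uniform(-1.0, 1.0, n)            # continuous assignment variable
d = (x >= c).astype(float)               # sharp design: D turns on when x >= c

beta = 0.5
alpha = 1.0 + 2.0 * x                    # heterogeneous individual effect alpha_i
# Other influences on y: smooth in x, so y jumps at c only through treatment
u = 0.8 * x + rng.normal(0.0, 0.5, n)

# Observed outcome: y_i = beta + alpha_i * d_i + u_i
y = beta + alpha * d + u

# --- Estimands (computable only because alpha_i is known in a simulation) ---
ate = alpha.mean()                       # E(alpha_i)
att = alpha[d == 1].mean()               # E(alpha_i | d_i = 1)

# --- Naive comparison of treated and untreated means ---
naive = y[d == 1].mean() - y[d == 0].mean()

# --- Local linear RD inside a window of half-width h around the cutoff ---
h = 0.1                                  # hypothetical bandwidth
w = np.abs(x - c) < h                    # keep observations near the cutoff
xc = x[w] - c                            # center x at the cutoff
# Regressors: constant, D, (x - c), and the interaction D * (x - c)
X = np.column_stack([np.ones(w.sum()), d[w], xc, d[w] * xc])
coef = np.linalg.lstsq(X, y[w], rcond=None)[0]
tau_rd = coef[1]                         # estimated jump in y at x = c

print(f"ATE                  = {ate:.3f}")
print(f"ATT                  = {att:.3f}")
print(f"naive mean gap       = {naive:.3f}")
print(f"RD estimate at c     = {tau_rd:.3f}")
```

Under these assumed values the population ATE is 1, the ATT is 2.5, and the true effect at the cutoff is 1 + 2c = 2, so the RD estimate targets a local effect that matches neither average. The naive difference in means is larger still because it mixes the treatment effect with the direct effect of x on y.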