Minutes 02 July 2013

RUGIT meeting, Tuesday 2nd July 2013

Imperial College

1. Introduction

Present at the meeting:

Ian Tilsed, Exeter

Simon Marsden, Edinburgh

Stuart Lee, Oxford (Secretary)

Anne Trefethen, Oxford

Carolyn Brown, Durham

John Shemilt, Imperial

Ian Lewis, Cambridge

Sean Duffy, Birmingham (Chair)

Tony Taylor, Imperial

Nick Leake, KCL

Alison Clarke, Nottingham

John Gormley, QUB

Brian Gilmour, Edinburgh

Dave Wolfendale, Newcastle

Paul Hopkins, Manchester

Tim Philips, Bristol

Andrew Male, York

Pete Hancock, Southampton

Apologies: Eileen Brandreth, Nick Dyes, Chris Day, John Cartwright, Lynne Tucker (Ian Tilsed), Steve Williams (Dave Wolfendale), Rhys Davies, Chris Sexton, Mike Roberts, Sandie Macdonald, Heidi Fraser-Kraus (Andrew Male).

2. Minutes and matters arising

The minutes of the last meeting were accepted without corrections.

Open Access Research SIG – the Chair presented the names of people interested in joining, broken down into IT, Research Support, and Library. The Chair will set up a meeting.

RUGIT Security sub-group – no update received, actions will be chased up for next meeting.

Speakers – Heidi Fraser-Kraus had suggested names of speakers.

Oracle day – will be the afternoon prior to the next meeting. A very robust discussion with them on licensing is wanted as part of this. Manchester, Oxford, KCL all agreed. A point was raised about the academic licences and the desire for a licence migration option to a processor-based licence.

Action: Chair to include licensing and virtualisation on the agenda for the Oracle meeting. Also to shape the roadmap – discussions re databases, and dealing with Oracle as a single company/partner (“what do they offer us?” – the benefits for ERP, Java, Sun, Peoplesoft, database, etc. collectively).

3. RUGIT Study Tour

Exec discussed this and felt there was more value in concentrating on Europe – SW/CH/DE/NL were suggested. There is possibly some interest in us providing a reciprocal visit here also. Three venues may be the limit in a week, to allow rest days. Is this something that could lead to longer-term collaboration? EUNIS was also raised, but it was felt that this may not be that useful compared with focussed visits.

A show of hands was called for to assess interest, which revealed that about 10 HEIs would be interested.

Action: Set up a small task force to contact key institutions in countries to set this up. Alison and Mike C offered to work with Sean.

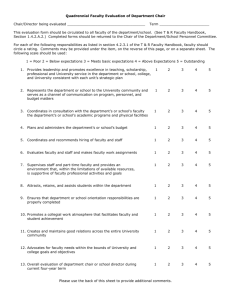

4. Benchmarking

Unfortunately Warwick and Sheffield were unable to attend, so the presentation was solely from UCL to begin with.

Pilot benchmarking with ImprovIT – Warwick, UCL, Sheffield.

ImprovIT is made up of ex-Gartner staff (5-6 people); the pilot was aimed at testing their approach. They suggested their approach could adjust for different service levels. Sheffield have also used Janet X-Ray.

Services selected – servers/storage (including HPC), data centre, desktop and email, student information (SITS).

Process – ImprovIT needed a lot of data, which was provided by University staff (quite cumbersome). ImprovIT then came in to validate the data and present back. If the data is readily available this should take 10 weeks, but in UCL’s case it took more like 20 weeks. Data requested – number of users, devices, email accounts, hardware, service levels, QoS, service scope, financial information, and so on. This is then used to generate a peer group (6 organisations: a mixture of HE and other sectors).

Results (for UCL):

* Desktop costs slightly higher than peers, but how do you compare (e.g.) Citrix sessions with physical desktops? What do you do if you have different desktop environments (e.g. one for admin, one for students)? This illustrated the issues of comparison.

* Email quite a bit lower than peers as UCL uses Live@edu; students use Office365 and costs will come down.

* Servers a bit lower which was surprising, expecting it to be higher than peers. May have been due to coping with more complexity.

* Datacentre – marginally higher as expected.

* Student information – a fair bit higher as expected.

Reflections:

* Needs a considerable amount of data already (which will also provide good benchmarking)

* Needs good service descriptions

* Needs measurement points to measure improvement

* Engaging in the activity helped staff begin to consider improvements

* Comparison with industry was valuable – otherwise just keep to HE which can be quite closed

* Led to work on service levels

* Were considered complex

* Think it would be useful to combine with Janet financial x-ray, and suggest that should be done first – Sheffield, who have done both, felt there was synergy here

* Valuable process

* Focussed on central services but recognised that ideally you’d look across the institution and at local provision.

Looking at partaking in Tribal benchmarks. Would ImprovIT be interested in doing this as a collective deal for all of us? About 8 or 9 HEIs would be interested.

Action: Chair to get written feedback from ImprovIT on their perspective.

Action: Mike Cope to share the data capture sheet so institutions could prepare in advance and start to collate this information.

Pramod Philips, JANET Financial X-Ray

This all emerged from the JANET brokerage service. Key points:

* this is a diagnostic tool, it’s not an audit

* use standardised approach to allow benchmarking

* it’s a collaborative exercise

* onsite assistance is provided

They look at pay, non-pay, and overheads. They developed a taxonomy after a lot of consultation (about 43 items in total). They also look at platforms that enable IT services. They amortise costs and look at cost per beneficiary.

Costs are then provided and benchmarked against peers, with a spectrum showing where you appear (using cost per beneficiary).
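The minutes do not record how the X-Ray amortises costs or counts beneficiaries. As a rough illustration only, assuming straight-line amortisation and a simple sum of pay, non-pay, and overheads, the cost-per-beneficiary figure might be worked out along these lines. The function names and all figures are hypothetical and are not taken from JANET or any institution.

```python
def amortised_annual_cost(capital_cost: float, useful_life_years: int) -> float:
    """Straight-line amortisation (an assumption): spread a capital cost evenly over its life."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return capital_cost / useful_life_years


def cost_per_beneficiary(pay: float, non_pay: float, overheads: float,
                         beneficiaries: int) -> float:
    """Total annual cost of a service divided by the number of people it serves."""
    if beneficiaries <= 0:
        raise ValueError("beneficiaries must be positive")
    return (pay + non_pay + overheads) / beneficiaries


# Hypothetical figures for a single service, for illustration only.
hardware_per_year = amortised_annual_cost(capital_cost=500_000, useful_life_years=5)
service_cost = cost_per_beneficiary(pay=120_000, non_pay=hardware_per_year,
                                    overheads=30_000, beneficiaries=25_000)
print(f"Cost per beneficiary: {service_cost:.2f}")
```

A figure computed this way for each service is what would then be placed on the spectrum against the peer group.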

Process includes a non-disclosure agreement, initial data collection, an on-campus visit of 4-8 days, a presentation, a (possible) follow-up visit, and a final report. Costs are derived by activities, for pay and non-pay.

Have developed a data centre investment appraisal model, available as an Excel model.

How are peer groups chosen? Ideally they would like to give a focus peer group, e.g. research intensive Universities to benchmark against.

There is no comparative matrix though around service level.

Action: Pramod to send appraisal model for circulation around RUGIT, a sample pro-forma report, and the data taxonomy for services.

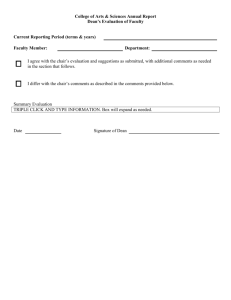

5. RUGIT Principles

The Chair opened the discussion about RUGIT principles. The group considered its mission, aims, and objectives (all detailed on the web site).

Questions that arise from this though:

* Strong at ascertaining views but weak at publicising these

* Do not promote research and development

* Strong in exchanging ideas

* Modest at linking with other bodies (but mainly through individuals)

* Weak at lobbying government

* Weak on promoting process change

Russell Group has decided that at top level IT is not a sub-group so we are in effect independent from the main Russell Group body.

We have UCISA and JANET on our mailing lists and only invite them to specific items.

Link with RLUK not major.

Are we missing a key area – notably educational technology?

Some discussion about whether we should publicise or lobby, and what the difference was. Moreover, at what level – suppliers, JISC, Government etc.? Could bring JISC/JANET/UCISA/RLUK to meetings on a rolling basis.

Some areas we clearly would have a shared interest in – e.g. HPC, and Russell Group institutions paying up to 50% of the JANET charges to HEIs.

Action: Chair to explore reconnecting with JISC/JANET, UCISA and RLUK on a regular basis.

Action: Chair to discuss this issue with Russell Group centre.

Action: Change aims to lobbying stakeholders and collectively engaging with suppliers.

Action: Change aim to highlighting key areas of research, process change, and developments needed.

6. Business Intelligence

Tony Taylor (Imperial) opened this. Imperial have an Enterprise Data Warehouse, based on an Oracle stack. Successes include HESA data, a procurement BI app, research grants pre/post award, REF preparation, NSS dashboards, OFFA, etc. The approach is based on training super users and giving them access to data sets. They are noticing that new systems now also have embedded analytics (e.g. service desk systems).

Simon Marsden then discussed experience at Edinburgh. This grew, but not with ideal governance structures surrounding it. It began with Information Services, but soon they took on the role of brokerage, and it needs strong leadership to make sure other units are part of this.

A clear message arising from the discussion is that there needs to be overall governance and stewardship, otherwise there is no force for change. It can be led from finance, planning, or IT.

7. Top 3 Issues

Areas we could discuss:

Learning technologies

Open standards and education (CETIS)

8. AOB

JISC want to link to our web site and have requested an official logo.

Consideration was given to approaches from vendors to come and speak to the group. Interest was expressed in hearing from (i) a virtualised data centre vendor, (ii) a systems integrator, (iii) a vendor about ‘software defined networking’.

An away day was suggested for the week commencing 24 February 2014.

John Shemilt gave an update on the JISC co-design (see www.jisc.ac.uk and John’s blog post). Seven schemes have been funded – the social and political barriers re IDM, a national monograph survey, student innovation teams, the digital student, open mirror, spotlight on the digital, and extending KB+. John alerted the group to the list of student award winners.

The committee wanted to express its thanks to Brian Gilmour for all his hard work for RUGIT and wished him every success for the future.