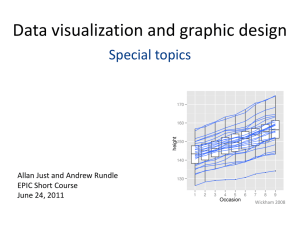

Course Manual (doc) - Babraham Bioinformatics
