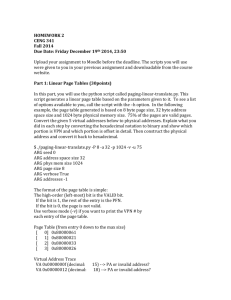

HOMEWORK 2
CENG 341 Fall 2013
Due Date: Monday, December 23rd 2013, 23:00

Part 1: Multi-level Page Tables (30 points)

Linear Page Table

VPN  Valid  PFN
  0    0     -
  1    1    54
  2    1    12
  3    0     -
  4    0     -
  5    0     -
  6    0     -
  7    0     -
  8    1    14
  9    0     -
 10    0     -
 11    0     -
 12    1    10
 13    1    13
 14    0     -
 15    0     -

The virtual memory system uses an address space with 16 pages, and each page is 16 bytes. The linear page table for this address space is given above. Assuming each PTE and each PDE is 8 bytes, convert the linear page table into a multi-level page table. Draw the physical memory layout of the page directory and of the pages of the page table, writing each entry as a (Valid bit, PFN) pair. The PDBR holds frame number 100, and the page directory and the pages of the page table are placed in contiguous physical memory. Explain what you did in each step to construct the multi-level page table.

Part 2: Page Replacement Policies (30 points)

In this question, you will use the simulator paging-policy.py to apply different page replacement policies: OPT, LRU, and FIFO. Use the options as follows:
- For a physical memory that can hold only n pages, set n with --cachesize.
- Give the page reference stream with --addresses.
- Choose the policy with --policy.
- Add -c to make the simulator compute and print the results.

Here is an example of how to use the simulator:

    prompt> ./paging-policy.py --addresses=0,1,2,0,1,3,0,3,1,2,1 --policy=LRU --cachesize=3 -c
    ARG addresses 0,1,2,0,1,3,0,3,1,2,1
    ARG addressfile
    ARG numaddrs 10
    ARG policy LRU
    ARG clockbits 2
    ARG cachesize 3
    ARG maxpage 10
    ARG seed 0
    ARG notrace False

    Solving...
    Access: 0  MISS LRU ->          [0] <- MRU Replaced:- [Hits:0 Misses:1]
    Access: 1  MISS LRU ->       [0, 1] <- MRU Replaced:- [Hits:0 Misses:2]
    Access: 2  MISS LRU ->    [0, 1, 2] <- MRU Replaced:- [Hits:0 Misses:3]
    Access: 0  HIT  LRU ->    [1, 2, 0] <- MRU Replaced:- [Hits:1 Misses:3]
    Access: 1  HIT  LRU ->    [2, 0, 1] <- MRU Replaced:- [Hits:2 Misses:3]
    Access: 3  MISS LRU ->    [0, 1, 3] <- MRU Replaced:2 [Hits:2 Misses:4]
    Access: 0  HIT  LRU ->    [1, 3, 0] <- MRU Replaced:- [Hits:3 Misses:4]
    Access: 3  HIT  LRU ->    [1, 0, 3] <- MRU Replaced:- [Hits:4 Misses:4]
    Access: 1  HIT  LRU ->    [0, 3, 1] <- MRU Replaced:- [Hits:5 Misses:4]
    Access: 2  MISS LRU ->    [3, 1, 2] <- MRU Replaced:0 [Hits:5 Misses:5]
    Access: 1  HIT  LRU ->    [3, 2, 1] <- MRU Replaced:- [Hits:6 Misses:5]

    FINALSTATS hits 6   misses 5   hitrate 54.55

a) For a cache of size 5, generate a worst-case address reference stream for each of the following policies: FIFO and LRU. (A worst-case reference stream causes the largest possible number of misses.) For these worst-case streams, how much larger must the cache be to improve performance dramatically and approach OPT?

b) Run the simulator for the following address references: 0, 1, 3, 4, 5, 1, 3, 2, 3, 6, 7, 2, 4, 5, 4, 1, 2, 3, 0, 2. Use OPT, LRU, and FIFO separately with a cache size of 3. Compare the FIFO and LRU algorithms to OPT. Why does LRU perform worse than FIFO on this stream?

Part 3: Concurrency (40 points)

Write a program that creates 10 threads and assigns them IDs in the range [0..9]. Each ID is passed to its thread through the void * parameter of the thread's start function. Even-ID threads must execute (print their ID on the screen) in order among themselves, [0, 2, 4, 6, 8], and odd-ID threads likewise, [1, 3, 5, 7, 9]. The even and odd threads, however, may run concurrently with each other.

Example: Thread 0 executes and wakes Thread 2. Thread 2 waits for Thread 0, executes, and wakes Thread 4. Thread 8 waits for Thread 6, executes, and finishes. The same applies to the odd-ID threads.

Example execution order: 0, 2, 1, 3, 4, 5, 7, 6, 8, 9
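For Part 1, a short script can check the arithmetic behind the conversion. It is a minimal sketch under stated assumptions, not the expected answer. The assumptions are:
- The table is split into two levels.
- The figure's PFNs belong, in order, to the valid entries (VPNs 1, 2, 8, 12 and 13).
- The directory starts at frame 100, and only the page-table pages that contain a valid PTE are allocated, packed directly after the directory.

With 16-byte pages and 8-byte entries, each page holds 2 entries. The 16 PTEs therefore fill 8 page-table pages, and the directory needs 8 PDEs (64 bytes, that is, 4 contiguous frames). The helper names `build_two_level` and `translate` are hypothetical.

```python
# Parameters taken from Part 1 of the assignment.
NUM_PAGES = 16    # pages in the virtual address space
PAGE_SIZE = 16    # bytes per page
ENTRY_SIZE = 8    # bytes per PTE or PDE
PDBR = 100        # frame number where the page directory starts

# Assumed reading of the figure: (valid, PFN) per VPN, with the PFNs
# assigned in order to the valid entries; PFN is None when invalid.
LINEAR = [(0, None), (1, 54), (1, 12), (0, None), (0, None), (0, None),
          (0, None), (0, None), (1, 14), (0, None), (0, None), (0, None),
          (1, 10), (1, 13), (0, None), (0, None)]


def build_two_level(linear, pdbr=PDBR):
    """Hypothetical helper: split the linear table into page-sized chunks
    and index the chunks that hold a valid PTE with a page directory."""
    per_page = PAGE_SIZE // ENTRY_SIZE                    # entries per page
    chunks = [linear[i:i + per_page] for i in range(0, len(linear), per_page)]
    pd_bytes = len(chunks) * ENTRY_SIZE                   # one PDE per chunk
    pd_frames = (pd_bytes + PAGE_SIZE - 1) // PAGE_SIZE   # frames the directory spans
    directory, pt_pages = [], {}
    next_frame = pdbr + pd_frames                         # first frame after the directory
    for chunk in chunks:
        if any(valid for valid, _ in chunk):
            directory.append((1, next_frame))             # allocate a page-table page
            pt_pages[next_frame] = chunk
            next_frame += 1
        else:
            directory.append((0, None))                   # no page-table page needed
    return per_page, pd_frames, directory, pt_pages


def translate(vpn, per_page, directory, pt_pages):
    """Two-level lookup: the high part of the VPN indexes the directory,
    the low part indexes the PTE inside the page-table page."""
    pd_index, pt_index = divmod(vpn, per_page)
    pde_valid, pt_frame = directory[pd_index]
    if not pde_valid:
        return None
    pte_valid, pfn = pt_pages[pt_frame][pt_index]
    return pfn if pte_valid else None


per_page, pd_frames, directory, pt_pages = build_two_level(LINEAR)
print("entries per page:", per_page, "| directory frames:", pd_frames)
for index, pde in enumerate(directory):
    print("PDE", index, "(valid, PFN) =", pde)
for frame, ptes in pt_pages.items():
    print("frame", frame, "PTEs:", ptes)
```

Calling `translate` for every VPN is a quick way to confirm that the directory-based lookup returns the same PFN as the linear table. If the directory itself must fit in a single page, the same splitting step has to be applied again, which adds further levels.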
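For Part 2, a small re-implementation of the three policies can help when reasoning about the numbers the simulator reports. This is a hypothetical sketch: the function `simulate` is ours, not part of paging-policy.py, and it counts only hits and misses. The three policies behave as follows:
- FIFO evicts the page that arrived first.
- LRU differs from FIFO only in moving a page to the most-recently-used end on every hit.
- OPT evicts the resident page whose next reference lies furthest in the future, which requires knowing the whole stream in advance.

OPT ties (pages never referenced again) are broken arbitrarily, which does not change the miss count.

```python
def simulate(refs, cache_size, policy):
    """Hypothetical helper: return (hits, misses) for FIFO, LRU or OPT.

    A simplified stand-in for paging-policy.py, not its actual code.
    """
    if policy not in ("FIFO", "LRU", "OPT"):
        raise ValueError("unknown policy: " + policy)
    cache = []  # FIFO: arrival order; LRU: least- to most-recently used
    hits = misses = 0
    for i, page in enumerate(refs):
        if page in cache:
            hits += 1
            if policy == "LRU":
                cache.remove(page)  # a hit makes the page most recently used
                cache.append(page)
            continue
        misses += 1
        if len(cache) == cache_size:
            if policy == "OPT":
                future = refs[i + 1:]
                # Evict the page whose next use is furthest away (never = furthest).
                victim = max(cache, key=lambda p: future.index(p) if p in future else len(future))
                cache.remove(victim)
            else:
                cache.pop(0)  # FIFO: first to arrive; LRU: least recently used
        cache.append(page)
    return hits, misses


# The LRU example from the assignment, with a cache of 3 pages.
print(simulate([0, 1, 2, 0, 1, 3, 0, 3, 1, 2, 1], 3, "LRU"))  # (6, 5)
```

The printed pair matches the simulator's FINALSTATS for the same run. Changing the policy string or the cache size is enough to explore questions a) and b).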
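For Part 3, the ordering rule amounts to two independent hand-off chains: 0, 2, 4, 6, 8 and 1, 3, 5, 7, 9. The reference to a void * start-function parameter suggests POSIX threads in C. To keep the new examples in one language, the sketch below uses Python's `threading` module only to show one possible synchronization pattern; the ID is passed through `args=(i,)` in place of the void * argument. Each chain has its own condition variable and a counter holding the ID whose turn it is, so the even and odd chains never wait on each other. The names `conds`, `turn`, `order` and `worker` are hypothetical.

```python
import threading

NUM_THREADS = 10
conds = [threading.Condition(), threading.Condition()]  # one per chain: even, odd
turn = [0, 1]        # next ID allowed to run in each chain
order = []           # records the print order so it can be checked afterwards


def worker(tid):
    """Wait for the predecessor in this thread's chain, print, hand over the turn."""
    chain = tid % 2
    cond = conds[chain]
    with cond:
        cond.wait_for(lambda: turn[chain] == tid)  # wait until tid - 2 has run
        print(tid)
        order.append(tid)
        turn[chain] += 2                           # the turn passes to tid + 2
        cond.notify_all()                          # wake the chain; only tid + 2 proceeds


threads = [threading.Thread(target=worker, args=(i,)) for i in range(NUM_THREADS)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

`notify_all` is used rather than `notify` because all threads of a chain wait on the same condition variable. Waking a single, arbitrary waiter could wake the wrong thread and leave the real successor asleep. In C, the counterpart is `pthread_cond_broadcast` rather than `pthread_cond_signal`.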