Channel Coding

advertisement

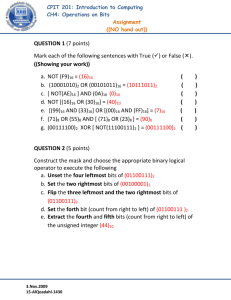

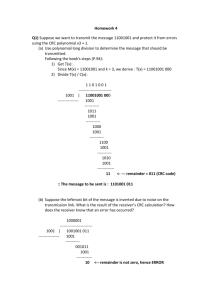

Channel Coding (Error Correcting Codes) Motivation This component converts bit stream into stream of messages, or source symbols. As we already saw, the channel may cause the receiver to interpret wrong the transmitted source symbols. This component offers more protection for our information, which represented by the bit stream, by adding redundancy to the bit stream and defining source symbols. This additional redundancy would serve the receiver for correct interpretations when the channel garbles some of our bits. The idea to protect our data in a digital manner was quite revolutionary and enabled the development of many advance communication systems such as cellular modems. We assume that the bit stream has a uniform independent identically distribution (iid). We stress out that this assumption is highly acceptable since well designed source encoders produce uniform iid bit stream. Architecture The channel encoder and decoder contain the following components: We'll now explain each component very briefly. For more information, please consult the following link: http://www2.rad.com/networks/2002/errors. Channel Code and Decode The component's design approach is called FEC (Forward Error Correction). This approach allows us to detect and correct the bit stream. It is important to bear in mind that the correction and detection of errors are not absolute but rather statistical. Thus our goal (as usual) is to minimize the BER. In this method, K original bits, which are also calledinformational bits, are replaced with new N>K bits called code bits. The difference N-K represents the redundancy 1 that has been added to the informational bits. The manner in which we produce the code bits is called channel code or ECC (Error Correcting Code). There are two general schemes for channel coding: Linear Block Codes and (Linear) Convolution Codes. More sophisticated scheme that unifies both the channel encoder and parts of the modulator, which called TCM (Trellis Code Modulation), will be presented briefly later on. Furthermore, soft decision technique will be also surveyed. Block codes simply take a block of N informational bits and convert them (using matrix multiplication, cause the code is linear) K code bits, meaning that we now have only 2kpossible combinations of code bits out of 2N. Recall that N>K. If the receiver gets an illegal code bits combination, she would know that there been an error. In many cases she might even decide what the right code bits combination was simply by choosing the code bits combination that was most likely sent. Fortunately, this combination can be found using MHD(Minimum Hamming Distance) rule. Hamming distance is simply the number of different bits between two combinations of bits. The decoding is done by simply applying MHD rule upon all possible 2K code bits combinations. Example: Let us explore Repetition code with: k=2, n=6. We take code where each source word repeated 3 times. The source words and the corresponding code words are: m0 = [0 0], c0 = [0 0 0 0 0 0], m1 = [0 1], c1 = [0 1 0 1 0 1], m2 = [1 0], c2 = [1 0 1 0 1 0], m3 = [1 1], c3 = [1 1 1 1 1 1]. Assume that the sender want to send m = [1 0]. If the sender transmit is without coding c = m = [1 0]. Assume that the channel will cause the second bit to be flipped and the receiver will get r = [1 1]. The receiver got malicious message and it can neither repair nor detect an error since r = [1 1] is also a valid message. Assume the sender use coding and it transmit c = [1 0 1 0 1 0]. The channel isn't perfect and it caused the second bit to be flipped. The receiver will get r = [1 1 1 0 1 0]. The receiver got malicious message, but now it can detect it. The only valid messages are r0 = [0 0 0 0 0 0], r1 = [0 1 0 1 0 1], r2 = [1 0 1 0 1 0], r3 = [1 1 1 1 1 1]. 2 The receiver realized that it got an invalid message. But what is the correct one? In order to choose the best option for received message who should compare it to all valid messages and to choose the one with the smallest MHD: r0 = [0 0 0 0 0 0] has 4 differences with r (marked), r1 = [0 1 0 1 0 1] has 5 differences with r, r2 = [1 0 1 0 1 0] has 1 differences with r, r3 = [1 1 1 1 1 1] has 2 differences with r. Therefore the best choice for r is r2 that corresponds to m2 = [1 0] and indeed it is the message that was sent. Let us leave the example and proceed to linear convolution codes. Unlike block codes which use only the current group of K bits, linear convolution codes produce code bits whilst using previous bits, as well as the current, and some linear binary logic, which is simply a XOR operation (see the figure below). Linear convolution codes with no feedback (which is usually the case) and S memory units can be presented as an FSM (Finite State Machine) with 2S states and 2K symmetric transitions (see the figure below). 3 Trellis diagram is another way to present this kind of convolution codes. From now on, we refer to the trellis diagram simply as "the trellis". We end the transmission by emptying the memory units. In other words, the transmitter transmits S predetermined code bits after each session of L bits, in order to close the trellis diagram. This method is called Trellis Termination and is proved to be useful when L>>S, otherwise the session is too short (see the figure below). Aforesaid, there are 2K possible code bits combinations and hence the straightforward approach for decoding by using MHD rule would have an exponential time complexity. We rather use other decoding process called Viterbi Algorithm. Heed that not every transition is possible when considering the code's FSM, Moreover; every transition produces N code bits combinations. Let us define a transition metric to be the difference between the received code bits and the transition's code bits. A node metric is the minimum of the total of all the previous node metrics and the transition metrics that lead to the current node. Each node points at its previous node that yields its node metric. After the termination, the trellis will be closed for sure. We can trace the path that leads to the final node and yields the minimum accumulated metric. The informational bits which create this path are the decoded informational bits because they have the highest chance for being transmitted. Let us observe an Example of a MHD Viterbi decoding process in the following pages: 4 5 6 7 The last seven figures demonstrate the Viterbi Algorithm. The price for using Viterbi algorithm is that we need memory units for each node metric and memory for each node's previous node. More painful price that we obliged to pay is that the decoding process is done only after the termination of the trellis. In other word, we need to wait for the entire session to end before we can decode the code bits. This fact compels an inherent delay in the whole receiving process. Mapper and Mapper-1 This component's purpose is to label groups of code bits. The label is called source symbol or message and the total number of messages has to be equal to the constellation's size. Our strategy when labeling the groups of code bits will be to map groups which differ by less bits to be closer to each other on the constellation. In this case, if the receiver errs and decides that the transmitted message was a neighbor to the real message (which is usually the case, as we shown previously), then there will be less corrupted bits. Usually when dealing with finite dimensional constellations (such as PAM, QAM or PSK) our choice would be multidimensional Gray coding labeling method. Trellis Coded Modulation (TCM) This family of communication systems uses both convolution coding method with finite dimensional constellations. There are certain rules of the thumb which produce better TCM systems. The first set of rules advocates us which convolution codes are best suitable for this method, these are called Ungerback rules. The second rule advocates us how to design the mapper, this is called MSP (Mapping Set by Partitioning). Hard Decision versus Soft Decision In the previous discussions we assumed that the input for the channel decoder was a stream of estimated source symbols that was emitted by the demodulator. In other words, the input for the channel decoder was already been processed by the demodulator in order to estimate the originate source symbols that were transmitted. More specifically, thedecision element has already processed the information. This process is called hard decision since it's divided into two parts. First the decision element processes its information to produce the estimated code bits. Afterwards, the channel decoder decodes the informational bits from the estimated code bits. The problem with this approach is that the decision element eliminates some information for the channel encoder. This lost information could be vital. Though a hard decision receiver is simple for implementation, a better approach will be to unify the decision element and the channel decoder altogether in order to make the optimal decoding. This process is called soft decision because the input of the unified decision element is soft information, information that has not processed yet. The soft decision is actually MED rule, in fact, the optimal Maximum A-posteriori Probability (MAP) rule degenerates to the MED rule in case of uniform iid distribution of code bits and AWGN channel. We can even combine MED rule with the Viterbi algorithm, in fact, the optimal Maximum A-posteriori Probability (MAP) rule degenerates to Viterbi algorithm's output (using MED rule) in the case of uniform iid distribution of code bits and AWGN channel. 8 Code Rate The ratio K/N is the code rate (heed that the ratio is inversed!) and it measures how informational bits are needed in order to represent on code bit. Smaller code rate means that there are more code bits than informational ones and vice versa. Another way to view this ratio is to think that in order to preserve the same data rate N/K code bits have to be transmitted for every one informational bit, since code bits carry less information than the informational bits (due to the redundancy). A special characteristic of channel encoding is that we actually impair the data rate of our communication system in order to improve our BER. We need to examine this tradeoff very closely in order to choose the most suitable code for our system. Previous topic: Orthonormal Bases Next topic: Line Coding Line Coding The line encoder gets stream of bits and converts them into analog signal whilst the line decoder converts an analog signal received from the channel's outlet back into bit stream. As we can see, the operation of these components is identical to the one of the channel encoder integrated with the modulator (and the channel decoder integrated with the demodulator). As a matter of fact, line coding was used former to the invention of the channel coding and modulation techniques and it had been playing the role of very simple such components. Manchester line code was used in the original DIX Ethernet, for example. The name "line coding" was chosen because the method encodes the bit stream (or PCMsignal) for transmission through a line, or a cable. Line coding is using nowadays as mediator between the CPU (Central Processing Unit), the NIC (Network Interface Card) and the modem. This technique serves for transmission of data which is represented by bits. For instance, voice signals would not use such methods (because the data is represented by an analog signal) whereas data stored on computers would. The bits are ready to be sent at the CPU memory but they have to reach first the NIC and then the modem. The NIC is peripheral equipment and so much the more for the modem which might be even connected to the NIC by a cable. Recall that we can only transmit analog signals and not bits, so line coding will be used in order to produce analog signal from the bits. For example, RS-232 is a line code that uses for communication between the CPU and the computer's peripheral equipment. For more examples of common line codes, please consult the following link: http://www2.rad.com/networks/2003/digenc/index.htm. Recall that the channel coding purpose is to achieve minimal BER as possible whilst the modulation purpose is to produce an adjusted analog signal for the channel upon which we transmit, with minimal excess bandwidth. As line coding combines those processes, its goals are still the same as those above. So we have to choose the best adjusted signal for the channel whilst sparing the bandwidth. Unfortunately, by Fourier theorem we conclude that 9 signals with narrow bandwidth tend to be stretched in time. So if we choose using signals with narrow bandwidth, Ts (The time difference between the samples of the signal) will be very long (because the signal has longer duration) resulting low data rate and poor performance for our communication systems. However, there is a family of pulses, called Nyquist pulses, which allows us using extra bandwidth and still be able to sample the signal in shorter time differences between the samples with no ISI caused. Those pulses are very commonly used in line coding methods because they allow us to transmit signals with narrow bandwidth in high data rates. An example of a Nyquist pulse is a Raised Cosine pulse (shown on the next page), a parameter that determine the pulse's behavior is r (the rolloff factor). The following images show the Raised Cosine in Time (lower) and in Frequency domain (upper), with different rolloff factors (0.01, 0.5, 1), when r tends to '0' we get a Sinc: 10 Furthermore, when considering line coding, we must take into account the synchronization problems that might arise (By the way, the same problem may arise when dealing with traditional modulation techniques. In that case the problem is dealt with the same schemes which are used for line coding). Sometimes the receiver can get out of synch when receiving a constant signal, or a signal that have not many perturbations. This phenomenon might cause the receiver to decode the original bit stream with a phase shifting. The solution is simply to create artificial perturbations by inserting opposing bits for any clustering of same bits. For example, the bit stream <�111111�> might get the receiver put of synch. The transmitter identifies the potential problem and prevents it by inserting zeros between the ones: <�11011011�>. Differential line codes use this scheme. Previous topic: Channel Coding Next topic: Source Coding Source Coding Motivation This component converts analog signal into bit stream. The goal is to produce bit stream that carries maximum information, or entropy, that allows reconstruction of the original analog signal with minimal distortion. Information theory's result shows that maximum bit stream entropy achieved by a uniform independent identically distribution (iid). That means that each bit has a 50% probability to be 0 or 1, with no correlation to the other bits in the stream. Well designed source encoders produce such bit streams. For better understanding this result, we take an example to the extreme: imagine a source encoder that produces only synonymous bit stream, this bit stream contains only 0's or 1's and carries no information whatsoever. Thus the source encoder is worthless. 11 We present the full architecture of the source coding components, but we want to note that when the originate data was digital, meaning that the inputted analog signal is a product of line coded bits, then all the conversion and quantization process can be omitted. This is because the conversion and quantization process purpose is to confine the analog signal, which can be (almost) any sort of signal. When the data was originated digital, then those processes can be omitted since the line decoder can produce our original bit stream. In that case, the source encoder functionality would be degenerated into the functionality of a compressor. In the bottom line, when considering line coded analog signal the source coding process is nothing more than a compressor. Finally, we point out that placing encipher after source encoder achieves better cipherstrength. Some cipher attackers use plain bits' statistical information where uniform iid bits conceal such knowledge from the attacker. Architecture The source encoder and decoder contain the following components: We'll now explain each component very briefly. For more information, please consult the following link: http://www2.rad.com/networks/2003/digenc/index.htm. Analog to Digital Converter (A/D) and Digital to Analog Converter (D/A) This component's purpose is to transform the analog signal into discrete signal with minimum distortions. Nyquist theorem allows us to accomplish this transformation simply by sampling the analog signal every T seconds. Moreover, Nyquist theorem allows us to reconstruct the original analog signal by interpolating the sampled signal (which is actually, the discrete signal). There is a catch, however; the analog signal must not contain any spectral content in frequencies that are higher than 1/2T hertz, or there will be aliasing in the reconstructed signal. This outcome is very intuitive because it says that in order to contain higher frequencies, one should sample faster the analog signal. So, before sampling, we should eliminate the higher frequencies by simply using a low-pass filter (LPF), namely anti-aliasing filter. 12 The spectrum of the original signal is being cut-off in a way that the sampling process won't be very fast (by hardware specifications) and that there won't be many distortions. For example, in telephony systems, which encode voice signals, we can cut-off high frequencies because the human ear cannot comprehend with them. The reconstruction process is done by interpolation. There are many schemes for interpolation such as ZOH (Zero Order and Hold), which uses constant pulses for reconstruction, and FOH (First Order and Hold), which uses linear lines for reconstruction. 13 Quantizer This component's purpose is to transform group of samples, which can be of any value, into a group of values taken from a finite set, with minimal distortions. General quantizer takes N samples and transforms them into N values from a finite set of values, say in size of M. The quantizer's latency is obviously NT, because the quantizer receive new sample every T seconds. Though there are many other quantizers, the most common quantizer is the uniform scalar quantizer (see an example below) because it's very simple to implement such component. The uniform scalar quantizer simply suits each sample with its closest possible value. 14 Uniform scalar quantizer We stress out that this quantizer achieves best performance when the inputted sample distribution is uniform. If it's not the case, we can build a quantizer especially for the inputted sample's distribution or use a reversible transformation that "smoothes" the distribution and makes it look like uniform distribution. For example, the US telephony system uses a transformation called miu-law (Europe uses A-law which is quite similar). The voice signal's samples have approximately Gaussian distribution, and the miu-law (see example below) transformation makes it more uniform shaped. miu-law characteristic expands the lower bands, in which most of the voice resides. 15 In white, a Gaussian Distribution. In gray, that distribution affected by miu-law, it can be seen that the gray dist' is more "uniform" as expected. There is no way for the receiver to know the difference between the original signal and the quantized signal, which is the quantization noise. Consequently, the quantization process is not reversible so the receiver doesn't have any analogous component for the quantizer. The quantization noise shouldbe as negligible as possible, so the distortion won't be massive. Mapper and Mapper-1 This component's purpose is to label the quantizer's output by binary strings. The quantized discrete signal can be of any M possible messages but the distribution of the messages might vary. Huffman coding achieves minimum expected binary string's length (example is shown below). Huffman coding is very simple: assign to the most common message the shortest label possible, and proceed with the labeling process. The algorithm is very intuitive: most of the time the common messages will be transmitted (because they have higher probability to be selected), thus we need to assign to them the shortest description (binary string) that possible. Huffman code example: The algorithm's input is an Alphabet with probabilities (e.g. A with prob' 60%), the output is a Huffman tree (e.g. A will be encoded as '0', D will be encoded as '1110') If the messages have uniform probability then any coding method that uses rounded up lgM bits will suffice. In that case, we usually choose N dimensional Gray coding (see below)for it has a nice property that any neighbors in the N dimensional Euclidean space differ in exactly 16 one bit. If the receiver errs and decides that the transmitted message was a neighbor to the real message (which is usually the case, as we shown previously), then there will be only one corrupted bit. Gray coding Finally, we will point out that varied length coded messages should not be a prefix of one another. Source Data Rate Let us measure the data rate of the source encoder, which also known as source data rate. We expect lgM bits to be produced for every N quantized samples which be produced after NT seconds. So we expect one bit to be produced after lgM/NT seconds. Recall that the analog signal bandwidth is at most 1/2T hertz. Thus if we want to take into account higher signal's frequencies then the source data rate will be increased. Previous topic: Line Coding Watch the Movie 17