Development of the Mathematical Model


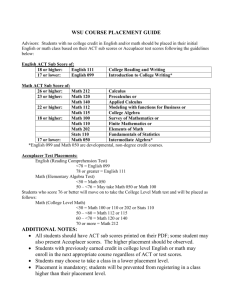

Predicting Retention Rates from Placement Exam Scores in Engineering Calculus

First Author
Affiliation
Country
Email Address

Abstract: As part of an NSF Science, Technology, Engineering, and Mathematics Talent Expansion Program (STEP) grant, a math placement exam (MPE) has been developed at a large public university to evaluate the pre-calculus mathematical skills of entering students. Approximately 4500 students take the placement exam before beginning their freshman year. Beginning in 2011, a minimum score of 22 out of 33 is needed to enroll in Calculus I. Students who do not achieve the minimum score are placed in a pre-calculus course. In previous work, the authors focused on the psychometric properties of the MPE. In this article we examine the distribution of MPE scores for two groups of students: those who pass Calculus I and those who do not. We show that the cumulative distribution function (CDF) of pass/fail outcomes can be effectively modeled by a logistic regression function. Using historical data (e.g., pass rates) and data from the MPE (available before the semester begins), we show that one can accurately predict the number of students who will pass (as a function of their MPE scores) as well as overall retention rates. We examine the data over a 4-year period (2009-2012). Although a mandatory MPE cutoff score was imposed in 2011, we show that the model retains its validity.

Background

XYZ University has one of the largest engineering programs in the United States, with over 7,000 undergraduate engineering majors. Traditionally, students in this program take Calculus during their freshman year, along with Physics and other Science, Technology, Engineering, and Mathematics (STEM) courses. Some students are not sufficiently prepared and have difficulty passing their mathematics courses.
To identify students with potential problems, a Math Placement Exam (MPE) was developed using confirmatory factor analysis and item-response theory (Muthen & Muthen, 2012; Raykov & Marcoulides, 2011). A comprehensive statistical analysis was detailed in a previous SITE publication (XXX, 2012). The MPE consists of 33 multiple-choice questions in the following areas: polynomials, functions, graphing, exponentials, logarithms, and trigonometric functions. Questions were designed by two faculty members experienced in teaching both pre-calculus and calculus. Based on historical performance data (MPE scores vs. grades), an MPE cutoff score of 22 was established. Starting in fall 2011, students with an MPE score below this cutoff were blocked from enrolling in Calculus I. Students who score below 22 may retake the exam or enroll in a summer program called the Personalized Pre-Calculus Program (PPP). Passing Calculus I is defined as receiving a grade of A, B, or C.

Development of the Mathematical Model

Because mathematics is a critical component of the undergraduate engineering major (typically three semesters of calculus and one semester of differential equations), it is important to ensure that beginning students are mathematically prepared to enter Calculus. A student who cannot complete a required mathematics course with a C or better must retake the course, which usually results in graduation delays and extra cost. Historically, some students have entered the University with weak algebraic skills and little facility with trigonometric, exponential, and logarithmic functions. These are precisely the areas targeted by the MPE. Other issues, such as over-reliance on graphing calculators and lack of problem-solving ability, are not addressed by the MPE.

Sample Groups

In this study, two semesters of math course grades and MPE data, beginning in Fall 2009, are used to develop a mathematical model.
The sample for this investigation consists of students who took the MPE, enrolled in Calculus I, and received a grade of A, B, C, D, or F. Excluded from the sample are students enrolled in Calculus I who did not take the MPE, and students with MPE scores who dropped, withdrew, or otherwise failed to complete course requirements (Pitoniak & Morgan, 2012). From the sample of eligible students (n = 1451), two groups were formed. The pass group (P; n = 1193) was defined as those students who received grades of A, B, or C in Calculus I. The fail group (F; n = 258) consisted of students who received grades of D, F, or equivalent. Each of these groups has an underlying distribution of MPE scores, which can be modeled by a logistic regression function.

Table 1. Fall 2009 data set

| MPE score | Number Pass | Number Fail | Cumulative Pass | Cumulative Fail |
|---|---|---|---|---|
| 5 | 0 | 0 | 0 | 0 |
| 6 | 0 | 0 | 0 | 0 |
| 7 | 1 | 0 | 1 | 0 |
| 8 | 0 | 2 | 1 | 2 |
| 9 | 1 | 3 | 2 | 5 |
| 10 | 4 | 1 | 6 | 6 |
| 11 | 7 | 8 | 13 | 14 |
| 12 | 2 | 4 | 15 | 18 |
| 13 | 5 | 8 | 20 | 26 |
| 14 | 13 | 15 | 33 | 41 |
| 15 | 11 | 9 | 44 | 50 |
| 16 | 15 | 8 | 59 | 58 |
| 17 | 28 | 10 | 87 | 68 |
| 18 | 24 | 9 | 111 | 77 |
| 19 | 26 | 10 | 137 | 87 |
| 20 | 35 | 18 | 172 | 105 |
| 21 | 40 | 20 | 212 | 125 |
| 22 | 57 | 21 | 269 | 146 |
| 23 | 89 | 21 | 358 | 167 |
| 24 | 81 | 22 | 439 | 189 |
| 25 | 116 | 9 | 555 | 198 |
| 26 | 93 | 13 | 648 | 211 |
| 27 | 111 | 14 | 759 | 225 |
| 28 | 118 | 8 | 877 | 233 |
| 29 | 97 | 4 | 974 | 237 |
| 30 | 90 | 7 | 1064 | 244 |
| 31 | 71 | 10 | 1135 | 254 |
| 32 | 50 | 3 | 1185 | 257 |
| 33 | 8 | 1 | 1193 | 258 |

MPE score distributions for each group (P and F) are shown in Figure 1. The data are quite noisy and do not appear to follow any particular probability distribution. As shown in Figure 2, however, plotting the cumulative distribution of scores improves the situation.

Figure 1. MPE score distributions for the Pass and Fail groups (number of students vs. MPE correct responses).

Figure 2. Cumulative score distributions for the Pass and Fail groups (cumulative number of students vs. MPE correct responses).
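As an illustration, the cumulative columns and the group means can be recomputed directly from the per-score counts in Table 1. The sketch below is not the authors' code; the helper names are hypothetical, and the standard deviation uses the population form (dividing by n), which is an assumption since the text does not say which form was used.

```python
from itertools import accumulate
from math import sqrt

# Per-score counts transcribed from Table 1 (Fall 2009), MPE scores 5..33.
scores = list(range(5, 34))
n_pass = [0, 0, 1, 0, 1, 4, 7, 2, 5, 13, 11, 15, 28, 24, 26, 35, 40, 57,
          89, 81, 116, 93, 111, 118, 97, 90, 71, 50, 8]
n_fail = [0, 0, 0, 2, 3, 1, 8, 4, 8, 15, 9, 8, 10, 9, 10, 18, 20, 21,
          21, 22, 9, 13, 14, 8, 4, 7, 10, 3, 1]


def cumulative(counts):
    """Running total of students with a score at or below each value."""
    return list(accumulate(counts))


def mean_and_sd(values, counts):
    """Count-weighted mean and population SD (assumption: divide by n)."""
    n = sum(counts)
    mean = sum(v * c for v, c in zip(values, counts)) / n
    var = sum(c * (v - mean) ** 2 for v, c in zip(values, counts)) / n
    return mean, sqrt(var)


cum_pass = cumulative(n_pass)
cum_fail = cumulative(n_fail)
mean_pass, sd_pass = mean_and_sd(scores, n_pass)
mean_fail, sd_fail = mean_and_sd(scores, n_fail)

print("group sizes:", cum_pass[-1], cum_fail[-1])
print("pass mean/sd:", round(mean_pass, 3), round(sd_pass, 3))
print("fail mean/sd:", round(mean_fail, 3), round(sd_fail, 3))
```

The final cumulative entries reproduce the group sizes (1193 and 258), and the weighted means agree with the Fall 2009 subgroup means reported in Table 2.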
The distribution of scores in Figure 2 has the characteristic “S-curve” shape of cumulative distribution functions corresponding to the logistic or Gaussian probability distributions. We will investigate fitting a cumulative logistic function for two reasons. First, the frequency distributions associated with MPE scores for groups P and F are not symmetric, in part because a majority of the scores lie in the interval [20, 33]. Second, the cumulative logistic function can be written explicitly in terms of exponentials, unlike the cumulative Gaussian, which is written in terms of error functions (Secolsky, Krishnan, & Judd, 2012). Although the best fit of a logistic regression function to the data involves the maximum likelihood principle (Breslow & Holubkov, 1997), we can get a good approximation by requiring that the logistic probability distribution have the same mean and standard deviation as the data. If we let $S$ denote the MPE score, then the data in Figure 2 can be modeled by two logistic functions

$$P(S) = \frac{N_P}{1 + e^{a_P - b_P S}} \tag{1}$$

$$F(S) = \frac{N_F}{1 + e^{a_F - b_F S}} \tag{2}$$

These have the property that as $S \to -\infty$ both $P$ and $F$ approach 0. As $S \to \infty$, $P$ approaches $N_P$ (the total number of individuals who pass) and $F$ approaches $N_F$ (the total number of individuals who fail). The probability density function is the derivative of the cumulative distribution function. We can see the fit to both groups in Figures 3 and 4.

Figure 3. Theoretical vs. actual probability for students passing Calculus I (number of students vs. MPE questions correct).

Figure 4. Theoretical vs. actual probability for students failing Calculus I (number of students vs. MPE questions correct).
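A minimal sketch of the moment-matching idea follows. It assumes the standard property of the logistic distribution that a scale parameter $s$ gives a standard deviation of $s\pi/\sqrt{3}$, so that matching a mean $\mu$ and standard deviation $\sigma$ yields $b = \pi/(\sqrt{3}\,\sigma)$ and $a = \mu b$ in the form of equations (1) and (2). The function names are hypothetical, and the numerical inputs are the Fall 2009 pass-group values from Table 2.

```python
from math import exp, pi, sqrt


def logistic_params(mu, sigma):
    """Moment-matched parameters (a, b) for N / (1 + exp(a - b*S))."""
    b = pi / (sqrt(3.0) * sigma)
    a = mu * b
    return a, b


def cumulative_count(s, n, a, b):
    """Modeled number of students with a score at or below s."""
    return n / (1.0 + exp(a - b * s))


def density(s, n, a, b):
    """Derivative of cumulative_count with respect to s."""
    e = exp(a - b * s)
    return n * b * e / (1.0 + e) ** 2


# Fall 2009 pass group (values taken from Table 2).
N_P, MU_P, SIGMA_P = 1193, 25.308, 4.577
a_p, b_p = logistic_params(MU_P, SIGMA_P)

for s in (15, 20, 25, 30, 33):
    print(s, round(cumulative_count(s, N_P, a_p, b_p), 1))
```

By construction the modeled cumulative count at the mean score equals half the group size, and the curve tends to 0 and to $N_P$ at the two extremes.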
The fits in Figures 3 and 4 also illustrate the difficulty of predicting the probability that an individual student will pass (or fail) given only their score, as opposed to the probability of passing (or failing) given a range of scores. The idea of a “cutoff” score is introduced in the following way. We wish to find a particular score $S_c$ such that 70% of the students with scores above the cutoff pass. This was found to be approximately 22, which was then adopted as the minimum MPE score required for registering for Calculus I. Paradoxically, since virtually all students in Calculus I now have MPE scores of 22 or higher, the cutoff score would have to be revised if the overall pass rate (retention rate) differed from 70%. In our case, we did not want a “floating” cutoff score, so it has been fixed at 22.

Essential Parameters

The statistical properties of each group are determined by three parameters, $N$, $\mu$, and $\sigma$, which describe the number of individuals in the group and the mean and standard deviation of their scores. These data are available for all students entering Calculus I with an MPE score. We know the number of individuals in Calculus I with MPE scores, and we know the mean and standard deviation for the group as a whole, but we do not know the membership or statistics of subgroups P and F until the semester ends. In this section we show how to develop a mathematical model which takes historical data from previous semesters, together with the parameters $N$, $\mu$, and $\sigma$ from the incoming Calculus I students, to provide accurate estimates of the number of students who will pass or fail.

Mathematical Model

We define the retention rate, $R$, as the fraction of students in a population who pass.
Consequently,

$$N_P = R N \tag{3}$$

$$N_F = (1 - R) N \tag{4}$$

The mean values of the MPE for the two groups P and F are related by

$$N_P \mu_P + N_F \mu_F = N \mu$$

If we define the difference $\Delta = \mu_P - \mu_F$, we get equations (5) and (6):

$$\mu_P = \mu + (1 - R)\Delta \tag{5}$$

$$\mu_F = \mu - R\Delta \tag{6}$$

With a little bit of algebra, and defining the parameter $\rho$ as the ratio of standard deviations, we have

$$\rho = \frac{\sigma_F}{\sigma_P}$$

In terms of this ratio, we have

$$\sigma_P = \sqrt{\frac{\sigma^2 - R(1 - R)\Delta^2}{R + (1 - R)\rho^2}}$$

Computational Algorithm

I. Let $N$ be the number of students who take the MPE and are admitted into Calculus I.

II. Compute the mean and standard deviation of these students' MPE scores, $\mu$ and $\sigma$.

III. Determine the pass rate of the previous year, $R$, and the computed values $\Delta = \mu_P - \mu_F$ and $\rho = \sigma_F / \sigma_P$.

IV. Estimate the mean and standard deviation of the two subgroups P and F by

$$\mu_P = \mu + (1 - R)\Delta, \qquad \mu_F = \mu - R\Delta$$

$$\sigma_P = \sqrt{\frac{\sigma^2 - R(1 - R)\Delta^2}{R + (1 - R)\rho^2}}, \qquad \sigma_F = \rho\,\sigma_P$$

V. Calculate the logistic regression parameters for the two groups, using each group's mean and standard deviation,

$$b = \frac{\pi}{\sqrt{3}\,\sigma}, \qquad a = \mu b$$

and the cumulative number of individuals with scores less than or equal to $S$ by

$$\frac{N}{1 + e^{a - bS}}$$

Validation Study: Fall 2009 and 2010

The following table shows the actual MPE scores and statistics for the four fall semesters, beginning in 2009. The values for $N$, $\mu$, $\sigma$ are known, but the values for $\Delta$, $\rho$, $R$ must be estimated from the previous year's historical data. From the computational algorithm, we can therefore compute the estimated values for 2010, 2011, and 2012. These are contained in Table 3.

Table 2. Actual MPE data for Fall 2009-Fall 2012

| Year | $N$ | $\mu$ | $\sigma$ | $N_P$ | $N_F$ | $\mu_P$ | $\mu_F$ | $\sigma_P$ | $\sigma_F$ | $\Delta$ | $\rho$ | $R$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2009 | 1451 | 24.579 | 5.025 | 1193 | 258 | 25.308 | 21.205 | 4.577 | 5.604 | 4.103 | 1.224 | 0.806 |
| 2010 | 1416 | 25.402 | 4.733 | 1119 | 297 | 26.174 | 22.492 | 4.200 | 5.451 | 3.683 | 1.298 | 0.765 |
| 2011 | 1742 | 26.713 | 3.432 | 1355 | 387 | 27.246 | 24.798 | 3.149 | 3.802 | 2.448 | 1.207 | 0.771 |
| 2012 | 1606 | 26.911 | 3.936 | 1311 | 295 | 27.496 | 24.922 | 3.497 | 4.324 | 2.574 | 1.237 | 0.814 |

Table 3.
Estimated MPE data for Fall 2010-Fall 2012

| Year | $N$ | $\mu$ | $\sigma$ | $N_P$ | $N_F$ | $\mu_P$ | $\mu_F$ | $\sigma_P$ | $\sigma_F$ |
|---|---|---|---|---|---|---|---|---|---|
| 2010 | 1416 | 25.402 | 4.733 | 1142 | 274 | 26.196 | 22.093 | 4.246 | 5.199 |
| 2011 | 1742 | 26.713 | 3.432 | 1333 | 409 | 27.568 | 23.885 | 2.863 | 3.716 |
| 2012 | 1606 | 26.911 | 3.936 | 1239 | 367 | 27.582 | 25.134 | 3.476 | 4.197 |

Approximation Results

The easiest way to consider the errors is to look at the numbers of students in the P and F groups, comparing the actual numbers, the “best fit” model numbers, and the estimated numbers. This is shown in Table 4. Although we can calculate the absolute errors (in terms of numbers of students), it is perhaps more valuable to consider the retention rate, which is defined as the number of students who pass (with scores greater than or equal to $S$) out of the total number of students (with scores greater than or equal to $S$). The number of students passing with scores greater than or equal to $S$ is given by

$$N_P - P(S) = N_P - \frac{N_P}{1 + e^{a_P - b_P S}}$$

Similarly, the number of students failing with scores greater than or equal to $S$ is given by

$$N_F - F(S) = N_F - \frac{N_F}{1 + e^{a_F - b_F S}}$$

So the retention rate is equal to

$$\frac{N_P - \dfrac{N_P}{1 + e^{a_P - b_P S}}}{\left(N_P - \dfrac{N_P}{1 + e^{a_P - b_P S}}\right) + \left(N_F - \dfrac{N_F}{1 + e^{a_F - b_F S}}\right)}$$

Table 4.
Numbers of students who pass/fail vs. MPE score (2010)

| MPE | Exact Pass | Exact Fail | Scaled Model Pass | Scaled Model Fail | Scaled Estimate Pass | Scaled Estimate Fail |
|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3 | 0 | 2 | 0 | 0 | 0 | 0 |
| 4 | 0 | 2 | 0 | 1 | 0 | 1 |
| 5 | 0 | 2 | 0 | 1 | 0 | 1 |
| 6 | 1 | 2 | 0 | 1 | 0 | 1 |
| 7 | 1 | 2 | 0 | 2 | 0 | 1 |
| 8 | 2 | 5 | 0 | 2 | 1 | 2 |
| 9 | 2 | 7 | 1 | 3 | 1 | 3 |
| 10 | 2 | 8 | 1 | 5 | 1 | 4 |
| 11 | 2 | 12 | 2 | 7 | 2 | 6 |
| 12 | 6 | 19 | 3 | 9 | 3 | 8 |
| 13 | 10 | 23 | 4 | 13 | 4 | 12 |
| 14 | 16 | 28 | 6 | 17 | 7 | 16 |
| 15 | 24 | 35 | 9 | 24 | 10 | 22 |
| 16 | 33 | 43 | 14 | 32 | 15 | 31 |
| 17 | 46 | 52 | 22 | 43 | 23 | 42 |
| 18 | 64 | 63 | 33 | 57 | 35 | 56 |
| 19 | 87 | 74 | 51 | 74 | 53 | 73 |
| 20 | 112 | 94 | 76 | 95 | 79 | 93 |
| 21 | 145 | 108 | 113 | 118 | 117 | 117 |
| 22 | 192 | 124 | 166 | 143 | 171 | 141 |
| 23 | 259 | 147 | 237 | 169 | 243 | 166 |
| 24 | 339 | 173 | 329 | 194 | 337 | 190 |
| 25 | 434 | 202 | 440 | 217 | 449 | 211 |
| 26 | 515 | 225 | 564 | 237 | 573 | 229 |
| 27 | 612 | 247 | 689 | 254 | 700 | 243 |
| 28 | 739 | 267 | 806 | 268 | 818 | 255 |
| 29 | 865 | 276 | 905 | 279 | 919 | 264 |
| 30 | 963 | 289 | 984 | 287 | 1000 | 270 |
| 31 | 1058 | 293 | 1042 | 294 | 1061 | 275 |
| 32 | 1109 | 297 | 1084 | 298 | 1104 | 278 |
| 33 | 1119 | 297 | 1114 | 302 | 1135 | 281 |

Note: the theoretical model and estimated model values have been scaled by a factor $1 + e^{a - 33b}$ to make sure that the cumulative values sum to the number of students in the group.

The results are shown below in Figure 5.

Figure 5. Actual, Theoretical, and Estimated retention rates in Calculus I as a function of MPE score (retention % vs. MPE score). Note: The retention rates do not have to be monotone, due to the increasingly smaller sample sizes.

Quantifying the notion of “at-risk” students

The mathematical model we have developed for MPE scores allows one to predict the probability of success, not of individual students, but of groups of students with a similar range of scores. We can define at-risk students as those whose MPE scores put them in a range with a probability of success below some prescribed threshold.

Summary

Using historical data from the previous year, as well as data from the MPE scores of the entering freshmen, we have shown that one can model the cumulative distribution function for the subgroups of passing and failing students very accurately.
This in turn allows one to model retention rates as a function of MPE cutoff score. We have begun to examine intervention strategies to improve retention among students who are at risk due to their low MPE scores.

References

Breslow, N. E. & Holubkov, R. (1997). Maximum likelihood estimation of logistic regression parameters under two-phase, outcome-dependent sampling. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 59, 447–461. doi: 10.1111/1467-9868.00078

Brown, T. A. (2006). Confirmatory factor analysis for applied research. New York, NY: The Guilford Press.

Muthén, L. K. & Muthén, B. O. (1998-2013). MPlus User’s Guide. Seventh Edition. Los Angeles, CA: Muthén & Muthén.

Pitoniak, M. J. & Morgan, D. L. (2012). Setting and validating cut scores for tests. In C. Secolsky and D. B. Denison (Eds.), Handbook on measurement, assessment, and evaluation in higher education (pp. 343-366). New York, NY: Routledge.

Raykov, T. & Marcoulides, G. A. (2011). Introduction to Psychometric Theory. New York, NY: Routledge.

Secolsky, C., Krishnan, K., & Judd, T. P. (2012). Using logistic regression for validating or invalidating initial statewide cut-off scores on basic skills placement tests at the community college level. Research in Higher Education Journal, 19.

XXX (2012). SITE conference paper. SITE Conference Proceedings.
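Illustrative sketch of the Computational Algorithm

Steps III to V of the Computational Algorithm and the retention-rate formula from the Approximation Results section can be sketched as follows. This is a hedged illustration, not the authors' implementation: the function and key names are hypothetical, and the logistic parameters assume the standard moment-matching relation $b = \pi/(\sqrt{3}\,\sigma)$, $a = \mu b$. The example feeds in the Fall 2010 cohort values ($N$, $\mu$, $\sigma$) with the Fall 2009 historical values ($R$, $\Delta$, $\rho$) from Table 2.

```python
from math import exp, pi, sqrt


def estimate_subgroups(n, mu, sigma, r, delta, rho):
    """Estimate pass/fail subgroup statistics and logistic parameters.

    n, mu, sigma: size, mean, and SD of the incoming cohort's MPE scores.
    r, delta, rho: previous year's pass fraction, mean gap, and SD ratio.
    """
    mu_p = mu + (1.0 - r) * delta
    mu_f = mu - r * delta
    sigma_p = sqrt((sigma ** 2 - r * (1.0 - r) * delta ** 2)
                   / (r + (1.0 - r) * rho ** 2))
    sigma_f = rho * sigma_p
    b_p = pi / (sqrt(3.0) * sigma_p)  # assumption: moment-matched logistic
    b_f = pi / (sqrt(3.0) * sigma_f)
    return {
        "n_p": r * n, "n_f": (1.0 - r) * n,
        "mu_p": mu_p, "mu_f": mu_f,
        "sigma_p": sigma_p, "sigma_f": sigma_f,
        "a_p": mu_p * b_p, "b_p": b_p,
        "a_f": mu_f * b_f, "b_f": b_f,
    }


def retention_rate(s, est):
    """Modeled pass fraction among students scoring above s."""
    pass_above = est["n_p"] - est["n_p"] / (1.0 + exp(est["a_p"] - est["b_p"] * s))
    fail_above = est["n_f"] - est["n_f"] / (1.0 + exp(est["a_f"] - est["b_f"] * s))
    return pass_above / (pass_above + fail_above)


# Fall 2010 cohort with Fall 2009 historical parameters (Table 2).
est = estimate_subgroups(1416, 25.402, 4.733, r=0.806, delta=4.103, rho=1.224)

print({k: round(est[k], 3) for k in ("mu_p", "mu_f", "sigma_p", "sigma_f")})
for cutoff in (15, 20, 22, 25):
    print(cutoff, round(retention_rate(cutoff, est), 3))
```

The estimated subgroup means and standard deviations agree with the 2010 row of Table 3 to within rounding, and with no effective cutoff the modeled retention rate reduces to the historical pass fraction $R$.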