Integrative Learning: Helping Students Make the Connections

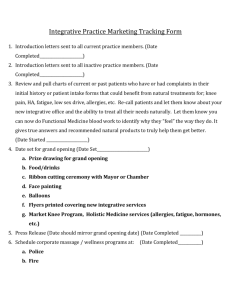
