Algorithms


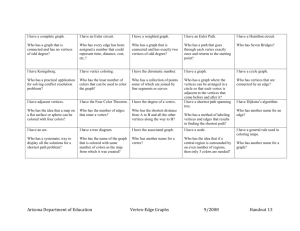

Dynamic Programming

LCS (Longest Common Subsequence)

abcddeb

bdabaed

$$\mathrm{Opt}(n, m) = \begin{cases} 1 + \mathrm{Opt}(n-1, m-1) & A_n = B_m \\ \max\{\mathrm{Opt}(n, m-1),\ \mathrm{Opt}(n-1, m)\} & A_n \neq B_m \end{cases}$$

[Table: a partially filled dynamic-programming table of 𝑂𝑝𝑡 values. The row and the column of the empty prefix 𝜙 hold 0.]
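The recurrence for 𝑂𝑝𝑡 can be sketched in code as follows. This is a minimal illustration, not part of the lecture; the function name `lcs_length` is an assumption, and the table has an extra row and column of zeros for the empty prefix 𝜙.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b."""
    n, m = len(a), len(b)
    # opt[i][j] = Opt(i, j) for the prefixes a[:i] and b[:j];
    # row 0 and column 0 correspond to the empty prefix and stay 0.
    opt = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            if a[i - 1] == b[j - 1]:
                opt[i][j] = 1 + opt[i - 1][j - 1]
            else:
                opt[i][j] = max(opt[i][j - 1], opt[i - 1][j])
    return opt[n][m]


print(lcs_length("abcddeb", "bdabaed"))  # 3, e.g. "abe"
```

The table has (𝑛 + 1)(𝑚 + 1) entries and each is filled in constant time.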

Ramsey Theory

Erdős and Szekeres proved that every permutation of {1, … , 𝑛} contains an increasing or decreasing subsequence of length at least √𝑛.

For example, observe the following permutation of {1, … ,10}:

10, 5, 8, 1, 4, 3, 6, 2, 7, 9

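(1,1) (1,2) (2,2) (1,3) …

Each pair holds the lengths of the longest increasing subsequence and the longest decreasing subsequence that end at that element (in that order).

Every pair is unique: for two positions 𝑖 < 𝑗, the later number extends either every increasing subsequence that ends at position 𝑖 (if it is larger) or every decreasing one (if it is smaller), so its pair is strictly larger in one of the two coordinates.

If both coordinates were always smaller than √𝑛, there would be fewer than 𝑛 distinct pairs. Therefore one of the lengths must be at least √𝑛.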
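As an illustration of the pairs argument, here is a small sketch that computes, for every element, the lengths of the longest increasing and longest decreasing subsequences ending at it. The function name `inc_dec_pairs` is an assumption made for this example, and the quadratic loop is chosen for clarity, not speed.

```python
def inc_dec_pairs(seq):
    """For each position, (longest increasing, longest decreasing)
    subsequence length ending exactly at that position."""
    pairs = []
    for i, x in enumerate(seq):
        inc = 1 + max((pairs[j][0] for j in range(i) if seq[j] < x), default=0)
        dec = 1 + max((pairs[j][1] for j in range(i) if seq[j] > x), default=0)
        pairs.append((inc, dec))
    return pairs


pairs = inc_dec_pairs([10, 5, 8, 1, 4, 3, 6, 2, 7, 9])
print(pairs[:4])                    # [(1, 1), (1, 2), (2, 2), (1, 3)]
print(len(set(pairs)))              # 10: all pairs are distinct
print(max(max(p) for p in pairs))   # 5, which is at least sqrt(10)
```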

Bandwidth Problem

The bandwidth problem is an NP hard problem.

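Definition: Given a symmetric matrix, is there a way to permute its rows and columns (by the same permutation) s.t. the "bandwidth" of the matrix is smaller than a number received as input?

Another variant is to find the permutation that produces the smallest bandwidth.

The bandwidth is the largest distance of a non-zero entry from the main diagonal. Viewing the matrix as the adjacency matrix of a graph whose vertices are numbered 1, … , 𝑛, it is the largest difference between the numbers of the two end points of an edge.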

[Example: a small symmetric 0/1 matrix.]

Special Cases

If the graph is a line (a path), finding the smallest bandwidth is easy: it is 1, obtained by placing the vertices in the order of the path. For "caterpillar" graphs, however, the problem is already NP-hard. ("Caterpillars" are graphs that consist of a line with occasional side lines.)
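To make the definition concrete, the sketch below computes the bandwidth of a given vertex ordering and, by brute force over all orderings, the smallest bandwidth of a tiny graph. The names `bandwidth` and `min_bandwidth` are assumptions for this example; the brute force takes 𝑛! time and is only meant for very small inputs.

```python
from itertools import permutations


def bandwidth(edges, order):
    """Largest distance, in the ordering, between the end points of an edge."""
    pos = {v: i for i, v in enumerate(order)}
    return max((abs(pos[u] - pos[v]) for u, v in edges), default=0)


def min_bandwidth(vertices, edges):
    """Smallest bandwidth over all orderings (exhaustive search)."""
    return min(bandwidth(edges, p) for p in permutations(vertices))


path = [(0, 1), (1, 2), (2, 3)]
star = [(0, 1), (0, 2), (0, 3)]
print(min_bandwidth(range(4), path))   # 1: a line has bandwidth 1
print(min_bandwidth(range(4), star))   # 2
print(bandwidth(path, [0, 2, 1, 3]))   # 2: a bad ordering of the line
```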

Parameterized Complexity

Given a parameter k, does the graph have an arrangement with bandwidth ≤ k?

One idea is to remember the last 6 vertices (including their order!), and to remember the sets of vertices before and after them (without order) so that we know not to place a vertex twice.

A trivial solution would be to simply remember them all. But while it is feasible to remember the last 6 vertices, remembering the set of vertices we have passed takes up to $\binom{n}{j-k}$ possibilities, where 𝑗 is the step index. For $j = n/2$ we would need time exponential in 𝑛.

A breakthrough came in the early 80s by Saxe. The main idea is to remember the vertices

we've passed implicitly.

In order to do so, here are a few observations:

1) Wlog, 𝐺 is connected (otherwise we can find the connected sub-graphs in linear

time and execute the algorithm independently on each of them, concatenating the

results at the end)

2) The maximal degree of the graph (if it has bandwidth ≤ 𝑘) is ≤ 2𝑘.

[Figure: the linear arrangement split into Prefix | Active Region | Suffix]

Due to these observations, a vertex is in the prefix, if it is connected to the active region

without using dangling edges.

A vertex in suffix must use dangling edges to connect to active region.

--------end of lesson 1

Color Coding

𝐴(𝑥)

The measure of complexity would be the expected running time (over random coin tosses of

the algorithm) for the worst possible input.

Monte Carlo Algorithms

There is no guarantee the solution is correct. It is probably correct.

Las Vegas Algorithms

You want the answer to be always correct. But the running time might differ.

Hamiltonian path

A simple path that visits all vertices of a graph.

Longest Simple Path

TODO: Draw vertices

We are looking for the longest simple path from some vertex 𝑣 to some vertex 𝑢.

Simple path means we are not allowed to repeat a vertex twice.

This problem is NP-Hard.

Given a parameter 𝑘, find a simple path of length 𝑘.

A trivial algorithm would work in time $n^k$.

Algorithm 1

- Take a random permutation over the vertices.

- Remove every “backward” edge

- Find the longest path in remaining graph using dynamic programming

TODO: Draw linear numbering

For some permutation, some of the edges go forward, and some go backward. After removing backward edges, we get a DAG.

For each vertex 𝑣 from left to right, record length of longest path that ends at 𝑣.

Suppose the graph contains a path of length 𝑘.

What is the probability all edges of the path survived the first step?

The vertices of the path have 𝑘! possible relative orders, and only one of them is good for us. So with probability $\frac{1}{k!}$ we will not drop any edge of the path and the algorithm succeeds.

This is not too good! Already for 𝑘 = 10 the success probability is tiny.

So what do we do? Keep running it until it succeeds.

The probability of a failure in one run is $1 - \frac{1}{k!}$, but if we run it $c \cdot k!$ times it becomes:

$$\left(1 - \frac{1}{k!}\right)^{c \cdot k!} \approx \left(\frac{1}{e}\right)^{c}$$

Expected running time: $O(n^2 \cdot k!)$.

You can approximate $k! \approx \left(\frac{k}{e}\right)^k$, so $k! \le n$ holds for $k \cong \frac{\log n}{\log\log n}$.
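A minimal sketch of Algorithm 1, assuming the graph is given as a list of directed edges over vertices 0, … , 𝑛 − 1. The function names and the `trials` and `seed` parameters are assumptions for this illustration. The first function is the deterministic part (drop backward edges, then dynamic programming from left to right); the second repeats it with random permutations.

```python
import random


def longest_forward_path(n, edges, order):
    """Longest path (counted in edges) that uses only forward edges of `order`."""
    pos = [0] * n
    for i, v in enumerate(order):
        pos[v] = i
    preds = [[] for _ in range(n)]
    for u, v in edges:
        if pos[u] < pos[v]:          # keep forward edges, drop backward ones
            preds[v].append(u)
    best = [0] * n                   # best[v] = longest path ending at v
    for v in order:                  # left to right, so predecessors are done
        for u in preds[v]:
            best[v] = max(best[v], best[u] + 1)
    return max(best, default=0)


def random_order_longest_path(n, edges, trials, seed=0):
    """Repeat with random permutations and keep the best result found."""
    rng = random.Random(seed)
    order = list(range(n))
    result = 0
    for _ in range(trials):
        rng.shuffle(order)
        result = max(result, longest_forward_path(n, edges, order))
    return result


path = [(0, 1), (1, 2), (2, 3)]
print(longest_forward_path(4, path, [0, 1, 2, 3]))  # 3: every edge survives
print(longest_forward_path(4, path, [1, 0, 2, 3]))  # 2: edge (0, 1) is dropped
```

Every answer is the length of a real simple path, so the result is never too large; it can only be too small when the permutation is unlucky.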

Algorithm 2

- Color the vertices of the graph at random with 𝑘 colors.

- Use dynamic programming to find a colorful path.

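[Table: one row per vertex 𝑣1, … , 𝑣𝑛 and one column per color set 𝑆 ⊆ {1, … , 𝑘} (2^𝑘 columns). The entry (𝑣𝑖, 𝑆) records whether some colorful path that ends at 𝑣𝑖 uses exactly the colors of 𝑆.]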

𝑆 is the set of colors we used so far.

Probability that all vertices on a path of length 𝑘 have different colors: $\frac{k!}{k^k} \approx \frac{k^k}{e^k k^k} = \frac{1}{e^k}$

If 𝑘 ≅ log 𝑛 then the success probability would be $\frac{1}{n^{1\ldots}}$.
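A sketch of Algorithm 2 under stated assumptions: the graph is undirected and given as adjacency lists, a "path of length 𝑘" is taken to mean a simple path on 𝑘 vertices, and color sets 𝑆 are stored as bitmasks. The function names, `trials` and `seed` are assumptions for this example.

```python
import random


def has_colorful_path(adj, color, k):
    """True if some path on k vertices uses k distinct colors."""
    n = len(adj)
    # reach[v] = color sets S (bitmasks) of colorful paths that end at v
    reach = [{1 << color[v]} for v in range(n)]
    for _ in range(k - 1):           # extend every path by one vertex
        nxt = [set() for _ in range(n)]
        for u in range(n):
            for mask in reach[u]:
                for v in adj[u]:
                    bit = 1 << color[v]
                    if not mask & bit:       # color of v is still unused
                        nxt[v].add(mask | bit)
        reach = nxt
    return any(reach)


def find_k_path(adj, k, trials, seed=0):
    """Random colorings; a colorful path is automatically simple."""
    rng = random.Random(seed)
    for _ in range(trials):
        color = [rng.randrange(k) for _ in adj]
        if has_colorful_path(adj, color, k):
            return True
    return False


p4 = [[1], [0, 2], [1, 3], [2]]
print(has_colorful_path(p4, [0, 1, 2, 3], 4))  # True
print(has_colorful_path(p4, [0, 1, 0, 1], 4))  # False: colors repeat
```

The table has 𝑛 · 2^𝑘 entries, which is where the 2^𝑘 factor in the running time comes from. A `True` answer is always correct; a `False` answer may be wrong when every tried coloring was unlucky.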

Tournaments

A tournament is a directed graph in which for every pair of vertices there exists exactly one edge (in one direction). An edge from 𝑢 to 𝑣 means that the player represented by 𝑢 beat the player represented by 𝑣.

We try to find the most consistent ranking of the players.

We want to find a linear order that minimizes the number of upsets.

An upset is an edge that goes against the order: a lower-ranked player beat a higher-ranked one.

A Feedback Arc Set in a Tournament (FAST) is the set of all arcs that go backwards in the order.

Another motivation

Suppose you want to open a Meta search engine. How can you aggregate the answers given

from the different engines?

TODO: Draw the ranking…

The problem is NP-Hard.

k-FAST

Find a linear alignment in which there are at most 𝑘 edges going backwards.

$0 \le k \le \binom{n}{2}$

We will describe an algorithm that is good for $n \le k \le o(n^2)$.

Suppose there are 𝑡 subparts, each with its optimal solution, and we want to merge them into a bigger solution.

If the graph 𝐺 is partitioned into 𝑡 parts, and we are given the “true” order within each part, merging the parts takes time $n^{O(t)}$ (the 𝑂 is just for the bookkeeping).

Color Coding

Pick 𝑡 (a function of 𝑘) and color the vertices independently with 𝑡 colors.

We want that: For the minimum feedback arc set 𝐹, every arc of 𝐹 has endpoints of distinct

colors. Denote it as “𝐹 is colorful”.

Why is this desirable?

If 𝐹 is colorful, then for every color class we do know the true order.

We use the fact that this is a tournament! The order is unique due to the fact that between

every two vertices there is an arc (in some direction).

Let’s look at two extreme cases:

1) 𝑡 > 2𝑘: for each arc of 𝐹, the probability that its second endpoint gets the color of the first is $\frac{1}{t}$, so the probability that some arc of 𝐹 is not colorful is at most $\frac{k}{t} < \frac{1}{2}$. However, the running time would be $n^{O(k)}$.

2) 𝑡 < √𝑘: not too good! Intuition: take √𝑘 vertices where the direction of the arcs is essentially random. So you can create a graph that has very bad chances (no real proof was provided).

If 𝑡 > 8√𝑘 then 𝐹 is colorful with good enough probability. The probability behaves something like $\frac{1}{n^t}$. Then the expected number of repetitions is $n^t$, and together with the merging time the total running time is still $n^{O(t)}$.

Heuristically:

$$\left(1 - \frac{1}{t}\right)^k \approx \left(\frac{1}{e}\right)^{k/t} \;\rightarrow\; t \cong \sqrt{k} \;\rightarrow\; \left(\frac{1}{e}\right)^{\sqrt{k}} \;\rightarrow\; n^{O(\sqrt{k})}$$

Lemma: Every graph with 𝑘 edges has an ordering of its vertices in which no vertex has more than √(2𝑘) forward edges.

Why is this true? Pick an arbitrary graph with 𝑘 edges, and arrange its vertices with the lowest degree first. So each time we place the vertex of lowest degree among those remaining for the suffix.

$\deg_i$ = degree of $v_i$

$d_i$ = number of forward edges of $v_i$

$s = n - i$ (the number of vertices to the right of $v_i$)

$d_i \le s$, because a vertex can have at most one forward edge to every vertex to its right.

$d_i \le \deg_i$, and every vertex to the right of $v_i$ has degree at least $\deg_i$, so

$$d_i \cdot s \le \deg_i \cdot s \le \sum_{j > i} \deg_j \le \sum_j \deg_j = 2k$$

Therefore $d_i^2 \le d_i \cdot s \le 2k$, so $d_i \le \sqrt{2k}$.
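The ordering from the lemma can be checked on small examples. The sketch below assumes a simple graph (no loops or parallel edges) on vertices 0, … , 𝑛 − 1; the name `forward_degrees` is an assumption for this illustration.

```python
def forward_degrees(n, edges):
    """Order vertices by increasing degree; count forward edges per vertex."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    order = sorted(range(n), key=lambda v: deg[v])   # lowest degree first
    pos = {v: i for i, v in enumerate(order)}
    fwd = [0] * n
    for u, v in edges:
        first = u if pos[u] < pos[v] else v          # the earlier end point
        fwd[first] += 1                              # edge goes forward from it
    return order, fwd


k5 = [(i, j) for i in range(5) for j in range(i + 1, 5)]
order, fwd = forward_degrees(5, k5)
print(max(fwd), 2 * len(k5))   # 4 and 20, and indeed 4 * 4 <= 20
```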

The chance of failure for some vertex $v_i$ is bounded by $\frac{d_i}{t}$ (each forward neighbor has a chance of $\frac{1}{t}$ of having the same color). Therefore, the chance of success is at least $1 - \frac{d_i}{t}$.

However, since $d_i$ is bounded by $\sqrt{2k}$, in order to have a valid expression, 𝑡 must be larger than $\sqrt{2k}$.

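$$\left(1 - \frac{d_i}{t}\right) \cong e^{-\frac{d_i}{t}}$$

$$\prod_i \left(1 - \frac{d_i}{t}\right) \le \prod_i e^{-\frac{d_i}{t}} = e^{-\frac{\sum_i d_i}{t}} = e^{-\frac{2k}{t}} = e^{-\sqrt{k}}$$

(The last equality holds for $t = 2\sqrt{k}$.)

-------end of lesson 2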

Repeating last week’s lesson:

In every graph with 𝑚 edges, if we color its vertices at random with √(8𝑚) colors, then w.p. ≥ $(2e)^{-\sqrt{8m}}$ the coloring is proper.

Assume we color the graph with 𝑡 colors.

TODO: Draw the example that shows the coloring is dependent.

Inductive Coloring

Given an arbitrary graph 𝐺, you find the vertex with the least degree in the graph. Then

remove that vertex. Then find the next one with the least degree and so on…

This determines an ordering of the vertices:

𝑣1 , 𝑣2 , … , 𝑣𝑛

𝑣𝑖 - has the least degree in 𝐺(𝑣𝑖 , … , 𝑣𝑛 ).

Then we start by coloring the last vertex. Each time we color the vertex according to the

vertices to its right (so it will be proper).

If 𝑑 is the maximum right degree of any vertex, then inductive coloring uses at most 𝑑 + 1

colors.
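Inductive coloring as described above can be sketched as follows, assuming a simple undirected graph given as adjacency lists. The function name and the returned triple are assumptions for this example; the minimum-degree vertex is found by a linear scan to keep the code short.

```python
def inductive_coloring(adj):
    """Returns (order, color, d) where d is the maximum right degree."""
    n = len(adj)
    remaining = set(range(n))
    deg = [len(adj[v]) for v in range(n)]
    order = []
    while remaining:                       # repeatedly remove a min-degree vertex
        v = min(remaining, key=lambda x: deg[x])
        remaining.remove(v)
        order.append(v)
        for u in adj[v]:
            if u in remaining:
                deg[u] -= 1
    color, max_right = {}, 0
    for v in reversed(order):              # color from the last vertex backwards
        right = [u for u in adj[v] if u in color]   # neighbors to the right
        max_right = max(max_right, len(right))
        used = {color[u] for u in right}
        c = 0
        while c in used:                   # smallest color not used to the right
            c += 1
        color[v] = c
    return order, color, max_right


c5 = [[1, 4], [0, 2], [1, 3], [2, 4], [3, 0]]
order, color, d = inductive_coloring(c5)
print(len(set(color.values())), d + 1)     # number of colors used is at most d + 1
```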

In every planar graph, there is some vertex of degree at most 5.

Corollary: Planar graphs can be colored (easily) with 6 colors.

Every graph with 𝑚 edges, has an ordering of the vertices in which all right degrees are at

most √2𝑚.

So we need at least √2𝑚 + 1 colors. But we don’t want our chances of success to be too

low, so we use twice that number of colors - 2√2𝑚.

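Let the list of degrees to the right be $d_1, d_2, \ldots, d_n$.

$$\sum_{i=1}^{n} d_i = m$$

What is the probability of violating the proper coloring for vertex 𝑖?

Fraction of colors left for vertex 𝑖 (given the colors of the vertices to its right): at least $\frac{t - d_i}{t}$

So the probability that the coloring is proper is at least

$$\prod_i \left(\frac{t - d_i}{t}\right) = \prod_i \left(1 - \frac{d_i}{t}\right)$$

But we know

$$\frac{d_j}{t} \le \frac{\sqrt{2m}}{\sqrt{8m}} = \frac{1}{2}$$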

It’s easier to evaluate sums than products.

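Suppose $\frac{d_j}{t} = \frac{1}{k}$ (a very small number).

Claim:

$$1 - \frac{1}{k} \ge 2^{-\frac{2}{k}}$$

Why is this true? Let’s raise both sides to the power $\frac{k}{2}$:

$$\left(1 - \frac{1}{k}\right)^{\frac{k}{2}} \ge \left(2^{-\frac{2}{k}}\right)^{\frac{k}{2}} = \frac{1}{2}$$

As long as $\frac{1}{k} < \frac{1}{2}$, each power only chops off less than half of what remains, meaning the left side won’t go below $\frac{1}{2}$. So the inequality is true.

So, back to the original formula:

$$\prod_i \left(1 - \frac{d_i}{t}\right) \ge \prod_i 2^{-\frac{2 d_i}{t}} = 2^{-\frac{2 \sum_i d_i}{t}} = 2^{-\frac{2m}{t}}$$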
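A quick numeric sanity check of the bound, as a sketch: it compares the product $\prod_i (1 - d_i/t)$ with $2^{-2m/t}$ for a list of right degrees that satisfy $d_i/t \le 1/2$. The function name and the sample degrees are made up for the illustration.

```python
import math


def proper_coloring_bounds(right_degrees, t):
    """Returns (product of (1 - d_i/t), the lower bound 2^(-2m/t))."""
    product = math.prod(1 - d / t for d in right_degrees)
    bound = 2 ** (-2 * sum(right_degrees) / t)
    return product, bound


product, bound = proper_coloring_bounds([0, 1, 2, 2], t=4)
print(product, bound)   # 0.1875 and about 0.1768, so product >= bound
```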

Maximum weight independent set in a tree

Given a tree in which each vertex has a non-negative weight.

We need to select an independent set in the tree.

In graphs this problem is NP hard, but in trees we can do it in polynomial time.

TODO: Add a drawing of a tree

We pick an arbitrary vertex 𝑟 as the root.

We think of the vertices as being directed “away” from the root.

Given a vertex 𝑣, denote by 𝑇(𝑣) the set of vertices reachable from 𝑣 (in the direction away from the root).

So for each vertex, we will keep two variables:

𝑊⁺(𝑣) is the maximum weight of an independent set in the tree 𝑇(𝑣) that contains 𝑣.

𝑊⁻(𝑣) is the maximum weight of an independent set in the tree 𝑇(𝑣) that does not contain 𝑣.

Need to find 𝑊 + (𝑟), 𝑊 − (𝑟) and the answer is the largest of the two.

The initialization is trivial. 𝑊 + (𝑣) = 𝑤(𝑣) and 𝑊 − (𝑣) = 0.

For every leaf 𝑙 of 𝑇, determine 𝑊 + (𝑙) = 𝑤(𝑙), 𝑊 − (𝑙) = 0 and remove it from the tree.

Pick a leaf 𝑣 of the remaining tree, whose children in the original tree were $u_1, \ldots, u_k$.

$$W^+(v) = w(v) + \sum_{i=1}^{k} W^-(u_i)$$

$$W^-(v) = 0 + \sum_{i=1}^{k} \max\{W^-(u_i), W^+(u_i)\}$$
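The two recurrences can be sketched as a bottom-up pass over the tree. The code assumes the tree is given as adjacency lists over vertices 0, … , 𝑛 − 1; the function name and the iterative traversal are choices made for this example.

```python
def max_weight_independent_set(adj, weight, root=0):
    """Maximum total weight of an independent set in a tree."""
    n = len(adj)
    parent = [-1] * n
    order, stack = [], [root]
    seen = [False] * n
    seen[root] = True
    while stack:                      # preorder: parents before children
        v = stack.pop()
        order.append(v)
        for u in adj[v]:
            if not seen[u]:
                seen[u] = True
                parent[u] = v
                stack.append(u)
    w_plus = list(weight)             # W+(v) starts as w(v)
    w_minus = [0] * n                 # W-(v) starts as 0
    for v in reversed(order):         # children are finished before parents
        p = parent[v]
        if p != -1:
            w_plus[p] += w_minus[v]
            w_minus[p] += max(w_minus[v], w_plus[v])
    return max(w_plus[root], w_minus[root])


path = [[1], [0, 2], [1]]
print(max_weight_independent_set(path, [1, 5, 1]))  # 5: take the middle vertex
print(max_weight_independent_set(path, [3, 4, 3]))  # 6: take the two ends
```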

This algorithm can also work on graphs that are slightly different than trees. (do private

calculations for the non-compatible parts).

Can we have a theorem of when the graph is just a bit different than a tree and still the

algorithm can run in polynomial time?

Tree Decomposition of a Graph

We have some graph 𝐺, and we want to represent it as a tree 𝑇.

Each node of the tree 𝑇 would represent a set of vertices of graph 𝐺.

Every node of the tree is labeled by a set of vertices of the original graph 𝐺.

Denote such sets as bags.

We also have the following constraints:

1) The union of all these sets is all vertices of 𝐺.

2) Every edge ⟨𝑣𝑖 , 𝑣𝑗 ⟩ in 𝐺 is in some bag (both of its end points appear together in that bag).

3) For every vertex 𝑣 ∈ 𝐺, the bags containing 𝑣 are connected in 𝑇. Meaning, the path in 𝑇 between any two bags that contain 𝑣 passes only through bags that contain 𝑣.

Given two bags 𝐵1 and 𝐵2 s.t. 𝐵1 ⊆ 𝐵2 , they are connected through a single path (because it’s a tree). Every bag on this path must contain all vertices of 𝐵1 .
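The three constraints can be checked mechanically. The sketch below is an illustration under assumptions: bags are given as a dictionary from tree nodes to sets of graph vertices, and `tree_edges` is assumed to already form a tree (this is not verified). The function names are made up for the example.

```python
def is_tree_decomposition(vertices, edges, bags, tree_edges):
    """Check the three constraints of a tree decomposition."""
    # 1) the union of the bags is the whole vertex set
    if set().union(*bags.values()) != set(vertices):
        return False
    # 2) every edge has both end points together in some bag
    for u, v in edges:
        if not any(u in bag and v in bag for bag in bags.values()):
            return False
    # 3) the bags that contain a vertex are connected in the tree
    neighbours = {node: set() for node in bags}
    for a, b in tree_edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    for x in vertices:
        holding = {node for node, bag in bags.items() if x in bag}
        start = next(iter(holding))
        seen, stack = {start}, [start]
        while stack:                   # search only through bags holding x
            node = stack.pop()
            for other in neighbours[node] & holding:
                if other not in seen:
                    seen.add(other)
                    stack.append(other)
        if seen != holding:
            return False
    return True


def width(bags):
    return max(len(bag) for bag in bags.values()) - 1


path_edges = [(0, 1), (1, 2), (2, 3)]
good = {0: {0, 1}, 1: {1, 2}, 2: {2, 3}}
bad = {0: {0, 1}, 1: {2, 3}, 2: {1, 2}}
print(is_tree_decomposition(range(4), path_edges, good, [(0, 1), (1, 2)]))  # True
print(is_tree_decomposition(range(4), path_edges, bad, [(0, 1), (1, 2)]))   # False
print(width(good))                                                          # 1
```

In the second example vertex 1 appears in two bags that are separated by a bag without it, which violates constraint 3.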

Tree Width of a Tree Decomposition

The Tree width of 𝑇 is 𝑝 if the maximal bag size is 𝑝 + 1.

Tree width of 𝐺 is the smallest 𝑝 for which there is a tree decomposition of tree width 𝑝.

Intuitively – a graph is closer to a tree when its 𝑝 is smaller.

Properties regarding Tree width of graphs

Lemma: If 𝐺 has tree width 𝑝, then 𝐺 has a vertex of degree at most 𝑝.

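Observe a tree decomposition of 𝐺 of width 𝑝 in which no bag contains another bag.

It has some leaf 𝑣. The bag of this leaf has 𝑝 + 1 vertices at most. It has only one neighbor (since it’s a leaf).

Since no bag contains another bag, there is some vertex 𝑥 in the bag of 𝑣 that is not in the bag of its neighbor. By the connectivity constraint, 𝑥 appears in no other bag, so all neighbors of 𝑥 in 𝐺 lie in the bag of 𝑣, and the degree of 𝑥 is at most 𝑝.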

Fact: 𝑇𝑊(𝐺\𝑣) ≤ 𝑇𝑊(𝐺). Since we can always take the original tree decomposition and

remove the vertex.

Corollary: If 𝐺 has tree width 𝑝 then 𝐺 can be properly colored with 𝑝 + 1 colors (repeatedly remove a vertex of degree at most 𝑝, then color the vertices in reverse order).

This is consistent with trees: a tree has tree width 1, and indeed a tree is a bipartite graph and therefore can be colored by 2 colors.

A graph with no edges has tree width 0, since you can have each bag as a singleton of a vertex.

A complete graph on 𝑛 vertices has 𝑇𝑊 = 𝑛 − 1 (one bag holding all vertices).

A complete graph missing an edge ⟨𝑢, 𝑣⟩ has 𝑇𝑊 = 𝑛 − 2:

We can construct two bags, 𝐺 − 𝑢 and 𝐺 − 𝑣, each of size 𝑛 − 1, and connect them.

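Theorem: A connected graph 𝐺 (with at least one edge) has 𝑇𝑊 = 1 iff 𝐺 is a tree.

Assume 𝐺 has 𝑇𝑊 = 1. By the lemma it has a vertex 𝑣 of degree at most 1. Remove 𝑣. The remaining graph still has tree width at most 1, so we can continue…

We assume the graph is connected. But it doesn’t have a cycle! If it had a cycle, the vertices of the cycle could never be removed (each of them always has degree at least 2), and we would have a contradiction. A connected graph with no cycles is a tree.

Assume 𝐺 is a tree. Let’s construct the decomposition as follows:

Root 𝐺 at an arbitrary vertex. Create one bag per edge, holding its two end points, so every bag has the form {𝑣, parent(𝑣)}. Connect the bag {𝑣, parent(𝑣)} to the bag of the edge above parent(𝑣); the bags of the edges at the root are chained to one another.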

Series-Parallel graphs

TODO: Draw resistors…

Series-Parallel graphs are exactly all graphs with 𝑇𝑊 = 2.

Start from isolated vertices.

1) Add a vertex in series.

2) Add a self loop

3) Add an edge in parallel to an existing edge

4) Subdivide an edge

Series-Parallel⇒ 𝑇𝑊(2).

TODO: Draw

------ end of lesson 3

Graph Minor

A graph 𝐻 is a minor of graph 𝐺 if 𝐻 can be obtained from 𝐺 by:

(1) Removing vertices

(2) Removing edges

(3) Contracting edges

TODO: Draw graph

Definition: A sub-graph is a graph generated by removing edges and vertices.

Definition: An induced sub-graph is a graph on a subset of the vertices that includes all the edges of the original graph between them.

Contracting an edge is joining the two vertices of the edge together, such that the new vertex has edges to all the vertices the original vertices had.
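Edge contraction can be sketched on a graph stored as a dictionary from vertices to neighbor sets. The function name `contract_edge` and the convention that the merged vertex keeps the name `u` are assumptions for this example.

```python
def contract_edge(adj, u, v):
    """Contract edge (u, v): merge v into u and return a new graph."""
    merged = (adj[u] | adj[v]) - {u, v}      # neighbors of the new vertex
    new_adj = {}
    for x, nbrs in adj.items():
        if x in (u, v):
            continue
        # every edge that pointed to v now points to u (the set removes duplicates)
        new_adj[x] = {u if y == v else y for y in nbrs}
    new_adj[u] = merged
    return new_adj


c4 = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {2, 0}}
print(contract_edge(c4, 0, 1))   # a triangle on the vertices 0, 2, 3
```

Contracting one edge of a cycle on 4 vertices gives a triangle, which is why every graph with a cycle has a clique of 3 vertices as a minor.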

A graph is planar if and only if it contains neither 𝐾5 nor 𝐾3,3 as a minor.

TODO: Draw the forbidden graphs

A graph is a tree or a forest if it doesn’t contain a cycle ⇔ it doesn’t contain a clique of 3 vertices as a minor.

A graph is series-parallel if it does not contain 𝐾4 as a minor.

Theorem: There are planar graphs on 𝑛 vertices with tree width Ω(√𝑛)

Let’s look at an √𝑛 by √𝑛 grid

∙ − ∙ − ∙ − ∙
|   |   |   |
∙ − ∙ − ∙ − ∙
|   |   |   |
∙ − ∙ − ∙ − ∙
|   |   |   |
∙ − ∙ − ∙ − ∙

We will construct √𝑛 − 1 bags.

Bag 𝑖 contains columns 𝑖 and 𝑖 + 1, and the bags are connected in a path: bag 𝑖 is adjacent to bag 𝑖 + 1.

This is a tree decomposition by all the properties, and its width is 2√𝑛 − 1.

Vertex Separators

Vertex Separators: A set 𝑆 of vertices in a graph 𝐺 is a vertex separator if after removing 𝑆 from 𝐺, the graph decomposes into connected components of size at most $\frac{2n}{3}$.

TODO: Draw schematic picture of a graph

It means we can partition the connected components into two groups, neither of them with more than $\frac{2n}{3}$ vertices.

Every tree has a separator of size 1

Let 𝑇 be a tree. Pick an arbitrary root 𝑟.

𝑇(𝑣) = the size of the sub-tree of 𝑣 (according to 𝑟).

𝑇(𝑟) = 𝑛.

All leaves have size 1. So ∃𝑣 with $T(v) > \frac{2}{3}n$ and $T(u) \le \frac{2}{3}n$ for all children 𝑢 of 𝑣.

That 𝑣 is the separator.
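The argument translates into a short search: compute the sub-tree sizes, then walk down from the root while some child still has more than two thirds of the vertices. This is a sketch assuming adjacency lists over vertices 0, … , 𝑛 − 1; the function name is an assumption.

```python
def tree_separator(adj, root=0):
    """A vertex v with T(v) > 2n/3 whose children all have T(u) <= 2n/3."""
    n = len(adj)
    parent = [-1] * n
    order, stack = [], [root]
    seen = [False] * n
    seen[root] = True
    while stack:                          # preorder traversal from the root
        v = stack.pop()
        order.append(v)
        for u in adj[v]:
            if not seen[u]:
                seen[u] = True
                parent[u] = v
                stack.append(u)
    size = [1] * n                        # size[v] = T(v)
    for v in reversed(order):
        if parent[v] != -1:
            size[parent[v]] += size[v]
    v = root                              # T(root) = n > 2n/3
    while True:
        heavy = [u for u in adj[v] if u != parent[v] and 3 * size[u] > 2 * n]
        if not heavy:
            return v
        v = heavy[0]                      # at most one child can be this large


path9 = [[j for j in (i - 1, i + 1) if 0 <= j < 9] for i in range(9)]
print(tree_separator(path9))   # 2: removing it leaves parts of size 2 and 6
```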

If a graph 𝐺 has tree width 𝑝, then it has a separator of size 𝑝 + 1.

My summary:

Let 𝐷 be some tree decomposition.

Each bag has at most 𝑝 + 1 vertices. We can now find the separator of 𝐷 and consider its

𝑝 + 1 vertices as the separator of the graph 𝐺.

Note that when we calculate 𝑇(𝑣) for some 𝑣 ∈ 𝐷, we should count the number of vertices

inside the bags below it (not the number of bags).

His summary:

Consider a tree decomposition 𝑇 of width 𝑝.

Let 𝑟 serve as its root. And orient edges of 𝑇 away from 𝑟.

Pick 𝑆 to be the lowest bag whose sub-tree contains more than $\frac{2}{3}n$ vertices.

Every separator in the √𝑛 by √𝑛 grid is of size at least $\frac{\sqrt{n}}{6}$.

Why is this so?

Let’s assume otherwise. So there is a separator 𝑆 with fewer than $\frac{\sqrt{n}}{6}$ vertices.

Then more than $\frac{5\sqrt{n}}{6}$ rows do not contain a vertex from 𝑆.

Same for columns.

Let’s ignore all vertices in the same row or column with a vertex from 𝑆.

So we ignore fewer than $\frac{\sqrt{n}}{6} \cdot \sqrt{n} + \frac{\sqrt{n}}{6} \cdot \sqrt{n} = \frac{n}{3}$ vertices.

Claim: All other vertices are in the same connected component, since we can walk freely along their rows and columns (no member of 𝑆 lies in them). Therefore we have a connected component with more than $\frac{2}{3}n$ vertices: a contradiction.

If 𝐺 has tree width 𝑝, then 𝐺 is colorable by 𝑝 + 1 colors.

Every planar graph is 5-colorable.

Proof: A planar graph has a vertex of degree at most 5.

We will use a variation of inductive coloring.

Pick the vertex with the smallest degree.

Assume we have a vertex with a degree 5. These 5 neighbors cannot form a clique! (since

the graph is planar)

So one edge is missing. Contract the two vertices of that missing edge with the center vertex (the one with degree 5).

Fact: Contraction of edges maintains planarity. This is immediately seen when thinking of bringing the end points of the edge closer in the plane.

Now we color the new graph (after contraction). Then we give the two contracted neighbors the same color in the original graph (before contraction).

The five neighbors of the center then use at most 4 colors, so one of the 5 colors is left for the center.

Hadwiger’s conjecture: Every graph that does not contain 𝐾𝑡 as a minor can be colored with 𝑡 − 1 colors. (A generalization of the 4 colors theorem.)

If a graph has tree width 𝑝, then we can find its chromatic number in time 𝑂(𝑓(𝑝) ∙ 𝑛)

Note: The number of colors is definitely between 1 and 𝑝 + 1 (we know it can be colored by

𝑝 + 1 colors… The question is whether it can be colored with less).

For a value of 𝑡 ≤ 𝑝, is 𝐺 𝑡-colorable?

Theorem (without proof): Given a graph of tree width 𝑝, a tree decomposition with tree

width 𝑝 can be found in time linear in 𝑛 ∙ 𝑓(𝑝)

TODO: Draw the tree decomposition

For each bag, we will keep a table of size $t^{p+1}$ of all possible colorings of its vertices. For each entry in the table, we need to keep one bit that determines whether that coloring is legal with the bags below that bag.

We can easily do it for every leaf (0/1 depending on: “is this coloring legal with the sub-graph below the bag?”).

A coloring is legal if the sub-tree can be colored such that there is no collision of assignment of colors.

A family 𝐹 of graphs is minor-closed (or closed under taking minors) if whenever 𝐺 ∈ 𝐹 and

𝐻 is a minor of 𝐺, then 𝐻 ∈ 𝐹.

Characterizing 𝐹:

1) By some other property

2) List all graphs in the family (only if the family is finite)

3) List all graphs not in 𝐹 (if the complement of the family is finite)

4) List all forbidden minors

5) List a minimal set of forbidden minors

For planar graphs, this minimal set was 𝐾5 and 𝐾3,3

A list of forbidden minors is a list of graphs 𝐺1 , 𝐺2 , … such that no graph is a minor of another.

A conjecture by Wagner: the minor relation induces a “well quasi order” ⇔ there is no infinite antichain, i.e. no infinite collection of graphs such that no graph is a minor of another graph.

So a minimal set of forbidden minors is always finite!

The conjecture was proved by Robertson and Seymour.

----- End of lesson 4

Greedy Algorithms

When we try to solve a combinatorial problem, we have many options:

- Exhaustive Search

- Dynamic Programming

Now we introduce greedy algorithms as a new approach

Scheduling theory

Matroids

Interval Scheduling

There are jobs that need to be performed at certain times and that take a certain time: job 𝑗𝑖 starts at time 𝑠𝑖 and ends at time 𝑡𝑖.

𝑗1 : 𝑠1 − 𝑡1

𝑗2 : 𝑠2 − 𝑡2

⋮

We can’t schedule two jobs at the same time! We want to schedule as many jobs as possible.

TODO: Draw intervals

We can represent the problem as a graph in which two intervals share an edge if they intersect. Then we look for the maximum independent set.

Such a graph is called an interval graph.

There are classes of graphs in which we can solve Independent Set in polynomial time. One

such family of graphs is Perfect graphs.

Algorithm: Sort the intervals by ending time and greedily select the interval that ends soonest. Remove all the intervals that intersect it and repeat until no more intervals remain.
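A minimal sketch of the earliest-ending-time greedy. It assumes that intervals are (start, end) pairs and that an interval may start exactly when the previous one ends; both are assumptions of this example, as is the function name.

```python
def max_disjoint_intervals(intervals):
    """Greedy by earliest ending time; returns the chosen intervals."""
    chosen = []
    last_end = float("-inf")
    for s, t in sorted(intervals, key=lambda iv: iv[1]):
        if s >= last_end:          # does not intersect anything chosen so far
            chosen.append((s, t))
            last_end = t
    return chosen


jobs = [(1, 4), (3, 5), (0, 6), (5, 7), (3, 9), (5, 9),
        (6, 10), (8, 11), (8, 12), (2, 14), (12, 16)]
print(max_disjoint_intervals(jobs))  # [(1, 4), (5, 7), (8, 11), (12, 16)]
```

Skipping an interval that starts before `last_end` plays the role of "remove all intersecting intervals" in the description above.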

Why does it work?

Proof:

Suppose the greedy algorithm picked some intervals 𝑔1 , 𝑔2 , …

Consider the optimal solution that has the largest common prefix with the greedy one:

𝑜1 , 𝑜2 , …

Consider first index 𝑖 such that 𝑜𝑖 ≠ 𝑔𝑖

Since 𝑔𝑖 ends at the same time (or sooner) than 𝑜𝑖 we can generate a new optimal solution

that has 𝑔𝑖 instead of 𝑜𝑖 . Such a solution would still be optimal (same number of intervals)

and is legal ⇒ there exists a solution with a larger common prefix, a contradiction!

Interval Scheduling 2

Each job 𝐽𝑖 has 𝑤𝑖 > 0 and 𝑙𝑖 > 0.

𝑤 determines how important the job is. 𝑙 determines how much time it would take a CPU to perform the job.

The penalty 𝑝𝑠(𝑖) for job 𝑖 given a particular schedule 𝑠 is the ending time of 𝑖 × 𝑤𝑖

Total penalty is ∑𝑖 𝑝𝑠 (𝑖)

Find a schedule 𝑠 that minimizes the total penalty.

𝐽1 … 𝐽𝑖 𝐽𝑗 …

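Let’s flip two adjacent jobs:

𝐽1 , … 𝐽𝑗 𝐽𝑖 …

And suppose that by flipping the order the penalty grew.

The penalty for the prefix and the suffix stays the same!

So we should only consider what happens to the penalty of 𝐽𝑖 , 𝐽𝑗 as a result of the switch. If 𝑡 is the time at which the prefix ends, then before the switch:

$$w_i(t + l_i) + w_j(t + l_i + l_j)$$

and after the switch:

$$w_j(t + l_j) + w_i(t + l_j + l_i)$$

The penalty grew, so

$$w_j l_i < w_i l_j \;\Rightarrow\; \frac{w_j}{l_j} < \frac{w_i}{l_i}$$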

So this suggests the following algorithm:

Schedule jobs in decreasing order of $\frac{w_i}{l_i}$.

This is optimal.

Proof: Consider any other schedule which does not respect this order.

Then there must be some adjacent pair in which 𝐽𝑗 is scheduled right before 𝐽𝑖 although $\frac{w_j}{l_j} < \frac{w_i}{l_i}$. Then we can reverse the order of the two, and get a better schedule: a contradiction!
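The ratio rule can be compared against exhaustive search on a small instance. In this sketch jobs are (𝑤, 𝑙) pairs; the function names and the sample jobs are made up for the illustration.

```python
from itertools import permutations


def total_penalty(jobs, order):
    """Sum over jobs of (ending time) * (weight) for the given order."""
    t = 0
    total = 0
    for i in order:
        w, l = jobs[i]
        t += l                 # job i ends at time t
        total += w * t
    return total


def greedy_order(jobs):
    """Indices of the jobs in decreasing order of w / l."""
    return sorted(range(len(jobs)),
                  key=lambda i: jobs[i][0] / jobs[i][1], reverse=True)


jobs = [(3, 5), (1, 2), (4, 1), (2, 2)]         # (w, l) pairs
order = greedy_order(jobs)
print(order, total_penalty(jobs, order))        # [2, 3, 0, 1] 44
best = min(total_penalty(jobs, p) for p in permutations(range(len(jobs))))
print(best)                                     # 44: greedy matches brute force
```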

General Definition of Greedy-Algorithm solvable problems – Matroids

𝑆 = {𝑒1 , … , 𝑒𝑛 } (sometimes we have matroids in which the items represent edges)

𝐹 = a collection of subsets of 𝑆. (Short for “Feasible”. Often called “independent sets”).

We want 𝐹 to be hereditary.

Hereditary - If 𝑋 ∈ 𝐹, 𝑌 ⊂ 𝑋 ⇒ 𝑌 ∈ 𝐹

And we also need a cost function:

$c: S \to \mathbb{R}^+$

Find a set 𝑋 ∈ 𝐹 with maximum cost $\sum_{e_j \in X} c(e_j)$.

Example: Given a graph 𝐺, the items are the vertices of 𝐺. 𝐹 is the independent sets of 𝐺,

∀𝑥. 𝑐(𝑥) = 1.

𝑀𝐼𝑆(𝐺) is NP hard.

Definition: A hereditary family 𝐹 is a matroid, if and only if ∀𝑋, 𝑌 ∈ 𝐹 if |𝑋| > |𝑌| then ∃𝑒 ∈

𝑋, 𝑒 ∉ 𝑌 such that 𝑌 ∪ {𝑒} ∈ 𝐹.

Proposition: All maximal sets in a matroid have the same cardinality.

Suppose |𝑋| > |𝑌|. Then there is some item in 𝑋 that we can add to 𝑌 while keeping it feasible, and therefore 𝑌 is not maximal.

The maximal sets are called “bases”, and the cardinality of the maximal sets is called the “rank of the matroid”.

We have all sorts of terms: Matroids, Independent Sets, Basis, Rank. How are they related?

Consider a matrix. The items are the columns of the matrix.

The independent sets are the sets of linearly independent columns.

Note that it is hereditary. Because if a set of columns is linearly independent, then any subset of it is also linearly independent.

A basis is a maximal set of linearly independent columns; it spans the column space. And the rank is the same as in linear algebra.

Theorem: For every hereditary family 𝐹, the greedy algorithm finds the maximum solution

for every cost function 𝑐 ⇔ 𝐹 is a matroid

Greedy Algorithm: Iteratively, add to the solution the item 𝑒𝑗 with largest cost that still keeps

the solution feasible.

Proof: Assume that 𝐹 is not a matroid.

So (by definition) there are some sets 𝑋, 𝑌 ∈ 𝐹 such that |𝑋| > |𝑌| and ∀𝑒 ∈ 𝑋\𝑌. 𝑌 ∪ {𝑒} ∉ 𝐹.

Define the cost function:

∀𝑒 ∈ 𝑌. 𝑐(𝑒) = 1 + 𝜖, where $\epsilon < \frac{1}{n}$ if 𝑛 = |𝐹| (or something similar)

∀𝑒 ∈ 𝑋\𝑌. 𝑐(𝑒) = 1

∀𝑒 ∉ 𝑋 ∪ 𝑌. 𝑐(𝑒) = $\epsilon^2$

Since the elements of 𝑌 have the highest cost, the greedy algorithm selects them first, until all of 𝑌 is chosen. After that no element of 𝑋\𝑌 can be added, so greedy can only add elements of cost $\epsilon^2$. But selecting the elements of 𝑋 would give a higher total cost, so the greedy algorithm does not solve the problem!

Suppose 𝐹 is a matroid.

Since 𝑐: 𝑆 → 𝑅⁺, all weights are positive, so the optimal solution is a basis.

But likewise, the greedy solution is also a basis.

Sort items in solution in order of decreasing cost.

Suppose the items in the greedy solutions are 𝑔1 , … , 𝑔𝑟 where 𝑟 is the rank of the matroid.

And the items in the optimal solution are 𝑜1 , … , 𝑜𝑟 where 𝑟 is the rank of the matroid.

Suppose the maximum prefix is not the entire list of items. Suppose index 𝑗 is different. So

𝑜𝑗 ≠ 𝑔𝑗 but 𝑜𝑗−1 = 𝑔𝑗−1 .

But we know that:

𝑐(𝑔𝑗 ) ≥ 𝑐(𝑜𝑗 )

So let’s build another optimal solution.

Observe the set:

𝑜1 , … 𝑜𝑗−1 , 𝑔𝑗

Because this is a matroid, we definitely have some item in 𝑜1 , … , 𝑜𝑗−1 , 𝑜𝑗 , … , 𝑜𝑟 that can be

added and still have an element of 𝐹. We can continue doing so until the group is just as

large as 𝑜1 , … , 𝑜𝑗−1 , 𝑜𝑗 , … , 𝑜𝑟 . But all added items have to be of the set {𝑜𝑗 , … , 𝑜𝑟 }.

But since all items are ordered all of them must have a cost ≥ 𝑐(𝑜𝑗 ) and 𝑐(𝑜𝑗 ) ≤ 𝑐(𝑔𝑗 )

Greedy works also for general 𝑐, if the requirement is to find a basis.

Graphical Matroid

Given a graph 𝐺, the items are the edges of 𝐺, and 𝐹 is the forests of 𝐺: sets of edges that do not close a cycle. If 𝐺 is connected, bases ⇔ spanning trees.

Greedy algorithm: finds a maximum weight spanning tree.

We can also find a minimum spanning tree: Kruskal’s algorithm.
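The matroid greedy on the graphical matroid can be sketched with a union-find structure that answers "does this edge close a cycle?". Edges are (weight, u, v) triples over vertices 0, … , 𝑛 − 1; the function name and the `maximize` flag are assumptions for this example.

```python
def greedy_spanning_forest(n, weighted_edges, maximize=True):
    """Scan edges by weight and keep each one that does not close a cycle."""
    parent = list(range(n))

    def find(x):                       # representative of x's component
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    chosen = []
    for w, u, v in sorted(weighted_edges, reverse=maximize):
        ru, rv = find(u), find(v)
        if ru != rv:                   # the set stays a forest (stays in F)
            parent[ru] = rv
            chosen.append((w, u, v))
    return chosen


edges = [(1, 0, 1), (2, 1, 2), (3, 2, 3), (4, 3, 0), (5, 0, 2)]
print(sum(w for w, _, _ in greedy_spanning_forest(4, edges)))         # 11
print(sum(w for w, _, _ in greedy_spanning_forest(4, edges, False)))  # 6
```

With `maximize=False` the same loop scans edges in increasing weight, which is Kruskal's algorithm for a minimum spanning tree.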

----- End of lesson 5

Matroids: a hereditary family of sets: 𝑋 ∈ 𝐹, 𝑌 ⊂ 𝑋 ⇒ 𝑌 ∈ 𝐹

𝑋, 𝑌 ∈ 𝐹, |𝑋| > |𝑌| ⇒ ∃𝑒 ∈ 𝑋 𝑠. 𝑡. 𝑌 ∪ {𝑒} ∈ 𝐹

The natural greedy algorithm, given any cost function 𝑐: 𝑆 → 𝑅⁺, finds the independent set (a subset of 𝑆) of maximum total weight/cost.

The maximal sets in 𝐹 all have the same cardinality 𝑟 (the rank). The maximal sets are called “bases”.

Restriction: take 𝑆′ ⊂ 𝑆, and for every 𝑋 ∈ 𝐹 define 𝑋′ = 𝑋 ∩ 𝑆′.

This gives rise to a family 𝐹′.

If (𝑆, 𝐹) is a matroid, then so is (𝑆′, 𝐹′): the exchange property for |𝑋′| > |𝑌′| is inherited from 𝐹.

The rank of the new matroid is 𝑟′, and 𝑟′ ≤ 𝑟.

Dual of a Matroid

Given a matroid (𝑆, 𝐹), its dual (𝑆, 𝐹∗) is the collection of all sets 𝑋 such that 𝑆\𝑋 still contains a basis for (𝑆, 𝐹).

In the graphical matroid:

𝑆 edges of graph 𝐺.

𝐹 - forests of graph 𝐺

The bases are the spanning trees of the graph.

𝐹∗ - Any collection of edges whose removal leaves the graph connected.

Theorem: The dual 𝐹 ∗ of matroid 𝐹 is a matroid by itself.

Moreover, (𝐹 ∗ )∗ = 𝐹.

Proof:

We need to show that the dual is hereditary, but this is easy to see: if we remove an item from 𝑋, then 𝑆\𝑋 only grows, so it still contains a basis.

Exchange property: 𝑋, 𝑌 ∈ 𝐹∗, |𝑋| > |𝑌| ⇒ ∃𝑒 ∈ 𝑋\𝑌. 𝑌 ∪ {𝑒} ∈ 𝐹∗

TODO: Draw sets 𝑋 and 𝑌.

Let’s look at 𝑆′ = 𝑆 − (𝑋 ∪ 𝑌)

We know that (𝑆′, 𝐹′) is a matroid, and it has rank 𝑟′.

If 𝑟′ = 𝑟 we can move any item from 𝑋 to 𝑌 and we will still be in 𝐹∗.

So the only case we have a problem is when 𝑟′ < 𝑟 (it can’t be larger).

Let 𝐵′ be a basis for (𝑆′, 𝐹′). |𝐵′| = 𝑟′

𝑟 − 𝑟′ ≤ |𝑌\𝑋|

Complete 𝐵′ to a basis of 𝐹 using 𝐵𝑦 , a basis contained in 𝑆\𝑌 (it exists because 𝑌 ∈ 𝐹∗).

The number of elements of 𝐵𝑦 that we use is exactly 𝑟 − 𝑟′ ≤ |𝑌\𝑋| < |𝑋\𝑌|.

So some item in 𝑋\𝑌 wasn’t used!

We can take that item and add it to 𝑌, and still get a basis when 𝑌 plus that item is removed from 𝑆.

(𝐹∗)∗ = 𝐹 ?

Yes, because the bases of the dual are just the complements of the bases of the original matroid.

|𝑆| = 𝑛. Also all the bases of the dual are of size 𝑛 − 𝑟, so 𝑟∗ = 𝑛 − 𝑟.

How did we find the minimal spanning tree?

We sorted all edges by their weight, and added an edge to the spanning tree as long as it doesn’t close a cycle.

Minimum weight basis for 𝐹 = complement of maximum weight basis for 𝐹∗.

Graphical Matroids on Planar Graphs

TODO: Draw planar graphs

Planar dual: every face (a region closed by edges) is represented by a vertex. Two such vertices are connected if their faces share a common “side” (edge). The exterior is a single vertex.

A minimal cut set in the primal is a cycle in the dual.

The complement of a spanning tree in the primal is a spanning tree in the dual.

Assume we have a planar graph with 𝑣 vertices, 𝑓 faces, and 𝑒 edges. Counting the edges of the two spanning trees:

𝑣 − 1 + 𝑓 − 1 = 𝑒

We can always triangulate a planar graph, thus increasing the number of edges but keeping it planar.

In such graphs 3𝑓 = 2𝑒.

$$v - 1 + \frac{2e}{3} - 1 = e$$

$$v - 2 = \frac{e}{3}$$

$$e = 3v - 6$$

The average degree is

$$\frac{2e}{v} = 6 - \frac{12}{v}$$

= 6 minus something, so there is at least one vertex with degree 5 or less!

(𝑆, 𝐹1 ), (𝑆, 𝐹2 )

We can look at their intersection such that 𝑋 ∈ 𝐹1 and 𝑋 ∈ 𝐹2

Partition Matroid

Partition 𝑆 into:

𝑆1 , 𝑆2 , … , 𝑆𝑟

𝐹 consists of every set that contains at most one item from each part.

TODO: Draw stuff

A matching is a collection of edges where no two of them touch the same vertex.

The intersection of two partition matroids is the set of matchings in a bipartite graph.

In a bipartite graph the set of matchings is not a matroid.

TODO: Draw example graph.

Theorem: For every cost function 𝑐: 𝑆 → 𝑅 +, one can optimize over the intersection of two

matroids in polynomial time.

Intersections of 3 matroids.

TODO: Draw partitions for the matroid.

Given a collection of triples – find a set of maximum cardinality of disjoint triples. This

problem is 𝑁𝑃-Hard.

Things we will see:

1) Algorithm for maximum cardinality matching in bipartite graphs.

2) Algorithm for maximum cardinality matching in arbitrary graphs.

3) Algorithm for maximum weight matching in bipartite graphs.

There is also an algorithm for maximum weight matching in arbitrary graphs, but we will not

show it in class.

Vertex cover – a set of vertices that cover (touch) all edges.

Min vertex cover ≥ maximum matching

Min vertex cover ≤ 2 ∙maximal matching.

For bipartite graphs minV.C.=max matching.

Alternating Paths

TODO: Draw graphs

Vertices not in our current matching will be called “exposed”.

An alternating path connects two exposed vertices, and its edges alternate between not being in the matching and being in it.

Given an arbitrary matching 𝑀 and an alternating path 𝑃, we can get a larger matching by switching the matching along 𝑃.

Alternating forest: A collection of rooted trees. The roots are the exposed vertices, and the

trees are alternating.

TODO: Draw alternating forest

In a single step:

- connect exposed vertices

- Add two edges to a tree (some tree)

The procedure to extend a tree:

Pick an outer vertex, and connect it to

- exposed vertex – alternating path – extend matching

- outer vertex – different tree. So we can get from a root of some tree to the root of

another tree.

- Extend the tree (alternating forest) by two edges.

Claim: When I’m stuck I certainly have a maximum matching.

Proof by picture.

Select the inner vertices as the vertex cover.
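For bipartite graphs, the idea of repeatedly finding an alternating path between exposed vertices can be sketched as below. Note that this is a simpler depth-first variant (often attributed to Kuhn), not the alternating-forest procedure from the lesson; the function name and the input format (adjacency lists of the left side, number of right vertices) are assumptions.

```python
def max_bipartite_matching(adj_left, n_right):
    """Returns (size, match_right) where match_right[v] is v's partner or -1."""
    match_right = [-1] * n_right

    def try_augment(u, visited):
        # look for an alternating path from left vertex u to an exposed right vertex
        for v in adj_left[u]:
            if v in visited:
                continue
            visited.add(v)
            if match_right[v] == -1 or try_augment(match_right[v], visited):
                match_right[v] = u       # switch the matching along the path
                return True
        return False

    size = 0
    for u in range(len(adj_left)):       # every left vertex starts exposed
        if try_augment(u, set()):
            size += 1
    return size, match_right


print(max_bipartite_matching([[0, 1], [0], [1, 2]], 3))  # (3, [1, 0, 2])
print(max_bipartite_matching([[0], [0]], 1))             # (1, [0])
```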

--- end of lesson 6

Matchings

TODO: Draw an alternating forest

1) If you find an edge between exposed vertices then you can just add it to the

matching

2) An edge between two outer vertices (in different trees) – alternating path

3) Edge from outer vertex (the exposed vertices are considered as outer vertices) to an

unused matching edge

In cases 1 and 2 we restart building a forest

Gallai–Edmonds Decomposition

Outer vertices are denoted as 𝐶 (components)

Inner vertices are denoted as 𝑆 (separator)

The rest are denoted as 𝑅 (Rest)

We don’t have edges between 𝐶 and itself otherwise the algorithm would not stop

We also don’t have edges between 𝐶 and 𝑅 otherwise it wouldn’t have stopped

In short, we may have edges between 𝐶 and 𝑆, 𝑆 can have edges between it and itself, 𝑆 can

have edges between it and 𝑅 and 𝑅 can have edges between it and itself.

The only choice of matching we used are internal edges of 𝑅 and edges between C and 𝑆.

All vertices of 𝑅 are matched, all vertices of 𝑆 are matched (each to a vertex of 𝐶), so exactly |𝑆| vertices of 𝐶 participate in the matching and |𝐶| − |𝑆| vertices of 𝐶 remain exposed.

Another definition of 𝐶, 𝑆 and 𝑅:

𝐶 is the set of vertices that are left unmatched by some maximum matching.

𝑆 is all neighbors of 𝐶

𝑅 is the rest

The number of vertices not matched in a maximum matching is exactly |𝐶| − |𝑆|.

General Graph

In general graphs we might have odd cycles. So case 2 doesn’t work anymore (because an

edge can exist in the same tree)

We must have a new case:

2a) An edge between two outer vertices of the same tree.

In this case we get an odd cycle.

Contract the odd cycle! The contracted vertex is an outer vertex.

Suppose we had a graph 𝐺, and now we have 𝐺 ′ as the contracted graph.

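First note the following: if the odd cycle had length 2𝑘 + 1, then the size of the matching in 𝐺′ equals the size of the matching in 𝐺 minus 𝑘 (the cycle contains 𝑘 matching edges, and all of them disappear in the contraction).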

Lift matching from 𝐺 ′ to 𝐺 and restart building a forest (instead of just restart building a

forest).

Now we can see why we call the outer vertices 𝐶 - since they might represent components

(contracted odd cycles)

It can be seen that each component always represents an odd number of vertices.

Now, we might have components that might have edges to themselves, but not between

two components. So the separator really separates the different components from each

other and 𝑆.

Vertices of 𝑆 are all matched. Components in 𝐶 are either connected to some vertex in 𝑆 or

none (at most connected to one vertex in 𝑆)

But then this is the optimal matching! Which means that the algorithm is correct.

So the algorithm finds a matching that misses |𝐶| − |𝑆| vertices. But this is the minimal number of vertices missed by any matching, so our solution is the optimal one.

Theorem: If a graph has a set 𝑆 of vertices whose removal leaves 𝑡 components of odd size (and any number of components of even size), then the size of the maximum matching is at most

$$\frac{n - (t - |S|)}{2}$$

Minimum Weight Perfect Matching in Bipartite Graphs

Weights are non-negative, and we try to find the perfect matching with the minimal weight.

Let’s observe the variant where we search for a maximal weight.

If an edge is missing, we can add it with weight zero. Then a perfect matching always exists. And then we can apply a transformation on the maximal variant to make it a minimal variant. So the problem is well defined even for graphs that have no perfect matching.

We will use:

- Weight Shifting

- Primal-Dual method

In our case the primal problem – minimum weight covering of vertices by edges. But a

perfect matching is a covering problem with minimal weight.

The dual problem is the packing problem: Assign non-negative weights to the vertices such

that for every edge, the sum of the weights of its endpoints is at most the weight of the edge. Maximize the sum of the weights of the vertices.

TODO: Draw a bipartite graph

The primal is a minimization problem, so it’s at least as large as the dual.

For optimal solution there is equality! We will show that…

This is a theorem of Egerváry from 1931.

Let’s try to reduce the problem to an easier problem.

Let 𝑤𝑚 be the smallest weight of an edge.

Subtract 𝑤𝑚 from the weight of every edge.

Every perfect matching loses exactly 𝑛 ∙ 𝑤𝑚 of its weight (with 𝑛 vertices on each side, it has exactly 𝑛 edges).

As for the dual problem, we can start with the weight of every vertex as 0, and increase the weight of every vertex by 𝑤𝑚/2.

The dual we got is a feasible dual, and we decreased the

weight of the edges.

Let 𝑣 be some vertex. We can subtract 𝑤𝑣 from all of its edges. Since one of them has to be

in the final perfect matching, the perfect matching lost exactly 𝑤𝑣

And in the dual, we can increase the weight of 𝑣 by 𝑤𝑣

We will keep the following claims true:

- For every edge, the amount of weight it loses is exactly equal to the amount of weight gained by its endpoints.

- At any state, edges have non-negative weights.

Consider only edges of zero weight and find maximum matching.

There are two possibilities now:

- It is a perfect matching. In this case it is optimal.

- It’s not a perfect matching. We can observe the Gallai-Edmonds decomposition of the graph.

Let 𝜖 be the minimum of two quantities: the smallest weight of an edge between 𝐶 and 𝑅, and half of the smallest weight of an edge between 𝐶 and 𝐶 (such an edge loses 2𝜖, once from each endpoint).

For every vertex in 𝐶, we will add 𝜖 to its weight.

For every vertex in 𝑆, we will reduce its weight by 𝜖.

With respect to the matching, we did not change the weight. But we created one more zero

weight edge!

(Note that no edges became negative)

Either we increased the matching (for an edge between 𝐶 and 𝐶)

Or we increased 𝑆 (for an edge between 𝐶 and 𝑅, since the new connected component of 𝑅

now belongs to 𝑆 since a neighbor of some vertex in 𝐶)

Every time we make progress. So in 𝑂(𝑛²) weight shifting steps, we get a perfect matching of weight zero.

--- end of lesson 7

Algebraic Methods for Solving Algorithms

Search of Triangles

By using exhaustive search, we can go over all triples and do it in 𝑂(𝑛³).

The question is, can we do it better?

Multiplication of Two Complex Numbers

(𝑎 + 𝒾𝑏)(𝑐 + 𝒾𝑑) = 𝑎𝑐 − 𝑏𝑑 + 𝒾(𝑎𝑑 + 𝑏𝑐)

Assume the numbers are large!

Multiplications are rather expensive. Much more than additions and subtractions.

Let’s compute 𝑎𝑐, 𝑏𝑑, and then compute:

𝑎 + 𝑏 and then 𝑐 + 𝑑

And then calculate: (𝑎 + 𝑏)(𝑐 + 𝑑) = 𝑎𝑐 + 𝑎𝑑 + 𝑏𝑐 + 𝑏𝑑

But then we can use the previous values to extract 𝑎𝑑 + 𝑏𝑐

So the naïve way uses 4 products and 2 additions/subtractions. The new way uses 3

products and 5 additions/subtractions.
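The counting above can be checked with a small sketch. The function name `complex_mul_3` is made up for this illustration, and Python integers stand in for the "large numbers":

```python
def complex_mul_3(a, b, c, d):
    """Return (real, imag) of (a + ib)(c + id) using three products."""
    ac = a * c                  # product 1
    bd = b * d                  # product 2
    cross = (a + b) * (c + d)   # product 3: ac + ad + bc + bd
    real = ac - bd
    imag = cross - ac - bd      # what is left is ad + bc
    return real, imag
```

There are exactly 3 multiplications and 5 additions/subtractions: two to form the sums, one for the real part and two for the imaginary part.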

Fast Matrix Multiplication

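Suppose we have two 𝑛 × 𝑛 matrices:

$$A = \begin{bmatrix} a_{11} & a_{12} & \dots & a_{1n} \\ \vdots & & & \vdots \\ a_{n1} & \dots & \dots & a_{nn} \end{bmatrix}, \quad B = (b_{ij}), \quad M = AB = (m_{ij}), \quad m_{ij} = \sum_{k} a_{ik} b_{kj}$$

So we need 𝑛³ products to compute 𝑀.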

Unlike the previous problem, we want to reduce both the number of products and the

number of additions.

We will show a well-known algorithm by Strassen:

Assume for simplicity that 𝑛 is a power of 2. If not we can pad it with zeroes to the next

power of 2.

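Let’s partition 𝐴 and 𝐵 into blocks:

$$A = \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix}, \quad B = \begin{bmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{bmatrix}$$

And then:

$$M = \begin{bmatrix} A_{11}B_{11} + A_{12}B_{21} & A_{11}B_{12} + A_{12}B_{22} \\ A_{21}B_{11} + A_{22}B_{21} & A_{21}B_{12} + A_{22}B_{22} \end{bmatrix} = \begin{bmatrix} M_{11} & M_{12} \\ M_{21} & M_{22} \end{bmatrix}$$

So $T(n) = 8T(n/2) + O(n^2)$

After solving the recursion, we get:

$T(n) = O(8^{\log n}) = O(n^3)$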

If we could compute everything with 7 multiplications (instead of 8) the time would be:

$T(n) = 7T(n/2) + O(n^2) = O(7^{\log n}) = O(n^{\log 7}) \approx O(n^{2.81})$

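The table below shows, for every product of a block of 𝐴 (rows) with a block of 𝐵 (columns), which block of 𝑀 it contributes to. A dash means the product is not needed.

        𝐵11   𝐵12   𝐵21   𝐵22
𝐴11   𝑀11   𝑀12    -     -
𝐴12    -     -    𝑀11   𝑀12
𝐴21   𝑀21   𝑀22    -     -
𝐴22    -     -    𝑀21   𝑀22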

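Products:

Every product of a combination of 𝐴 blocks with a combination of 𝐵 blocks can be drawn as a sign pattern in the same table (rows 𝐴11, 𝐴12, 𝐴21, 𝐴22; columns 𝐵11, 𝐵12, 𝐵21, 𝐵22). For example:

+ 0 + +
− 0 − −
0 0 0 0
0 0 0 0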

What we want:

+ 0 0 0

0 0 + 0

0 0 0 0

0 0 0 0

If you think of pluses and minuses as +1 and −1 then the pattern we want is a matrix of rank 2.

As we can see, every multiplication gives a matrix of rank 1!

So we must use 7 matrices of rank 1 to generate the 4 target matrices (𝑀11, 𝑀12, 𝑀21, 𝑀22), each of rank 2.

𝑃1 = (𝐴11 + 𝐴21)(𝐵11 + 𝐵12):

+ + 0 0
0 0 0 0
+ + 0 0
0 0 0 0

𝑃2 = (𝐴12 + 𝐴22)(𝐵21 + 𝐵22):

0 0 0 0
0 0 + +
0 0 0 0
0 0 + +

We can observe that 𝑃1 + 𝑃2 = 𝑀11 + 𝑀12 + 𝑀21 + 𝑀22

In other words 𝑀22 = 𝑃1 + 𝑃2 − 𝑀11 − 𝑀12 − 𝑀21

So we used two products and obtained an expression for 𝑀22 in terms of the other three blocks. We only need to calculate those three blocks: 𝑀11, 𝑀12 and 𝑀21.

𝑃3 = 𝐴21(𝐵11 + 𝐵21):

0 0 0 0
0 0 0 0
+ 0 + 0
0 0 0 0

𝑃4 = (𝐴22 − 𝐴21)𝐵21:

0 0 0 0
0 0 0 0
0 0 − 0
0 0 + 0

So we have that 𝑀21 = 𝑃3 + 𝑃4

𝑃5 = 𝐴12(𝐵12 + 𝐵22):

0 0 0 0
0 + 0 +
0 0 0 0
0 0 0 0

𝑃6 = (𝐴11 − 𝐴12)𝐵12:

0 + 0 0
0 − 0 0
0 0 0 0
0 0 0 0

So we have that 𝑀12 = 𝑃5 + 𝑃6

So we already used 6 products, and generated 𝑀12 , 𝑀21 and are able to compute 𝑀22 later.

We are only missing 𝑀11 and only one multiplication left! Can we do it???

(the tension!)

𝑃7 = (𝐴12 + 𝐴21)(𝐵21 − 𝐵12):

0 0 0 0
0 − + 0
0 − + 0
0 0 0 0

𝑀11 = 𝑃7 + 𝑃1 − 𝑃3 − 𝑃6

We did it!! The world was saved!
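To double check the seven products, here is a minimal pure-Python sketch of the recursion. It assumes square matrices given as lists of lists whose size is a power of 2; all function names are made up for this illustration. The products follow the sign patterns above, with 𝑃4 = (𝐴22 − 𝐴21)𝐵21 and 𝑃7 = (𝐴12 + 𝐴21)(𝐵21 − 𝐵12) read off the patterns.

```python
def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def sub(X, Y):
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def split(X):
    """Return the four blocks X11, X12, X21, X22."""
    h = len(X) // 2
    return ([r[:h] for r in X[:h]], [r[h:] for r in X[:h]],
            [r[:h] for r in X[h:]], [r[h:] for r in X[h:]])

def naive_mul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def seven_product_mul(A, B):
    """Multiply two n x n matrices (n a power of 2) with 7 recursive products."""
    if len(A) == 1:
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    mul = seven_product_mul
    P1 = mul(add(A11, A21), add(B11, B12))
    P2 = mul(add(A12, A22), add(B21, B22))
    P3 = mul(A21, add(B11, B21))
    P4 = mul(sub(A22, A21), B21)
    P5 = mul(A12, add(B12, B22))
    P6 = mul(sub(A11, A12), B12)
    P7 = mul(add(A12, A21), sub(B21, B12))
    M21 = add(P3, P4)
    M12 = add(P5, P6)
    M11 = sub(sub(add(P7, P1), P3), P6)
    M22 = sub(sub(sub(add(P1, P2), M11), M12), M21)
    # Glue the four blocks back into one matrix.
    top = [r1 + r2 for r1, r2 in zip(M11, M12)]
    bottom = [r1 + r2 for r1, r2 in zip(M21, M22)]
    return top + bottom
```

The order of the factors matters because the blocks do not commute: every product has a combination of 𝐴 blocks on the left and a combination of 𝐵 blocks on the right.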

But you can even multiply matrices faster!

If you divide the matrix into 70 parts you can find algorithms that solve it in 𝑂(𝑛^2.79).

The best known result is about 𝑂(𝑛^2.37).

Often people specify that matrices can be multiplied in 𝑂(𝑛^𝜔) and use it as a subroutine.

The trivial lower bound is 𝑛² (every entry of the result has to be written).

Multiplying Big Numbers

Multiplying numbers of size 𝑛 takes 𝑂(𝑛²) by using the naïve approach.

You can break every number into two and apply an approach similar to the one used when

multiplying complex numbers.

The best running time is something like: 𝑛 ∙ log 𝑛 ∙ log log 𝑛…
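The "break every number into two" idea is usually called Karatsuba multiplication (the name is not used in class). A minimal sketch for non-negative Python integers, splitting by bits; the threshold 10 for the base case is an arbitrary choice for this illustration:

```python
def karatsuba(x, y):
    """Multiply non-negative integers with three recursive products per level."""
    if x < 10 or y < 10:
        return x * y
    half = max(x.bit_length(), y.bit_length()) // 2
    mask = (1 << half) - 1
    x_hi, x_lo = x >> half, x & mask   # x = x_hi * 2^half + x_lo
    y_hi, y_lo = y >> half, y & mask   # y = y_hi * 2^half + y_lo
    hi = karatsuba(x_hi, y_hi)
    lo = karatsuba(x_lo, y_lo)
    # Same trick as for complex numbers: one product gives both cross terms.
    cross = karatsuba(x_hi + x_lo, y_hi + y_lo) - hi - lo
    return (hi << (2 * half)) + (cross << half) + lo
```

The recursion is 𝑇(𝑛) = 3𝑇(𝑛/2) + 𝑂(𝑛), which solves to 𝑂(𝑛^log 3), roughly 𝑂(𝑛^1.585).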

Testing

How can you check that 𝐴 ∙ 𝐵 = 𝑀 is correct?

Probabilistic test:

Pick a uniformly random vector 𝑥 ∈ {0,1}ⁿ (for example 0011001…).

If you got the right answer:

𝐴∙𝐵∙𝑥 =𝑀∙𝑥

But this is rather easy to check! A multiplication by a vector takes 𝑂(𝑛²) time, and we can use associativity on the left to multiply 𝐵 by 𝑥 first: 𝐴 ∙ (𝐵 ∙ 𝑥).

Suppose the true answer is 𝑀, but we got 𝑀′

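So with a random vector, there’s a good probability that 𝑀𝑥 ≠ 𝑀′𝑥.

Since they are different, there must be some entry with 𝑀𝑖𝑗 ≠ 𝑀′𝑖𝑗.

The chance that we catch the bad matrix is at least ½: fix all coordinates of 𝑥 except 𝑥𝑗; at most one of the two possible values of 𝑥𝑗 makes row 𝑖 of (𝑀 − 𝑀′)𝑥 equal to zero. The full argument is too long for the margins of this summary.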
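This test is usually attributed to Freivalds (the name is not mentioned in class). A minimal sketch, assuming integer matrices as lists of lists; `freivalds`, `mat_vec`, `rounds` and `rng` are names made up for this illustration. Repeating the test drives the error probability down to at most 2^(−rounds).

```python
import random

def mat_vec(M, x):
    """Multiply a matrix by a vector in O(n^2) time."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def freivalds(A, B, M, rounds=20, rng=None):
    """Return False if A*B != M was detected, True if all rounds passed."""
    rng = rng if rng is not None else random.Random()
    n = len(A)
    for _ in range(rounds):
        x = [rng.randint(0, 1) for _ in range(n)]
        # Associativity: A(Bx) costs two matrix-vector products.
        if mat_vec(A, mat_vec(B, x)) != mat_vec(M, x):
            return False
    return True
```

A `True` answer only means "probably correct"; a `False` answer is always right.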

The inverse of a matrix can also be calculated using matrix multiplication, so finding an inverse takes 𝑂(𝑛^𝜔) as well.

Boolean Matrix Multiplication

There are settings in which subtraction is not allowed. One such setting is Boolean matrix

multiplication.

In this case, multiplication is an AND operation and addition is an OR operation.

So it’s clear why we can’t subtract.

In this case, we can replace OR with addition and AND with a regular multiplication, and compute the integer product 𝑀′. Then:

𝑀′𝑖𝑗 = 0 ⇒ 𝑀𝑖𝑗 = 0

𝑀′𝑖𝑗 > 0 ⇒ 𝑀𝑖𝑗 = 1

If you don’t like big numbers, you can do everything modulo some prime 𝑝 > 𝑛 (the entries of 𝑀′ are at most 𝑛, so nothing non-zero becomes zero).

But we can think of worst cases such that things stop working. Such as when the OR is

replaced by XOR.

Back to Search of Triangles

Let 𝐴 be the adjacency matrix of the graph, with rows and columns indexed by the vertices 𝑣1, … , 𝑣𝑛:

𝐴𝑖𝑗 = 1 ⇔ (𝑖, 𝑗) ∈ 𝐸

Otherwise it’s zero.

Let’s look at 𝐴².

(𝐴²)𝑖𝑗 > 0 if there’s a path of length 2 between vertices 𝑖 and 𝑗.

So 𝐴² is the matrix of the number of paths of length exactly 2.

(𝐴ᵗ)𝑖𝑗 = # length 𝑡 paths between 𝑖 and 𝑗

So (𝐴³)𝑖𝑖 is the number of paths of length 3 from 𝑖 to itself.

But every such path is a triangle!

So 𝑡𝑟𝑎𝑐𝑒(𝐴³) = 6 ∙ (# triangles in 𝐺), since every triangle is counted from each of its 3 vertices, in each of the 2 directions.
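A small sketch of the trace formula, assuming a simple undirected graph given as a 0/1 adjacency matrix. The plain cubic-time `mat_mul` here only stands in for a fast matrix multiplication routine; both function names are made up for this illustration.

```python
def mat_mul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def count_triangles(adj):
    """Number of triangles = trace(A^3) / 6."""
    cube = mat_mul(mat_mul(adj, adj), adj)
    return sum(cube[i][i] for i in range(len(adj))) // 6
```

For the complete graph on 4 vertices the trace is 24, which gives 4 triangles.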

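And you can calculate 𝐴³ in 𝑂(𝑛^𝜔)!

With Boolean multiplication, and with a self-loop added at every vertex, (𝐴ᵗ)𝑖𝑗 = 1 ⇔ there is a path of length at most 𝑡 from 𝑖 to 𝑗.

So 𝐴ⁿ actually encodes all the reachability information of the graph!

So by repeated squaring, log 𝑛 multiplications of cost 𝑂(𝑛^𝜔) each find the transitive closure of the graph.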
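The closure computation can be sketched with repeated Boolean squaring. This assumes a (possibly directed) graph as a 0/1 adjacency matrix and adds self-loops first, so that powers mean "path of length at most 𝑡". The cubic `bool_mul` again only stands in for a fast multiplication; the names are made up for this illustration.

```python
def bool_mul(X, Y):
    """Boolean matrix product: AND for multiplication, OR for addition."""
    n = len(X)
    return [[any(X[i][k] and Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def reachability(adj):
    """R[i][j] is True iff there is a path (possibly empty) from i to j."""
    n = len(adj)
    R = [[bool(adj[i][j]) or i == j for j in range(n)] for i in range(n)]
    length = 1                      # R covers paths of length <= length
    while length < n:
        R = bool_mul(R, R)          # squaring doubles the covered length
        length *= 2
    return R
```

Only about log 𝑛 squarings are performed, because a shortest path never needs more than 𝑛 − 1 edges.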

--- end of lesson 8

Matrices and Permanents

Permanent: $\mathrm{per}(A) = \sum_{\sigma} \prod_{i=1}^{n} a_{i,\sigma(i)}$

Determinant: $\det(A) = \sum_{\sigma} \mathrm{sgn}(\sigma) \prod_{i=1}^{n} a_{i,\sigma(i)}$

det(𝐴) = 0 ⇔ 𝐴 is singular

Valiant showed that: Computing the permanent is #𝑃 complete. So 𝑁𝑃 hard.

Even for 0,1 matrices.

TODO: Draw a neighbor matrix of a bipartite graph.

Permanent of a 0/1 matrix 𝐴 is the same as the number of perfect matchings in the

associated bipartite graph.

𝑃𝑒𝑟(𝐴) (𝑚𝑜𝑑 2) = det(𝐴) (𝑚𝑜𝑑 2)

(because subtraction and addition are the same!)

𝑃𝑒𝑟(𝐴) (𝑚𝑜𝑑 3)

Suppose 𝑛 = 30.

The number of permutations is $30! \approx (30/e)^{30}$.

There is an algorithm that is still exponential but substantially faster.

It was suggested by Ryser, and its running time is around 2ⁿ. So in the case of 30, it’s 2³⁰ which is not so bad.

The trick is to use the “Inclusion-Exclusion” principle.

𝑛 × 𝑛 matrix 𝐴

𝑆 non-empty set of columns.

𝑅𝑖 (𝑆) – Sum of entries in row 𝑖 and columns from 𝑆.

$$\mathrm{Per}(A) = \sum_{S} (-1)^{n-|S|} \prod_{i=1}^{n} R_i(S)$$

Since we go over all 𝑆, we have 2ⁿ terms. The running time is something around 2ⁿ times some polynomial.
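A direct sketch of the formula, going over all non-empty column sets 𝑆. The function name `permanent_ryser` is made up for this illustration, and the code makes no attempt at the usual speed-ups.

```python
from itertools import combinations

def permanent_ryser(A):
    """Permanent of a square matrix by inclusion-exclusion over column sets."""
    n = len(A)
    total = 0
    for size in range(1, n + 1):
        sign = (-1) ** (n - size)
        for cols in combinations(range(n), size):
            prod = 1
            for row in A:
                prod *= sum(row[j] for j in cols)   # R_i(S)
            total += sign * prod
    return total
```

For the 2 × 2 matrix with rows (1, 2) and (3, 4) the three terms are −3, −8 and +21, giving 10 = 1 ∙ 4 + 2 ∙ 3.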

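$$\mathrm{Per}\begin{pmatrix} x_{11} & \dots & x_{1n} \\ \vdots & \ddots & \vdots \\ x_{n1} & \dots & x_{nn} \end{pmatrix} = \sum_{\sigma} \prod_{i} x_{i,\sigma(i)}$$

This is a multilinear polynomial with 𝑛! monomials. Each monomial is a product of variables. We have 𝑛² variables.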

This applies both to the determinant and the permanent.

In Ryser’s formula we only get monomials with 𝑛 variables – 1 from each row.

In the original definition we also get monomials with 𝑛 variables – 1 from each row. But each of them must be from a different column.

First let’s see that all the terms that should be in Ryser’s formula are actually there.

The monomial ∏ 𝑥𝑖,𝜎(𝑖) (product over 𝑖 = 1, … , 𝑛) uses all the columns, so it appears only for 𝑆 = all columns, with sign +1.

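Now take a monomial that should not be there. This is a monomial that includes 5 variables (one from each row) but only 3 columns:

𝑥11 𝑥21 𝑥33 𝑥43 𝑥55

It has 𝑛 variables, and its set of columns is 𝐶 = {1, 3, 5}.

The monomial appears in the term of 𝑆 exactly when 𝐶 ⊆ 𝑆, each time once and with sign (−1)^(𝑛−|𝑆|). So its total coefficient is

$$\sum_{S \,:\, C \subseteq S} (-1)^{n-|S|} = 0$$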

TODO: Draw many partially drawn matrices.

For every variable independently, substitute a random value from {0, … , 𝑝 − 1}, where 𝑝 > 𝑛² is prime. Then compute the determinant.

And we can even do the computations modulo 𝑝.
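One standard use of this random substitution (assumed here, since the drawing is missing from the notes) is to test whether a bipartite graph has a perfect matching: the symbolic determinant is a non-zero polynomial exactly when a perfect matching exists. A minimal sketch, with made-up names and with the Mersenne prime 2³¹ − 1 as an arbitrary choice of 𝑝:

```python
import random

def det_mod_p(M, p):
    """Determinant modulo a prime p by Gaussian elimination."""
    M = [[v % p for v in row] for row in M]
    n = len(M)
    det = 1
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col]), None)
        if pivot is None:
            return 0
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            det = -det                      # a row swap flips the sign
        det = det * M[col][col] % p
        inv = pow(M[col][col], p - 2, p)    # inverse by Fermat's little theorem
        for r in range(col + 1, n):
            factor = M[r][col] * inv % p
            for c in range(col, n):
                M[r][c] = (M[r][c] - factor * M[col][c]) % p
    return det % p

def probably_has_perfect_matching(adj, p=2_147_483_647, rng=None):
    """adj[i][j] = 1 iff left vertex i is adjacent to right vertex j."""
    rng = rng if rng is not None else random.Random()
    sub = [[rng.randrange(p) if a else 0 for a in row] for row in adj]
    return det_mod_p(sub, p) != 0
```

The error is one-sided: if there is no perfect matching the answer is always `False`, and if there is one the answer is `True` except with small probability.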

Lemma: For every non-zero multilinear polynomial in 𝑛² variables, if one substitutes random values from 0, … , 𝑝 − 1 and computes modulo a prime 𝑝, the answer is 0 with probability at most 𝑛²/𝑝.

Proof by induction on the number of variables. For one variable, 𝑎𝑥 + 𝑏 = 0 (𝑚𝑜𝑑 𝑝) happens with probability 1/𝑝.

Suppose the probability is at most 𝑘/𝑝 for 𝑘 variables. We want to show it’s at most (𝑘 + 1)/𝑝 for 𝑘 + 1 variables.

We can look at the new polynomial as

𝑝(𝑥1, … , 𝑥𝑘+1) = 𝑥𝑘+1 ∙ 𝑝1(𝑥1, … , 𝑥𝑘) + 𝑝2(𝑥1, … , 𝑥𝑘)

The event that 𝑝1 evaluates to zero happens with probability at most 𝑘/𝑝 (by induction), and otherwise there’s only one possible value of 𝑥𝑘+1 such that everything is zero, which has probability 1/𝑝. So the total probability is at most (𝑘 + 1)/𝑝. (If 𝑝1 is identically zero, apply the induction hypothesis to 𝑝2 instead.)

Kasteleyn: Computing the number of perfect matchings in planar graphs can be done in polynomial time.

Kirchhoff: Matrix-tree theorem. Counts the number of spanning trees in a graph.

We have a graph 𝐺 and we want the number of spanning trees.

Laplacian of 𝐺 – 𝐿.

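The rows and columns of 𝐿 are indexed by the vertices 𝑣1, … , 𝑣𝑛. The diagonal holds the degrees 𝑑1, … , 𝑑𝑛 and every other entry is −1 or 0:

𝑙𝑖,𝑗 = 𝑙𝑗,𝑖 = −1 if ⟨𝑖, 𝑗⟩ ∈ 𝐸, and 0 otherwise

𝑙𝑖,𝑖 = degree of vertex 𝑖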

TODO: Draw the graph and its corresponding matrix

(though we got the point)

The algorithm: Generate the Laplacian matrix of the graph. Delete some row and the column with the same index, and calculate the determinant of what remains. This is the number of spanning trees of the graph.
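The algorithm fits in a few lines. A minimal sketch, assuming vertices numbered 0, … , 𝑛 − 1 and an undirected edge list; it always deletes row and column 0 and uses exact fractions for the determinant. The function names are made up for this illustration.

```python
from fractions import Fraction

def determinant(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    M = [[Fraction(v) for v in row] for row in M]
    n = len(M)
    det = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            det = -det
        det *= M[col][col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n):
                M[r][c] -= factor * M[col][c]
    return det

def count_spanning_trees(n, edges):
    """Matrix-tree theorem: determinant of the Laplacian minus one row/column."""
    L = [[0] * n for _ in range(n)]
    for u, v in edges:
        L[u][u] += 1
        L[v][v] += 1
        L[u][v] -= 1
        L[v][u] -= 1
    minor = [row[1:] for row in L[1:]]   # delete row 0 and column 0
    return int(determinant(minor))
```

For example, a triangle with one extra pendant vertex has 3 spanning trees, and the complete graph on 4 vertices has 16.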

Given 𝐺, Direct its edges arbitrarily. Create the incidence matrix of the graph.

      𝑒1   𝑒2   𝑒3   𝑒4
𝑣1   +1    0    0    0
𝑣2   −1   −1   +1    0
𝑣3    0    0   −1   −1
𝑣4    0   +1    0   +1

For each edge you put a +1 for the vertex it is entering and −1 for the vertex it leaves.

Denote this matrix 𝑀.

If we multiply 𝑀 ∙ 𝑀𝑇 we get the Laplacian.

The incidence matrix has special properties. One of them is that it is “totally unimodular”.

Totally unimodular: Every square sub-matrix has determinant either +1, −1 or 0.

Theorem: Every matrix in which every column has at most a single +1, at most a single −1

and rest of the entries are zero, is totally unimodular.

--- end of lesson

Given a graph 𝐺 we have its Laplacian denoted 𝐿.

Also 𝑀 is the vertex-edge incidence matrix.

We know that 𝑀𝑀𝑇 = 𝐿

Another thing we said about 𝑀 is that it’s totally unimodular. Which means that every square submatrix has determinant either 0, +1 or −1.

Wlog, we always look at the case where 𝑚 ≥ 𝑛 (𝑚 is the number of edges, 𝑛 is the number

of vertices).

If the number of edges is smaller than the number of vertices, either we can use all edges as

a spanning tree or we don’t have a spanning tree.

𝑟𝑎𝑛𝑘(𝑀) ≤ 𝑛 − 1

Let’s observe the matrix transposed:

TODO: Draw a transposed incidence matrix.

If we take 𝑥 = [1 … 1]ᵀ then 𝑥 ∈ ker 𝑀ᵀ, because every column of 𝑀 has one +1 and one −1 and so sums to zero.

What do we know about a submatrix 𝑀𝑆 that keeps only a subset 𝑆 of 𝑛 − 1 edges (but all the vertices)?

If 𝑆 is a spanning tree, then the rank is 𝑛 − 1.

Otherwise, 𝑟𝑎𝑛𝑘 < 𝑛 − 1.

Let 𝑀𝑆−𝑖 denote 𝑀𝑆 with row 𝑖 removed, which is an (𝑛 − 1) × (𝑛 − 1) matrix. Then

$\det(M_{S-i} \cdot M_{S-i}^T) = \det(M_{S-i}) \cdot \det(M_{S-i}^T) = 1$ if 𝑆 is a spanning tree and 0 otherwise.

Denote 𝑀𝑆−𝑖 = 𝑀′𝑆

So the number of spanning trees = ∑𝑆 det 𝑀′𝑆 ∙ det 𝑀′𝑆

Binet-Cauchy Formula for computing a determinant:

Let 𝐴 and 𝐵 be two matrices. Say 𝐴 is 𝑟 × 𝑛 and 𝐵 is 𝑟 × 𝑛, 𝑛 > 𝑟.

Then det 𝐴 ∙ 𝐵𝑇 = ∑𝑆(det 𝐴𝑆 )(det 𝐵𝑆 )

(𝑆 ranges over all subsets of 𝑟 columns)

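If 𝑟 = 𝑛 then det(𝐴 ∙ 𝐵ᵀ) = (det 𝐴) ∙ (det 𝐵)

If 𝑟 > 𝑛 the determinant is zero. So it’s not interesting.

If 𝑛 > 𝑟: set 𝐴 = 𝐵 = 𝑀−𝑖 (the incidence matrix with row 𝑖 removed, so it has 𝑛 − 1 rows and one column per edge). Then 𝐴 ∙ 𝐵ᵀ is the Laplacian with row 𝑖 and column 𝑖 deleted, the sum on the right is the number of spanning trees, and everything comes out.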

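Proof of the Binet-Cauchy formula:

Let Δ be the 𝑛 × 𝑛 diagonal matrix with the variables 𝑥1, … , 𝑥𝑛 on its diagonal:

$$\Delta = \begin{bmatrix} x_1 & & 0 \\ & \ddots & \\ 0 & & x_n \end{bmatrix}$$

$$\det(A \Delta B^T) = \sum_{S} (\det A_S)(\det B_S) \prod_{i \in S} x_i$$

We will prove this, and this is a stronger statement than the one we need to prove (substitute 𝑥𝑖 = 1 for every 𝑖).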

The answer 𝐴Δ𝐵𝑇 is an 𝑟 × 𝑟 matrix:

Every entry in the matrix is a linear polynomial in the variables 𝑥𝑖. Linear because we never multiply an 𝑥𝑖 by an 𝑥𝑗. In addition we don’t have any constant terms.

What is the determinant?

det(𝐴Δ𝐵ᵀ) is a homogeneous polynomial of degree 𝑟.

We can take any monomial of degree 𝑟, and see that the coefficients in both cases are the

same.

On the right hand side we don’t have any monomials of degree higher than 𝑟 or lower than

𝑟. We need to prove they are zero on the left side.

𝑇 = a set of fewer than 𝑟 variables.

Substitute 0 for all variables which are not in 𝑇.

𝐴Δ′ 𝐵𝑇

BAAAAHHH. Can’t write anything in this class!

Spectral Graph Theory

NP-Hard problems on random instances.

Like 3𝑆𝐴𝑇, Hamiltonicity, k-clique, etc.

We sometimes want to look at max-clique (which is the optimization version of k-clique).

We can also look at the optimization version of MAX-3SAT.

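3XOR or 3LIN:

(𝑥1 ⊕ ¬𝑥2 ⊕ 𝑥3) …

Deciding whether all the clauses can be satisfied is in 𝑃 (it is a system of linear equations modulo 2). But if we look for an assignment that satisfies the maximal number of clauses we get an NP-Hard problem.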

Motivations:

- Average case (good news)

- Develop algorithmic tools

- Cryptography

- Properties of random objects

Heuristics:

3SAT: “yes” – Find a satisfying assignment in many cases (yes/maybe).

Refutation/“no” – find a “witness” that guarantees that the formula is not satisfiable (no/maybe).

Hamiltonicity

𝐺𝑛,𝑝 - Erdős-Rényi random graph model:

Between any two vertices independently, place an edge with probability 𝑝.

For example 𝐺𝑛,𝑝 with 𝑝 = 5/𝑛. The 𝑝 doesn’t have to be a constant.

Process of putting edges in the graph at random one by one.

3SAT: At first, a short list of clauses is satisfiable. But as the formula becomes larger, it becomes less likely that it is satisfiable.

What is that point?

The conjecture is that it is at about 4.3 ∙ 𝑛 clauses.

There is a proof for 3.5 ∙ 𝑛 (as a lower bound on that point).

However, there is a proof that this is a sharp transition.

For refutation, the condition is exactly opposite. The longer the formula, the easier it is to

find a witness for “no”.

--- end of lesson

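Adjacency matrix 𝐴: a symmetric 0/1 matrix with zeroes on the diagonal, where 𝑎𝑖,𝑗 = 1 ⇔ (𝑖, 𝑗) ∈ 𝐸.

For regular graphs, there is essentially no difference between working with the adjacency matrix and with the Laplacian (𝐿 = 𝑑𝐼 − 𝐴, so they have the same eigenvectors).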

For irregular graphs, we do have differences and we will not get into it.

Properties: Non-negative, symmetric, connected ⇔ irreducible

Irreducible means we cannot represent it as a block matrix such that the upper right block is

zeroes.

𝜆1 ≥ 𝜆2 ≥ ⋯ ≥ 𝜆𝑛 are all real, we might have multiplicities so we also allow equality.

𝑣1 , … , 𝑣𝑛 eigenvectors.

If we take two distinct eigenvalues 𝜆𝑖 ≠ 𝜆𝑗 then 𝑣𝑖 ⊥ 𝑣𝑗

ℝ𝑛 has an orthogonal basis using eigenvectors of 𝐴.

If we look at the eigenvector that corresponds to the largest eigenvalue, then the

eigenvector is positive (all its elements are positive).

𝜆1 > 𝜆2 ,

𝜆1 ≥ |𝜆𝑛 |

(The last 2 properties are only true if the graph is connected)

𝑥 is an eigenvector with eigenvalue 𝜆 if 𝐴𝑥 = 𝜆𝑥

In graphs, if we have the adjacency matrix and we multiply it by 𝑥, the entries of 𝑥 correspond to values we give to the vertices of the graph.

TODO: Draw graph.

If some 𝑥 is an eigenvector of 𝐴 with eigenvalue 𝜆, it means that for every vertex, the sum of the values of its neighbors is 𝜆 times its own value.

𝑡𝑟𝑎𝑐𝑒𝐴 = 0

∑ 𝜆𝑖 = 𝑡𝑟𝑎𝑐𝑒

Since 𝜆1 > 0 ⇒ 𝜆𝑛 < 0

Bipartite Graphs

Let 𝑥 be an eigenvector with non-zero eigenvalue 𝜆.

TODO: Draw bipartite graph

By flipping the sign of 𝑥 on one side of the bipartite graph, we get a new eigenvector and it has eigenvalue −𝜆.

This is a property only of bipartite graphs (as long as we’re dealing with connected graphs).

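Consider a non-zero vector 𝑥. Then observe:

$$\frac{x^T A x}{x^T x}$$

This is called the Rayleigh quotient.

If 𝑥 is an eigenvector with eigenvalue 𝜆, then

$$\frac{x^T A x}{x^T x} = \frac{x^T \lambda x}{x^T x} = \lambda \cdot \frac{x^T x}{x^T x} = \lambda$$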

Let 𝑣1 , … , 𝑣𝑛 be an orthonormal eigenvector basis of ℝ𝑛 .

In this case:

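$x = a_1 v_1 + \dots + a_n v_n$ where $a_i = \langle x, v_i \rangle$

$A \left( \sum a_i v_i \right) = \sum a_i \lambda_i v_i$

$$\frac{x^T A x}{x^T x} = \frac{\left( \sum a_i v_i \right)^T \left( \sum a_i \lambda_i v_i \right)}{\sum a_i^2} = \frac{\sum a_i^2 \lambda_i}{\sum a_i^2}$$

This is like a weighted average where every 𝜆𝑖 gets the weight 𝑎𝑖².

So this expression cannot be larger than 𝜆1 and cannot be smaller than 𝜆𝑛.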

Suppose we know that 𝑥 ⊥ 𝑣1 ?

It means that the result is a weighted average of all the eigenvalues except for 𝜆1, so it is at most 𝜆2.

Another way of getting the same thing:

𝑥ᵀ𝐴𝑥 = ∑𝑖,𝑗 𝑎𝑖𝑗 𝑥𝑖 𝑥𝑗 (the sum of the element-wise multiplication of the matrix 𝐴 and the matrix 𝑥 ∙ 𝑥ᵀ).

Large max cut ⇒ 𝜆𝑛 is close to −𝜆1

This can be shown using Rayleigh quotients.

But the interesting thing is that the opposite direction is not so true!

We looked at the relation between 𝜆1 and 𝜆𝑛 . Now let’s look at the relation between 𝜆1 and

𝜆2 .

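If a graph is 𝑑-regular, the all-ones vector is an eigenvector with eigenvalue 𝜆1 = 𝑑.

If we have a disconnected 𝑑-regular graph, 𝜆1 and 𝜆2 are equal! The vector that is 1 on one connected component and 0 elsewhere is an eigenvector with eigenvalue 𝑑, and there is one such vector for every component.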

So if they are equal, the graph is disconnected!

What happens if they are close to being equal?

𝜆2 close to 𝜆1 , then 𝐺 close to being disconnected!

Suppose we have a graph.

TODO: Draw the graph we are supposed to have.

We perform a random walk on a graph. What is the mixing time?

If a token starts at a certain point, what is the probability it will be in any of the other

vertices? If it turns quickly into a uniform distribution then the mixing is good.

Small cuts are obstacles of fast mixing.

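If we started on the first vertex, we can say we started with the (probabilities) vector:

[1 0 … 0] = ∑ 𝑎𝑖 𝑣𝑖

One step of the random walk on a 𝑑-regular graph multiplies this vector by 𝐴/𝑑.

If I do 𝑡 steps of the random walk it’s:

$$\frac{A^t}{d^t} \left( \sum a_i v_i \right) = \frac{\sum a_i (\lambda_i)^t v_i}{d^t}$$

If 𝜆1 ≫ |𝜆𝑖| for every 𝑖 ≠ 1 then we quickly get a uniform distribution over all vertices.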

𝝀𝟏 for non-regular graphs

Suppose the graph has maximum degree Δ and average degree 𝑑.

So we know 𝜆1 ≤ Δ

But this is always true: 𝜆1 ≥ 𝑑

Take 𝑥ᵀ = (1, 1, … , 1) and consider the Rayleigh quotient:

$$\lambda_1 \ge \frac{x^T A x}{x^T x} = \frac{\text{sum over all entries of } A}{n} = \frac{2m}{n} = \text{average degree}$$

c-core of a graph is the largest subgraph in which every vertex has degree ≥ 𝑐.

Let 𝑐∗ be the largest 𝑐 for which the graph 𝐺 has a c-core.

In 𝑑-regular graphs 𝑐∗ = 𝑑.

𝑐∗ ≤ 𝜆1

𝑣 is the eigenvector associated with 𝜆1. Sort vertices by their value in the vector 𝑣.

So 𝑣1 is the largest value and 𝑣𝑛 is the smallest. And all values are positive (according to the Perron-Frobenius theorem).

The graph cannot have a (𝜆1 + 1)-core.

(In any subgraph, the vertex with the smallest value cannot have more than 𝜆1 neighbors inside the subgraph: all of them have values at least as large as its own, and 𝜆1 times its value equals the sum of the values of all its neighbors.)

If we look at a star graph:

Δ = 𝑛 − 1

𝑑 is a bit less than 2

And the largest eigenvalue is 𝜆1 = √(𝑛 − 1)

We can give the center the value √(𝑛 − 1) and the rest the value 1.

Sometimes when we have a graph that is nearly regular but has a small number of vertices

with a high degree, we should remove the vertices of high degree since they skew the

spectrum!

Let’s use 𝛼(𝐺) to denote the size of the maximum independent set in the graph.

And let’s assume that 𝐺 is 𝑑-regular.

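$$\alpha(G) \le -\frac{n \lambda_n}{d - \lambda_n}$$

For example, if 𝐺 is bipartite then 𝜆𝑛 = −𝜆1 = −𝑑 and the bound gives

$$\alpha(G) \le \frac{n \lambda_1}{2 \lambda_1} = \frac{n}{2}$$

Proof: $\lambda_n \le \frac{x^T A x}{x^T x}$ for every vector 𝑥.

Let 𝑆 be the largest independent set in 𝐺, and let 𝑠 = |𝑆|.

TODO: Draw 𝑥

Define 𝑥 so that the entries of the vertices of 𝑆 equal 𝑛 − 𝑠 and the entries of the other vertices equal −𝑠.

Order the vertices so that 𝑆 comes first. The block of 𝐴 inside 𝑆 is zero, each of the two blocks between 𝑆 and the rest contains 𝑑 ∙ 𝑠 ones, and the block inside the rest contains 𝑑 ∙ (𝑛 − 2𝑠) ones. The matching blocks of 𝑥 ∙ 𝑥ᵀ have the constant entries (𝑛 − 𝑠)², −𝑠(𝑛 − 𝑠) and 𝑠². Therefore

$$\lambda_n \le \frac{x^T A x}{x^T x} = \frac{s^2 (nd - 2ds) - 2s(n-s)ds}{s(n-s)^2 + (n-s)s^2} = \frac{s^2 nd - 2ds^3 - 2s^2 nd + 2ds^3}{s(n-s)((n-s)+s)} = -\frac{sd}{n-s}$$

Multiplying by 𝑛 − 𝑠 and rearranging:

𝜆𝑛 ∙ 𝑛 − 𝜆𝑛 𝑠 ≤ −𝑑𝑠

𝑠(𝑑 − 𝜆𝑛) ≤ −𝜆𝑛 𝑛

$$s \le \frac{-\lambda_n n}{d - \lambda_n}$$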
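The value of the Rayleigh quotient for this particular 𝑥 can be checked numerically. A small sketch with exact fractions, assuming a 𝑑-regular graph as a 0/1 adjacency matrix and vertices numbered from 0; the function names are made up for this illustration. For any independent set of size 𝑠 the quotient should come out as −𝑠𝑑/(𝑛 − 𝑠).

```python
from fractions import Fraction

def rayleigh_quotient(adj, x):
    """Return (x^T A x) / (x^T x) as an exact fraction."""
    n = len(adj)
    num = sum(adj[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
    den = sum(v * v for v in x)
    return Fraction(num, den)

def independent_set_vector(n, independent_set):
    """The test vector from the proof: n - s on the set, -s elsewhere."""
    s = len(independent_set)
    return [n - s if v in independent_set else -s for v in range(n)]
```

On the 6-cycle (𝑑 = 2) with the independent set {0, 2, 4} the quotient is −2, which is exactly 𝜆𝑛 of this bipartite graph, so the bound 𝑠 ≤ 𝑛/2 is tight there.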

If |𝜆𝑛 | ≪ 𝑑 we get a very good bound. Otherwise we don’t.

∑ 𝜆𝑖 = 0

We can also look at the trace of 𝐴², which equals ∑(𝜆𝑖)².

On the other hand, it is also ∑ 𝑑𝑖 (the sum of the degrees).

For regular graphs, this is 𝑛 ∙ 𝑑.

So it follows that the average square value is 𝐴𝑣𝑔(𝜆𝑖²) = 𝑑, and therefore

𝐴𝑣𝑔|𝜆𝑖| ≤ √𝑑

Recall that 𝜆1 = 𝑑!

It turns out that in random graphs (regular or nearly regular), with high probability ∀𝑖 ≠ 1 ⇒ |𝜆𝑖| = 𝑂(√𝑑).

In almost all graphs, |𝜆1| ≫ |𝜆𝑛|.

Therefore, taking |𝜆𝑛| ≤ 2√𝑑:

$$s \le \frac{-\lambda_n n}{d - \lambda_n} \le \frac{2\sqrt{d}\, n}{d + 2\sqrt{d}} \le \frac{2n}{\sqrt{d}}$$