Eval Video Jour Tech - AEJMC final


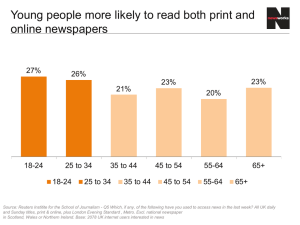

Evaluating Video Journalism Techniques, P. 1

Evaluating Issue-Oriented Video Journalism Techniques

A competitive paper delivered to the Electronic News Division of the Association for Education in Journalism and Mass Communication, at the annual Convention in San Francisco, CA. Aug. 7, 2015

Richard J. Schaefer (schaefer@unm.edu) and Natalia Jácquez
Dept. of Communication and Journalism
Univ. of New Mexico

Abstract

Measures of cognitive retention and evoked empathy were tested across three video journalism stories dealing with trend data conveyed by continuity, thematic montage, and infographic visual treatments. The infographic and montage techniques proved more effective for communicating the complex dimensions of the trend data, with infographics creating the most self-reported empathy in viewers. The results suggest that graphic visualizations, which are easily produced with digital programs, could best communicate complex social trends.

Introduction

At their best, journalists and public information professionals provide informational grounding to the public that is a crucial lubricant for a functioning, informed democracy. For over a century, the key issues have been what information provides Lippmann’s (1922) all-important action platform for informed citizens in a democracy, and how best to communicate that information. The relationship between narrative journalism and ways of communicating fact-based understandings of baseline information is key to an informed public opinion, which in turn influences policy formation (Patterson, 2013; Shapiro, 2011). These issues have been at the heart of debates about American democracy since the days of Walter Lippmann and John Dewey, and were part of the motivation for Gallup and others who in the mid-1930s started to routinely sample public opinion on key issues in American life.
Today, the “numbers” story or “trend” story can stand in contrast to more time-honored narrative storytelling techniques to convey information crucial to public opinion formation and civic action. This study examines aspects of issue-oriented video trend stories because this type of journalism can help keep citizens informed of the great changes occurring in society, and thereby influence policy and the direction of social life. Journalists and strategic communicators who have tried to communicate abstract problems or societal trends to the public have often relied on the old cliché to “put a face on it.” This method of illustrating the human dimensions of the problem by depicting the plight of a single individual has been passed down through newsrooms for decades. But this is not the only means journalists and public information communicators have developed for illustrating a significant social issue. Visually oriented journalists interested in conveying trends and abstract social issues have historically developed three very different techniques for conveying complex social issues in news or public information video pieces.

This study looks at two particular aspects of this issue: What types of visual production strategies can video journalists use to convey baseline data and trend information, and how might those practices encourage social and civic engagement? The study seeks to shed light on these aspects of the issue through a high-context experiment that draws upon recent research findings on cognitive processing, video editing, and the ability of visuals to create empathy and emotional arousal in audience members. We describe it as a high-context experiment because it draws upon commonly used video news package production techniques in standard ways, including the incorporation of recently more accessible scientific visualizations that illustrate complex data sets.
Literature Review

The particular video editing sequences used to evaluate video journalism reports in these studies were: thematic montage, narrative continuity, and animated data visualizations, or infographics. Narrative continuity and thematic montage video editing styles were singled out as the most commonly used techniques of television broadcasting news (Schaefer, 1997). Once electronic videotaping of newsworthy events replaced film in television news during the 1970s, the pace of video editing became quicker. The time-worn video editing techniques that most video journalists borrowed from filmmaking, such as using wide, medium, and close-up shots, were and are still used. Yet, the most important part of editing a video sequence for news broadcasting isn’t the technology used to show the video but the meaning conveyed in the shots, and if these elements of a news story do not mutually reinforce the script, sound, and cover video, the audience could lose the meaning of the story (Shook, Lattimore, & Redmond, 1992).

Data visualizations and infographics play a vital role in communicating data that readers or viewers sometimes may not grasp through words and images within news. Video news stories typically answer the standard six questions of who, what, when, where, why and how, as do print and radio stories. However, the questions of why and how are crucial to the understanding of any story and usually require more time to answer. Research indicates that a reader or viewer learns and remembers better if the journalistic questions are answered with a combination of words, images and infographics (Lester, 2003). Graphic elements often help to clarify factual statements. They may also be important for their eye-catching impact.

Some recent research indicates that human beings are more motivated to moral action when they see depictions of individuals in difficult situations (Slovic, 2007).
Such understandings are based on research studies in which subjects react to imagistic or nonimagistic means of communicating danger or violence (Barry, Benolirao Griggers, & Jacob, 2009).

During the first twenty years of the 20th Century, the techniques of narrative film theorists, particularly in Hollywood, came to be contrasted with the work of more didactically oriented formalist film theorists who worked in Russia in the two decades after the Russian Revolution. Relying on this distinction, Schaefer (1997) and Schaefer and Martinez (2009) contrasted traditional film editing theories of narrative realism with thematic montage to describe how television documentary and news editing techniques evolved from being predominantly representational and realistic to more thematically complex and montage oriented from the 1960s through 2005. The narrative realism continuity techniques of depicting individuals in feature films were contrasted with a more complex thematic montage that downplayed the role of individual actors in favor of more abstract exploration of social themes. These understandings were reinforced by the work of Lang, Bolls, Potter and Kawahara (1999) and Lang (2000) that explored the cognitive processing potential and limits of various editing techniques while advancing the understandings posited in the limited capacity theory. That theory suggests that although changing scenes through visual editing of disparate shots could arouse attention in viewers, human cognitive processing could be overwhelmed by a rapid pacing of disparate visual images. Thus, there appeared to be a potentially optimum pacing of montage images, though Lang and her fellow researchers used different terminology to describe this process.
But since the turn of the millennium, research into how humans process and act on information has begun to explore how the human brain may be “hardwired” to learn through visual observation and empathize with individuals depicted in its visual field, including those seen on video and computer screens. In an extensive review of studies dealing with the portrayal of human tragedies, Slovic (2007) found that humans were more inclined to be motivated toward prosocial action by imagery and stories that depicted individuals or smaller numbers of humans in distress than when large numbers of people were described or depicted in similar situations. Similarly, Oliver, Dillard, Bae and Tamul (2012) recently demonstrated that narrative story techniques produced more sympathetic and empathetic responses in readers than did nonnarrative story techniques portraying the same social problem. Finally, the work of Amit and Greene (2012) relied on functional magnetic resonance imaging (fMRI) techniques and complex experimental analysis to demonstrate that humans relying on visual imaging techniques preferred to save a single visualized individual from harm rather than helping a larger number of people whom they could not visualize.

These seemingly anomalous findings may be explained by the strange workings of mirror neurons. These brain structures enable humans to identify with and imitate the actions of other living beings, particularly humans. They fire when we see others doing things that we have learned how to do, and enable us to imitate the actions of others and project intentionality into their actions. In this sense mirror neurons support the modeling of actions, identity and intentions as a largely visual and imitative social learning process that humans perform unconsciously and pervasively (Barry, 2009; Benolirao Griggers, 2009; Jacob, 2008).
Not only does the presence of mirror neurons explain the prevalence of social learning theories, it also explains why human beings are so adept at transmitting culture.

Given the increasing compilation of trend data and the possibility of using graphics derived from spreadsheet programs to convey complex numerical relationships, the infographic work of print pioneers, such as Tufte (1983), has become available as motion graphics to today’s digital video journalists. Yet, few studies have attempted to determine the relative efficacy of using those types of visuals instead of the thematic montage and continuity image techniques that television journalists have relied on for decades.

Cognitive Comprehension Research Questions

Therefore, this study attempted to address several very narrow, but important, issues with regard to the efficacy of different visual techniques for conveying information:

R1: Which of the visual video journalism techniques produces greater cognition and comprehension of the baseline data surrounding a contemporary issue:
1. narrative continuity editing techniques featuring individual subjects,
2. thematic montage editing techniques that convey a social problem through disparate imagery, or
3. graphic visualization techniques?

R2: How effective is each of the three visual video journalism techniques at personifying and communicating the human dimensions of an abstract trend issue?

R3: How might the interaction of visual choices to depict trends and the use of personification techniques serve to support or undermine viewers’ self-reported sense of being disturbed by a news story, which suggests that viewers would have adopted a more empathetic response to a social issue?
R4: What might this study suggest about the efficacy of contemporary video journalism techniques as a means toward creating broader societal understandings of complex social problems, a finding that could be crucial to developing informed public opinion?

The first three research questions produced specific hypotheses with regard to the video treatments.

H1: The graphic scientific visualization technique will better cognitively communicate the complex dimensions and scope of a trend issue than the narrative continuity or the now commonly used montage visual techniques.

H2: The narrative continuity technique depicting an individual affected by a social issue will generate stronger cognitive measures of individual personification of the issues.

H3: The stronger cognitive awareness found in items addressing H1 and H2 will combine to provide stronger self-reported feelings of being disturbed by the content covered in the news stories.

Finally, when taken together, analysis of H1, H2 and H3 should shed light on R1, R2 and R3, as well as the broader R4 question regarding the efficacy of contemporary video practices for producing a viable platform for civic action and a more functional democracy.

Methodology

The experiment reported here exposed slightly more than 180 volunteer subjects to three 3-minute news stories that each contained narration, soundbites and various forms of visual video information to convey trend data. The narration and soundbites of the three stories did not change from one treatment version to the next, but within each 3-minute story a 1-minute segment of the visual track contained visuals that relied on three different contemporary video journalism visual content techniques.

One of the one-minute visual treatments for each story relied on narrative continuity-edited depictions of a subject who had been introduced earlier in the report.
This is a common technique, sometimes referred to by television news consultants as a “touch-and-go” or “putting a face on it,” when a subject who illustrates an issue is visually featured while the reporter describes the broader societal dimensions of the issue. It is a commonly used journalistic style that relies on personal narrative to help viewers understand how individuals are affected by an issue.

A second visual treatment relied on thematic montage techniques, which Schaefer and Martinez (2009) have described as the most common visual style used by U.S. network journalists since the early 1980s. This technique uses a series of disparate images to illustrate the audio track, and is often used to convey the abstract dimensions of an issue. It is a staple of network journalists and documentarians.

The third visual treatment relied on a series of infographics to illustrate the trend information described in the audio track. Although these techniques are not extensively used in local newscasts, they have become more commonly used in network newscasts, as video journalists rely on charts and graphics from databases to convey the numeric dimensions of an issue. Although such graphic techniques were extremely difficult to produce only a decade earlier, in recent years spreadsheet charting and graphing capabilities and digital nonlinear editing programs have made such motion infographics far more practical for contemporary video journalists to include in their news stories.

The three news stories dealt with national issues and could have run on a national or international newscast. The first story, referred to henceforth as “Digital Divide,” examined the differing access rates and costs of Internet connectivity across various states and regions. It featured a full-time college student who did not have home Internet access.
Therefore, to get online materials, she had to go to Wi-Fi-equipped coffee shops or libraries to do her homework.

The second story that subjects saw dealt with “Senate Bill 744,” the comprehensive immigration reform act passed by the U.S. Senate in 2013, but which has languished in the House of Representatives since its passage. This news story dealt with the declines in the number of apprehensions along the U.S.-Mexico border and featured Border Patrol agents and a border journalist commenting on the number of apprehensions in recent years, as well as the projected benefits and costs of the bill’s enforcement measures.

The final news package, “Intercity Sort,” relied on Census Bureau data to illustrate recent interstate moving patterns between two major U.S. metropolitan areas. The figures demonstrated that an ethnic and political sort was occurring between the two regions. It featured a person who had moved twice between the two cities in the last decade. In the story, she described her reasons for making each move and her changing perceptions of the two cities.

All the stories employed professional-level production values and were produced solely for this experiment. The packages were similar in style and tone to the type of news stories viewers might expect to see on the PBS NewsHour. All were journalistically accurate and contained information that had not been previously reported in either the elite or popular media, so it was unlikely that subjects could have been exposed to the presented information or themes prior to seeing the reports.

Subjects were convenience-sampled from college classes and other organizational settings where the authors could gain access to groups of 15 or more people. No inducements were offered for participation, other than the researchers providing a 10-minute debrief session after subjects had participated.
Willing subjects were asked to watch the 11-minute mini-newscast that contained the three news packages and anchor intros to each. The subjects always viewed the stories in the same order, with the Digital Divide first, followed by S.B. 744, and then Intercity Sort. Recruitment was strictly voluntary; the researchers simply indicated that they were “doing a research project on journalism techniques,” and that if subjects agreed to participate, they would see an 11-minute video followed by an anonymous 10-minute survey.

The hardcopy survey contained several demographic questions (sex, age group, educational attainment), a question on the amount of time “spent daily with news,” and a lone open-ended question on subjects’ “most used news source.” But the heart of the survey had 27 multiple-choice questions covering the content of the three stories and semantic differential scales asking subjects to rate how disturbed they were by each story’s content, how likely they would be to talk with people directly affected by the issue, talk with friends about the issue, and contact an elected official regarding the issue. In this way, both subjects’ cognitive comprehension and their empathy and inclination toward engaging in social action were evaluated.

To satisfy university IRB requirements, subjects signed a separate consent form, and the researchers answered questions and described the project in more detail after the videos were viewed and the post-viewing surveys had been filled out and collected. In all, 190 surveys were turned in during the eight gatherings when respondents were recruited. Although subjects were asked to complete every item on the 32-question survey, 10 surveys were so incomplete that results from them were not tabulated.
Therefore, a total in-tab sample of 180 surveys was collected with a treatment sample group breakdown of 63 subjects viewing one treatment version of the three stories, 57 viewing another version, and 60 more viewing the third sequence grouping. Subjects in each treatment convenience sample grouping saw each story in the same sequence, so the breakdown for each in-tab group was as follows, with (Cont) signifying the 1-minute put-a-face-on-it visual continuity-edited treatment; (Mont), the thematic montage visual treatment; and (Vis), a version that contained the one-minute infographic visualization treatment.

Digital Divide (Cont) - S.B. 744 (Vis) - Intercity Sort (Mont) --- 60 subjects
Digital Divide (Mont) - S.B. 744 (Cont) - Intercity Sort (Vis) --- 57 subjects
Digital Divide (Vis) - S.B. 744 (Mont) - Intercity Sort (Cont) --- 63 subjects

Thus, the 180 subjects accounted for 540 news story viewings overall, with each subject viewing a single version of the Digital Divide, S.B. 744 and Intercity Sort stories, and with each story viewed by a subject containing a different visual treatment. All viewers heard exactly the same audio tracks throughout the story and viewed the same visuals for approximately two minutes of each of the three-minute stories. This meant that each viewing subject could be tested for each story, as well as for each treatment, though a small number of respondents, typically between 3 and 9 subjects for each item, failed to answer one or more of the 32 content questions on the survey, resulting in their data being classified as “missing” for any analyses involving that item.

Given the sample size, this posttest design proved statistically powerful enough for very small effects to be tracked across the three visual treatment styles.
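The counterbalanced assignment of stories and treatments described above can be tabulated as a quick consistency check. The following Python sketch is illustrative only and is not part of the authors' procedure; the variable names are assumptions, and the term "Latin square" is a common label for this kind of arrangement rather than one the paper uses. The group sizes and treatment orderings are taken from the breakdown above.

```python
from collections import Counter

# Stories in the fixed viewing order reported in the text.
STORIES = ("Digital Divide", "S.B. 744", "Intercity Sort")

# Each entry: (treatment shown for each story, number of in-tab subjects).
# Orderings and sizes come from the text; the structure is illustrative.
GROUPS = [
    (("Cont", "Vis", "Mont"), 60),
    (("Mont", "Cont", "Vis"), 57),
    (("Vis", "Mont", "Cont"), 63),
]

total_subjects = sum(n for _, n in GROUPS)
total_viewings = total_subjects * len(STORIES)

# Count viewings per (story, treatment) cell and per treatment overall.
cell_counts = Counter()
treatment_counts = Counter()
for treatments, n in GROUPS:
    for story, treatment in zip(STORIES, treatments):
        cell_counts[(story, treatment)] += n
        treatment_counts[treatment] += n

# Latin-square property: all nine story/treatment pairings are covered,
# each by exactly one group.
is_latin_square = len(cell_counts) == len(STORIES) ** 2

print(total_subjects, total_viewings)
print(dict(treatment_counts))
print(is_latin_square)
```

Under these figures every treatment is viewed 180 times in total, although the number of viewings of a given story under a given treatment varies from 57 to 63 across groups.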
The researchers anticipated that any effects would be small given that only the visual track for about one-third of each story had been manipulated as an independent variable. A design in which stories were entirely based on one type of treatment or another presumably would have produced far more powerful main effects, but the researchers argue that although isolating such effects would have made the experiment more statistically and seemingly theoretically powerful, relying on a “pure” treatment design might have dramatically changed the contextual validity of the experiment, as journalists rarely would create a three-minute news story made up entirely of one type of visual technique. So although using stories that relied on a single visual presentation treatment would have likely produced much stronger effects, it would not have addressed how those techniques might interact with other audio and visual production elements in a typical news story. In this sense, reports that contain mixed-visual production techniques should have more applicability to the real-world way that journalists and viewers might produce and experience news stories.

Therefore, the researchers adopted an approach that sought greater contextual and construct validity (Babbie, 1998, pp. 133-134) by presenting the treatment within longer video reports that themselves contained many of the various other elements typically found in contemporary news reports. Although this presumably made the relative effects of the different treatments less obvious, such an embedded treatment approach likely produces findings that are more generalizable to the types of news forms journalists and viewers are likely to experience in their everyday lives. We anticipated that by showing these reports to a large number of subjects, with each subject viewing each report and each type of treatment, even subtle treatment effects could be gleaned from the data.

After a preliminary debugging of the questionnaire in June 2014, the surveys were administered from November through the first week in December 2014. All the stories were still relevant issues at that time, as they still are at the writing of the present report. Coding and data entry were performed in Microsoft Excel and the data were copied into an SPSS database from which frequencies, chi-squares and crosstabs, and ANOVAs were run, depending on the level of measurement of the variables in question.

It should be noted that the original 7-point semantic differential scale was expanded to a 9-point semantic differential scale when a number of the participants circled either “Unlikely” or “Extremely Likely” on the extreme ends of the semantic differential scale, even though a 1-7 close-ended response was originally sought. Thus “Unlikely” was coded as “0” and “Extremely Likely” was coded as “8” for responses on those variables, which were then treated as scaled measures (Babbie, 1998, pp. 194-195). This compromise was adopted to include the maximum survey information produced by the subjects, particularly since it reinforced the normality of the various semantic differential questionnaire items.

Presentation and Analysis of Results

The first hypothesis, H1, indicated that the graphic scientific visualization technique would better cognitively communicate the complex social dimensions and scope of a social issue than the narrative continuity or the now commonly used montage visual techniques. An index made up of a couple of forced-response multiple-choice items was constructed to test the subjects’ understanding of the trends presented in each of the three stories. The average scores, with 1.0 being all correct and 2.0 being all wrong, were run as ANOVAs to determine if one treatment type led to increased trend comprehension across all the stories. Results for that ANOVA are presented in Table 1.

Table 1: Comparison of Mean Scores on Trend Comprehension Indexes (ANOVA)

| Treatment | Mean | Correct Ans. | St. Dev. | N |
|---|---|---|---|---|
| Continuity | 1.27 | 73% | .306 | 178 |
| Montage | 1.18 | 82% | .270 | 176 |
| Visualization | 1.18 | 82% | | 179 |

F = 7.38; Welch’s Sig.* = .002*; Partial Eta² = .03

*Levene’s Statistic of homogeneity of variance was .01, which suggests non-homogeneity, therefore Welch’s Robust test of significance was used.

Because the data failed to exhibit homogeneity of variance across the answers, Welch’s significance test was used to differentiate between treatments. Comprehension means were significantly better for subjects who viewed the montage and visualization treatments than for those who viewed the continuity treatments. The scores for understanding the trends depicted by the montage and visualization treatments were essentially identical, with both providing more accurate understandings of the trend issue question item averages than the continuity treatments did.

Thus, H1, that “the graphic scientific visualization technique would better cognitively communicate the complex dimensions and scope of a trend issue than the narrative continuity or the now commonly used montage visual techniques,” was only partially supported by the findings. Both the montage and visualization treatments did indeed lead to better understandings of the trend data than the continuity treatments, but neither visualization nor montage demonstrated more communicative power than the other with regard to presenting data trend information.

The between-groups treatment effects for the continuity and the two more communicative treatments were quite small, with a partial Eta² value of .03. This suggests that the differing treatments explained only 3 percent of the differences between the item scores. Nevertheless, this small effect is noteworthy, given that only part of each story contained a different visual treatment.
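For readers who want to relate the reported F statistic to the effect size, partial Eta² in a one-way design can be recovered as (F × df_between) / (F × df_between + df_within). The sketch below is an illustration, not the authors' SPSS procedure. It assumes df_between = 2 (three treatments) and df_within = 530 (the 178 + 176 + 179 responses listed in Table 1, minus three groups); the paper does not report either value, and the function name is hypothetical.

```python
def partial_eta_squared(f_stat: float, df_between: int, df_within: int) -> float:
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    effect = f_stat * df_between
    return effect / (effect + df_within)


# Assumed degrees of freedom (not reported in the paper).
DF_BETWEEN = 3 - 1
DF_WITHIN = (178 + 176 + 179) - 3

# F value reported for the trend comprehension index in Table 1.
eta_sq = partial_eta_squared(7.38, DF_BETWEEN, DF_WITHIN)
print(round(eta_sq, 2))
```

Under these assumed degrees of freedom the result rounds to .03, which is consistent with the effect size given in Table 1.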
Furthermore, to have contextual validity, the montage treatments contained word graphics that highlighted the key trends being explained by the reporter’s sound track. This technique, which is commonly used in news packages, may have helped viewers of the montage versions of the treatments remember the highlights of the numerical trends discussed in the reports. In this sense, our montage treatments were not “pure” montages because they included some graphic figures, and were therefore contemporary “graphic” permutations of the pure montage image forms first described by early film theorists in the 1910s and 1920s.

One good piece of news generated by the study pointed to the fact that all the treatment versions were quite effective at eliciting correct answers about the content of the stories covered by the treatment sections, with subjects who answered the montage and visualization items averaging 82 percent correct answers, and those answering after viewing a continuity treatment still getting 73 percent of the four-part multiple-choice questions correct. This is noteworthy because the tested items required subjects to provide answers to questions that were neither particularly simple nor based on common knowledge. Thus, subjects had to learn from the reports, which they only viewed once without further discussion or replay. This strongly suggests that well-constructed video reports can indeed be highly efficient mechanisms for conveying fairly complex baseline trend information to an attentive audience.

The second hypothesis, H2, was tested by a single multiple-choice item for each story. That item asked viewers to choose the correct answer about a “source” in journalistic terminology (a story “protagonist” in narrative terminology) depicted in the non-treatment portion of each report. However, that person was further visually featured only in the continuity treatment of each report.
Therefore, the researchers anticipated that viewers of the continuity treatment would remember more information about that source, and perhaps even identify more with a person who was shown longer on the screen. So the H2 hypothesis indicated that “the narrative continuity technique depicting an individual affected by a social issue will generate stronger cognitive measures of individual personification of the issues.”

Table 2: Crosstabs of Put-a-Face-on-It Questions by Treatment

| Treatment | Correct Ans. | Incorrect Ans. | Correct Pct. | Incorrect Pct. | N |
|---|---|---|---|---|---|
| Continuity | 149 | 31 | 82.8 % | 17.2 % | 180 |
| Montage | 144 | 34 | 80.9 % | 19.1 % | 178 |
| Visualization | 161 | 19 | 89.4 % | 10.6 % | 180 |

Chi-Square Sig. = .064; Likelihood Ratio = .056

Table 2 shows the results of a crosstab analysis involving H2. It demonstrates that the continuity treatment (82.8 percent) elicited fewer correct answers than the visualization treatment (89.4 percent), which surprisingly drew the most correct answers across the three treatments. Although the Pearson Chi-Square significance level is quite close to the p<.05 level for rejecting the null hypothesis and embracing that something systematic was occurring across these responses, the appropriate level was not achieved. Given that visualization treatment responses achieved the highest number of correct answers among the three treatment conditions, this finding clearly fails to support H2 that the continuity treatment would produce more correct responses.

To test the third hypothesis, H3, the correct-versus-incorrect answers for the one question about one of the sources (the question item examined for H2 and displayed in Table 2) were averaged with the correct-versus-incorrect answers for the various other question items about trend data. Again, an average of 1.0 would indicate all correct answers and an average of 2.0 would indicate all incorrect answers across all the question items.
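The Pearson chi-square significance reported for the Table 2 crosstab can be re-derived from the cell counts alone. The following pure-Python sketch is illustrative rather than a reproduction of the authors' SPSS run; the function name is an assumption, and the closed-form p-value exp(-chi2 / 2) is valid only because a 3 x 2 table has two degrees of freedom.

```python
import math

# Correct / incorrect answer counts per treatment, as reported in Table 2.
OBSERVED = {
    "Continuity": (149, 31),
    "Montage": (144, 34),
    "Visualization": (161, 19),
}


def pearson_chi_square(table):
    """Return (chi2, p) for a 3 x 2 contingency table (df = 2)."""
    rows = list(table.values())
    if len(rows) != 3 or any(len(r) != 2 for r in rows):
        raise ValueError("closed-form p-value assumes a 3 x 2 table (df = 2)")
    col_totals = [sum(r[j] for r in rows) for j in range(2)]
    grand_total = sum(col_totals)
    chi2 = 0.0
    for row in rows:
        row_total = sum(row)
        for j, observed in enumerate(row):
            # Expected count under independence of treatment and correctness.
            expected = row_total * col_totals[j] / grand_total
            chi2 += (observed - expected) ** 2 / expected
    # Survival function of the chi-square distribution with 2 degrees of freedom.
    p_value = math.exp(-chi2 / 2)
    return chi2, p_value


chi2, p_value = pearson_chi_square(OBSERVED)
print(f"chi2 = {chi2:.2f}, p = {p_value:.3f}")
```

With the counts above, the computed p-value rounds to .064, in line with the Chi-Square significance shown in the table.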
Contrary to what the researchers had anticipated, this produced a far more consistent pattern of correct and incorrect answers across the three treatment types, as shown in Table 3 below.

Table 3: Comparison of Mean Scores on All Comprehension Items (ANOVA)

| Treatment | Mean | Correct Ans. | St. Dev. | N |
|---|---|---|---|---|
| Continuity | 1.25 | 75 % | .255 | 178 |
| Montage | 1.19 | 81 % | .239 | 176 |
| Visualization | 1.16 | 84 % | .204 | 179 |

F = 6.74; Sig.* = .001*; Partial Eta² = .03

*Levene’s Statistic of homogeneity of variance was .14, therefore homogeneity of variance was established and the ANOVA significance statistic was used. However, the more robust Welch test was also significant, though at the p<.002 level, with an “adjusted R²” of .21.

Averaging the unanticipated results on the personification question item with the various other items on trends conveyed in the treatments produced a more consistent portrait of how the visualization treatment contributed positively to viewers’ abilities to comprehend the content of the stories, regardless of whether viewers were questioned about trends or about people in the stories. In this sense, Table 3 appears to demonstrate evidence of an interaction effect between the personification technique of “putting a face on a story” and the visualization technique of creating a motion infographic.

Indeed, we had anticipated a theoretical distinction across the two types of variables we tested: trend data information items and personification information. But contrary to what we had predicted in our theory-driven hypotheses, the visualization treatments appeared to provide a stronger platform for viewers to grasp the material, including viewers’ ability to answer each story’s lone question about sources who were not further depicted in the montage or visualization treatments.
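The comprehension index used in Tables 1 and 3 codes each correct answer as 1 and each incorrect answer as 2, so a mean index m corresponds to a share of correct answers of 2 - m. The short Python sketch below illustrates that conversion; the function names and the four-item example subject are hypothetical, while the three means are those reported in Table 3.

```python
def index_from_answers(answers):
    """Mean of 1 (correct) / 2 (incorrect) codes for a list of booleans."""
    codes = [1 if correct else 2 for correct in answers]
    return sum(codes) / len(codes)


def percent_correct(mean_index):
    """Convert a 1.0-2.0 comprehension index into a whole-number percent correct."""
    return round((2.0 - mean_index) * 100)


# Mean index scores reported in Table 3.
TABLE_3_MEANS = {"Continuity": 1.25, "Montage": 1.19, "Visualization": 1.16}

for treatment, mean_index in TABLE_3_MEANS.items():
    print(treatment, percent_correct(mean_index))

# Hypothetical subject who answered three of four items correctly.
print(index_from_answers([True, True, True, False]))
```

The converted values agree with the "Correct Ans." percentages in Table 3 (75, 81 and 84 percent).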
Although these statistically significant interaction effects are weak, accounting for only about 3 percent of the variance (partial eta squared of .03), the result is nevertheless significant at the p = .001 level, and it is in line with the marginal, weak effects the study was designed to tease out without sacrificing contextual validity.

Finally, knowledge effects appeared consistent across the three treatments: the infographics treatment (Vis) produced greater learning than the montage treatment (Mont), followed by the continuity treatment (Cont). Armed with that apparent consistency, correlations could be run with subjects’ self-reported assessments of how disturbed they had become by the issue raised in each of the reports. H3, that “the stronger cognitive awareness found in items addressing H1 and H2 will combine to provide stronger self-reported feelings of being disturbed by the content covered in the news stories,” could therefore be tested, with the expected result that the visualization treatment (Vis) would produce the strongest assessments of disturbance, followed by the montage treatment, then the continuity treatment.

Table 4 (below) shows those results. However, unlike the cognitive comprehension scores, the semantic differential scores ranged from 0 to 8, with 8 indicating a subject’s self-report that it was “extremely likely” that information in the news story was disturbing to the subject, whereas a score of 0 would indicate that the subject described the report’s contents as “unlikely” to be disturbing.

Table 4: Comparison of Mean Scores on the Disturbing Content Questions (ANOVA)

| Treatment | Mean | St. Dev. | N |
|---|---|---|---|
| Continuity | 3.68 | 1.70 | 178 |
| Montage | 3.96 | 1.84 | 176 |
| Visualization | 4.16 | 1.79 | 179 |

F = 3.20; Sig.* = .001; Partial Eta² = .012

*Levene’s statistic of homogeneity of variance was .50; therefore homogeneity of variance was established and the ANOVA significance statistic was used.
However, the more robust Welch test was also significant, at p < .037, with an “adjusted R²” of .01.

Once again, a very small effect size (partial eta squared = .012) was evident for the variable, yet the distribution of the mean scores indicated that the visualization treatment was the most likely to arouse self-reported disturbance in the viewer. Again, thematic montage was the next most effective technique for arousing disturbing feelings in viewers, followed by the continuity technique. Although the effects were small, they were again clearly consistent with the utility of visualization techniques for informing and arousing viewers.

Conclusions

This study suggests that using graphic visualization or thematic montage visual techniques could better enable journalists to convey complex trend information to viewers. This not very controversial finding rests on the consistently lower comprehension of trend questions that the continuity treatments elicited when compared with the montage or visualization treatments. The study suggests that increased use of carefully constructed montage and motion infographics could improve video news viewers’ comprehension of abstract data relationships.

There was a time when constructing such montages or motion infographics was costly in terms of specialized software and time. But with any number of popular spreadsheet programs providing graphing capabilities, and with digital nonlinear video editors able to manipulate infographics, that day has passed. Indeed, the motion graphics for the three graphic visualization treatments came from Federal databases imported into the now ubiquitous Microsoft Excel and edited with Adobe Premiere. Furthermore, the time spent creating and manipulating the graphics was generally no greater than the time required to shoot new video sequences, usually a matter of hours rather than days.
Hence the infographic age for HD video appears to have arrived.

Also, the relatively greater efficacy of the montage and visualization treatment versions calls into question “touch and go” continuity depiction strategies of video editing, as the latter did not appear to produce the sought-after broad understandings of baseline information, the type of understandings of data-driven realities that might be crucial toward encouraging critical thinking in news viewers. Furthermore, to the considerable surprise of the researchers, and in contradiction to a body of emerging theoretical cognitive research by scholars such as Amit and Greene (2012) and Slovic (2007), the results of this study call into question the time-honored journalistic tradition of “putting a face on an issue” as a strategy either for producing greater awareness of the types of baseline data trends that help viewers understand the underlying dimensions of social problems or for eliciting more empathetic responses in news viewers.

The study also revealed some interaction effects for visualized infographics with stories containing personification techniques. Study results tentatively showed that infographics and personification techniques could reinforce each other to better inform and disturb viewers with regard to social issues. This finding, albeit tentative, was a big surprise, as it runs contrary to journalistic conventions about the importance of “putting a face on a problem,” as well as to recently emerging cognitive theory about the power of representational visual imagery and personification techniques to move viewers and create empathy toward the subjects depicted in news reports. The present study posited that the main effects of each treatment style would drive the overall effects of each story. But to the authors’ surprise, there appears to be a significant interaction effect in which one of two things appears to have occurred.
Either the montage treatment techniques somehow interfered with the theorized overall knowledge gain and disturbing emotional impact of including personification elements in a story, or the synergistic interaction of having both graphic visualization and personification techniques together in a news story far outstripped the synergies realized by combining montage and personification techniques or by loading a story with continuity and personification techniques.

However, this surprising result would not even have been evident for further consideration if the study design had not included multiple visual strategies within each story, something that was done not to test interaction effects but to give the stories greater face validity or contextual validity for journalism professionals, who routinely combine such techniques. Indeed, if the researchers had gone with the more traditional research strategy of seeking to maximize effects from the treatments, we would have sought to isolate each treatment as the main driver of each story and therefore would have sought to demonstrate powerful main effects for each treatment. However, that may not be what is likely to happen in the real world of professional journalism or television news viewing. Therefore we approached these stories more as journalists and sought only to manipulate about a third of each story’s visual track to test our hypotheses. Furthermore, we included only one survey question to measure the personification techniques for each of the three stories, and did not seek to fully explore potential interaction effects because, quite frankly, we were not looking for them theoretically. This is why we would describe this as a “tentative finding,” even though it appeared to have strong statistical significance.
In this sense, it causes us to humbly rethink the developing theory, rather than make strong claims to have proven unequivocally that such interaction effects can be reliably reproduced or can be predicted to produce effects of a particular magnitude.

We anticipated that by showing complex reports to a large number of subjects, with each subject viewing each report and each type of treatment, even subtle treatment effects could be gleaned from the data. But this is also a potential weakness of our study, as the effect sizes are quite small and our results could be contaminated by other unconceptualized but systematic message elements within the three stories each subject saw. In this sense, the reported small effects could have been driven by variations in the non-treatment portions of the messages, a problem Thorson, Wicks and Leshner (2012) describe as “message effects.” So we can indeed think of possible alternative explanations to account for our very small effects. Given that we ran six different analyses with a fairly large 180-person sample, and each subject saw the three stories with differing treatments, the analyses held some possibility of achieving significance even when effect sizes were not merely minimal, but in the 1 percent range. It is also possible that some recency-primacy effects were consistently compounded by not reordering the story presentation sequence, or that the survey instrument did not adequately test what we purported it tested. Thus, we describe these somewhat surprising results as “tentative,” but we also contend that they are certainly worthy of further consideration.

One overall encouraging finding of the study dealt with how well our subjects appeared to learn the otherwise obscure trend material presented in the stories. Overall, the four-answer multiple-choice survey questions elicited correct responses at rates between 70 and 91 percent.
This seemed good for new material presented in a single pass of a single news story. In this regard, the sample, which was made up predominantly of Millennials of both sexes (53 percent female and 47 percent male, with 85 percent of respondents 18 to 34 years of age), did quite well on the more demanding trend data questions, and did even better on the seemingly easier personification questions. Although the study did not seek to establish the overall efficacy of video journalism strategies for informing the public, this artifact of the study speaks well for the potential of video journalism to inform young adults about complex social trends.

Finally, not only do the less anticipated findings call for further study, but heretofore undiscussed aspects of the viewing situation also deserve more careful research consideration. Given that the videos were viewed only once, on a television screen in a group setting, this experimental context might not generalize well to the way many news viewers, particularly Millennials, might view news reports today or in the future. Therefore, the more intriguing findings of this study might be further examined across different viewing situations, including on smartphones and computers. In both instances, as well as with playback of news programming on digital video recorders, graphic visualizations that contain far more detailed information for interpolation or extrapolation might be used to provide cognitive understandings in an environment in which viewers could review video materials several times at their own self-directed pace. Indeed, it would be interesting to run a similar experiment, perhaps even using the same video treatments, but with the linear one-pass viewing model of the present study altered to permit multiple viewings and viewer-directed engagement with the news stories.
That would enable subjects to review and go through the material at their preferred pacing, as is more common with streamed online and mobile video. The implications this could have for graphic visualizations could be particularly intriguing.

References

Amit, E., & Greene, J. D. (2012, June 28). You see, the ends don’t justify the means: Visual imagery and moral judgment. Psychological Science, Online First, 20;10, 1-8. DOI: 10.1177/0956797611434965

Babbie, E. (1998). The practice of social research. Belmont, CA: Wadsworth.

Baird, A. D., Scheffer, I. E., & Wilson, S. J. (2011). Mirror neuron system involvement in empathy: A critical look at the evidence. Social Neuroscience, 6(4), 327-335. DOI: 10.1080/17470919.2010.547085

Barry, A. M. (2009). Mirror neurons: How we become what we see. Visual Communication Quarterly, 16(2), 79-89.

Jacob, P. (2008, April). What do mirror neurons contribute to human social cognition? Mind & Language, 23(2), 190-223.

Lang, A., Bolls, P., Potter, R. F., & Kawahara, K. (1999). The Effects of Production Pacing and Arousing Content on the Information Processing of Television Messages. Journal of Broadcasting & Electronic Media, 43(4), 451-475.

Lang, A. (2000). The Limited Capacity Model of Mediated Message Processing. Journal of Communication, 50(1), 46-70.

Lester, P. M. (2003). "Informational Graphics." Visual Communication: Images with Messages, 3rd Ed., pp. 170-191. CA: Wadsworth Pub.

Oliver, M. B., Dillard, J. P., Bae, K., & Tamul, D. J. (2012). The effect of narrative news format on empathy for stigmatized groups. Journalism and Mass Communication Quarterly, 89(2), 205-224. DOI: 10.1177/1077699012439020

Patterson, T. E. (2013). Informing the news: The need for knowledge-based journalism. New York: Vintage Books.

Schaefer, R. J. (1997). Editing strategies in television news documentaries. Journal of Communication, 47(4), 69-88.

Schaefer, R. J., & Martinez III, T. J.
(2009). Trends in network news editing strategies from 1969 through 2005. Journal of Broadcasting & Electronic Media, 53(3), 347-364.

Shapiro, R. Y. (2011). Public opinion and American democracy. Public Opinion Quarterly, 75(5), 982-1017.

Shook, F., Lattimore, D., & Redmond, J. (1992). "Video Editing Basics." The Broadcast News Process, 5th Ed., pp. 229-232. Englewood Cliffs, NJ: Morton.

Slovic, P. (2007). “If I look at the mass I will never act”: Psychic numbing and genocide. Judgment and Decision Making, 2(2), 79-95. DOI: 10767360092091

Tal, A., & Wansink, B. (2014). Blinded with science: Trivial graphs and formulas increase ad persuasiveness and belief in product efficacy. Public Understanding of Science, 1-9, PUS: Sage. DOI: 10.1177/0963662514549688

Thorson, E., Wicks, R., & Leshner, G. (2012). Experimental methodology in journalism and mass communication research. Journalism and Mass Communication Quarterly, 89(1), 112-124. DOI: 10.1177/1077699011430066

Tufte, E. (1983). The visual display of quantitative information. Cheshire, CT: Graphics Press.