Note

advertisement

1

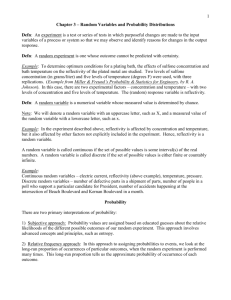

Chapter 5 – Probability Densities

Defn: The probability distribution of a random variable X is the set $\{(A, P(A)) : A \subseteq \Re\}$. (Note: This definition is not quite correct. There are some subsets of ℜ for which the probability cannot be defined. However, any event A that is of practical interest will have an associated P(A).)

Under certain simple conditions, we may describe the distribution

for a continuous random variable using a probability density

function.

Defn: If the distribution of a continuous random variable has a probability density function, f(x), then for any interval (a, b), we have

$$P(a < X < b) = \int_a^b f(x)\,dx.$$

The probability density function (p.d.f.) has the following properties, which follow from Kolmogorov’s Axioms:

1) $f(x) \ge 0$ everywhere;

2) $\int_{-\infty}^{+\infty} f(x)\,dx = 1$.

Note: If X is a continuous r.v., then $P(X = x) = 0$ for any x.

(Think about this.)

Note: As a result, we have

$$P(a \le X \le b) = P(a < X \le b) = P(a \le X < b) = P(a < X < b).$$


Examples: pp. 122 – 123

Defn: The cumulative distribution function (or c.d.f.) for a continuous r.v. X is given by

$$F(x) = P(X \le x) = \int_{-\infty}^{x} f(t)\,dt, \quad \text{for all } x \in \Re.$$

If the distribution does not have a p.d.f., we may still define the c.d.f. for any x as the probability that X takes on a value no greater than x.
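As a rough numerical illustration of the p.d.f. properties and the c.d.f., the sketch below uses a hypothetical density, f(x) = 2x on (0, 1), which is not taken from the textbook. The helper names `pdf`, `integrate`, and `cdf` are illustrative, and the midpoint rule stands in for exact integration.

```python
def pdf(x: float) -> float:
    """Hypothetical density (an assumption): f(x) = 2x on (0, 1), 0 elsewhere."""
    return 2.0 * x if 0.0 < x < 1.0 else 0.0


def integrate(f, lo: float, hi: float, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width


def cdf(x: float) -> float:
    """F(x) = P(X <= x); the density is zero outside (0, 1)."""
    if x <= 0.0:
        return 0.0
    return integrate(pdf, 0.0, min(x, 1.0))


total = integrate(pdf, 0.0, 1.0)   # total area under the density
prob = cdf(0.75) - cdf(0.25)       # P(0.25 < X < 0.75) as F(b) - F(a)
print(round(total, 6), round(prob, 6))
```

For this density F(x) = x² on (0, 1), so the interval probability can also be checked by hand as 0.75² − 0.25².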

Note: The c.d.f. for the distribution of a r.v. is unique, and

completely describes the distribution.

Examples: pp. 122 – 123

Mean and Variance

Defn: Let X be a continuous random variable with p.d.f. f(x). We define the kth moment about the origin to be

$$\mu_k' = \int_{-\infty}^{+\infty} x^k f(x)\,dx.$$

We also define the kth central moment (or the kth moment about the mean) as

$$\mu_k = \int_{-\infty}^{+\infty} (x - \mu)^k f(x)\,dx.$$

The first moment about the origin is just the mean of the

distribution. The second central moment is the variance of the

distribution. Third moments are related to the skewness of the

distribution.


Defn: The mean, or expected value, or expectation, of a continuous r.v. X with p.d.f. f(x) is given by

$$\mu = E(X) = \int_{-\infty}^{+\infty} x f(x)\,dx.$$

Note: We interpret the mean in terms of relative frequency. If we

were to repeatedly take a measurement of the random variable X,

recording all of our measurements, and calculating the average after

each measurement, the value of the average would approach a limit

as we continued to take measurements, and this limit is the

expectation of X.
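The relative-frequency interpretation can be imitated with a small simulation. This is only a sketch: it assumes measurements drawn from a Uniform(0, 1) distribution (whose mean is 0.5), and the function name `running_averages` and the seed are arbitrary choices.

```python
import random


def running_averages(n_draws: int, seed: int = 12345) -> list[float]:
    """Average of the first i simulated measurements, for i = 1..n_draws."""
    rng = random.Random(seed)          # seeded so the run is repeatable
    total = 0.0
    averages = []
    for i in range(1, n_draws + 1):
        total += rng.random()          # one simulated Uniform(0, 1) measurement
        averages.append(total / i)
    return averages


averages = running_averages(100_000)
for n in (10, 1_000, 100_000):
    print(n, round(averages[n - 1], 4))
```

The printed averages should drift toward 0.5 as the number of measurements grows.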

Defn: Let X be a continuous r.v. with p.d.f. f(x), and mean μ. The variance of X, or the variance of the distribution of X, is given by

$$\sigma^2 = V(X) = E\left[(X - \mu)^2\right] = \int_{-\infty}^{+\infty} (x - \mu)^2 f(x)\,dx.$$

The standard deviation of X is just the square root of the variance.

Note: In practice, it is easier to use the computational formula for

the variance, rather than the defining formula:

$$\sigma^2 = E(X^2) - \mu^2 = \int_{-\infty}^{+\infty} x^2 f(x)\,dx - \mu^2.$$

Use of this formula, rather than the defining formula, often prevents

errors in calculations.
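To see that the defining and computational formulas agree, the sketch below evaluates both numerically for a hypothetical density f(x) = 2x on (0, 1) (an assumption made for illustration, not a textbook exercise). The exact answers are a mean of 2/3 and a variance of 1/18.

```python
def integrate(f, lo: float, hi: float, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    width = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * width) for i in range(steps)) * width


def pdf(x: float) -> float:
    """Hypothetical density on (0, 1); only evaluated inside that interval."""
    return 2.0 * x


mean = integrate(lambda x: x * pdf(x), 0.0, 1.0)

# Defining formula: integrate (x - mu)^2 f(x).
var_defining = integrate(lambda x: (x - mean) ** 2 * pdf(x), 0.0, 1.0)

# Computational formula: E(X^2) - mu^2.
var_computational = integrate(lambda x: x * x * pdf(x), 0.0, 1.0) - mean ** 2

print(round(mean, 6), round(var_defining, 6), round(var_computational, 6))
```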

Example: p. 124, Exercise 5.11


The Normal Distribution

The normal distribution is a special type of bell-shaped curve.

Defn: A random variable X is said to be normally distributed or to have a normal distribution if its p.d.f. has the form

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}, \quad \text{for } -\infty < x < \infty,\ -\infty < \mu < \infty, \text{ and } \sigma > 0.$$

Here μ and σ are the parameters of the distribution; μ = the mean of the random variable X (or of the distribution of X); and σ = the standard deviation of X (or of the distribution of X).

Note: The normal distribution is not just a single distribution, but

rather a family of distributions; each member of the family is

characterized by a particular pair of values of μ and σ. For

shorthand, we will write X ~ Normal(µ, σ) to mean that the

continuous random variable X has a normal distribution with mean

µ and standard deviation σ.

The graph of the p.d.f. has the following characteristics:

1) It is a bell-shaped curve;

2) It is symmetric about μ;

3) The inflection points are at μ − σ and μ + σ;

4) It is unimodal;

5) It is continuous on the whole real line.

The normal distribution is very important in statistics for the

following reasons:


1) Many phenomena occurring in nature or in industry have

normal, or approximately normal, distributions.

Examples:

a) heights of people in the general population of adults;

b) for a particular species of pine tree in a forest, the trunk

diameter at a point 3 feet above the ground;

c) fill weights of 12-oz. cans of Pepsi-Cola;

d) IQ scores in the general population of adults;

e) diameters of metal shafts used in disk drive units.

2) Under general conditions (independence of members of a

sample and finiteness of the population variance), the possible

values of the sample mean for samples of a given (large) size

have an approximate normal distribution (Central Limit

Theorem, to be covered later).

The Empirical Rule:

For the normal distribution,

1) The probability that X will be found to have a value in the interval (μ − σ, μ + σ) is approximately 0.6827;

2) The probability that X will be found to have a value in the interval (μ − 2σ, μ + 2σ) is approximately 0.9545;

3) The probability that X will be found to have a value in the interval (μ − 3σ, μ + 3σ) is approximately 0.9973.
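The three Empirical Rule probabilities can be checked numerically. The sketch below uses `statistics.NormalDist` from the Python standard library; the values μ = 100 and σ = 15 are arbitrary assumptions, since the result does not depend on them.

```python
from statistics import NormalDist


def prob_within(k: float, mu: float, sigma: float) -> float:
    """P(mu - k*sigma < X < mu + k*sigma) for X ~ Normal(mu, sigma)."""
    dist = NormalDist(mu, sigma)
    return dist.cdf(mu + k * sigma) - dist.cdf(mu - k * sigma)


MU, SIGMA = 100.0, 15.0   # hypothetical parameter values
within = {k: round(prob_within(k, MU, SIGMA), 4) for k in (1, 2, 3)}
print(within)
```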

Unfortunately, the p.d.f. of the normal distribution does not have a

closed-form anti-derivative. Probabilities must be calculated using

numerical integration methods. This difficulty is the reason for the

importance of a particular member of the family of normal

distributions, the standard normal distribution, which has p.d.f.

$$f(z) = \frac{1}{\sqrt{2\pi}}\, e^{-z^2/2}, \quad \text{for } -\infty < z < \infty.$$

The c.d.f. of the standard normal distribution will be denoted by

$$\Phi(z) = P(Z \le z) = \int_{-\infty}^{z} \frac{1}{\sqrt{2\pi}}\, e^{-w^2/2}\,dw.$$

Values of this function have been tabulated in the front cover of

your textbook. Alternatively, we may use the TI-83/TI-84 calculator

to find normal probabilities.

To find a normal probability using the calculator:

1) Choose 2nd, DISTR, normalcdf.

2) The calculator then needs four pieces of information: the left-hand endpoint of the interval of interest (if the left-hand endpoint is

-∞, then use 10 standard deviations below the mean), the right-hand

endpoint of the interval of interest (if the right-hand endpoint is +∞,

then use 10 standard deviations above the mean), the mean of the

distribution, and the standard deviation of the distribution. Hit

ENTER. The probability will appear.

Examples: p. 133, Exercise 5.19

a) To find the probability using the TI-83/TI-84 calculator,

$P(Z \le 1.75) = \text{normalcdf}(-10, 1.75, 0, 1) = 0.9599$.

p. 134, Exercise 5.27.

p. 134, Exercise 5.29.
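For readers without the calculator, a rough Python counterpart of the calculator procedure is sketched below. The function name `normalcdf` is chosen here only to mirror the calculator's argument order; it is not a library function, and the computation relies on `statistics.NormalDist`.

```python
from statistics import NormalDist


def normalcdf(lower: float, upper: float, mean: float = 0.0, sd: float = 1.0) -> float:
    """P(lower <= X <= upper) for X ~ Normal(mean, sd), same argument order as the TI-83/TI-84."""
    dist = NormalDist(mean, sd)
    return dist.cdf(upper) - dist.cdf(lower)


# Same inputs as the calculator example: -10 stands in for minus infinity.
p = normalcdf(-10, 1.75, 0, 1)
print(round(p, 4))
```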

The reason that the standard normal distribution is so important is that, if X ~ Normal(μ, σ), then $Z = \frac{X - \mu}{\sigma}$ ~ Normal(0, 1).


As a result, we find normal probabilities by using the standard

normal distribution as follows:

Assume that X ~ Normal(µ, σ). Then for any real numbers a ≤ b, we have

$$P(a \le X \le b) = \Phi\left(\frac{b - \mu}{\sigma}\right) - \Phi\left(\frac{a - \mu}{\sigma}\right).$$

Since we have the TI-83/TI-84 calculator, however, this procedure is

usually unnecessary.
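The standardization step can be sketched in a few lines. Here Φ is computed from the error function via the identity Φ(z) = ½[1 + erf(z/√2)]; the parameter values (μ = 50, σ = 4, a = 46, b = 58) are hypothetical and chosen so that the standardized endpoints are −1 and 2.

```python
import math


def phi(z: float) -> float:
    """Standard normal c.d.f., written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def normal_interval_prob(a: float, b: float, mu: float, sigma: float) -> float:
    """P(a <= X <= b) for X ~ Normal(mu, sigma), found by standardizing both endpoints."""
    return phi((b - mu) / sigma) - phi((a - mu) / sigma)


p = normal_interval_prob(46.0, 58.0, mu=50.0, sigma=4.0)
print(round(p, 4))   # equals phi(2) - phi(-1)
```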

In statistical inference, we will have occasion to reverse the above

procedure. Rather than finding the probability associated with a

given interval, we will want to find the end point of an interval

corresponding to a given tail probability for a standard normal

distribution. I.e., we will want to find percentiles of the standard

normal distribution, by inverting the distribution function Φ(z).

Example: p. 128, (a)

Examples:

a) Find the 90th percentile of the standard normal

distribution.

b) Find the 95th percentile of the standard normal

distribution.

c) Find the 97.5th percentile of the standard normal

distribution.
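These three percentiles can be obtained by inverting Φ numerically. The sketch below uses `NormalDist.inv_cdf` from the Python standard library, which plays the same role as the calculator's invNorm function.

```python
from statistics import NormalDist

Z = NormalDist()   # standard normal: mean 0, standard deviation 1

# Map each cumulative probability to the z-value with that much area to its left.
percentiles = {p: Z.inv_cdf(p) for p in (0.90, 0.95, 0.975)}

for p, z in percentiles.items():
    print(f"{100 * p:g}th percentile: z = {z:.4f}")
```

The results should match the familiar table values of about 1.28, 1.645, and 1.96.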

Note: It was stated in the definition that the two parameters µ and σ

are the mean and standard deviation, respectively, of the normal

distribution. This actually requires some proof. Although the p.d.f.

cannot be integrated in closed form, the mean and variance may

easily be found by integration.
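One way to carry out that integration is sketched below, using the substitution z = (x − μ)/σ. The steps are standard, but they are not spelled out in these notes, so treat this as an outline rather than the textbook's proof.

```latex
\begin{align*}
E(X) &= \int_{-\infty}^{+\infty} x\,\frac{1}{\sigma\sqrt{2\pi}}\,
        e^{-(x-\mu)^2/(2\sigma^2)}\,dx
      = \int_{-\infty}^{+\infty} (\mu + \sigma z)\,\frac{1}{\sqrt{2\pi}}\,e^{-z^2/2}\,dz \\
     &= \mu \int_{-\infty}^{+\infty} \frac{1}{\sqrt{2\pi}}\,e^{-z^2/2}\,dz
      + \sigma \int_{-\infty}^{+\infty} z\,\frac{1}{\sqrt{2\pi}}\,e^{-z^2/2}\,dz
      = \mu \cdot 1 + \sigma \cdot 0 = \mu, \\[4pt]
V(X) &= \int_{-\infty}^{+\infty} (x-\mu)^2\,\frac{1}{\sigma\sqrt{2\pi}}\,
        e^{-(x-\mu)^2/(2\sigma^2)}\,dx
      = \sigma^2 \int_{-\infty}^{+\infty} z^2\,\frac{1}{\sqrt{2\pi}}\,e^{-z^2/2}\,dz
      = \sigma^2 .
\end{align*}
```

The first integral in the second line is the total area under the standard normal p.d.f., the second vanishes because its integrand is an odd function, and the last integral equals 1 by integration by parts (take u = z and dv = z e^{−z²/2} dz).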


The Normal Approximation to the Binomial Distribution

When the number of trials in our binomial experiment is relatively

large, and the success probability, p, is close to 0.50, then we may

approximate the binomial probabilities for intervals of values using

the normal distribution.

Theorem 5.1: If X is a random variable having a binomial distribution with the parameters n and p, the limiting form of the distribution function of the standardized random variable

$$Z = \frac{X - np}{\sqrt{np(1 - p)}}$$

as n → +∞, is given by the standard normal distribution

$$\Phi(z) = \int_{-\infty}^{z} \frac{1}{\sqrt{2\pi}}\, e^{-t^2/2}\,dt, \quad -\infty < z < +\infty.$$

Note: In practice, we correct for the fact that the binomial

distribution is discrete, while the normal distribution is continuous.

The correction for continuity is as follows:

i) If we want to find $P(X \le x)$, we calculate

$$\Phi\left(\frac{x + 0.5 - np}{\sqrt{np(1 - p)}}\right).$$

ii) If we want to find $P(X \ge x)$, we calculate

$$1 - \Phi\left(\frac{x - 0.5 - np}{\sqrt{np(1 - p)}}\right).$$
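The effect of the continuity correction can be seen by comparing the approximation with the exact binomial probability. The sketch below assumes hypothetical values n = 100, p = 0.5, and x = 55; the helper names are illustrative.

```python
import math
from statistics import NormalDist


def binom_cdf(x: int, n: int, p: float) -> float:
    """Exact P(X <= x) for X ~ Binomial(n, p), summed from the p.m.f."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(x + 1))


def normal_approx_cdf(x: int, n: int, p: float, continuity: bool = True) -> float:
    """Normal approximation to P(X <= x), with or without the +0.5 correction."""
    shift = 0.5 if continuity else 0.0
    z = (x + shift - n * p) / math.sqrt(n * p * (1 - p))
    return NormalDist().cdf(z)


n, p, x = 100, 0.5, 55   # hypothetical example values
exact = binom_cdf(x, n, p)
corrected = normal_approx_cdf(x, n, p)
uncorrected = normal_approx_cdf(x, n, p, continuity=False)
print(round(exact, 4), round(corrected, 4), round(uncorrected, 4))
```

In this setting the corrected value should land much closer to the exact probability than the uncorrected one.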

Example: p. 132.


The Uniform Distribution

Consider a continuous r.v. X whose distribution has p.d.f.

$$f(x) = \frac{1}{b - a}, \text{ for } a < x < b, \quad \text{and } f(x) = 0 \text{ otherwise.}$$

We say that X has a uniform distribution on the interval (a, b), abbreviated X ~ Uniform(a, b). If we take a measurement of X, we are equally likely to obtain any value within the interval. Hence, for some subinterval $(c, d) \subseteq (a, b)$, we have

$$P(c < X < d) = \int_c^d \frac{1}{b - a}\,dx = \frac{d - c}{b - a}.$$

The mean of the uniform distribution is $\mu = \frac{a + b}{2}$, the midpoint of the interval (a, b).

The variance is $\sigma^2 = \frac{(b - a)^2}{12}$, and the standard deviation is $\sigma = \frac{b - a}{2\sqrt{3}}$.

Note: The longer the interval (a, b), the larger the values of the

variance and standard deviation.
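A short numerical sketch of the uniform formulas follows, assuming a hypothetical interval (a, b) = (2, 10) and subinterval (c, d) = (3, 5).

```python
import math


def uniform_prob(c: float, d: float, a: float, b: float) -> float:
    """P(c < X < d) for X ~ Uniform(a, b), assuming a <= c <= d <= b."""
    return (d - c) / (b - a)


a, b = 2.0, 10.0                      # hypothetical interval endpoints
mean = (a + b) / 2                    # midpoint of (a, b)
variance = (b - a) ** 2 / 12
sd = (b - a) / (2 * math.sqrt(3))     # should equal sqrt(variance)
p = uniform_prob(3.0, 5.0, a, b)
print(mean, round(variance, 4), round(sd, 4), p)
```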

Note: The uniform distribution on (0,1) is used as the basis for any

random number generator.

Example: p. 144, Exercise 5.46.

Lognormal Distribution

Defn: We say that a continuous r.v. X has a lognormal distribution with parameters μ and σ² if the natural logarithm of X has a normal distribution. The p.d.f. of X is

$$f(x) = \frac{1}{x\sigma\sqrt{2\pi}} \exp\left[-\frac{(\ln x - \mu)^2}{2\sigma^2}\right], \quad \text{for } 0 < x < \infty,$$

and $f(x) = 0$, for $x \le 0$. The mean and variance of X are

$$E(X) = e^{\mu + \frac{1}{2}\sigma^2} \quad \text{and} \quad V(X) = e^{2\mu + \sigma^2}\left(e^{\sigma^2} - 1\right).$$

These may easily be seen by using a change of variable and the results for the mean and variance of the normal distribution. The parameters μ and σ² are the mean and variance of the r.v. W = ln(X).

We write X ~ lognormal(μ, σ²) to denote that X has a lognormal distribution with parameters μ and σ².

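Note: The c.d.f. for X is given by

$$F(x) = P(X \le x) = P(W \le \ln x) = P\left(Z \le \frac{\ln x - \mu}{\sigma}\right) = \Phi\left(\frac{\ln x - \mu}{\sigma}\right),$$

for x > 0, and F(x) = 0, for x ≤ 0. Hence, we may find probabilities associated with X by using Table 1 in Appendix A.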
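Instead of the table, the lognormal c.d.f. can be evaluated directly. The sketch below follows the relationship X = exp(W) with W ~ Normal(μ, σ); the function names and the parameter values μ = 0, σ = 1 are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def lognormal_cdf(x: float, mu: float, sigma: float) -> float:
    """P(X <= x) when ln(X) ~ Normal(mu, sigma); zero for x <= 0."""
    if x <= 0.0:
        return 0.0
    return NormalDist().cdf((math.log(x) - mu) / sigma)


def lognormal_mean(mu: float, sigma: float) -> float:
    """E(X) = exp(mu + sigma^2 / 2)."""
    return math.exp(mu + 0.5 * sigma**2)


def lognormal_var(mu: float, sigma: float) -> float:
    """V(X) = exp(2*mu + sigma^2) * (exp(sigma^2) - 1)."""
    return math.exp(2 * mu + sigma**2) * (math.exp(sigma**2) - 1.0)


# With mu = 0 and sigma = 1, P(X <= e) is the same as P(Z <= 1).
print(round(lognormal_cdf(math.e, 0.0, 1.0), 4))
print(round(lognormal_mean(0.0, 1.0), 4), round(lognormal_var(0.0, 1.0), 4))
```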

Note: This distribution is often applied to model the lifetimes of

systems that degrade over time.

Example: p. 138.

Gamma Distribution

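Defn: The gamma function is defined by the integral

$$\Gamma(\alpha) = \int_0^{\infty} t^{\alpha - 1} e^{-t}\,dt, \quad \text{for } \alpha > 0.$$

It may be shown using integration by parts that $\Gamma(\alpha) = (\alpha - 1)\Gamma(\alpha - 1)$. Hence, in particular, if α is a positive integer, $\Gamma(\alpha) = (\alpha - 1)!$. We also have $\Gamma(0.5) = \sqrt{\pi}$.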
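The stated properties of the gamma function can be spot-checked with `math.gamma` from the Python standard library. The non-integer test value α = 3.7 is an arbitrary choice.

```python
import math

# Gamma(n) = (n - 1)! for positive integers n.
factorial_ok = all(
    math.isclose(math.gamma(n), math.factorial(n - 1)) for n in range(1, 11)
)

# Gamma(0.5) should equal the square root of pi.
half = math.gamma(0.5)

# Recursion Gamma(alpha) = (alpha - 1) * Gamma(alpha - 1) at a non-integer alpha.
alpha = 3.7
recursion_gap = math.gamma(alpha) - (alpha - 1) * math.gamma(alpha - 1)

print(factorial_ok, half, math.sqrt(math.pi), recursion_gap)
```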

Defn: A continuous r.v. X is said to have a gamma distribution with parameters α > 0 and β > 0 if the p.d.f. of X is

$$f(x) = \frac{1}{\beta^{\alpha}\,\Gamma(\alpha)}\, x^{\alpha - 1} e^{-x/\beta}, \quad \text{for } x > 0, \text{ and } f(x) = 0, \text{ for } x \le 0.$$

The mean and variance of X are given by $\mu = E(X) = \alpha\beta$ and $\sigma^2 = V(X) = \alpha\beta^2$. We write X ~ Gamma(α, β) to denote that X has a gamma distribution with parameters α and β.

It may be easily shown that the integral of the gamma p.d.f. over the

interval (0, +∞) is 1, using the definition of the gamma function.
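A sketch of that calculation, assuming the gamma p.d.f. with scale parameter β as defined in this section and using the substitution t = x/β (so that dx = β dt):

```latex
\begin{align*}
\int_0^{\infty} \frac{1}{\beta^{\alpha}\,\Gamma(\alpha)}\, x^{\alpha-1} e^{-x/\beta}\,dx
  &= \frac{1}{\beta^{\alpha}\,\Gamma(\alpha)}
     \int_0^{\infty} (\beta t)^{\alpha-1} e^{-t}\,\beta\,dt \\
  &= \frac{\beta^{\alpha}}{\beta^{\alpha}\,\Gamma(\alpha)}
     \int_0^{\infty} t^{\alpha-1} e^{-t}\,dt
   = \frac{\Gamma(\alpha)}{\Gamma(\alpha)} = 1 .
\end{align*}
```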

The gamma distribution is very important in statistical inference,

both in its own right and because it is the basis for constructing

some other distributions useful in inference. For example, the

“signal-to-noise” ratio statistic that we will use in analyzing the

results of scientific experiments is based on a ratio of random

variables which have gamma distributions of a particular form.

The graphs of some gamma p.d.f.’s are shown on p. 139.

Example: p. 144, Exercise 5.53.

Defn: A continuous r.v. X is said to have a chi-square distribution with k degrees of freedom if X ~ Gamma(α = k/2, β = 2).


The chi-square distribution is important in the analysis of data from

scientific experiments. The “signal-to-noise” ratio that is used to

decide whether the experimental treatments had differing effects is

proportional to a ratio of two random variables, each of which has a

chi-square distribution.

Defn: A continuous r.v. X is said to have an exponential distribution with mean β if its p.d.f. is given by

$$f(x) = \begin{cases} \dfrac{1}{\beta}\, e^{-x/\beta}, & 0 \le x < +\infty \\ 0, & \text{otherwise.} \end{cases}$$

Examples: p. 141.

Weibull Distribution

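Defn: A continuous r.v. X is said to have a Weibull distribution with parameters α > 0 and β > 0 if the p.d.f. of X is

$$f(x) = \alpha\beta x^{\beta - 1} \exp\left(-\alpha x^{\beta}\right), \quad \text{for } x > 0,$$

and $f(x) = 0$, for $x \le 0$. The mean and variance of X are

$$\mu = E(X) = \alpha^{-1/\beta}\,\Gamma\left(1 + \frac{1}{\beta}\right)$$

and

$$\sigma^2 = V(X) = \alpha^{-2/\beta}\left\{\Gamma\left(1 + \frac{2}{\beta}\right) - \left[\Gamma\left(1 + \frac{1}{\beta}\right)\right]^2\right\}.$$

We write X ~ Weibull(α, β).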


The c.d.f. for a Weibull(α, β) distribution is given by $F(x) = 1 - \exp\left(-\alpha x^{\beta}\right)$, for x > 0, and F(x) = 0, for x ≤ 0.

The Weibull distribution is used to model the reliability of many

different types of physical systems. Different combinations of

values of the two parameters lead to models with either a) increasing

failure rates over time, b) decreasing failure rates over time, or c)

constant failure rates over time.

Example: In the paper, “Snapshot: a plot showing progress through

a device development laboratory” (D. Lambert, J. Landwehr, and M.

Shyu, Statistical Case Studies for Industrial Process Improvement,

ASA-SIAM 1997), the authors suggest using a Weibull distribution

to model the length of a baking step in the manufacture of a

semiconductor. Let T represent the length (in hours) of the baking

step for a randomly chosen lot of semiconductor. Then T ~

Weibull(α = 0.3, β = 0.1). What is the probability that the baking

step takes at least 4 hours?

We want to find P(T ≥ 4 hours) = 1 − P(T < 4 hours). We use

the Weibull c.d.f. to find this probability.
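The calculation can be sketched as follows, assuming the Weibull c.d.f. in the parameterization used in these notes, F(x) = 1 − exp(−αx^β), with α = 0.3 and β = 0.1. The function name `weibull_cdf` is illustrative.

```python
import math


def weibull_cdf(x: float, alpha: float, beta: float) -> float:
    """F(x) = 1 - exp(-alpha * x**beta) for x > 0, and 0 otherwise."""
    if x <= 0.0:
        return 0.0
    return 1.0 - math.exp(-alpha * x**beta)


# P(T >= 4) = 1 - P(T < 4); for a continuous r.v. P(T < 4) = F(4).
p_at_least_4 = 1.0 - weibull_cdf(4.0, alpha=0.3, beta=0.1)
print(round(p_at_least_4, 4))
```

Under these assumptions the probability comes out at roughly 0.71.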

Checking to See Whether the Data Are Normal

A simple way to assess the fit of a particular probability distribution

to a data set is to superimpose the p.d.f. of the distribution on a

relative frequency histogram of the data.

A better method uses a graph which plots quantiles of the proposed

distribution against the corresponding quantiles of the data set.

Defn: The pth quantile of a data set is the smallest number such that

the fraction of the data values less than that number is p.


Defn: The pth quantile of the distribution of a continuous r.v. X is

the smallest number x such that F(x) = p.

Defn: For a random sample of size n, consisting of observed values

x1, x2, …, xn, the ith order statistic is the ith data value when the data

values are ordered from smallest to largest.

The cumulative relative frequency associated with the ith order statistic is $\frac{i - 0.5}{n}$.

The general procedure for constructing a probability plot (or a

quantile-quantile plot) is as follows:

1) Sort the data in ascending order.

2) For the sample size n, calculate the cumulative relative

frequencies.

3) Invert the assumed distribution function to find the quantiles

associated with the cumulative relative frequencies.

4) Do a scatterplot of the order statistics of the data versus the

quantiles of the distribution.

Constructing a normal probability plot

If we have a set of data consisting of observed values x1, x2, …, xn,

and we want to decide whether it is reasonable to assume that the

data were sampled from a normal distribution, we proceed as

follows:

1) Sort the data from smallest to largest, yielding the order

statistics x(1), x(2), …, x(n).

2) Calculate the standardized normal scores $z_{(i)} = \Phi^{-1}\left(\frac{i - 0.5}{n}\right)$, for each i = 1, 2, …, n, using the standard normal table, or using the NORMINV function in Excel, or using the invNorm function of the TI-83/TI-84 calculator.

3) Plot the order statistics of the data set against the corresponding

standardized normal scores on regular graph paper.

If the plotted points lie near a straight line, then it is reasonable to

assume that the data were sampled from a normal distribution.
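The steps for the normal probability plot can be sketched in Python, with `NormalDist.inv_cdf` taking the place of NORMINV or invNorm. The sample values are hypothetical, and the function names are illustrative; the resulting pairs would then be drawn as a scatterplot with any plotting tool.

```python
from statistics import NormalDist


def normal_scores(n: int) -> list[float]:
    """Standardized normal scores z_(i) = Phi^{-1}((i - 0.5) / n), i = 1..n."""
    z = NormalDist()
    return [z.inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]


def probability_plot_points(data: list[float]) -> list[tuple[float, float]]:
    """Pair each normal score with the matching order statistic of the data."""
    order_stats = sorted(data)                     # step 1: sort the data
    scores = normal_scores(len(order_stats))       # step 2: normal scores
    return list(zip(scores, order_stats))          # step 3: points to plot


sample = [4.1, 5.3, 3.8, 6.0, 4.9]                 # hypothetical measurements
points = probability_plot_points(sample)
for score, value in points:
    print(f"{score:8.4f}  {value}")
```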

Example: Handout