Automated Generation of Benchmarks with High Discriminatory

advertisement

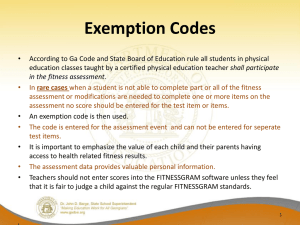

Proceedings of the 7th Annual ISC Graduate Research Symposium
ISC-GRS 2013
April 24, 2013, Rolla, Missouri

AUTOMATED GENERATION OF BENCHMARKS WITH HIGH DISCRIMINATORY POWER FOR SPECIFIC SETS OF BLACK BOX SEARCH ALGORITHMS

Matthew Nuckolls
Department of Computer Science
Missouri University of Science and Technology, Rolla, MO 65409

ABSTRACT

Determining the best black box search algorithm (BBSA) to use on any given optimization problem is difficult. It is self-evident that, on a given problem, one BBSA will perform better than another, but determining a priori which BBSA will perform better is a task for which we currently lack theoretical underpinnings. A system could be developed that uses heuristic measures to compare a given optimization problem to a library of benchmark problems, where the best-performing BBSA is known for each problem in the library. This paper describes a methodology for automatically generating benchmarks for inclusion in that library, via evolution of NK-Landscapes.

1. INTRODUCTION

Some BBSAs lend themselves to straightforward generation of a benchmark problem. For example, a Hill Climber, faced with a hill leading to a globally optimal solution, can be expected to climb the hill rapidly and efficiently, and furthermore to reach the top faster than an algorithm that considers the possibility that going downhill may lead to a better solution. For other BBSAs, however, constructing a benchmark problem on which that BBSA will outperform all others is a non-trivial task. Imperfect understanding of the interactions among the many moving parts of a modern search algorithm leads to an imperfect understanding of which problems any given BBSA is best suited to. A method for automatically generating a benchmark problem suited to an arbitrary BBSA would allow the user to assemble a library of benchmark problems.
A first step towards building a benchmark on which an arbitrary BBSA beats all other BBSAs is a benchmark on which an arbitrary BBSA beats all other BBSAs in a small set.

2. BLACK BOX SEARCH ALGORITHMS

Several standard BBSAs, spanning a spectrum of behaviors, were chosen for inclusion in the set. Finding benchmark problems for a variety of BBSAs strengthens the case that this methodology can build a library of benchmark problems.

Random Search (RA) simply generates random individuals and records their fitness until it runs out of evaluations. The individual with the highest fitness found is deemed optimal. This BBSA is not expected to beat any other BBSA; however, one consequence of the No Free Lunch Theorem [1] is that we should be able to find a benchmark problem on which none of the other included BBSAs can do better than Random Search.

Hill Climber (HC) is a steepest-ascent restarting hill climber. It starts from a random location, and at each time step it examines all of its neighbors and moves to the neighbor with the highest fitness. If it finds itself at a peak (a point at which all neighbors are downhill), it starts over from a new random location. Note that this algorithm is vulnerable to plateaus: on a plateau it will wander rather than restart.

At each time step, Simulated Annealing [2] (SA) picks a random neighbor. If that neighbor has a higher fitness than the current location, SA moves to that location. If that neighbor has a lower or equal fitness, SA may still move to that location, with a probability determined by a 'cooling schedule'. Earlier in the run, SA is more likely to move downhill. SA does not restart. As implemented in this paper, SA uses a linear cooling schedule, so that it is more likely to explore at the beginning of the run and more likely to exploit at the end.
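The three simpler baselines above can be sketched as follows. This is a minimal sketch, not the author's code, and it assumes several things the text leaves open: individuals are tuples of bits, a neighbor differs from the current point in exactly one bit, the budget counts fitness evaluations, and SA accepts a non-improving move with the Metropolis probability exp(delta / T) under a temperature that falls linearly from a hypothetical starting value `t0`. The helper names (`random_bits`, `flip`, and so on) are illustrative.

```python
import math
import random
from typing import Callable, Tuple

Bits = Tuple[int, ...]
Fitness = Callable[[Bits], float]


def random_bits(n: int, rng: random.Random) -> Bits:
    return tuple(rng.randint(0, 1) for _ in range(n))


def flip(x: Bits, i: int) -> Bits:
    """Neighbor of x obtained by flipping bit i (assumed neighborhood)."""
    return x[:i] + (1 - x[i],) + x[i + 1:]


def random_search(f: Fitness, n: int, budget: int, rng: random.Random) -> float:
    """RA: evaluate `budget` uniformly random bit strings, keep the best."""
    return max(f(random_bits(n, rng)) for _ in range(budget))


def hill_climber(f: Fitness, n: int, budget: int, rng: random.Random) -> float:
    """HC: steepest-ascent hill climber that restarts at peaks."""
    x = random_bits(n, rng)
    fx = f(x)
    used, best = 1, fx
    while used + n <= budget:
        neighbors = [flip(x, i) for i in range(n)]
        scores = [f(y) for y in neighbors]
        used += n
        j = max(range(n), key=lambda i: scores[i])
        if scores[j] < fx:
            # Peak: every neighbor is strictly downhill, so restart at random.
            if used >= budget:
                break
            x = random_bits(n, rng)
            fx = f(x)
            used += 1
        else:
            # Equal-fitness moves are taken, so HC wanders on plateaus.
            x, fx = neighbors[j], scores[j]
        best = max(best, fx)
    return best


def simulated_annealing(f: Fitness, n: int, budget: int, rng: random.Random,
                        t0: float = 1.0) -> float:
    """SA: random single-bit neighbor, linear cooling, no restarts."""
    x = random_bits(n, rng)
    fx = f(x)
    best = fx
    for step in range(1, budget):
        temp = t0 * (1.0 - step / budget)  # falls linearly from t0 toward 0
        y = flip(x, rng.randrange(n))
        fy = f(y)
        delta = fy - fx
        # Uphill moves are always taken; others with Metropolis probability.
        if delta > 0 or rng.random() < math.exp(delta / temp):
            x, fx = y, fy
        best = max(best, fx)
    return best
```

On a OneMax-style fitness (the number of 1 bits), `hill_climber(lambda x: float(sum(x)), 8, 500, random.Random(0))` reaches 8.0. Accepting equal-fitness moves is what makes this HC wander on a plateau instead of restarting, matching the vulnerability noted above.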
As implemented for this paper, the Evolutionary Algorithm [3] (EA) is a mu + lambda evolutionary algorithm (mu = 100, lambda = 10), using linear ranking (s = 2.0) with stochastic universal sampling for both parent selection and survival selection.

3. N-K LANDSCAPES

When using NK-landscapes [4], an experiment is typically set up to use a large number of randomly generated landscapes, lessening the impact of any one landscape on the results. This line of research, however, is explicitly searching for fitness landscapes that have an outsized impact. Each NK-landscape generated is evaluated on its ability to discriminate amongst a set of search algorithms. A high-scoring landscape is one that shows a clear preference for one of the search algorithms, such that the chosen algorithm consistently finds a better solution than all other algorithms in the set. This is determined via the following methodology.

3.1. Implementation

In this paper, NK-landscapes are implemented as a pair of lists. The first list is the neighbors list. It is n elements long, where each element is a list of k+1 integer indexes. Each element of the i'th inner list is a neighbor of i, and participates in the calculation of the i'th component of the overall fitness. An important implementation detail is that the first element of the i'th inner list is i, for all i. Making the element under consideration part of the underlying data (as opposed to a special case) simplifies and regularizes the code, an important consideration when metaprogramming is used. A second important implementation detail is that no index may appear more than once in any inner list. This forces the importance of a single index to be visible in the second list, allowing for easier analysis. The first list is called the neighborses list, to indicate the nested plurality of its structure.

The second list is the subfunctions list.
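A minimal sketch of how a neighborses list satisfying both invariants might be built and checked. It assumes the non-self neighbors are drawn uniformly at random without replacement, since the text does not say how initial neighbors are chosen; `random_neighborses` and `check_neighborses` are hypothetical names.

```python
import random
from typing import List


def random_neighborses(n: int, k: int, rng: random.Random) -> List[List[int]]:
    """Build n inner lists of k + 1 distinct indexes, each starting with its own index."""
    if not 0 <= k <= n - 1:
        raise ValueError("need 0 <= k <= n - 1 for k + 1 distinct indexes")
    neighborses = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        # Invariant 1: i itself comes first. Invariant 2: no repeats, because
        # the k extra neighbors are sampled without replacement from others.
        neighborses.append([i] + rng.sample(others, k))
    return neighborses


def check_neighborses(neighborses: List[List[int]], k: int) -> bool:
    """True if every inner list has k + 1 distinct entries and starts with its index."""
    return all(
        len(inner) == k + 1 and inner[0] == i and len(set(inner)) == k + 1
        for i, inner in enumerate(neighborses)
    )
```

Prepending the self index rather than sampling it guarantees that it always occupies position 0, which is the regularity the metaprogramming relies on.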
The subfunctions list is used in conjunction with the neighborses list to determine the overall fitness of the individual under evaluation. It is implemented as a list of n key-value stores. Each key in a key-value store is a binary tuple of length k+1 (one bit for each index in the corresponding inner list of neighborses), and every possible such tuple is represented in every key-value store. For example, if k is 1, then the possible keys are (0, 0), (0, 1), (1, 0), and (1, 1). The values for each key are real numbers, both positive and negative.

3.2. Evaluation of a Bit String Individual

To evaluate an individual, the system runs down the pair of lists simultaneously. For each element of neighborses, it extracts the binary values of the individual at the listed indexes and assembles them into a single tuple. It then looks up that tuple in the corresponding key-value store of the subfuncs list. The sum of the values found across all positions is the fitness of that individual in the context of this NK-landscape.

Part of the design consideration for this structure was ease of metaprogramming for CUDA [5]. The components of the lists plug into a string template of C++ code, which is then compiled into a CUDA kernel. This kernel can then be run against a large number of individuals simultaneously. This approach is not expected to be as fast as a hand-tuned CUDA kernel that makes proper use of the various memory subsystems available; however, it has proven faster than running the fitness evaluations on the CPU, given a sufficiently large number of individuals in need of evaluation.

3.3. Evolutionary Operators

The search algorithm chosen to guide the modification of the NK-landscapes is a canonical mu + lambda evolutionary algorithm, with stochastic universal sampling used for both parent selection and survival selection.
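The representation and evaluation procedure of Sections 3.1 and 3.2 can be sketched in Python, together with a toy version of the string-template idea. This is an illustrative sketch, not the author's template: the uniform initial values in [-1, 1], the kernel name `nk_fitness`, its signature, and the one-byte-per-bit population layout are all assumptions. Each lookup key has one bit per listed index, so with k+1 indexes per inner list a store holds 2^(k+1) keys. Keys are generated with `itertools.product`, whose lexicographic order lets the generated C++ turn the looked-up bits into an array index by shifting them in, first listed index first.

```python
import itertools
import random
from typing import Dict, List, Sequence, Tuple

Key = Tuple[int, ...]


def random_subfuncs(n: int, k: int, rng: random.Random) -> List[Dict[Key, float]]:
    """n key-value stores; each holds every binary key of length k + 1."""
    return [
        {key: rng.uniform(-1.0, 1.0)
         for key in itertools.product((0, 1), repeat=k + 1)}
        for _ in range(n)
    ]


def evaluate(bits: Sequence[int], neighborses: List[List[int]],
             subfuncs: List[Dict[Key, float]]) -> float:
    """Run down both lists together and sum the looked-up sub-function values."""
    total = 0.0
    for inner, table in zip(neighborses, subfuncs):
        key = tuple(bits[j] for j in inner)  # bits at the listed indexes
        total += table[key]
    return total


def cuda_source(neighborses: List[List[int]],
                subfuncs: List[Dict[Key, float]]) -> str:
    """Fill a (hypothetical) C++/CUDA string template with this landscape's tables."""
    lines = [
        "__global__ void nk_fitness(const unsigned char* pop, float* out,",
        "                           int count, int n) {",
        "  int idx = blockIdx.x * blockDim.x + threadIdx.x;",
        "  if (idx >= count) return;",
        "  const unsigned char* x = pop + idx * n;  // one byte per bit",
        "  float total = 0.0f;",
    ]
    for inner, table in zip(neighborses, subfuncs):
        # itertools.product order puts the first listed index in the high bit.
        keys = itertools.product((0, 1), repeat=len(inner))
        values = ", ".join(f"{table[key]:.9e}f" for key in keys)
        lines.append(f"  {{ const float t[{2 ** len(inner)}] = {{{values}}};")
        lines.append("    int key = 0;")
        for j in inner:
            lines.append(f"    key = (key << 1) | x[{j}];")
        lines.append("    total += t[key]; }")
    lines += ["  out[idx] = total;", "}"]
    return "\n".join(lines)
```

In this sketch every value is baked into the kernel as a constant table, so each new landscape needs its own generated and compiled kernel; the Python `evaluate` and the generated kernel compute the same sum because both index the tables in the same bit order.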
Such an algorithm needs to be able to mutate an individual, perform genetic crossover between individuals, and determine the fitness of an individual. Mutation and crossover are intrinsic to the representation of the individual, and will be covered first. Fitness evaluation is left for a later section.

Mutation of an NK-landscape is performed in three ways, and during any given mutation event all, some, or none of the three ways may be used. The first mutation method alters the inner list at a single location in neighborses. This never alters the length of the list, nor may it ever alter the first element in the list. The second mutation method alters the subfunction at a single location. All possible tuple keys are still present, but the values associated with those keys are altered by a random amount. The third mutation method alters k.

When k is increased, each inner list of neighborses gains one randomly chosen neighbor, with care taken that no neighbor can appear in the same list twice and that no inner list can grow beyond n entries (so k never exceeds n - 1). Increasing k by 1 doubles the size of each subfunction key-value store, since each key in the parent has two corresponding keys in the child, one ending in 0 and the other ending in 1. For example, the key (0, 1) in the original NK-landscape needs corresponding entries for (0, 1, 0) and (0, 1, 1) in the mutated NK-landscape. This implementation starts with the value of the original key and alters it by a different random amount for each entry in the mutated NK-landscape.

When k is decreased, a single position in the inner lists is randomly chosen, and the neighbor found at that position is removed from each of the lists. Care is taken that the first neighbor is never removed, so k can never be less than zero. Each entry in the resulting subfuncs then has two parents; for example, if the second position in the inner lists is chosen, then both (0, 1, 0) and (0, 0, 0) map to (0, 0) in the mutated NK-landscape.
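The mutation operators described above can be sketched as follows, operating in place on a (neighborses, subfuncs) pair. This is a minimal sketch under stated assumptions: the random perturbations are Gaussian with a hypothetical `sigma`, each of the three methods fires independently with a hypothetical probability `p`, and increasing versus decreasing k is a coin flip. New neighbors are appended at the end of each inner list, so child keys extend parent keys by one trailing bit, and a decrease averages the two parent values that collapse onto each child key, which is the rule this implementation uses.

```python
import random
from typing import Dict, List, Tuple

Key = Tuple[int, ...]
Neighborses = List[List[int]]
Subfuncs = List[Dict[Key, float]]


def mutate_neighbor(neighborses: Neighborses, rng: random.Random) -> None:
    """Method 1: replace one non-first neighbor at one location."""
    n = len(neighborses)
    inner = neighborses[rng.randrange(n)]
    if len(inner) < 2 or len(inner) == n:
        return  # k == 0, or every index is already in the list
    pos = rng.randrange(1, len(inner))  # position 0 (the index itself) is fixed
    inner[pos] = rng.choice([j for j in range(n) if j not in inner])


def mutate_subfunc(subfuncs: Subfuncs, rng: random.Random, sigma: float = 0.1) -> None:
    """Method 2: perturb every value of one key-value store; keys are unchanged."""
    table = subfuncs[rng.randrange(len(subfuncs))]
    for key in table:
        table[key] += rng.gauss(0.0, sigma)


def increase_k(neighborses: Neighborses, subfuncs: Subfuncs,
               rng: random.Random, sigma: float = 0.1) -> None:
    """Method 3a: append one new neighbor everywhere and double every store."""
    n = len(neighborses)
    if len(neighborses[0]) >= n:
        return  # k is already n - 1
    for inner in neighborses:
        inner.append(rng.choice([j for j in range(n) if j not in inner]))
    for p, table in enumerate(subfuncs):
        # Each parent key spawns two child keys (trailing 0 and 1), each with
        # its own random offset from the parent value.
        subfuncs[p] = {key + (bit,): value + rng.gauss(0.0, sigma)
                       for key, value in table.items() for bit in (0, 1)}


def decrease_k(neighborses: Neighborses, subfuncs: Subfuncs,
               rng: random.Random) -> None:
    """Method 3b: drop one non-first position everywhere; average merged keys."""
    if len(neighborses[0]) < 2:
        return  # k is already 0
    pos = rng.randrange(1, len(neighborses[0]))
    for inner in neighborses:
        del inner[pos]
    for p, table in enumerate(subfuncs):
        parents: Dict[Key, List[float]] = {}
        for key, value in table.items():
            parents.setdefault(key[:pos] + key[pos + 1:], []).append(value)
        subfuncs[p] = {key: sum(vs) / len(vs) for key, vs in parents.items()}


def mutate(neighborses: Neighborses, subfuncs: Subfuncs,
           rng: random.Random, p: float = 0.5) -> None:
    """Apply all, some, or none of the three methods, each with probability p."""
    if rng.random() < p:
        mutate_neighbor(neighborses, rng)
    if rng.random() < p:
        mutate_subfunc(subfuncs, rng)
    if rng.random() < p:
        change_k = increase_k if rng.random() < 0.5 else decrease_k
        change_k(neighborses, subfuncs, rng)
```

Because every inner list gains or loses a neighbor at the same position, all inner lists keep the same length k+1 and every store keeps exactly 2^(k+1) keys, which is what the single-point crossover between landscapes of identical n and k relies on.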
For each key-value store in the subfuncs list, this implementation averages the values of the two parent keys.

Genetic crossover is possible in this implementation only between individuals of identical n and k, via single-point crossover of the neighborses and subfuncs lists. The system therefore depends on mutation to alter k, and holds n constant during any given system run.

3.4. Evaluation of NK-Landscape Fitness

The NK-Landscape manipulation infrastructure is used to evolve landscapes that clearly favor a given search algorithm over all other algorithms in a set. Accordingly, a fitness score must be assigned to each NK-Landscape in a population, so that natural selection can favor the better landscapes, guiding the meta-search towards an optimal landscape for the selected search algorithm.

This implementation defines the fitness of an NK-landscape as follows. First, the 'performance' of each search algorithm is found. Performance is defined as the mean, across 30 runs, of the fitness of the best solution found in each run. While performance is being calculated, all search algorithms also record the fitness of the worst individual they ever encountered in the NK-landscape. This provides a heuristic estimate of an unknown value: the fitness of the worst possible individual in the NK-landscape. Once a performance value has been calculated for every search algorithm in the set, the performance values and the 'worst ever encountered' value are linearly scaled into the range [0, 1], such that the worst ever encountered value maps to zero and the best ever encountered value maps to one. This provides a relative measure of the performance of the various search algorithms, and allows fair comparisons between NK-landscapes.

4. NK-LANDSCAPE FITNESS COMPARISONS

Once each search algorithm has a normalized performance value, the system needs to judge how well the NK-landscape 'clearly favors a given search algorithm over all other algorithms in the set'.
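The normalization of Section 3.4 and the two comparison heuristics of Sections 4.1 and 4.2 reduce to a few lines. In this sketch the best and worst ever-encountered fitness values are passed in by the caller, algorithm names are plain strings, and the function names are hypothetical.

```python
from typing import Dict


def normalize(performance: Dict[str, float], worst: float,
              best: float) -> Dict[str, float]:
    """Linearly rescale mean performances so worst -> 0.0 and best -> 1.0."""
    span = best - worst
    if span <= 0:
        return {name: 0.0 for name in performance}  # flat: nothing to separate
    return {name: (value - worst) / span for name, value in performance.items()}


def one_vs_all(scaled: Dict[str, float], favored: str) -> float:
    """Section 4.1: the favored algorithm's smallest margin over any rival."""
    return min(scaled[favored] - value
               for name, value in scaled.items() if name != favored)


def pairwise(scaled: Dict[str, float], favored: str, rival: str) -> float:
    """Section 4.2: the favored algorithm's margin over one rival."""
    return scaled[favored] - scaled[rival]
```

The plateau problem of the One versus All heuristic is visible directly: with scaled scores EA = 1.0, HC = 0.75, SA = 0.5, RA = 0.25, changing SA's score leaves `one_vs_all(scaled, "EA")` at 0.25 as long as HC still holds the smallest margin, whereas `pairwise(scaled, "EA", "SA")` responds immediately.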
This implementation tried two approaches, one more successful than the other.

4.1. One versus All

The first heuristic used in this implementation was to calculate the differences between the performance of the favored algorithm and the performance of each of the other algorithms, and then take the minimum of those differences as the fitness of the NK-landscape. Note that this fitness ranges from -1 to +1. A fitness of +1 would correspond to an NK-landscape where the best individual found by every non-favored algorithm has fitness identical to the 'worst ever encountered' individual, while the favored algorithm finds any individual better than that.

This approach suffered because it needed a 'multiple coincidence' to make any forward progress. The use of the minimum function meant that an NK-landscape needed to clearly favor one algorithm over all others before its fitness improved. Favoring a pair of algorithms over all others was indistinguishable from not favoring any algorithm at all, so there was no gradient for the meta-search algorithm to climb.

4.2. Pairwise Comparisons

Making pairwise comparisons, so that the meta-search algorithm simply compares the normalized performance of two search algorithms, proved to provide a better gradient. Since any change in the relative performance of the two algorithms was reflected in the fitness score of the NK-landscape, the meta-search received immediate feedback, without needing to cross plateaus of unchanging fitness.

5. DISTRIBUTED ARCHITECTURE

Taking advantage of the parallelizable nature of evolutionary algorithms, this implementation used an asynchronous message queue (beanstalkd) and a web server (nginx) to distribute the workload across a small cluster of high-performance hardware. Each of the four machines in the cluster has a quad-core processor and two CUDA compute cards.
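The worker-side job flow of this architecture (a small queue message naming an algorithm and a landscape index, a local landscape cache, and a fetch from the head node on a cache miss) can be sketched without beanstalkd or nginx. In this hypothetical sketch an in-process `queue.Queue` stands in for the message queue, and the `fetch` and `run` callables stand in for the web request to the head node and for running one BBSA on one landscape; none of these names come from the original system.

```python
import queue
from typing import Any, Callable, Dict, Tuple

Job = Tuple[str, int]  # hypothetical message: (algorithm name, landscape index)


class Worker:
    """Pulls jobs, keeps a local landscape cache, fetches on a cache miss."""

    def __init__(self, fetch: Callable[[int], Any],
                 run: Callable[[str, Any], float]) -> None:
        self.fetch = fetch  # stand-in for the web request to the head node
        self.run = run      # stand-in for running one BBSA on one landscape
        self.cache: Dict[int, Any] = {}

    def landscape(self, index: int) -> Any:
        if index not in self.cache:
            self.cache[index] = self.fetch(index)  # download once, then reuse
        return self.cache[index]

    def drain(self, jobs: queue.Queue, results: queue.Queue) -> None:
        """Process jobs until the queue is empty, posting (job, result) pairs."""
        while True:
            try:
                job = jobs.get_nowait()
            except queue.Empty:
                return
            algorithm, index = job
            results.put((job, self.run(algorithm, self.landscape(index))))
            jobs.task_done()
```

Because a job message carries only a landscape index, it stays small regardless of how large the landscape grows, and each worker downloads a given landscape at most once.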
A worker node system was developed whereby the head node could place a job request for a particular search algorithm to be run against a particular NK-landscape. This job request was put into the message queue, to be delivered to the next available worker node. When a worker node received the request, it first checked whether it had a copy of the requested NK-landscape in a local cache. If not, the worker node used the index number of the NK-landscape to place a web request with the head node, downloading the data needed to recreate the NK-landscape and placing it in the local cache. The web-based distribution channel was necessary because the representation of an NK-landscape can grow very large, and the chosen message queue has strict message size limits.

For the results presented in this paper, the distributed system ran 16 worker nodes using the CPU cores, plus another 8 worker nodes running their fitness functions on the CUDA cards. Interestingly, the CUDA-based worker nodes did not outperform the CPU-based worker nodes. The CUDA-based fitness evaluation is very fast, but because the individuals and kernels must be moved across the PCI bus, the number of individuals awaiting evaluation must be high before the speed difference becomes apparent. The exception is the random search algorithm, which was rewritten for CUDA-based evaluation: since each of its evaluations is independent, all evaluations can happen in parallel.

4. RESULTS

Figures 1 through 4 show the results for evolutionary runs using the “One vs All” heuristic. In each figure, the bold red line indicates the fitness of the NK-Landscape in the population that best showcases the chosen BBSA. The bold black dashed line indicates the value of k for that best NK-Landscape. The thinner colored lines indicate the relative fitness of the best individual found by the other BBSAs.
The vertical axis on the left measures the fitness values of the colored lines, while the vertical axis on the right measures only the dashed black line. The horizontal axis shows how many fitness evaluations have elapsed. While each “One vs All” experiment was repeated 30 times, for clarity each of these figures shows the results of only a single, randomly chosen representative run. All runs for each experiment exhibited similar behavior, and error bars would unnecessarily clutter the graphs.

Figures 5 through 8 show the results for evolutionary runs using the “Pairwise” heuristic. In contrast to the previous figures, where each graph corresponds to a single evolutionary run, this set of figures combines three runs into each graph. Each graph shows three lines, and each line corresponds to a single randomly chosen representative run from the set of 30 runs performed for each ordered pair of BBSAs. Each line shows how the performance of the BBSA in the title of the graph compared to the performance of the BBSA corresponding to that line, on the NK-Landscape in the population that best discriminates between the two BBSAs. For clarity, the line showing the k value of the best NK-Landscape is omitted from these graphs.

5. DISCUSSION

In each experiment, the evolutionary process made forward progress; however, most experiments fell short of the goal of finding a highly discriminatory NK-Landscape. In the EA vs All experiment shown in Figure 1, a landscape with k=7 was found that improved the performance of all search algorithms in the set, but EA improved the most. Later in the run, a landscape was found that hurt the performance of all search algorithms in the set, but EA was hurt the least. No further gains were seen in the 1000 evaluations allocated.

The performance of Simulated Annealing is strongly dependent on its cooling schedule.
As no attempt was made to optimize the cooling schedule for any given NK-Landscape, SA underperformed all other search algorithms in the set. Nevertheless, Figure 2 shows that the evolutionary process found an NK-Landscape that hurt the performance of the other search algorithms more than it hurt the performance of SA, resulting in a net positive gain for SA. An NK-Landscape found later in the run, with a higher k, proved easier for all algorithms to solve. This shows that while an increase in k may mean an increase in difficulty, it may also mean a decrease in difficulty, across all search algorithms. Fitness never became positive, however, meaning that the system could not find an NK-Landscape on which SA beat all other search algorithms in the set.

Hill Climber performed unexpectedly well in this sequence of experiments, consistently beating all other search algorithms in the set. In the experiment shown in Figure 3, it is interesting to note that the performance of the non-competitive search algorithms changed during the run without altering the overall fitness, due to the “One versus All” heuristic. This shows that this heuristic is indeed vulnerable to the need to cross plateaus of unchanging fitness, blind to possible progress.

Random Search, as shown in Figure 4, was not expected to reach a fitness of 0. A fitness landscape on which random search beat all other search algorithms would be an interesting landscape indeed.

Figure 5 shows the performance of EA versus each of the other search algorithms. It is instructive to contrast this with Figure 1, where the performance of EA never surpassed that of HC. In Figure 5, we see EA rapidly passing HC and continuing to improve. Clearly the system is capable of evolving an NK-Landscape that favors EA over HC, so perhaps if the experiment in Figure 1 were repeated with vastly more evaluations allowed, the system would eventually wander across the plateau of unchanging fitness and find the solutions found in Figure 5.
Figure 6 shows that evolving an NK-Landscape on which Simulated Annealing with a linear cooling schedule is the preferred search algorithm may take quite some time. Figure 7 provides further evidence that Hill Climber performs very well on this sort of problem, and comparing Figure 7 to the other figures provides evidence that it is relatively easy to evolve NK-Landscapes on which Hill Climber outperforms other BBSAs.

The interesting part of Figure 8 is that Random Search should not consistently beat other search algorithms unless the other algorithms are revisiting points on the landscape that have already been tried, and doing so at a higher rate than random search would be expected to. This behavior is expected of SA, which may spend a great deal of time oscillating among already-explored states. It may also happen with EA, which may revisit a state via any number of mechanisms. As implemented, Hill Climber may also revisit states when wandering across a fitness plateau. The results in Figure 8 indicate that this is a greater weakness in SA and EA than it is in HC.

6. CONCLUSIONS

This work definitively shows that discriminatory benchmark problems can be evolved using fitness functions described in the language of NK-Landscapes. However, the discriminatory power between some BBSAs is low. A more expressive description language may be needed to separate the better BBSA, or perhaps the key is simply to allow a much longer runtime. The distributed architecture developed for this research allows for efficient parallelization of fitness evaluations in a heterogeneous environment. The size of the questions we can ask depends on the amount of computational power we can efficiently harness, and a framework that scales horizontally across commodity hardware allows us to ask bigger questions.

7. ACKNOWLEDGMENTS

The author would like to acknowledge the support of the Intelligent Systems Center for the research presented in this paper.
Furthermore, the author would like to thank Brian Goldman, a prolific idea factory who epitomizes the concept that if you generate a hundred ideas per day, then even if 99.9% of them are terrible, you’re still ahead.

9. REFERENCES

[1] Wolpert, D. H., and Macready, W. G., 1997, “No Free Lunch Theorems for Optimization,” IEEE Transactions on Evolutionary Computation, Vol. 1(1), pp. 67-82.
[2] Kirkpatrick, S., Gelatt, C. D., and Vecchi, M. P., 1983, “Optimization by Simulated Annealing,” Science, Vol. 220, pp. 671-680.
[3] Eiben, A. E., and Smith, J. E., 2007, “Introduction to Evolutionary Computing,” Springer.
[4] Kauffman, S., and Weinberger, E., 1989, “The NK Model of Rugged Fitness Landscapes and Its Application to Maturation of the Immune Response,” Journal of Theoretical Biology, Vol. 141(2), pp. 211-245.
[5] Nickolls, J., et al., 2008, “Scalable Parallel Programming with CUDA,” Queue, Vol. 6(2), pp. 40-53.

Figure 1 – EA performance vs all other algorithms at once (panel: Evolutionary Algorithm vs All; left axis: Fitness; right axis: k (landscape overlap); horizontal axis: Evaluations; series: fitness, EA, SA, HC, RA, k)
Figure 2 – Simulated Annealing vs all other algorithms at once (panel: Simulated Annealing vs All; same axes and series as Figure 1)
Figure 3 – Hill Climber vs all other algorithms at once (panel: Hill Climb vs All; same axes as Figure 1)
Figure 4 – Random Search vs all other algorithms at once (panel: Random Search vs All; same axes as Figure 1)
Figure 5 – Evolutionary Algorithm vs each other algorithm, pairwise (panel: Evolutionary Algorithm vs Each Pairwise; axes: Fitness vs Evaluations; series: vs HC, vs RA, vs SA)
Figure 6 – Simulated Annealing vs each other algorithm, pairwise (panel: Simulated Annealing vs Each Pairwise; axes: Fitness vs Evaluations; series: vs HC, vs RA, vs EA)
Figure 7 – Hill Climber vs each other algorithm, pairwise (panel: Hill Climb vs Each Pairwise; axes: Fitness vs Evaluations; series: vs SA, vs RA, vs EA)
Figure 8 – Random Search vs each other algorithm, pairwise (panel: Random Search vs Each Pairwise; axes: Fitness vs Evaluations; series: vs SA, vs HC, vs EA)