Old LibSVM support vector machine testing


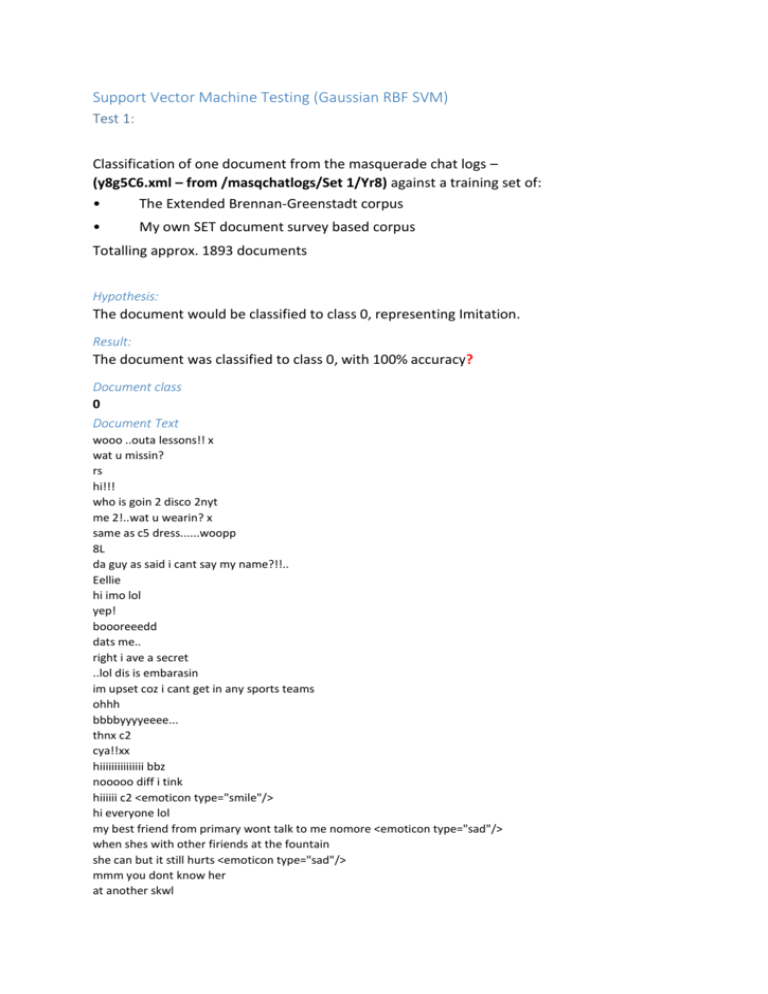

Support Vector Machine Testing (Gaussian RBF SVM)

Test 1:

Classification of one document from the masquerade chat logs (y8g5C6.xml, from /masqchatlogs/Set 1/Yr8) against a training set of:

• The Extended Brennan-Greenstadt corpus

• My own SET document survey based corpus

Totalling approx. 1893 documents

Hypothesis:

The document would be classified as class 0, representing Imitation.

Result:

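The document was classified as class 0. The reported accuracy of 100% covers only this single test document (1/1), so it says little about the classifier in general.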

Document class

0

Document Text

wooo ..outa lessons!! x

wat u missin?

rs

hi!!!

who is goin 2 disco 2nyt

me 2!..wat u wearin? x

same as c5 dress......woopp

8L

da guy as said i cant say my name?!!..

Eellie

hi imo lol

yep!

boooreeedd

dats me..

right i ave a secret

..lol dis is embarasin

im upset coz i cant get in any sports teams

ohhh

bbbbyyyyeeee...

thnx c2

cya!!xx

hiiiiiiiiiiiiiii bbz

nooooo diff i tink

hiiiiii c2 <emoticon type="smile"/>

hi everyone lol

my best friend from primary wont talk to me nomore <emoticon type="sad"/>

when shes with other firiends at the fountain

she can but it still hurts <emoticon type="sad"/>

mmm you dont know her

at another skwl

yeah i have friends!!!!!!

hmmm we'll c <emoticon type="smile"/>

yeaah mite tell her i'm not bothered

naaaaah its coooool7

wuu2 dis weekend?

yeaaaah will do

mmm me too! gt 2 do hmwrk + then cinemaaa woooooo

ny1 goin 2 da disco??!!!

wooooooooo DISCOOOOOOOOO

DISCODISCODISCO

just a dress <emoticon type="smile"/>

yeaaaah THANKS GUYS!!!

dis is boriiiiiiiiiinnnnnn, who r u?

xxx

i'm barney the dinosauuuuurrrr

no rli, who r u?

anna

xxx

yooooooooooooo guys

me tooooooooo

want SOME PIZZZAAAAAA

LOL cafe regent

mmmm love bruno marssssss!!

grenade is sooooo goooooood <emoticon type="smile"/> xx

he's sooooo fitttt!!

yeaaaaahh on waterloo rd he's sooooo goood!

xxx

byeeee c1 xxxxx

think i mite go 22222 byeeeee guys! xxxxxxxxx

Output:

Expected Class(es): 0 Vector(s): {0: 0.8333333333333334, 1: 0.3448275862068965, 2: 1353, 3: 7, 4: 19, 5: 169}

Accuracy = 100% (1/1) (classification)

{'classes': [0.0], 'values': [[0.5357544116729798, 0.46424558832702023]], 'accuracy': (100.0, 0.0, nan)}
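The three numbers under 'accuracy' appear to follow the (accuracy, mean squared error, squared correlation coefficient) layout reported by LibSVM's Python interface. The sketch below is a plain-Python approximation of that calculation, not LibSVM's own code, and the function name `evaluate` is an assumption made for illustration. It shows why a single test document gives 100% accuracy, zero error, and an undefined (nan) correlation.

```python
import math


def evaluate(true_labels, predicted_labels):
    """Return (accuracy in %, mean squared error, squared correlation coefficient).

    Illustrative re-implementation; `evaluate` is a hypothetical helper name.
    """
    n = len(true_labels)
    if n == 0 or n != len(predicted_labels):
        raise ValueError("label lists must be non-empty and of equal length")

    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    mse = sum((p - t) ** 2 for t, p in zip(true_labels, predicted_labels)) / n

    sum_t = sum(true_labels)
    sum_p = sum(predicted_labels)
    sum_tt = sum(t * t for t in true_labels)
    sum_pp = sum(p * p for p in predicted_labels)
    sum_tp = sum(t * p for t, p in zip(true_labels, predicted_labels))

    numerator = (n * sum_tp - sum_t * sum_p) ** 2
    denominator = (n * sum_tt - sum_t ** 2) * (n * sum_pp - sum_p ** 2)
    # With a single sample (or constant labels) the variance is zero,
    # so the correlation is undefined and reported as nan.
    scc = numerator / denominator if denominator != 0 else float("nan")

    return (100.0 * correct / n, mse, scc)


# One document, expected class 0, predicted class 0.
accuracy, mse, scc = evaluate([0.0], [0.0])
print(accuracy, mse, math.isnan(scc))
```

With only one document the accuracy can only be 0% or 100%, which is why the cross-validation tests that follow are the more informative measure.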

Test 2:

Result of a k=5 cross-validation of the following training set:

• The Extended Brennan-Greenstadt corpus

  o Imitation – Class 0

• My own SET document survey based corpus

  o Imitation – Class 0

  o Obfuscation – Class 1

Totalling approx. 1893 documents

Chosen features

Result:

optimization finished, #iter = 1484

nu = 0.853463

obj = -951.705431, rho = -0.225807

nSV = 1366, nBSV = 948

Total nSV = 1366

.*

optimization finished, #iter = 1524

nu = 0.846096

obj = -939.677400, rho = -0.208196

nSV = 1364, nBSV = 931

Total nSV = 1364

.*

optimization finished, #iter = 1484

nu = 0.831397

obj = -925.413151, rho = -0.208835

nSV = 1330, nBSV = 920

Total nSV = 1330

.*

optimization finished, #iter = 1471

nu = 0.844640

obj = -938.174740, rho = -0.217151

nSV = 1356, nBSV = 941

Total nSV = 1356

.*

optimization finished, #iter = 1561

nu = 0.842478

obj = -940.209623, rho = -0.221545

nSV = 1368, nBSV = 938

Total nSV = 1368

Cross Validation Accuracy = 51.2031%

Conclusion

Since the cross-validation accuracy was only slightly above random chance, my model wasn’t worth using at this stage. This was probably due to the set of features I had used to distinguish between the two classes of text, obfuscation and imitation. So, I decided to increase the number of features in each document’s feature set, in order to specialise my model towards detecting obfuscation and imitation, as it was currently too general.
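For reference, k-fold cross-validation accuracy can be sketched in plain Python as below. This is a minimal illustration and not the LibSVM routine used for the results above. The `train` and `predict` callables, and the majority-class baseline passed in as an example, are hypothetical stand-ins. A baseline of this kind gives a concrete figure to compare a result such as 51.2031% against.

```python
import random
from collections import Counter


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the sample indices and deal them into k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validation_accuracy(features, labels, k, train, predict, seed=0):
    """Train on k-1 folds, test on the held-out fold, pool the correct counts."""
    correct = 0
    for held_out in k_fold_indices(len(labels), k, seed):
        held = set(held_out)
        train_idx = [i for i in range(len(labels)) if i not in held]
        model = train([features[i] for i in train_idx],
                      [labels[i] for i in train_idx])
        correct += sum(1 for i in held_out
                       if predict(model, features[i]) == labels[i])
    return 100.0 * correct / len(labels)


# Hypothetical baseline: always predict the most common training label.
def train_majority(train_features, train_labels):
    return Counter(train_labels).most_common(1)[0][0]


def predict_majority(model, feature_vector):
    return model


# Toy data for illustration only: 8 documents of class 0, 2 of class 1.
toy_labels = [0] * 8 + [1] * 2
toy_features = [[float(i)] for i in range(len(toy_labels))]
print(cross_validation_accuracy(toy_features, toy_labels, 5,
                                train_majority, predict_majority))
```

If the two classes are roughly balanced, a majority-class baseline scores close to 50%, so an accuracy near that level suggests the features carry little signal.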

Test 3:

Result of a k=5 cross-validation of the following training set:

• The Extended Brennan-Greenstadt corpus

  o Imitation – Class 0

• My own SET document survey based corpus

  o Imitation – Class 0

  o Obfuscation – Class 1

Totalling approx. 1893 documents

Chosen features

Result

*

optimization finished, #iter = 955

nu = 0.925874

obj = -1323.932837, rho = -0.937927

nSV = 1342, nBSV = 1305

Total nSV = 1342

*

optimization finished, #iter = 984

nu = 0.925874

obj = -1323.856633, rho = -0.925445

nSV = 1338, nBSV = 1311

Total nSV = 1338

*

optimization finished, #iter = 959

nu = 0.925122

obj = -1321.810979, rho = -0.885390

nSV = 1336, nBSV = 1303

Total nSV = 1336

*

optimization finished, #iter = 996

nu = 0.925874

obj = -1323.834650, rho = -0.862457

nSV = 1336, nBSV = 1310

Total nSV = 1336

*

optimization finished, #iter = 953

nu = 0.925122

obj = -1321.794238, rho = -0.848871

nSV = 1332, nBSV = 1309

Total nSV = 1332

Cross Validation Accuracy = 53.7213%
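The conclusion that follows attributes the change from Test 2 to feature normalisation. The exact scaling method is not stated, so the sketch below assumes simple per-feature min-max scaling to the range [0, 1]. The function names and the example rows are hypothetical. Scaling matters for an RBF kernel because a raw count in the thousands would otherwise dominate features that are proportions between 0 and 1.

```python
def fit_min_max(rows):
    """Record the (min, max) of every feature column in the training rows."""
    return [(min(column), max(column)) for column in zip(*rows)]


def apply_min_max(rows, ranges, lower=0.0, upper=1.0):
    """Rescale each feature linearly so its training range maps to [lower, upper]."""
    scaled = []
    for row in rows:
        new_row = []
        for value, (lo, hi) in zip(row, ranges):
            if hi == lo:
                # Constant feature: no spread to scale, so pin it to `lower`.
                new_row.append(lower)
            else:
                new_row.append(lower + (upper - lower) * (value - lo) / (hi - lo))
        scaled.append(new_row)
    return scaled


# Made-up rows: one proportion-style feature and one raw count.
training_rows = [[0.5, 1353.0], [1.0, 353.0], [0.0, 853.0]]
ranges = fit_min_max(training_rows)
print(apply_min_max(training_rows, ranges))
```

The ranges should be fitted on the training data only and then reused unchanged for any test document, so that both are scaled in the same way.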

Conclusion

After normalizing my features, the cross-validation accuracy of my classifier improved by 2.5182 percentage points (from 51.2031% to 53.7213%). This was a good result, but the accuracy needed to be much higher before the classifier would be worth using. After this, I decided to include more data in my classifier’s model:

• Masquerade chat logs (Internet messaging)

  o Class 0

And a couple of features to get information out of that data:

• Proportion of incorrectly spelt words

• Punctuation level/count
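As a rough illustration of the punctuation feature, the sketch below counts ASCII punctuation characters and also expresses the count as a proportion of the text length. How the original feature was actually computed is not stated, so both function names and the choice of `string.punctuation` as the character set are assumptions.

```python
import string


def punctuation_count(text):
    """Number of ASCII punctuation characters in the text."""
    return sum(1 for ch in text if ch in string.punctuation)


def punctuation_proportion(text):
    """Punctuation characters as a fraction of all characters (0.0 for empty text)."""
    return punctuation_count(text) / len(text) if text else 0.0


# A line taken from the chat log document in Test 1.
line = "wooo ..outa lessons!! x"
print(punctuation_count(line), punctuation_proportion(line))
```

A proportion is less sensitive to document length than a raw count, which may matter when short chat messages are compared with longer emails.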

Implementation of ‘Proportion of incorrectly spelt words’:

This required a way to detect spelling errors, and therefore I needed some kind of dictionary corpus to compare words against. This is when I found the ‘big.txt’ corpus file, put together by Peter Norvig. It contains text from Project Gutenberg’s public domain books, and lists of frequent words from Wiktionary and the British National Corpus [1].

[1] Source: http://norvig.com/spell-correct.html
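A minimal sketch of the ‘Proportion of incorrectly spelt words’ feature is given below, assuming that any word absent from the reference corpus counts as incorrectly spelt. The function names and the tokenising rule (runs of lowercase letters) are assumptions for illustration, and a short inline string stands in for the contents of big.txt.

```python
import re


def words(text):
    """Lowercase the text and split it into runs of letters."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(corpus_text):
    """Set of every distinct word seen in the reference corpus."""
    return set(words(corpus_text))


def misspelt_proportion(document_text, vocabulary):
    """Fraction of the document's words that are not in the vocabulary."""
    tokens = words(document_text)
    if not tokens:
        return 0.0
    unknown = sum(1 for token in tokens if token not in vocabulary)
    return unknown / len(tokens)


# Stand-in for the corpus; in practice the text would be read from big.txt,
# e.g. with open("big.txt", encoding="utf-8") (the path is hypothetical).
vocabulary = build_vocabulary("who is going to the disco tonight")
print(misspelt_proportion("who is goin 2 disco 2nyt", vocabulary))
```

Because the tokeniser keeps only letters, "2nyt" is reduced to "nyt" and the lone "2" is dropped. Chat abbreviations that mix digits and letters would need a different tokenising rule if they are to be counted as whole words.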

On its own, this corpus seemed to be a great choice for detecting spelling errors. However, I had noticed that the SET emails I had previously tested with contained slang and grammar mistakes, which wouldn’t be picked up by the corpus. So, an extra corpus would be required:

[EXTRA: Birkbeck spelling error corpus, by Roger Mitton.

It contains misspellings taken from children’s tests, short novels, and excerpts of free writing by adult-literacy students. It spans the period from the early 20th century to the 1980s. Source: http://www.ota.ox.ac.uk/headers/0643.xml]