How to effectively store the history of data in a relational - HSR-Wiki

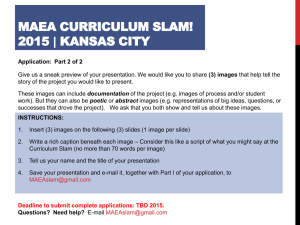

advertisement

How to effectively store the history of data in a relational DBMS

Raphael Gfeller

Software and Systems

University of Applied Sciences Rapperswil, Switzerland

www.hsr.ch/mse

Change history

| Date | Version | Comment |
|---|---|---|
| 11.11.2008 | Draft 1 | Before submission of the draft article to the supervisor (“Abgabe Entwurf Fachartikel an Betreuer”) |
| 02.12.2008 | Draft 2 | Time, SCD, and SQL examples added; changed to a compressed writing style |
| 18.12.2008 | 1.0 | Presentation feedback included |

1. Abstract

Commonly used relational DBMS data models for storing historical data are analyzed theoretically and tested with a benchmark in a real environment under the following criteria: a. execution time of adding and mutating entries, b. searching entries in the past, c. storage cost of adding historical information, d. network bandwidth used to retrieve the information.

2. Table of Contents

1. Abstract
2. Table of Contents
3. Introduction
   3.1. Excurse Time on a relational DBMS system
4. Materials and Methods
   4.1. DBMS data models
        Overview
        Duplication
        Linked history items
        Bidirectional Linked history items
        Transaction
   4.2. Test environment and benchmark criteria
        Limitation of benchmarks
5. Results
   5.1. Duplication
   5.2. Linked history items
   5.3. Bidirectional Linked history items
   5.4. Transaction
6. Discussions
7. Literature Cited and References

3. Introduction

Efficient access to the exact history of data is a common need. Many areas of business have to handle the history of data efficiently.

Examples of the use of historical data are: 1. A bank has to know what the exact balance of a customer was at any point in time. 2. The “Time Machine” (1) function in the Mac OS X operating system, which can go back in time to locate older versions of files. 3. A version control system such as Subversion (2) or CVS (3), which manages multiple revisions of the same unit of information. 4. Standard software that is interested in changes to its underlying data. 5. The storage of legislation (4 S. 1). 6. Office automation (4 S. 1). A number of articles have been written that show the need for historical data; they are referenced in (4 S. 1). 7. Regulatory and compliance requirements, for example regulations such as SOX, HIPAA and BASEL-II (5).

Storing historical data always costs disk space and CPU performance, both for storing and for restoring it. Unfortunately, there is a trade-off between CPU performance and disk space: the more CPU performance is spent, the less disk space is needed, and vice versa.

The requirements for historical data depend on: 1. How often the historical data is accessed. 2. How often historical data is generated. 3. Whether metadata has to be accessible for historical entries, for example the date of a historical entry, the period during which a historical entry was active, or the entire state of all entries at a defined point in time. 4. Whether the entries have to be restorable in a deterministic order.

Operational transaction systems, known as OLTP (Online Transaction Processing) systems (6 S. 27) (7), perform day-to-day transaction processing. For OLTP systems, common patterns for dealing with historical data do not exist so far. In contrast, data warehouses, known as OLAP (Online Analytical Processing) systems (6 S. 27-32), are designed to facilitate reporting and analysis (8). For them, management methodologies for storing historical data exist. They are called slowly changing dimensions, SCD (9), where a dimension is a data warehousing term that refers to a logical grouping of data. Dimensions can also contain hierarchies; for example, a date dimension contains several possible hierarchies: "Day > Month > Year", "Day > Week > Year", "Day > Month > Quarter > Year", etc. Slowly changing dimensions are dimensions whose data changes slowly, for example a dimension in the database that tracks the changes of the salaries of your employees. There are six different SCD methods, called type 0, 1, 2, 3, 4, and 6, of which types 1, 2, and 3 are the most common.

With SCD 0, an attribute of a dimension is fixed and cannot be changed. This type is used very infrequently. SCD 1 overwrites the old data with the new data; no historical information is available. SCD 2 tracks historical data by creating multiple records with a separate key. Unlimited history entries are available. For example, {Name, Salary, Company, From, To} = {c(“Gfeller Raphael”, 2000, “IMT AG”, 2007, 2008), c(“Gfeller Raphael”, 0, “HSR”, 2008, NULL)}. SCD 3 has limited historical preservation: additional columns in the table track changes. For example, {Name, Salary, OldCompany, CurrentCompany} = {c(“Gfeller Raphael”, “IMT AG”, “HSR”)}. SCD 4 creates separate history tables that store the historical data. Unlimited history entries are available. For example, table 1: “Person” {Name, Salary, Company} = {c(“Gfeller Raphael”, “HSR”)}, table 2: “PersonChange” {Name, Salary, Company, Date} = {c(“Gfeller Raphael”, “IMT AG”, 2006)}. SCD 6 is a hybrid approach that combines SCD 1, 2 and 3 (1 + 2 + 3 = 6). It has been described by Tom Haughey (10). It is not frequently used yet, because it has the potential to complicate end-user access.
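The SCD 2 example above can be sketched with Python's built-in sqlite3 module. This is only an illustration under assumptions: SQLite stands in for a data warehouse, and the table name PersonDim, the surrogate key RowKey, the column names ValidFrom/ValidTo (used instead of From/To, which are SQL keywords) and the helper scd2_change are hypothetical names that do not come from the paper.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE PersonDim (
        RowKey    INTEGER PRIMARY KEY AUTOINCREMENT,  -- separate key per version
        Name      TEXT    NOT NULL,
        Salary    INTEGER NOT NULL,
        Company   TEXT    NOT NULL,
        ValidFrom INTEGER NOT NULL,
        ValidTo   INTEGER                             -- NULL marks the current version
    )
""")

def scd2_change(conn, name, salary, company, year):
    """Close the current version of `name` (if any) and append a new version."""
    conn.execute(
        "UPDATE PersonDim SET ValidTo = ? WHERE Name = ? AND ValidTo IS NULL",
        (year, name),
    )
    conn.execute(
        "INSERT INTO PersonDim (Name, Salary, Company, ValidFrom, ValidTo) "
        "VALUES (?, ?, ?, ?, NULL)",
        (name, salary, company, year),
    )

# Reproduce the two versions from the SCD 2 example in the text.
scd2_change(conn, "Gfeller Raphael", 2000, "IMT AG", 2007)
scd2_change(conn, "Gfeller Raphael", 0, "HSR", 2008)

history = conn.execute(
    "SELECT Name, Salary, Company, ValidFrom, ValidTo FROM PersonDim ORDER BY RowKey"
).fetchall()
```

Every change adds a row, so the history is unlimited, and the row with an empty ValidTo is the current version.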

As mentioned above, there are no common patterns for storing historical data within an OLTP system. Therefore, this work analyzes how historical data can be stored and restored in the most efficient way, with a focus on OLTP systems.

Unfortunately, the SCD data models used in data warehouses cannot be adapted one-to-one to OLTP systems, because the two are based on different principles. OLTP systems are optimized for the preservation of data integrity and the speed of recording business transactions, through the use of database normalization and an entity-relationship model. OLTP designers follow the Codd rules of data normalization (defined by Edgar F. Codd) in order to ensure data integrity. Data normalization (11) is a technique for designing relational database tables to minimize duplication of information, which helps to avoid certain types of logical or structural problems, namely data anomalies. Data warehouses are optimized for speed of data retrieval. Their designers do not follow the Codd rules of data normalization; instead, normalized tables are grouped together by subject areas that reflect general data categories. This is often called flattened data (12). An example is one table that combines customer, product and sales information.

This work presents and analyzes several common methods for OLTP systems that are based on SCD 2, which means that an unlimited history of stored entries is available. The other slowly changing dimension types are less important for OLTP systems.

3.1. Excurse Time on a relational DBMS system

Before dealing with the topic of storing the history of entries, an overview of time in general, focused on relational DBMS, is given.

The unit of time, the second, is defined as “the duration of 9 192 631 770 periods of the radiation corresponding to the transition between the two hyperfine levels of the ground state of the caesium 133 atom.” (13).

Within a relational DBMS (14), a date is represented by an offset, with a defined accuracy, from a reference point; a date interval is represented by a value with a defined accuracy; a duration is represented by a composite value of two dates, or of a date and an interval. For example, a value stored as smalldatetime (SQL2 (15)) is stored as an offset in minutes from 1900-01-01 in the Gregorian calendar (which is the de facto international standard and is used in ISO 8601). The following date and time data types are available on Microsoft SQL Server (16):

- time: format hh:mm:ss[.nnnnnnn], accuracy 100 ns, storage 3-5 bytes
- date: format YYYY-MM-DD, accuracy 1 day, storage 3 bytes
- smalldatetime: format YYYY-MM-DD hh:mm:ss, range 1900-01-01 through 2079-06-06, accuracy 1 minute, storage 4 bytes
- datetime: format YYYY-MM-DD hh:mm:ss[.nnn], range 1753-01-01 through 9999-12-31, accuracy 0.00333 second, storage 8 bytes
- datetime2: format YYYY-MM-DD hh:mm:ss[.nnnnnnn], range 0001-01-01 through 9999-12-31, accuracy 100 ns, storage 6-8 bytes
- datetimeoffset: format YYYY-MM-DD hh:mm:ss[.nnnnnnn] [+|-]hh:mm, range 0001-01-01 00:00:00 through 9999-12-31 23:59:59 (in UTC), accuracy 100 ns, storage 8-10 bytes

Remarks: datetime2, datetimeoffset and date were introduced in Microsoft SQL Server 2008; compare (16) and (17). The reference point mentioned above depends on the calendar that is used internally. For example, Microsoft SQL Server 2008 is based on the Gregorian calendar. If data has to be transferred between systems that are based on different calendars or on different offsets, it has to be converted. For example, if server A is in time zone GMT+1 and server B is in time zone GMT-7, transferring data between these two servers includes a conversion (18).

Operations that deal with dates are: is a duration d1 totally included in another duration d2; is the intersection of d1 and d2 empty or not; is a date point p1 before or after a date point p2; is p1 included in d1; what is the difference between p1 and p2; is a point p3 between p1 and p2. Other interesting operations are manipulating datetime points and extracting information about a point p1. For example, week(p1, nFirstWeek, nFirstDayOfWeek) finds the week number of the given datetime point, where the second and third parameters define how the week is determined (18).
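The date operations listed above can be illustrated with Python's standard datetime module. This is a minimal sketch, not the SQL functions referenced in (18): the Duration type and the helper names are hypothetical, durations are assumed to be closed intervals, and the ISO 8601 week number is used in place of the parameterized week() function.

```python
from datetime import datetime, timedelta
from typing import NamedTuple

class Duration(NamedTuple):
    """A duration as a composite value of two date points (closed interval)."""
    start: datetime
    end: datetime

def is_included(d1: Duration, d2: Duration) -> bool:
    """True if d1 is totally included in d2."""
    return d2.start <= d1.start and d1.end <= d2.end

def intersects(d1: Duration, d2: Duration) -> bool:
    """True if the intersection of d1 and d2 is not empty."""
    return d1.start <= d2.end and d2.start <= d1.end

def contains(d: Duration, p: datetime) -> bool:
    """True if the point p is included in d."""
    return d.start <= p <= d.end

def is_between(p3: datetime, p1: datetime, p2: datetime) -> bool:
    """True if p3 lies between p1 and p2, in either order."""
    return min(p1, p2) <= p3 <= max(p1, p2)

march = Duration(datetime(2008, 3, 1), datetime(2008, 3, 31))
year_2008 = Duration(datetime(2008, 1, 1), datetime(2008, 12, 31))
year_2009 = Duration(datetime(2009, 1, 1), datetime(2009, 12, 31))

p1 = datetime(2008, 12, 18)
p2 = datetime(2008, 11, 11)

difference = p1 - p2               # a timedelta
iso_week = p1.isocalendar()[1]     # ISO 8601 week number of p1
```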


Problems in dealing with dates are, based on (19): handling different time zones; handling different calendars; time that is not synchronized between clients and servers, which makes replication difficult; and the switch between winter and summer time, which can, for example, cause log entries to overlap. Other problems are: 1. The date type used is not precise enough to guarantee the order of the stored entries. For example, an application is able to generate and store more than one entry per 0.00333 seconds and the standard datetime type (as mentioned above) is used. As a result, ordering the entries by the datetime column is not deterministic. This can be avoided, for example, by a. using a more precise date type or b. adding an additional numeric column that is incremented by the relational DBMS (called an identity specification on a numeric column in Microsoft SQL Server). 2. The application lifetime is longer than the date type used can handle. For example: a. an application that is still used after 2080 and stores its entries as smalldatetime, b. the “year 2000 problem”, which was based on this issue. 3. The very high resolution of a date type such as datetime2 is not useful if the clock at the place where the entry is created has a lower resolution. This results in a false sense of security and precision. 4. The clock at the place where the entry is created is adjusted; this results in gaps or in overlapping entries. The time can be adjusted, for example: a. manually, b. automatically by a network time synchronization protocol such as NTP (20) or SNTP (21), c. automatically by the operating system, which synchronizes the operating system time with the BIOS time (22 S. 2).
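Workaround b. for problem 1 can be sketched as follows. The example uses SQLite through Python's sqlite3 module as a stand-in for Microsoft SQL Server, so AUTOINCREMENT plays the role of the identity specification; the table LogEntry and its columns are hypothetical names chosen for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE LogEntry (
        Idx       INTEGER PRIMARY KEY AUTOINCREMENT,  -- incremented by the DBMS
        CreatedAt TEXT NOT NULL,                      -- coarse timestamp
        Message   TEXT NOT NULL
    )
""")

# Three entries arrive faster than the timestamp resolution can distinguish.
coarse_timestamp = "2008-12-18 10:15:00"
for message in ("first", "second", "third"):
    conn.execute(
        "INSERT INTO LogEntry (CreatedAt, Message) VALUES (?, ?)",
        (coarse_timestamp, message),
    )

# Ordering by CreatedAt alone leaves the order of the ties undefined;
# the additional numeric column makes the order deterministic.
ordered = [
    row[0]
    for row in conn.execute("SELECT Message FROM LogEntry ORDER BY CreatedAt, Idx")
]
```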

4. Materials and Methods

To analyze CPU performance and disk storage, let us define the following database schema. This schema does not contain any history information.

The database schema is then extended with historical information. For each method to be analyzed, the following statistics are collected:

- Cost of inserting 500 companies [in operations and time [ms]]
- Cost of inserting 500 persons [in operations and time [ms]]
- Storage cost of mutating 500 persons [for easier comparability, insert statements are counted]
- Cost of changing a person 500 times [in operations and time [ms]]
- Cost of changing a company 500 times [in operations and time [ms]]
- Average restore costs [in operations and time [ms]] of:
  o a person that changed recently (Get Person at T – 1) and the n-th changed person (Get Person at T – n)
  o a person at time x
  o persons by a company in the past
  o the next person by a person in the past
  o retrieving all persons and companies that are active at time x

The cost in operations is defined by counting the insert and select statements.

The following methods of adding historical information to the given data are analyzed: a. Duplication, b. Linked history items, c. Bidirectional linked history items, d. Transaction.

These methods are commonly used in practice. They were selected based on my personal experience during my studies at the HSR in Rapperswil, four years of work in industry, and the opinions of my fellow students.

In the next part of this section, they are described in detail.

4.1. DBMS data models

Overview

This table gives an overview of the common relational DBMS data models for storing historical data. It focuses on the general execution time of the operations discussed above. The DBMS data models are described in detail below.

| Method | Duplication | Linked history items | Bidirectional Linked history items | Transaction |
|---|---|---|---|---|
| Insert an entry | -- | ++ | ++ | ++ |
| Updating an entry | -- | ++ | ++ | ++ |
| Storage cost | -- | ++ | ++ | ++ |
| Get an entry at (Time – 1) | ++ | ++ | ++ | ++ |
| Get an entry at (Time – n) | ++ | - | - | + |
| Entry at time x | ++ | Not available | - | + |
| Get an integrity state over all entries | ++ | Not available | -- | - |
| Get the next entry by an entry at the past | Not exactly defined | + | + | ++ |
| Get the previous entry by an entry at the past | ++ | + | ++ | + |
| A person by a company at the past | ++ | Not exactly defined | Not exactly defined | - |

++ : O(1), + : O(n changes on a table), - : O(n) or O(n changes on the whole database), -- : O(N)

n represents the active rows of a table, N represents the total active rows

Duplication

Realizing: For each change, the entire data set is stored again as a new global change set.

Focused on: 1. fast access to historical information, 2. an easy implementation for the developer, and 3. integrity over all entries in time.

Insert or update a person

Insert: 1 * Insert [Changes], nPerson * Insert, nCompany * Insert
Insert into Changes; for each person: Insert into Person (newValues, newChangeID) [also for each company]

Update: 1 * Insert [Changes], nPerson * Insert, nCompany * Insert
Insert into Changes; for each person: Insert into Person (newValues, newChangeID) [also for each company]

Disk costs: Size(Person) * nPerson + Size(Company) * nCompany + Size(Changes)

Restore

Get an entry at (T – 1): 2 * Select
Select top 1 ID_Change from Changes order by [Index] desc, Select * from Person where ID_Person=@ID and FK_ID_Change=@ID_Change

Get an entry at (T – n): 2 * Select
Select top n ID_Change from Changes order by [Index] desc, Select * from Person where ID_Person=@ID and FK_ID_Change=@ID_Change

A person at time x: 2 * Select
Select top 1 ID_Change from Changes where [DateTime]=@DateTime order by [Index], Select * from Person where ID_Person=@ID and FK_ID_Change=@ID_Change

A person by a company at the past: 1 * Select
Select * from Person where FK_ID_Company=@ID and FK_ID_Change=@ID_Change

Next person by a person at the past: 2 * Select
Select top 1 ID_Change from Changes where [Index] > @Index order by [Index], Select * from Person where ID_Person=@ID and FK_ID_Change=@ID_Change

Get the previous entry by an entry at the past: 2 * Select
Select top 1 ID_Change from Changes where [Index] < @Index order by [Index] desc, Select * from Person where ID_Person=@ID and FK_ID_Change=@ID_Change

Get an integrity state over all entries: 1 * Select + nTables * Select
Select top 1 ID_Change from Changes order by [Index] desc, Select * from Person where FK_ID_Change=@ID_Change, Select * from Company where FK_ID_Change=@ID_Change

Remarks

The column Index [an automatically incremented identity] was added to Changes because the resolution of the datetime type is too small and does not allow an unambiguous ordering.
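The Duplication method described above can be sketched with Python's sqlite3 module. The sketch rests on several assumptions: SQLite replaces SQL Server, only the Person table is shown (Company would be copied in the same way), ID_Change doubles as the ordering column instead of a separate Index column, and the helper names save_change_set and person_back are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Changes (
        ID_Change INTEGER PRIMARY KEY AUTOINCREMENT,  -- also defines the order
        ChangedAt TEXT NOT NULL
    );
    CREATE TABLE Person (
        ID_Person    INTEGER NOT NULL,
        Name         TEXT    NOT NULL,
        Salary       INTEGER NOT NULL,
        FK_ID_Change INTEGER NOT NULL REFERENCES Changes (ID_Change),
        PRIMARY KEY (ID_Person, FK_ID_Change)
    );
""")

def save_change_set(conn, persons, changed_at):
    """Store ALL persons again under a new global change set."""
    change_id = conn.execute(
        "INSERT INTO Changes (ChangedAt) VALUES (?)", (changed_at,)
    ).lastrowid
    conn.executemany(
        "INSERT INTO Person (ID_Person, Name, Salary, FK_ID_Change) VALUES (?, ?, ?, ?)",
        [(pid, name, salary, change_id) for pid, (name, salary) in persons.items()],
    )
    return change_id

def person_back(conn, person_id, n):
    """Return (Name, Salary) of a person n change sets before the newest one."""
    change = conn.execute(
        "SELECT ID_Change FROM Changes ORDER BY ID_Change DESC LIMIT 1 OFFSET ?", (n,)
    ).fetchone()
    if change is None:
        return None
    return conn.execute(
        "SELECT Name, Salary FROM Person WHERE ID_Person = ? AND FK_ID_Change = ?",
        (person_id, change[0]),
    ).fetchone()

persons = {1: ("Müller", 1000), 2: ("Meier", 2000)}
save_change_set(conn, persons, "2008-11-11")
persons[1] = ("Hans", 1000)            # one person changes ...
save_change_set(conn, persons, "2008-12-02")  # ... but every person is stored again

stored_rows = conn.execute("SELECT COUNT(*) FROM Person").fetchone()[0]
```

The restore path always needs exactly two selects, whatever n is, while every change writes one row per stored person. That matches the "++" for reading and the "--" for inserting, updating and storage in the overview table.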


Linked history items

Realizing: This method realizes a log file per table.

Focused on: 1. avoiding huge changes to the underlying database, 2. an easy implementation for the developer, and 3. fast insertion of new entries.

Insert or update a person

Insert: 1 * Insert
Insert into Person Values ()

Update: 1 * Insert + 1 * Update
Insert into Person Values (OldValues), Update Person Set FK_Old_ID_Person=@newId where ID=@Id

Disk costs: Size(Person)

Restore

Get an entry at (T – 1): 1 Select
Select * from Person where ID_Person=@FK_Old_ID_Person

Get an entry at (T – n): n Select
Do n times: Select * from Person where ID_Person=@FK_Old_ID_Person

A person at time x: Not available (additional column “created” for table person needed)

A person by a company at the past: Not exactly defined

Next person by a person at the past: n Select
Do n times: Select * from Person where ID_Person=@FK_Old_ID_Person

Get the previous entry by an entry at the past: n Select
Do n times: Select * from Person where FK_Old_ID_Person=@ID

Get an integrity state over all entries: Not available (additional column “created” for table person needed)
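A minimal sketch of the Linked history items method, again using SQLite through Python's sqlite3 module instead of SQL Server. The reduced Person table and the helpers update_person and person_back are hypothetical; unlike the operation count given above, the sketch spends one extra select to read the old values before copying them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Person (
        ID_Person        INTEGER PRIMARY KEY AUTOINCREMENT,
        Name             TEXT    NOT NULL,
        Salary           INTEGER NOT NULL,
        FK_Old_ID_Person INTEGER REFERENCES Person (ID_Person)  -- link to older version
    )
""")

def update_person(conn, person_id, name, salary):
    """Copy the old values into a history row, then overwrite the current row."""
    old = conn.execute(
        "SELECT Name, Salary, FK_Old_ID_Person FROM Person WHERE ID_Person = ?",
        (person_id,),
    ).fetchone()
    copy_id = conn.execute(
        "INSERT INTO Person (Name, Salary, FK_Old_ID_Person) VALUES (?, ?, ?)", old
    ).lastrowid
    conn.execute(
        "UPDATE Person SET Name = ?, Salary = ?, FK_Old_ID_Person = ? WHERE ID_Person = ?",
        (name, salary, copy_id, person_id),
    )

def person_back(conn, person_id, n):
    """Follow the link n times and return (Name, Salary) of that version."""
    current = person_id
    for _ in range(n):  # one select per step, so the cost grows with n
        row = conn.execute(
            "SELECT FK_Old_ID_Person FROM Person WHERE ID_Person = ?", (current,)
        ).fetchone()
        if row is None or row[0] is None:
            return None
        current = row[0]
    return conn.execute(
        "SELECT Name, Salary FROM Person WHERE ID_Person = ?", (current,)
    ).fetchone()

person_id = conn.execute(
    "INSERT INTO Person (Name, Salary) VALUES (?, ?)", ("Müller", 1000)
).lastrowid
update_person(conn, person_id, "Hans", 1000)
update_person(conn, person_id, "Hans", 1500)
```

Because the rows carry no creation time, this sketch has no way to answer "a person at time x", which is the limitation noted above.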

Bidirectional Linked history items

Realizing: This method realizes a bidirectional log file per table. Based on the method “Linked history items”, the relation between the old and the new entry is moved into a separate table.

Focused on: 1. fast insertion of new entries, 2. extensibility by adding additional metadata to the separate table (for example, who changed the entry), and 3. the additional ability to navigate forward and backward within historical data.

Insert or update a person

Insert: 1 * Insert
Insert into Person Values ()

Update: 2 * Insert + 1 * Update
Insert into Person Values (oldValues, newId), Update PersonChanges Set FK_ID_Person_New=@newId where ID=@Id, Insert into PersonChanges Values (FK_ID_Person_New=@CurrentId, FK_ID_Person_Old=@newId)

Disk costs: Size(Person) + Size(PersonChanges)

Restore

Get an entry at (T – 1): 2 Select
Select FK_ID_Person_Old from PersonChanges where FK_ID_Person_New=@ID_Person, Select * from Person where ID_Person=@FK_ID_Person_Old

Get an entry at (T – n): n Select + 2 Select
Do n times: Select FK_ID_Person_Old from PersonChanges where FK_ID_Person_New=@ID_Person, Select * from Person where ID_Person=@FK_ID_Person_Old

A person at time x: nChanges * Select + Select
Select top 1 * from PersonChanges where FK_ID_Person_New=@ID_Person and [DateTime]>=@DateTime order by [Index] desc, Select * from Person where ID_Person=@ID

A person by a company at the past: Not exactly defined

Next person by a person at the past: 2 Select
Select * from PersonChanges where FK_ID_Person_New=@ID_Person order by [Index], Select * from Person where ID_Person=@FK_ID_Person_Old

Get the previous entry by an entry at the past: 2 Select
Select * from PersonChanges where FK_ID_Person_Old=@ID_Person order by [Index], Select * from Person where ID_Person=@FK_ID_Person_New

Get an integrity state over all entries: N * Select
For each person in (Select * from Person where ID_Person NOT IN (Select FK_ID_Person_New from PersonChanges)), find the person at time x.
For each company in (Select * from Company where ID_Company NOT IN (Select FK_ID_Company_New from CompanyChanges)), find the company at time x.

Remarks

The column Index [an automatically incremented identity] was added to the change tables because the resolution of the datetime type is too small and does not allow an unambiguous ordering.
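The bidirectional variant can be sketched in the same way. SQLite (via Python's sqlite3) is assumed in place of SQL Server; the reduced tables, the column names Idx and ChangedAt, and the helpers update_person, older_version and newer_version are hypothetical. The helpers are named by direction in time to sidestep the "next"/"previous" wording of the paper.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Person (
        ID_Person INTEGER PRIMARY KEY AUTOINCREMENT,
        Name      TEXT NOT NULL
    );
    CREATE TABLE PersonChanges (
        Idx              INTEGER PRIMARY KEY AUTOINCREMENT,  -- explicit ordering
        FK_ID_Person_New INTEGER NOT NULL REFERENCES Person (ID_Person),
        FK_ID_Person_Old INTEGER NOT NULL REFERENCES Person (ID_Person),
        ChangedAt        TEXT NOT NULL                       -- extra metadata
    );
""")

def update_person(conn, person_id, name, changed_at):
    """Copy the old values, relink existing history, record the change, update."""
    old = conn.execute(
        "SELECT Name FROM Person WHERE ID_Person = ?", (person_id,)
    ).fetchone()
    copy_id = conn.execute("INSERT INTO Person (Name) VALUES (?)", old).lastrowid
    # The copy takes over the current row's place in the existing chain.
    conn.execute(
        "UPDATE PersonChanges SET FK_ID_Person_New = ? WHERE FK_ID_Person_New = ?",
        (copy_id, person_id),
    )
    conn.execute(
        "INSERT INTO PersonChanges (FK_ID_Person_New, FK_ID_Person_Old, ChangedAt) "
        "VALUES (?, ?, ?)",
        (person_id, copy_id, changed_at),
    )
    conn.execute("UPDATE Person SET Name = ? WHERE ID_Person = ?", (name, person_id))

def older_version(conn, person_id):
    """ID of the version that was replaced by `person_id`, or None."""
    row = conn.execute(
        "SELECT FK_ID_Person_Old FROM PersonChanges WHERE FK_ID_Person_New = ?",
        (person_id,),
    ).fetchone()
    return row[0] if row else None

def newer_version(conn, person_id):
    """ID of the version that replaced `person_id`, or None."""
    row = conn.execute(
        "SELECT FK_ID_Person_New FROM PersonChanges WHERE FK_ID_Person_Old = ?",
        (person_id,),
    ).fetchone()
    return row[0] if row else None

def name_of(conn, person_id):
    return conn.execute(
        "SELECT Name FROM Person WHERE ID_Person = ?", (person_id,)
    ).fetchone()[0]

current = conn.execute("INSERT INTO Person (Name) VALUES (?)", ("A",)).lastrowid
update_person(conn, current, "B", "2008-11-11")
update_person(conn, current, "C", "2008-12-02")

one_back = older_version(conn, current)
two_back = older_version(conn, one_back)
```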

Transaction

Realizing: Transactions are stored in a separate table for restoring the history. For example, if the name of person x has changed from “Müller” to “Hans”, a new transaction is inserted that contains (Action=Person.MutateName, OldValue=”Müller”, EntryID={ID}, DateTime={Date}).

Focused on: 1. low storage cost and 2. retrieving precise information about the history at every point in time.

Insert or update a person

Insert: 1 * Insert
Insert into Person (Values)

Update: 1 * Insert + 1 * Update
Update Person SET Name=@Name, Salary=@Salary, Image=@Image, FK_ID_Company=@ID_Company Where ID_Person=@ID, Insert INTO [Transaction] VALUES (@ID_Transaction,@NewIntValue,@NewStringValue,@DateTime,@EntryID,@Action,@Index)

Disk costs: Size(Transaction)

Restore

Get an entry at (T – 1): 1 Select
Select top 1 * from [Transaction] where EntryID=@ID_Person AND Action Like 'Person.%' order by [Index] Desc. Apply this transaction.

Get an entry at (T – n): 1 Select + (OCPU(nChanges))
Select top n * from [Transaction] where EntryID=@ID_Person AND Action Like 'Person.%' order by [Index] Desc. Apply these transactions.

A person at time x: 1 Select + (OCPU(nChanges))
Select top n * from [Transaction] where EntryID=@ID_Person AND Action Like 'Person.%' AND [DateTime]>=@DateTime order by [Index] Desc. Apply these transactions.

A person by a company at the past: 1 Select + (OCPU(NChanges))
Select * from Person, Select * from [Transaction] where Action Like 'Person.%' AND [DateTime]>=@DateTime order by [Index] Desc. Apply these transactions to all persons. Filter these persons by ID_Company=@ID.

Next person by a person at the past: 1 Select + (OCPU(nChanges))
Select top n * from [Transaction] where EntryID=@ID_Person AND Action Like 'Person.%' AND [Index] > @Index AND [Index] < @Index + n order by [Index] Desc. Apply these transactions.

Get the previous entry by an entry at the past: 1 Select + (OCPU(nChanges))
Select top n * from [Transaction] where EntryID=@ID_Person AND Action Like 'Person.%' AND [Index] < @Index AND [Index] > @Index - n order by [Index] Desc. Apply these transactions.

Get an integrity state over all entries: nTables * Select + 1 * Select + (OCPU(NChanges))
Select * from Person, Select * from Company, Select * from [Transaction]. Apply these transactions to all loaded objects.

Remarks

The column Index [an automatically incremented identity] was added to the Transaction table because the resolution of the datetime type is too small and does not allow an unambiguous ordering.
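The Transaction method can be sketched as follows, with SQLite (Python's sqlite3) standing in for SQL Server. The sketch follows the OldValue variant from the “Müller”/“Hans” example and covers only the action Person.MutateName; the table name TransactionLog, the columns Idx and ChangedAt, and the helpers rename_person and name_back are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Person (
        ID_Person INTEGER PRIMARY KEY AUTOINCREMENT,
        Name      TEXT NOT NULL
    );
    CREATE TABLE TransactionLog (
        Idx       INTEGER PRIMARY KEY AUTOINCREMENT,  -- explicit ordering
        EntryID   INTEGER NOT NULL,
        Action    TEXT    NOT NULL,
        OldValue  TEXT,
        ChangedAt TEXT    NOT NULL
    );
""")

def rename_person(conn, person_id, new_name, changed_at):
    """Update the person in place and log the old value as a transaction."""
    (old_name,) = conn.execute(
        "SELECT Name FROM Person WHERE ID_Person = ?", (person_id,)
    ).fetchone()
    conn.execute(
        "UPDATE Person SET Name = ? WHERE ID_Person = ?", (new_name, person_id)
    )
    conn.execute(
        "INSERT INTO TransactionLog (EntryID, Action, OldValue, ChangedAt) "
        "VALUES (?, ?, ?, ?)",
        (person_id, "Person.MutateName", old_name, changed_at),
    )

def name_back(conn, person_id, n):
    """Restore the name n changes ago: one select, then apply n transactions."""
    (name,) = conn.execute(
        "SELECT Name FROM Person WHERE ID_Person = ?", (person_id,)
    ).fetchone()
    transactions = conn.execute(
        "SELECT Action, OldValue FROM TransactionLog "
        "WHERE EntryID = ? AND Action LIKE 'Person.%' "
        "ORDER BY Idx DESC LIMIT ?",
        (person_id, n),
    ).fetchall()
    for action, old_value in transactions:  # newest first: undo step by step
        if action == "Person.MutateName":
            name = old_value
    return name

person_id = conn.execute(
    "INSERT INTO Person (Name) VALUES (?)", ("Müller",)
).lastrowid
rename_person(conn, person_id, "Hans", "2008-11-11")
rename_person(conn, person_id, "Peter", "2008-12-02")
```

Only the changed value is logged, which keeps storage low, but the client has to replay the transactions itself. That is the CPU cost written as OCPU(nChanges) above.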


4.2. Test environment and benchmark criteria

CPU: Intel Core 2, 2 GHz; memory: 2 GB; operating system: Windows XP SP3; database: Microsoft SQL Server 2005 Express Edition with SP1. The benchmark is written in C#.

The benchmark is divided into eight test steps. A test step contains n test step points. A test step point is represented by (execution time, rows returned by the database, rows inserted into the database).

Four configuration parameters define the n test points:

- countCompanies [Default: 500]: sets how many companies are inserted by test step 0.
- countPersons [Default: 500]: sets how many persons are inserted by test step 1.
- countChangeCompany [Default: 500]: sets how many times a company is changed by test step 2.
- countChangePerson [Default: 500]: sets how many times a person is changed by test step 3.

A benchmark contains the following eight test steps:

0. Insert companies: countCompanies companies are inserted. After each insertion, a test step point is generated.
1. Insert persons: countPersons persons are inserted. After each insertion, a test step point is generated.
2. Change companies: countChangeCompany companies are changed. After each update, a test step point is generated.
3. Change persons: countChangePerson persons are changed. After each update, a test step point is generated.
4. Find a person by its parent person: for i = 0 to countChangePerson, a test step point is generated after the i-th change of the person has been found.
5. Collect all persons and companies that are valid at a specific time: for i = 0 to 100, a test point is generated after all active persons and companies at time T-i have been found.
6. Find a person in the past by a datetime value: for i = 0 to 100, a test point is generated after the person at time T-i has been found.
7. Find a person by a company by a datetime value: for i = 0 to 100, a test point is generated after the person for a company at time T-i has been found.

Limitation of benchmarks

- By default, C# provides a timer, “DateTime.Now.GetTickCount”, that only has a resolution of about 10 milliseconds, see (23). That is too coarse for exact time measurement. Instead, a kernel32 function is used that allows a resolution of at least 1/1193182 s (23).
- Because Windows XP is not a real-time operating system, the time measurements tended to spread significantly. The test result used is therefore the extended median (24) of 11 rounds of a single test. The extended median is the arithmetic mean of the five values in the middle of the test data list after ordering the test data. Example: (ordered test data: 2,5,8,11,14,17,20,23,26,29,32,35,38,41,44,47,50,53,56,59,62,65,68,71,74; five values: 32,35,38,41,44; result: 38)
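The extended median described above can be written as a small Python function. This is a sketch of the definition given in the text, not the code of the C# benchmark; the function name and the window parameter are hypothetical, and the window is only centred exactly when the number of values is odd, as in the example.

```python
def extended_median(values, window=5):
    """Arithmetic mean of the `window` middle values of the ordered data."""
    if len(values) < window:
        raise ValueError("need at least as many values as the window size")
    ordered = sorted(values)
    start = (len(ordered) - window) // 2
    middle = ordered[start:start + window]
    return sum(middle) / len(middle)

# The ordered test data from the example in the text: 2, 5, 8, ..., 74.
test_data = list(range(2, 75, 3))
result = extended_median(test_data)
```

Compared with a plain mean, this discards outliers at both ends, which matters when the operating system occasionally delays a measurement.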


5. Results

For each model described above, a benchmark has been developed. The benchmarks are available at (25). The test points generated by the benchmarks yield a timeline per relational DBMS data model. These timelines are shown in the following sections.

5.1. Duplication

The theory and the measurements show the same behavior: 1. Inserting and updating values is inefficient. 2. Reading and searching take nearly constant time. 3. A large amount of disk space is used. Unfortunately, the time for reading and searching entries is constantly high. The reason for the constant but high access time is that inserting and updating the entries created about 0.8 million company entries and about 0.6 million person entries. This method can only be used efficiently if the number of entries is small and changes are infrequent. The target application therefore has to handle a small number of entries that are optimized for reading, for example: 1. settings entries, 2. statistics entries that are updated every month, 3. master data of an application. To validate this assumption, a new benchmark for this method was executed with the following settings: 1. countCompanies = 1, 2. countPersons = 10, 3. countChangeCompany = 1, 4. countChangePerson = 100. This new benchmark results in the following timeline. As already seen above, reading and searching take nearly constant time. The difference is that the execution time is now at most about 10 milliseconds.

A second optimization, let’s call it “ChangeSets”, duplicates only the changed entries. This results in: 1. less data storage, 2. an acceptable overhead in reading. Change sets in a version control system like (2) are realized by this optimized version.

5.2. Linked history items

The theory and the measurements are nearly equal. Test step four, which does not have a linear execution time, had already been identified in the theory section.

Note that steps 5 to 7 are not supported by this model: 1. Collect all persons and companies that are valid at a specific time, 2. Find a person in the past by a datetime value, 3. Find a person by a company by a datetime value.

5.3. Bidirectional Linked history items

The theory and the measurements agree: 1. Inserting and updating is constantly linear. 2. Finding a person in the past by a datetime value has a constant execution time. The execution time is proportional to the number of entries that have changed.

The difference from the method “Linked history items” is the use of one additional insert statement when updating entries. In return, this makes it possible to run steps four to seven as well.

5.4. Transaction

The theory and the measurements agree for this model. The only bottleneck is the huge amount of data that has to be transferred for test steps four to seven. This can be avoided by using a so-called “anchor transaction” or “savepoint transaction” (26). Let’s call this optimization “Transaction with anchor”. These transactions save the complete state of each entry in the database again. To restore an entry: 1. Find the nearest anchor in time. 2. Find the transactions from this anchor up to the given time. This results in: 1. O(maxChangesBetweenTwoAnchors) instead of O(nChanges) for finding a person in time (test step 7). 2. The storage cost increases by the storage used for the anchor transactions. These anchor transactions can be generated, for example, every month or every night.
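The restore procedure with anchors can be sketched in plain Python. Several points are assumptions rather than statements from the paper: the "nearest" anchor is taken to be the latest anchor at or before the requested time, transactions carry new values and are applied forward from the anchor, times are simple integers, and the function name restore and both data layouts are hypothetical.

```python
from bisect import bisect_right

def restore(anchors, transactions, at_time):
    """Rebuild the state of one entry at `at_time`.

    anchors:      list of (time, full_state_dict), sorted by time
    transactions: list of (time, field, new_value), sorted by time
    """
    anchor_times = [time for time, _ in anchors]
    position = bisect_right(anchor_times, at_time) - 1
    if position < 0:
        raise ValueError("no anchor at or before the requested time")
    anchor_time, snapshot = anchors[position]

    state = dict(snapshot)  # copy, so the stored anchor stays untouched
    # Only transactions after the anchor and up to the requested time matter.
    # In a DBMS this range predicate would be answered by an index on the
    # time column; the plain loop here is kept simple for illustration.
    for time, field, new_value in transactions:
        if anchor_time < time <= at_time:
            state[field] = new_value
    return state

anchors = [
    (0, {"Name": "Müller", "Salary": 1000}),
    (10, {"Name": "Hans", "Salary": 1500}),
]
transactions = [
    (3, "Name", "Hans"),
    (7, "Salary", 1500),
    (12, "Salary", 2000),
]

state_at_5 = restore(anchors, transactions, 5)
state_at_12 = restore(anchors, transactions, 12)
```

For time 12, only the single transaction after the anchor at time 10 has to be applied, however many changes happened before that anchor.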


6. Discussions

In general, there is no single right relational DBMS data model for a given problem.

The four data models above are only a small selection of the possibilities that are available for storing historical data. Other possibilities are: a. Combine the data models to reduce some unwanted effects. b. Optimize the models based on the application requirements. For example, add an anchor to the transaction method to save CPU time and network bandwidth, at the cost of more storage. c. Apply different model strategies to different columns of a table. d. Use the built-in support of a database management system for storing historical data. For example, on Oracle use the “Oracle Total Recall” function (5). e. Use triggers at the database level to generate the entries for the transaction table used by the method “Transaction” automatically. This makes the collection of history data transparent to the application level.
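Possibility e. can be sketched as follows. The example uses SQLite through Python's standard `sqlite3` module purely for illustration; the tables `person` and `person_transaction`, their columns and the trigger name are assumptions and do not come from the benchmark (25). Trigger syntax differs between database systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE person (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    city TEXT NOT NULL
);

-- Hypothetical transaction table: one row per changed column value.
CREATE TABLE person_transaction (
    tx_id       INTEGER PRIMARY KEY AUTOINCREMENT,
    person_id   INTEGER NOT NULL,
    changed_at  TEXT    NOT NULL DEFAULT CURRENT_TIMESTAMP,
    column_name TEXT    NOT NULL,
    old_value   TEXT,
    new_value   TEXT
);

-- The trigger writes the history row, so the application only issues
-- an ordinary UPDATE and does not need to know about the history.
CREATE TRIGGER person_city_history
AFTER UPDATE OF city ON person
FOR EACH ROW
WHEN OLD.city <> NEW.city
BEGIN
    INSERT INTO person_transaction (person_id, column_name, old_value, new_value)
    VALUES (OLD.id, 'city', OLD.city, NEW.city);
END;
""")

conn.execute("INSERT INTO person (id, name, city) VALUES (1, 'Meier', 'Bern')")
conn.execute("UPDATE person SET city = 'Zurich' WHERE id = 1")

rows = conn.execute(
    "SELECT person_id, column_name, old_value, new_value "
    "FROM person_transaction ORDER BY tx_id"
).fetchall()
```

After the update, `rows` holds one history row for the change of `city`. A production setup would need one such trigger (or trigger branch) per tracked column, and possibly triggers for inserts and deletes as well.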

However, depending on the requirements of the application, some candidates fit better than others:

| Data volume | Change frequency | Method | Samples |
|---|---|---|---|
| Low | Low | Duplication | |
| Low | Middle | Linked history items; Bidirectional linked history items | |
| Low | High | Linked history items; Bidirectional linked history items; Transaction with anchor | Measurement values of a weather station; logging of stock exchange data |
| Middle | Low | Change Set; Linked history items; Bidirectional linked history items | Master data of an application; CVS system |
| Middle | Middle | Linked history items; Bidirectional linked history items | Data of the registration office |
| Middle | High | Linked history items or Bidirectional linked history items; Transaction | Stock data |
| High | Low | Change Set; Linked history items or Bidirectional linked history items | Master data of an application; data of the department of statistics; CVS system |
| High | Middle | Linked history items or Bidirectional linked history items; Transaction | Data of the department of statistics |
| High | High | Transaction with anchor | File system that supports a timeline; bank software |


Settings Entries

Storing of photos for a user profile

Single table application

Statistical data that is saved periodically each month

The following advice can be given:

1. If storage is limited, use the methods in the following order: a. Transaction, b. Linked history items, c. Bidirectional linked history items, d. Transaction with anchor, e. Change Set based on Duplication, f. Duplication.

2. If network bandwidth is limited, use the methods in the following order: a. Change Set based on Duplication, b. Duplication, c. Linked history items, d. Bidirectional linked history items, e. Transaction with anchor, f. Transaction.

3. If the developers' knowledge is limited, use either the method Duplication or the method Linked history items.

4. If the data volume is high, use the methods in the following order: a. Transaction, b. Linked history items, c. Bidirectional linked history items, d. Transaction with anchor, e. Change Set based on Duplication, f. Duplication.

5. If the change frequency of the data is high, use the methods in the following order: a. Transaction with anchor, b. Transaction, c. Linked history items, d. Bidirectional linked history items, e. Change Set based on Duplication, f. Duplication.

7. Literature Cited and References

1. Apple. Time Machine. A giant leap backwards. [Online] [Cited: 11 5, 2008.] http://www.apple.com/macosx/features/timemachine.html.

2. SubVersion. Open Source Software Engineering Tools, Subversion. [Online] [Cited: 11 5, 2008.] http://subversion.tigris.org/.

3. CVS. CVS - Concurrent Versions System. [Online] [Cited: 11 2, 2008.] http://www.nongnu.org/cvs.

4. Lum, V., et al. Designing DBMS support for the temporal dimension. s.l. : ACM, 1984. Vol. 14, 2. ISSN: 0163-5808.

5. Oracle. Total Recall. [Online] 2007. [Cited: 12 18, 2008.] http://www.oracle.com/technology/products/database/oracle11g/pdf/total-recall-datasheet.pdf.

6. brüggemann@iwi.uni-hannover.de. Vorlesung: Datenorganisation SS 2005. [Online] 2005. [Cited: 11 19, 2008.] http://www.iwi.uni-hannover.de/lv/do_ss05/do-08.pdf.

7. Lee, Gilber and Jan, Hewitt. OLTP. [Online] 8 13, 2008. [Cited: 11 18, 2008.] http://searchdatacenter.techtarget.com/sDefinition/0,,sid80_gci214138,00.html.

8. Davenport, Thomas H. and Harris, Jeanne G. Competing on Analytics: The New Science of Winning. s.l. : Harvard Business School Press, 2007. ISBN 978-1422103326.

9. Joy Mundy, Intelligent Enterprise. Kimball University: Handling Arbitrary Restatements of History. [Online] Intelligent Enterprise, 12 9, 2007. [Cited: 11 13, 2008.] http://www.informationweek.com/news/showArticle.jhtml?articleID=204800027&pgno=1.

10. Kimball, Ralph. The Soul of the Data Warehouse, Part 3: Handling Time. [Online] Kimball University, 4 1, 2003. [Cited: 11 14, 2008.] http://www.intelligententerprise.com/030422/607warehouse1_1.jhtml.

11. The University of Texas at Austin. Normalization. [Online] Information Technology Services at The University of Texas at Austin, 2 29, 2004. [Cited: 11 13, 2008.] http://www.utexas.edu/its/archive/windows/database/datamodeling/rm/rm7.html.


12. Wilson, Prof. David B. Database Structures. [Online] Administration of Justice, George Mason University, 6 13, 2006. [Cited: 11 13, 2008.] mason.gmu.edu/~dwilsonb/downloads/database_structure_overheads.ppt (p 1-4).

13. Organisation Intergouvernementale de la Convention du Mètre. The International System of Units (SI), 8th edition. [Online] 1 1, 2006. [Cited: 11 13, 2008.] http://www.bipm.org/utils/common/pdf/si_brochure_8_en.pdf.

14. University of Bristol. Dictionary of Computer Technology. [Online] University of Bristol, 2008. [Cited: 11 17, 2008.] http://www.cs.bris.ac.uk/Teaching/Resources/COMS11200/techno.html.

15. ISO/IEC 9075. BNF Grammar for ISO/IEC 9075:1992 - Database Language SQL (SQL-92). [Online] 92. [Cited: 11 18, 2008.] http://savage.net.au/SQL/sql-92.bnf.html.

16. Microsoft. Date and Time Data Types and Functions, SQL 2008. [Online] 2008. [Cited: 11 18, 2008.] http://msdn.microsoft.com/en-us/library/ms186724.aspx.

17. —. Data Types (Transact-SQL). [Online] Microsoft, 9 2007. [Cited: 11 17, 2008.] http://msdn.microsoft.com/en-us/library/ms187752(SQL.90).aspx.

18. —. WEEK( ) Function. [Online] Microsoft, 1 1, 2008. [Cited: 13 12, 2008.] http://msdn.microsoft.com/en-us/library/aa978670(VS.71).aspx.

19. Lips, Thomas. Datenbanksysteme 2, Zeit in der Datenbank. [Powerpoint] Rapperswil : HSR, 2004.

20. Mills, D.L. RFC, Network Time Protocol (NTP). [Online] M/A-COM Linkabit, 1985. [Cited: 12 1, 2008.] http://tools.ietf.org/html/rfc958.

21. Mills, D. RFC, Simple Network Time Protocol (SNTP) Version 4 for IPv4, IPv6 and OSI. [Online] University of Delaware, 2006. [Cited: 12 1, 2008.] http://tools.ietf.org/html/rfc4330.

22. Microsoft. How to configure an authoritative time server in Windows XP, Q314054. [Online] Microsoft, 4 12, 2006. [Cited: 12 1, 2008.] http://support.microsoft.com/kb/314054/en-us.

23. —. How To Use QueryPerformanceCounter to Time Code. [Online] Microsoft, 6 25, 2007. [Cited: 11 1, 2008.] http://support.microsoft.com/kb/172338/en-us.

24. Williman, Prof. Dr. Louis Sepp. Wahrscheinlichkeits und Statistik. [book auth.] Prof. Dr. Louis Sepp William. Wahrscheinlichkeits und Statistik. Rapperswil : HSR, 2004, p. 20.

25. Gfeller Raphael, RaphaelGfeller@sunrise.ch. Implementation of a benchmark for the four common rational DMS data models for storing historical data. [Online] 11 10, 2008. [Cited: 11 11, 2008.] http://wiki.hsr.ch/Datenbanken/files/DB.zip.

26. Microsoft. SAVE TRANSACTION (Transact-SQL). [Online] Microsoft, 2008. [Cited: 12 18, 2008.] http://msdn.microsoft.com/en-us/library/ms188378.aspx.
