IEEE Paper Template in A4 (V1)

advertisement

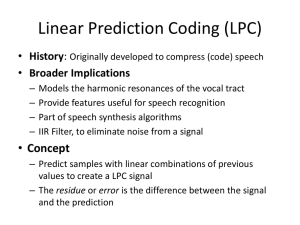

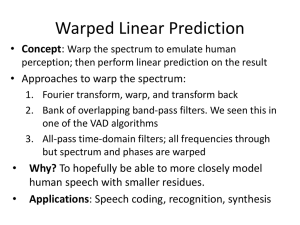

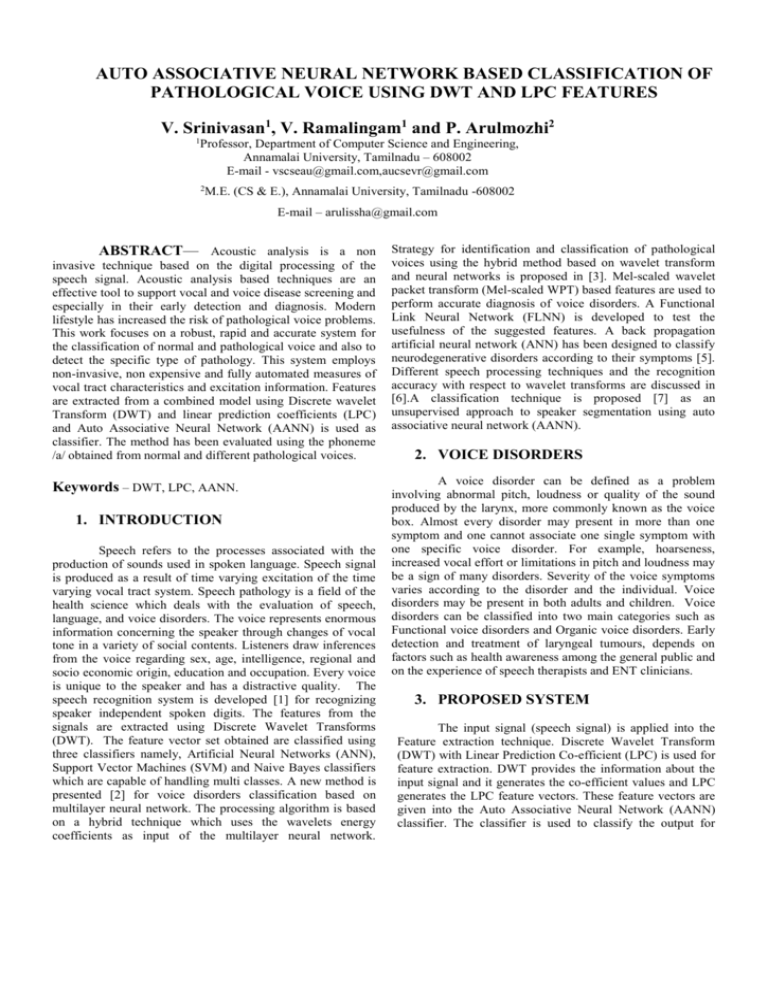

AUTO ASSOCIATIVE NEURAL NETWORK BASED CLASSIFICATION OF PATHOLOGICAL VOICE USING DWT AND LPC FEATURES V. Srinivasan1, V. Ramalingam1 and P. Arulmozhi2 1 Professor, Department of Computer Science and Engineering, Annamalai University, Tamilnadu – 608002 E-mail - vscseau@gmail.com,aucsevr@gmail.com 2 M.E. (CS & E.), Annamalai University, Tamilnadu -608002 E-mail – arulissha@gmail.com ABSTRACT— Acoustic analysis is a non invasive technique based on the digital processing of the speech signal. Acoustic analysis based techniques are an effective tool to support vocal and voice disease screening and especially in their early detection and diagnosis. Modern lifestyle has increased the risk of pathological voice problems. This work focuses on a robust, rapid and accurate system for the classification of normal and pathological voice and also to detect the specific type of pathology. This system employs non-invasive, non expensive and fully automated measures of vocal tract characteristics and excitation information. Features are extracted from a combined model using Discrete wavelet Transform (DWT) and linear prediction coefficients (LPC) and Auto Associative Neural Network (AANN) is used as classifier. The method has been evaluated using the phoneme /a/ obtained from normal and different pathological voices. Keywords – DWT, LPC, AANN. 1. INTRODUCTION Speech refers to the processes associated with the production of sounds used in spoken language. Speech signal is produced as a result of time varying excitation of the time varying vocal tract system. Speech pathology is a field of the health science which deals with the evaluation of speech, language, and voice disorders. The voice represents enormous information concerning the speaker through changes of vocal tone in a variety of social contents. Listeners draw inferences from the voice regarding sex, age, intelligence, regional and socio economic origin, education and occupation. Every voice is unique to the speaker and has a distractive quality. The speech recognition system is developed [1] for recognizing speaker independent spoken digits. The features from the signals are extracted using Discrete Wavelet Transforms (DWT). The feature vector set obtained are classified using three classifiers namely, Artificial Neural Networks (ANN), Support Vector Machines (SVM) and Naive Bayes classifiers which are capable of handling multi classes. A new method is presented [2] for voice disorders classification based on multilayer neural network. The processing algorithm is based on a hybrid technique which uses the wavelets energy coefficients as input of the multilayer neural network. Strategy for identification and classification of pathological voices using the hybrid method based on wavelet transform and neural networks is proposed in [3]. Mel-scaled wavelet packet transform (Mel-scaled WPT) based features are used to perform accurate diagnosis of voice disorders. A Functional Link Neural Network (FLNN) is developed to test the usefulness of the suggested features. A back propagation artificial neural network (ANN) has been designed to classify neurodegenerative disorders according to their symptoms [5]. Different speech processing techniques and the recognition accuracy with respect to wavelet transforms are discussed in [6].A classification technique is proposed [7] as an unsupervised approach to speaker segmentation using auto associative neural network (AANN). 2. VOICE DISORDERS A voice disorder can be defined as a problem involving abnormal pitch, loudness or quality of the sound produced by the larynx, more commonly known as the voice box. Almost every disorder may present in more than one symptom and one cannot associate one single symptom with one specific voice disorder. For example, hoarseness, increased vocal effort or limitations in pitch and loudness may be a sign of many disorders. Severity of the voice symptoms varies according to the disorder and the individual. Voice disorders may be present in both adults and children. Voice disorders can be classified into two main categories such as Functional voice disorders and Organic voice disorders. Early detection and treatment of laryngeal tumours, depends on factors such as health awareness among the general public and on the experience of speech therapists and ENT clinicians. 3. PROPOSED SYSTEM The input signal (speech signal) is applied into the Feature extraction technique. Discrete Wavelet Transform (DWT) with Linear Prediction Co-efficient (LPC) is used for feature extraction. DWT provides the information about the input signal and it generates the co-efficient values and LPC generates the LPC feature vectors. These feature vectors are given into the Auto Associative Neural Network (AANN) classifier. The classifier is used to classify the output for either normal or pathological. The block diagram of the proposed system is shown in Figure 1. Input (Speech Signal) Framing First we split the signal up into several frames such that we are analyzing each frame in the short time instead of analyzing the entire signal at once. Also an overlapping is applied to frames because on each individual frame, we will be applying a hamming window which will get rid of some of the information at the beginning and end of each frame. Overlapping will then reincorporate this information back into our extracted features. Windowing Feature Extraction (DWT with LPC) Classifier (AANN) Windowing step is meant to window each individual frame, in order to minimize the signal discontinuities at the beginning and the end of each frame. This is to select a portion of the signal that can reasonably be assumed stationary. Windowing is performed to avoid unnatural discontinuities in the speech segment and distortion in the underlying spectrum. The choice of the window is a trade off between several factors. In speaker recognition, the most commonly used window shape is the hamming window. Discrete Wavelet Transform (DWT) Output (Normal/ Pathological) Figure 1 Block Diagram of Proposed System 3.1 FEATURE EXTRACTION Feature Extraction is a major part of the speech recognition system since it plays an important role to separate one speech from other and this has been an important area of research for many years. Selection of the feature extraction technique plays an important role in the recognition accuracy, which is the main criterion for a good speech recognition system. Pre -processing To enhance the accuracy and efficiency of the extraction processes, speech signals are normally preprocessed before features are extracted. The aim of this stage is to boost the amount of energy in the high frequencies. The drop in energy across frequencies is caused by the nature of the glottal pulse. Boosting the high frequency energy makes information from these higher formants available to the acoustic model. The pre-emphasis filter is applied on the input signal before windowing. DWT is a relatively recent and computationally efficient technique for extracting information from nonstationary signals like audio. The main advantage of the wavelet transforms is that it has a varying window size, being broad at low frequencies and narrow at high frequencies, thus leading to an optimal time–frequency resolution in all frequency ranges. DWT uses digital filtering techniques to obtain a time-scale representation of the signals. DWT is defined by Equation..1. 𝑊𝑥 (𝑎, 𝑏 ) 𝑤 = 1 √𝑎 ∞ ∫ −∞ 𝑥(𝑡)𝜑∗ ( 𝑡−𝑏 )𝑑𝑡 … . . (1) 𝑎 where the function 𝜑 (t), a, and b are called the (mother) wavelet, scaling factor, and translation parameter, respectively. The DWT can be viewed as the process of filtering the signal using a low pass (scaling) filter and high pass (wavelet) filter. Thus, the first layer of the DWT decomposition of a signal splits it into two bands giving a low pass version and a high pass version of the signal. The low pass signal gives the approximate representation of the signal while the high pass filtered signal gives the details or high frequency variations. The second level of decomposition is performed on the low pass signal obtained from the first level of decomposition as shown in Figure 2. DWT with LPC Figure 2 General Structure of DWT The input signal (speech signal) is applied to the pre-processing, framing and windowing operation. Then three level Discrete Wavelet Transform (DWT) is applied. The three levels of the output are applied into the LPC. (The output of the first level of co-efficient (i.e) approximate components (D1) of the input signal is applied into the LPC. Similarly second and third level of co-efficient is applied into the LPC. In second level choosing the approximate components (D2) of the output is applied into the LPC. In third level both the approximate (D3) and detailed components (A3) of the input signal is applied into the LPC). The Figure 4 shows the feature extraction using DWT with LPC. Linear Predication Coefficient (LPC) Speech Samples Input (Speech Signal) Pre Emphasis Framing Pre-Processing, Framing and Windowing Windowing LPC Feature Vectors LPC Analysis 3 Level DWT Auto correlation Figure 3 Block Diagram of LPC The theory of linear prediction (LP) is closely linked to modelling of the vocal tract system, and relies upon the fact that a particular speech sample may be predicted by a linear weighted sum of the previous samples. The number of previous samples used for prediction is known as the order of prediction. The weights applied to each of the previous speech samples are known as linear prediction coefficients (LPC). They are calculated so as to minimize the prediction error. The steps for computing LPC is illustrated in Figure 3. After obtaining the autocorrelation of a windowed frame, the linear prediction coefficients are obtained using Levinson-Durbin recursive algorithm. This is known as LPC analysis. The LPC analysis produces LPC features vectors. A3 D3 LPC LPC D2 D1 LPC LPC DWT with LPC features Figure 4 Feature Extraction using DWT with LPC. - AUTO ASSOCIATIVE algorithm which starts at the output nodes and works back to the hidden layer. NEURAL NETWORK (AANN) 3.2 CLASSIFICATION A special kind of back propagation neural network called auto associative neural network (AANN) is used to capture the distribution of feature vectors in the feature space. We use AANNs with 5 layers as shown in Figure 5. This architecture consists of three non-linear hidden layers between the linear input and output layers. The second hidden layer contains fewer nodes than the input layer, and is known as the compression layer. In this network, the second and fourth layers have more units than the input layer. The third layer has fewer units than the first or fifth. The processing units in the first and third hidden layer are nonlinear, and the units in the second compression/hidden layer can be linear or nonlinear. As the error between the actual and the desired output vectors is minimized, the cluster of points in the input space determines the shape of the hyper surface obtained by the projection onto the lower dimensional space. Testing is the application mode where the network processes test pattern presented at its input layer and creates a response at the output layer. The vector values are arranged in matrix format to make it feasible to work with AANN. The frames of values are trained using AANN which uses the back propagation algorithm. The weight values are adjusted to get the input features as output. The obtained features are tested to find the normalized square error between the input and the output features. The error is transformed into confidence score which shows the similarity between the input and the output. AANN Training Algorithm 1. Select and apply the training pair from the training set to the network. 2. Calculate the output of the network. 3. Calculate the error between the output of the network and the input. 4. Adjust the weights (V, W matrix) in such a way that the error is minimized. 5. Repeat steps 1 to 4 for all the training pairs. 6. Repeat steps 1 to 5 until the network recognizes the training set or for certain epochs. Testing is the application mode where the network processes a tested input pattern presented at its input layer and creates a response at the output layer. The main application area includes Pattern Recognition, Voice Recognition, Bioinformatics, Signal Validation, and Net Clustering. 4. EXPERIMENTAL RESULT 4.1 Database Figure 5 Auto Associative Neural Network The network’s weights are initially set to small random values. Training is the process of adapting or modifying the weights in response to the training input patterns being presented at the input layer. The training algorithm controls the response of the weights to adapt the learning algorithm. During the training process, weights will gradually converge towards the values which will match the input feature vector to the target. From the input vector, an output vector is produced by the network which can then be compared to the target output vector. If there is no difference between the produced output and target output vectors, learning stops. Otherwise the weights are changed to reduce the difference. The weights are adapted using a recursive The database used in this work is created by recording normal and pathological voices from the patients of Raja Muthiah Medical College Hospital, Annamalai University. The speech samples are the sustained phonation of the vowel /a/ (1-5s) long. All the speech samples were recorded in a controlled environment and sampled with 11.025 kHz sampling rate and 16 bits of resolution. Data have been divided into two subsets one is training and another one is testing. 4.2 Feature Extraction 20 samples are taken for analysis (10 for training and 10 for testing).16 features are extracted from each samples after applying the techniques such as pre-emphasis, framing, windowing, three level DWT and LPC. The speech signals and the extracted features are shown in Figure 6(a), Figure 6(b), Figure 7(a) and Figure 7(b). 4.3 Classification The DWT with LPC feature vectors derived from the speech samples (both normal and pathological voices) are used as input for the Auto Associative Neural Network for training and testing. The extracted features are trained using AANN of structure 16 L 32 N 10 N 32 N 16 L which uses the backpropagation algorithm for updation of weights. 4.4 Performance Evaluation In order to evaluate the performance of the detector, Figure 6(a) Normal Voice and to allow comparisons, several ratios have been taken into account. 𝑇𝑃 Sensitivity (SE) = 100 . 𝑇𝑃+𝐹𝑁 TN Specificity (SP) = 100. TN+FP Efficiency (E) = 100∙ TN+TP TN+TP+FN+FP Where TN - True negative TP –True Positive Figure 6(b) Feature Extraction of Normal Voice FP-False Positive FN –False Negative Table 1 shows a comparative performance exhibited by classification based on DWT features, LPC features separately and the proposed method which uses DWT with LPC features. Table 1 – Performance Comparsion Figure 7(a) Pathological voice Figure 7(b) Feature Extraction of Pathological voices Feature Extraction Technique Classification of Pathological Voice using AANN Sensitivity Specificity Efficiency DWT 93.5 90.0 92.5 LPC 87.2 89.3 90.7 DWT with LPC 96.8 92.4 95.8 4.5 Performance Graph The Mean Square Error Vs Number of Epochs for training, validation, testing and best fit for DWT , LPC and DWT with LPC are shown in Figure 10(a), Figure 10(b) and Figure 10( c). The DWT with LPC shows a better result than DWT and LPC alone. The classification accuracy of DWT with LPC is nearly 95% in identifying pathological voice compared to DWT and LPC separately. Figure 10(c) Mean Square Error Vs Number of Epochs for training, validation, testing and best fit for DWT with LPC. CONCLUSION Figure 10(a) Mean Square Error Vs Number of Epochs for training, validation, testing and best fit for DWT. The accurate detection of pathological voice is a major research. That has attracted attention in the field of biomedical engineering and disorder for many years. This work focused on the problem of automatic detection of voice pathologies from the speech signal. The purpose of this work is to conceive a tool to assist the clinicians to detect the type of voice pathology. The proposed system can be used as a valuable tool for researchers and speech pathologist to detect whether the voice is normal or pathological and also to detect specific type of pathology. In the future the work may be extended to increase the accuracy of the system and also to develop an online diagnosing system. REFERENCES [1] Sonia suuny, David peter S,K.Poulose Jacob, “Performance of Different classifiers in speech recognition”, International Journal of Research Engineering and Technology , vol . 2, Issue 4, pp. 590-597, April 2013. Figure 10(b) Mean Square Error Vs Number of Epochs for training, validation, testing and best fit for LPC. [2] L.Salhi, M.Talbi, A.Cherif, “Voice disorders identification using hybrid Approach: Wavelet analysis and Multilayer Neural Networks”, World Academy of Science, Engineering & Technology 21, pp. 330-339, 2008. [3] Lotfi Salhi, Talbi Mourad, and Adnene cherif, “Voice disorders identification using Multilayer neural Network ” , The International Arab Journal of Information Technology, vol . 7, no-2, pp. 177-185, April 2010. [4] Paulraj M P,Sazali Yaacob and M.Hariharan, “ Diagnosis of voice disorders using Mel Scaled WPT and Functional Link Neural Network”, Biomedical soft computing and human science, vol . 14, no.2, pp. 55-60, 2009. [5] M.A.Anusuya, S.K.Katti, “Comparison of different Speech feature extraction Techniques with and without wavelet transform to Kannada speech recognition”, International Journal of Computer Application, vol. 26, no.4, pp. 19-24,July 2011. [6] Nivedita Chaudhary, Yogender Aggarwal,Rakesh Kumar Sinha, “ Artificial Neural Network based classification of neurodegenerative Diseases”, Advances in Bio-Medical Engineering Research (ABER), vol. 1, Issue 1, pp. 18,March 2013. [7] Navnath S Nehe and Raghunath S Holambe, “DWT and LPC based feature extraction methods for isolated word recognition”, EURASIP Journal on Audio speech, and music processing 2012, A Springer Journal. [8]S.Jothilakshmi, S.Palanivel, V.Ramalingam, “Unsupervised speaker segmentation using AANN”, International Journal of Computer Applications, vol. 1, no7,pp. 24-30,2010. [9] P.Dhanalakshmi, S.Palanivel ,M.Arul, “ Automatic Segmentation of broadcast audio signals using Auto Associative Neural Network”, ICTACT Journal on Communication Technology, vo l. 1, Issue 4, pp. 187190, Dec 2010. [10] Syed Mohammed Ali,Pradeep Tulshiram Karule, “ Speaker analysis of pathological & Normal speech signal ”, International Journal of Scientific and Engineering research, vol . 4, Issue 2, pp. 1-4, Feb 2013. [11] Barnali Gupta Banik,Samir K.Bandyopadhyay, “ A DWT method for image steganography” , International Journal of Advances Research in Computer Science and Software Engineering, vol. 3 ,Issue 6, pp . 983-989, June 2013. [12] K.Sreenivasa Rao, “Role of Neural Network Models for developing Speech systems”, Indian Academy of Science, Sadhana, vol . 36, Part 5,pp. 783-836, Ocotober 2011. [13] Nitin Trivedi,Vikesh Kumar,Saurabh singh,Sachin Ahuja,Raman Chadha, “ Speech Recognition by Wavelet Analysis ” , International Journal of Computer Applications(IJCA), vol. 15, no-8, pp.27-32, Feb 2011. [14] Mahmoud l.Abdalla,Haitham M.Abobakr,Tamer S.Gaafar, “ DWT and MFCC’s based feature extraction methods for isolated word recognition”, International Journal of Computer Applications (IJCA), vol. 69, no 20, pp. 20-26, May 2013. [15]P.Dhanalakshmi, S.Palanivel, V.Ramalingam, “Classification of Audio signals using AANN and GMM”, Elsevier, pp. 16-723, 2011.