Probability Theory

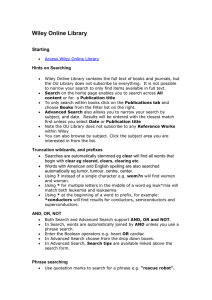

advertisement

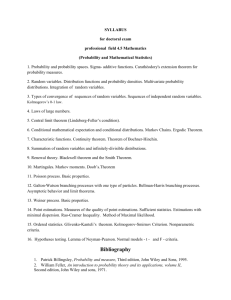

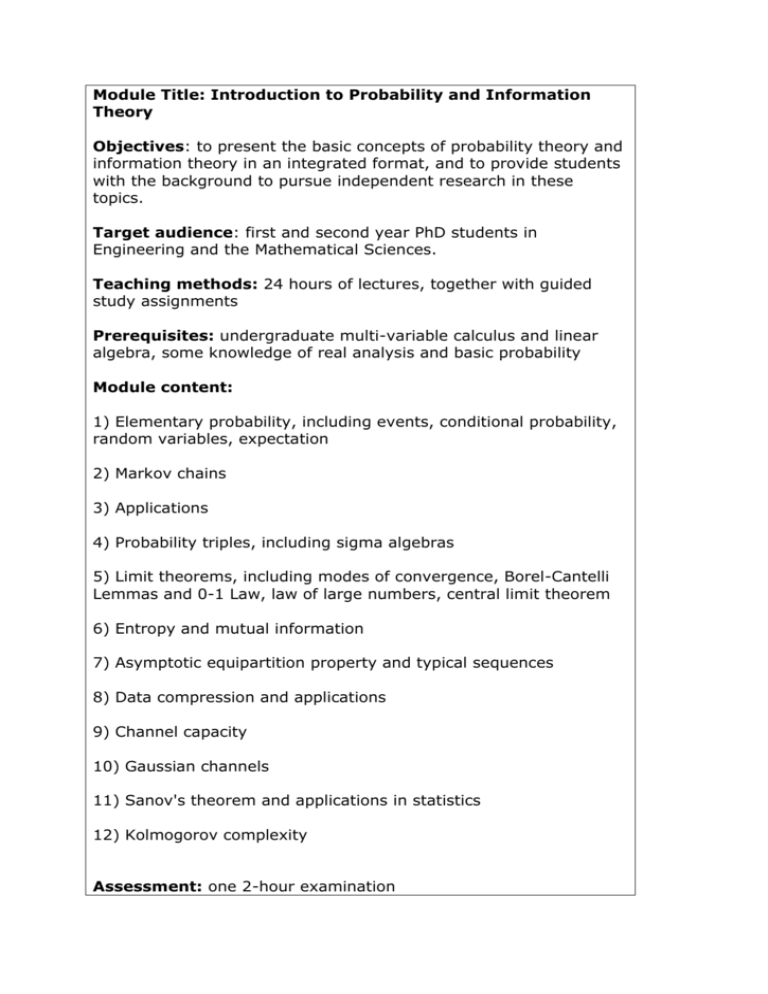

Module Title: Introduction to Probability and Information Theory

Objectives: To present the basic concepts of probability theory and information theory in an integrated format, and to provide students with the background to pursue independent research in these topics.

Target audience: First- and second-year PhD students in Engineering and the Mathematical Sciences.

Teaching methods: 24 hours of lectures, together with guided study assignments.

Prerequisites: Undergraduate multivariable calculus and linear algebra, and some knowledge of real analysis and basic probability.

Module content:

1) Elementary probability, including events, conditional probability, random variables, and expectation
2) Markov chains
3) Applications
4) Probability triples, including sigma-algebras
5) Limit theorems, including modes of convergence, the Borel-Cantelli lemmas and the 0-1 law, the law of large numbers, and the central limit theorem
6) Entropy and mutual information
7) The asymptotic equipartition property and typical sequences
8) Data compression and applications
9) Channel capacity
10) Gaussian channels
11) Sanov's theorem and applications in statistics
12) Kolmogorov complexity

Assessment: One 2-hour examination.

References:

P. Billingsley, “Probability and Measure”, third edition. Wiley (1995). [Wiley Series in Probability and Mathematical Statistics]

J. S. Rosenthal, “A First Look at Rigorous Probability Theory”, second edition. World Scientific (2006).

Y. Suhov and M. Kelbert, “Probability and Statistics by Example”, Volume 1: Basic Probability and Statistics. Cambridge University Press (2005).

T. M. Cover and J. A. Thomas, “Elements of Information Theory”, second edition. Wiley (2006).

A. Y. Khinchin, “Mathematical Foundations of Information Theory”. Dover, New York (1957).