MSA Generation Algorithm

In this section, we present a detailed description of the MSA generation algorithm.

Preprocessing

Given a set of sentences S derived from our corpus of documents and a target type t, identify all instances of t within the sentences of S. The target type t can be a number, date, drug name, or anatomy phrase. For example, if t is the set of all numbers, then in the sentence “there is a 2.5 x 4.1 mm nodule in the left upper lobe”, “2.5” and “4.1” would be identified as instances of t.

For each sentence s in S, let T_s denote the set of instances of t found in s. Next, iterate through the instances t′ in T_s and, for each t′, generate a new sentence s_t′ that is s with t′ replaced by the special symbol <target>. The sentences s_t′ are referred to as target-centered sentences.
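As an illustration of the two preprocessing steps above (finding T_s and building one s_t′ per instance), the following Python sketch handles the number target type. It assumes whitespace tokenization and a simple regular expression for numbers; the names `is_number` and `target_centered_sentences` are hypothetical, and dates, drug names, or anatomy phrases would need their own matchers.

```python
import re

TARGET = "<target>"
# Hypothetical matcher for the "number" target type (integers and decimals).
NUMBER_RE = re.compile(r"^\d+(?:\.\d+)?$")


def is_number(token: str) -> bool:
    """Return True if the token looks like an integer or decimal number."""
    return NUMBER_RE.match(token) is not None


def target_centered_sentences(sentence: str, is_target=is_number) -> list[list[str]]:
    """Return one target-centered token list per target instance in `sentence`.

    Tokens are split on whitespace (an assumption); in each output exactly one
    target instance t' is replaced by the <target> symbol.
    """
    tokens = sentence.split()
    # Positions of the instances of t found in the sentence (T_s).
    positions = [k for k, tok in enumerate(tokens) if is_target(tok)]
    result = []
    for k in positions:
        centered = list(tokens)  # copy so each s_t' is independent
        centered[k] = TARGET
        result.append(centered)
    return result


if __name__ == "__main__":
    example = "there is a 2.5 x 4.1 mm nodule in the left upper lobe"
    for s_t in target_centered_sentences(example):
        print(" ".join(s_t))
```

On the running example sentence this yields exactly two target-centered sentences, one with “2.5” replaced and one with “4.1” replaced.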

As an example, let t again be the target type number and s the sentence “there is a 2.5 x 4.1 mm nodule in the left upper lobe”; then T_s = {2.5, 4.1}. Two target-centered sentences are then generated from the original s:

s_2.5 = “there is a <target> x 4.1 mm nodule in the left upper lobe”

s_4.1 = “there is a 2.5 x <target> mm nodule in the left upper lobe”

Next, replace instances of common, highly variable tokens (such as non-target numeric values) with generic symbols whenever their actual values are not important for the task at hand. Continuing our example, all numbers other than the target in s_2.5 are replaced with the generic symbol <number>, and the anatomy phrase is replaced with <anatomy>: “there is a <target> x <number> mm nodule in the <anatomy>”. This generalizes the sentences to increase flexibility and coverage.
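The generalization step can be sketched in Python as a single left-to-right pass that prefers the longest matching anatomy phrase and otherwise replaces number tokens. The regular expression, the tiny `ANATOMY_PHRASES` lexicon, and the function name `generalize` are hypothetical; a real system would draw anatomy phrases from a terminology resource.

```python
import re

# Hypothetical number matcher and anatomy lexicon; a real system would rely on
# a terminology resource rather than a hand-written list.
NUMBER_RE = re.compile(r"^\d+(?:\.\d+)?$")
ANATOMY_PHRASES = [("left", "upper", "lobe"), ("right", "lower", "lobe"), ("liver",)]


def generalize(tokens: list[str], anatomy=ANATOMY_PHRASES) -> list[str]:
    """Replace non-target numbers with <number> and anatomy phrases with <anatomy>."""
    # Skip empty phrases and try longer phrases first (longest match wins).
    phrases = sorted((p for p in anatomy if p), key=len, reverse=True)
    out: list[str] = []
    k = 0
    while k < len(tokens):
        for phrase in phrases:
            if tuple(tokens[k:k + len(phrase)]) == phrase:
                out.append("<anatomy>")
                k += len(phrase)
                break
        else:  # no anatomy phrase starts at position k
            tok = tokens[k]
            out.append("<number>" if NUMBER_RE.match(tok) else tok)
            k += 1
    return out


if __name__ == "__main__":
    s_25 = "there is a <target> x 4.1 mm nodule in the left upper lobe".split()
    print(" ".join(generalize(s_25)))
    # there is a <target> x <number> mm nodule in the <anatomy>
```

The <target> symbol passes through unchanged because it matches neither the number pattern nor any anatomy phrase.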

The set of all target-centered sentences S′ is formally defined as:

$$S' = \{\, s_{t'} \mid s_{t'} \text{ is generated from } s \in S \text{ and } t' \in T_s \text{, such that } t' \text{ has been replaced with } \texttt{<target>} \text{ within } s_{t'} \,\}$$

Pairwise Local Alignment

Given two sequences, a pairwise local alignment is the pair of subsequences, one from each sequence, that lines up the maximum number of tokens using the fewest operations (insertions, deletions, or substitutions). We use the Smith-Waterman local alignment algorithm with the following parameters:

Scoring matrix:

$$SM(a, b) = \begin{cases} 100.0, & \text{if } a = b = \texttt{<target>} \\ 1.0, & \text{if } a = b \neq \texttt{<target>} \\ 0.0, & \text{otherwise} \end{cases}$$

Gap penalty = -0.01

The scoring matrix is trivial in that it only takes into account exact matches between tokens. The only

special case is if both tokens are targets, then the score returned is much higher than other exact

matches. This is to force Smith-Waterman to always include the target in the subsequences of the

resulting alignment. The gap penalty was chosen to be a small negative number in order to give the

algorithm some freedom to insert a small number of gaps. An example of a pairwise alignment between

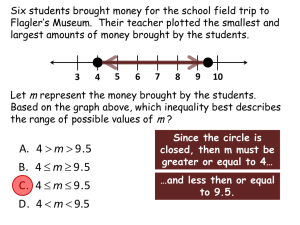

two target-centered sentences is shown in Figure 1 below:

Figure 1 – Example pairwise local alignment between two target-centered sentences.

The alignment is shown within the dotted rectangle, where the light-gray shaded tokens are aligned exactly between the two sentences (called the base tokens of the alignment), the dark-gray tokens are mismatches, and the tokens shown in white do not participate in the alignment. Notice that both targets (<target>) have been aligned as a result of the high match score built into the scoring matrix.
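The pairwise alignment step can be sketched in Python as a textbook Smith-Waterman dynamic program that uses the scoring matrix SM and the gap penalty of -0.01 given above. This is an illustrative sketch, not the original implementation: the gap symbol `-`, the tie-breaking order (stop, then diagonal, then gaps), and the name `sw_align` are assumptions. Because mismatches score 0.0, substitutions are neither rewarded nor penalized.

```python
TARGET = "<target>"
GAP = "-"            # assumed gap symbol
GAP_PENALTY = -0.01  # gap penalty from the text


def score(a: str, b: str) -> float:
    """Scoring matrix SM(a, b): exact matches only, with a large bonus for targets."""
    if a == b == TARGET:
        return 100.0
    if a == b:
        return 1.0
    return 0.0


def sw_align(s: list[str], t: list[str]) -> tuple[list[str], list[str], list[str]]:
    """Smith-Waterman local alignment of two token lists.

    Returns the two aligned subsequences (padded with GAP to equal length) and
    the base tokens, i.e. the exactly matched tokens in order.
    """
    n, m = len(s), len(t)
    H = [[0.0] * (m + 1) for _ in range(n + 1)]
    move = [[None] * (m + 1) for _ in range(n + 1)]  # traceback pointers
    best, best_pos = 0.0, (0, 0)
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            options = [
                (0.0, None),  # start a new local alignment here
                (H[i - 1][j - 1] + score(s[i - 1], t[j - 1]), "diag"),
                (H[i - 1][j] + GAP_PENALTY, "up"),    # gap in t
                (H[i][j - 1] + GAP_PENALTY, "left"),  # gap in s
            ]
            # max() keeps the first of equal scores: stop, then diag, then gaps.
            H[i][j], move[i][j] = max(options, key=lambda o: o[0])
            if H[i][j] > best:
                best, best_pos = H[i][j], (i, j)

    # Trace back from the best-scoring cell until a cell that starts fresh.
    row_s, row_t = [], []
    i, j = best_pos
    while move[i][j] is not None:
        step = move[i][j]
        if step == "diag":
            row_s.append(s[i - 1])
            row_t.append(t[j - 1])
            i, j = i - 1, j - 1
        elif step == "up":
            row_s.append(s[i - 1])
            row_t.append(GAP)
            i -= 1
        else:
            row_s.append(GAP)
            row_t.append(t[j - 1])
            j -= 1
    row_s.reverse()
    row_t.reverse()
    base = [a for a, b in zip(row_s, row_t) if a == b and a != GAP]
    return row_s, row_t, base


if __name__ == "__main__":
    s = "there is a mass in the <target>".split()
    t = "found a large mass in the <target> region".split()
    for row in sw_align(s, t):
        print(row)
```

For these two hypothetical sentences the sketch aligns “a mass in the <target>” with “a large mass in the <target>”, paying one 0.01 gap opposite “large” rather than mismatching the remaining tokens, so the base tokens are {a, mass, in, the, <target>}.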

Generating MSAs

The MSA generation algorithm pairwise aligns every target-centered sentence in S′ with every other sentence in S′ using the process described in the previous section. The subsequences of each alignment are then added to an appropriate MSA if one exists, or are used as the basis for a newly created MSA.

Determining which MSA the results of a pairwise local alignment should be added to depends on matching the base tokens of the pairwise alignment with those of the MSA. For instance, the base tokens for the alignment in Figure 1 are the ordered list {a, mass, in, the, <target>}. In this case, the two subsequences of the pairwise alignment will be added to an existing MSA that has the same base tokens, if one is found. Otherwise, they will be used as the initial rows of a newly created MSA. Adding a row to an existing MSA involves inserting gaps into either the row being added or the rows already in the MSA in order to line up the base tokens. Figure 2 shows an MSA with the same base tokens as the alignment in Figure 1.

Figure 2 – An example MSA. The areas of variation and stability are clearly visible, as well as the base tokens ({a, mass, in, the, <target>}).
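The gap-insertion rule for adding a row can be sketched in Python as follows. The `MSA` class, the `add_row` name, the `-` gap symbol, and the convention that each row arrives with the positions of its base tokens are assumptions for illustration. For every segment between consecutive base tokens, whichever side (row or MSA) has fewer tokens is padded with gaps right after the left base token. Equalizing the segments before the first and after the last base token in the same way is an extension not described in the text.

```python
GAP = "-"  # assumed gap symbol


class MSA:
    """Minimal MSA: equal-length rows plus the column index of each base token."""

    def __init__(self):
        self.rows: list[list[str]] = []
        self.base_cols: list[int] = []


def add_row(msa: MSA, row: list[str], base_pos: list[int]) -> None:
    """Add `row` to `msa`, inserting gaps so that base tokens share columns.

    `base_pos` holds the index of each base token within `row`; it must list
    the same base tokens, in the same order, as the MSA.
    """
    row = list(row)
    if not msa.rows:  # a new MSA simply takes the first row as-is
        msa.rows.append(row)
        msa.base_cols = list(base_pos)
        return
    if len(base_pos) != len(msa.base_cols):
        raise ValueError("row and MSA must share the same base tokens")
    # Virtual boundaries at -1 and at the end (an assumption) so the segments
    # before the first and after the last base token are handled the same way.
    cols = [-1] + msa.base_cols + [len(msa.rows[0])]
    pos = [-1] + list(base_pos) + [len(row)]
    for k in range(len(cols) - 1):
        ci = cols[k + 1] - cols[k] - 1  # tokens between base tokens in the MSA
        cj = pos[k + 1] - pos[k] - 1    # tokens between base tokens in the row
        if ci > cj:  # the row is shorter here: pad the row
            d = ci - cj
            row[pos[k] + 1:pos[k] + 1] = [GAP] * d
            pos[k + 1:] = [p + d for p in pos[k + 1:]]
        elif cj > ci:  # the MSA is shorter here: pad every existing row
            d = cj - ci
            for r in msa.rows:
                r[cols[k] + 1:cols[k] + 1] = [GAP] * d
            cols[k + 1:] = [c + d for c in cols[k + 1:]]
    msa.rows.append(row)
    msa.base_cols = cols[1:-1]


if __name__ == "__main__":
    msa = MSA()
    # Rows of one pairwise alignment, then a third row with an extra token.
    add_row(msa, ["a", "-", "mass", "in", "the", "<target>"], [0, 2, 3, 4, 5])
    add_row(msa, ["a", "large", "mass", "in", "the", "<target>"], [0, 2, 3, 4, 5])
    add_row(msa, ["a", "mass", "in", "the", "left", "<target>"], [0, 1, 2, 3, 5])
    for r in msa.rows:
        print(" ".join(r))
```

In the usage example the third row is padded after “a” (the MSA has one column there), while every existing row receives a gap column before <target> to make room for “left”.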

The following is pseudo-code for the MSA generation algorithm, where M is the set of all MSAs that have been generated and is the output of the algorithm. Pseudo-code is provided for the supporting methods, except for those whose semantics are straightforward or that are detailed in other sources (e.g., the Smith-Waterman algorithm).

    //generate MSAs from a set of target-centered sentences S′
    gen-msas(S′)
      M = {}
      for each pair of sentences (s_i′, s_j′) in S′ where i < j
        a_ij = sw-align(s_i′, s_j′)
        m = find-msa(base-tokens(a_ij))
        if (m == null)
          m = create-new-msa()
          M = M ∪ {m}
        for each sequence a_k in a_ij
          add-row(m, a_k)
      return M
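The outer loop of gen-msas can be sketched in Python with a dictionary keyed by the base-token tuple, which plays the role of find-msa and create-new-msa in a single lookup. The aligner is passed in as a parameter; `toy_align` is a hypothetical stand-in that only compares tokens at equal positions and is not Smith-Waterman. To keep the sketch short, rows are stored as the aligner returns them, without the gap insertion performed by add-row.

```python
from itertools import combinations
from typing import Callable

# An aligner maps two token lists to (row_i, row_j, base_tokens).
Aligner = Callable[[list[str], list[str]], tuple[list[str], list[str], list[str]]]


def gen_msas(sentences: list[list[str]], align: Aligner) -> dict[tuple[str, ...], list[list[str]]]:
    """Align every pair (i < j) and file both rows under their base-token tuple.

    The dict lookup replaces find-msa/create-new-msa. Rows are stored as the
    aligner returns them; the gap insertion of add-row is omitted here.
    """
    msas: dict[tuple[str, ...], list[list[str]]] = {}
    for s_i, s_j in combinations(sentences, 2):  # n * (n - 1) / 2 pairs
        row_i, row_j, base = align(s_i, s_j)
        rows = msas.setdefault(tuple(base), [])  # existing MSA, or a new empty one
        rows.extend([row_i, row_j])
    return msas


def toy_align(a: list[str], b: list[str]) -> tuple[list[str], list[str], list[str]]:
    """Hypothetical stand-in for Smith-Waterman: matches tokens at equal positions only."""
    n = min(len(a), len(b))
    base = [x for x, y in zip(a[:n], b[:n]) if x == y]
    return list(a[:n]), list(b[:n]), base


if __name__ == "__main__":
    sents = [s.split() for s in (
        "a mass in the <target>",
        "a mass in the <target>",
        "a nodule in the <target>",
    )]
    for key, rows in gen_msas(sents, toy_align).items():
        print(key, len(rows))
```

Because find-msa only tests base-token lists for equality, hashing them yields the same grouping as a linear scan over M while avoiding a pass over every MSA for each of the quadratically many sentence pairs.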

    //Smith-Waterman alignment between sequences s_i and s_j
    //returns an alignment a_ij = (a_i, a_j), where a_i is a subsequence of s_i and a_j is a subsequence of s_j
    sw-align(s_i, s_j)

    //locate an MSA in M with base tokens b
    find-msa(b)
      for each m in M
        b_m = base-tokens(m)
        if (b == b_m)
          return m
      return null

    //add sequence s to MSA m
    //the number of tokens between every pair of consecutive base tokens is counted for both m and s
    //if m has fewer tokens, then gaps are inserted into m between the two base tokens until
    //the number of tokens is equal to the number of tokens between s's corresponding base tokens
    //likewise, gaps are inserted into s if s has fewer tokens than m
    add-row(m, s)
      if (m is empty)
        m = m ∪ {s}
        return
      b_m = base-tokens(m)
      b_s = base-tokens(s)
      for k from 0 to length(b_m)-2
        i1 = index-msa(m, b_m[k])
        i2 = index-msa(m, b_m[k+1])
        j1 = index(s, b_s[k])
        j2 = index(s, b_s[k+1])
        c_i = count-tokens(m, i1, i2)
        c_j = count-tokens(s, j1, j2)
        if (c_i > c_j)
          insert-gaps(s, j1+1, c_i-c_j)
        else if (c_j > c_i)
          insert-gaps-msa(m, i1+1, c_j-c_i)
      m = m ∪ {s}

    //insert n gaps into a sequence s at index i
    insert-gaps(s, i, n)

    //insert n gaps into all rows of an MSA m at index i
    insert-gaps-msa(m, i, n)

    //count the number of tokens (or MSA columns) in s strictly between indexes i and j
    count-tokens(s, i, j)

    //get the base tokens of a sequence, alignment, or MSA s
    base-tokens(s)

    //get the index of a token t within a sequence s
    index(s, t)

    //get the index of a token t within an MSA m
    index-msa(m, t)

Baseline Methods

Two baseline methods were used for comparison with our MSA-based pattern generation technique. The first, Bwin, uses token windows of varying size (1 to 11 tokens) on both sides of the target to generate lexical patterns. A pattern is generated for every combination of left and right window sizes. The pseudo-code for Bwin is shown below, where S′ is again the set of target-centered sentences defined in the Preprocessing section:

    //generate patterns by varying the window of tokens around the target
    //P is the final set of patterns
    b-win(S′)
      P = {}
      for each s′ ∈ S′
        k_t = target-index(s′)
        for i from 1 to 11
          for j from 1 to 11
            k1 = max(0, k_t - i)
            k2 = min(length(s′), k_t + j + 1)
            p = subsequence(s′, k1, k2)   //tokens k1 through k2-1
            P = P ∪ {p}
      return P

    //get index of target symbol within sequence s
    target-index(s)
      for i from 0 to length(s)-1
        if (s[i] == <target>)
          return i
      return -1
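A Python sketch of Bwin follows. Window sizes run from 1 to 11 tokens on each side, as in the text; clipping windows at sentence boundaries, representing patterns as token tuples, and the names `b_win` and `target_index` are assumptions. When the target has at least 11 tokens on each side, each sentence contributes 11 x 11 = 121 distinct patterns.

```python
TARGET = "<target>"
MAX_WINDOW = 11  # largest window on each side, from the text


def target_index(s: list[str]) -> int:
    """Index of the <target> symbol in s, or -1 if absent."""
    for i, tok in enumerate(s):
        if tok == TARGET:
            return i
    return -1


def b_win(sentences: list[list[str]], max_window: int = MAX_WINDOW) -> set[tuple[str, ...]]:
    """Window baseline: every (left, right) window of 1..max_window tokens around the target.

    Windows are clipped at sentence boundaries (an assumption), so windows that
    run past either end collapse into the same pattern within the set.
    """
    patterns: set[tuple[str, ...]] = set()
    for s in sentences:
        kt = target_index(s)
        if kt < 0:
            continue  # no target in this sentence
        for i in range(1, max_window + 1):      # tokens to the left
            for j in range(1, max_window + 1):  # tokens to the right
                k1 = max(0, kt - i)
                k2 = min(len(s), kt + j + 1)
                patterns.add(tuple(s[k1:k2]))
    return patterns


if __name__ == "__main__":
    s = "there is a <target> x <number> mm nodule in the <anatomy>".split()
    print(len(b_win([s])))  # 3 distinct left edges x 7 distinct right edges = 21
```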

Bdist is a simple relation extractor that links each target to the concept instance separated from it by the fewest words. For instance, to extract the relation between a tumor size and an anatomy location, Bdist chooses the anatomy location phrase closest to the tumor size. The pseudo-code for Bdist is as follows:

    //extract relations between targets and instances of concept c
    b-dist(s, c)
      C = find-concepts(s, c)
      i_t = target-index(s)
      d_min = max-integer
      c_min = null
      for each c_i in C
        i_c = index(s, c_i)
        if (d_min > abs(i_c - i_t))
          d_min = abs(i_c - i_t)
          c_min = c_i
      return c_min

    //find the concepts of type c within sequence s
    find-concepts(s, c)
      C = {}
      for each s_i in s
        if (instance-of(s_i) == c)
          C = C ∪ {s_i}
      return C
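Bdist can be sketched in Python as below. The dictionary `CONCEPTS` is a hypothetical single-token lexicon standing in for instance-of; real anatomy phrases span several tokens and would need phrase matching. Distances are computed from token positions, which avoids ambiguity when the same word occurs twice, and ties go to the earlier instance, matching the strict comparison in the pseudo-code.

```python
from typing import Optional

TARGET = "<target>"
# Hypothetical single-token concept lexicon standing in for instance-of().
CONCEPTS = {"liver": "anatomy", "kidney": "anatomy", "lobe": "anatomy"}


def b_dist(s: list[str], c: str, lexicon: dict[str, str] = CONCEPTS) -> Optional[str]:
    """Return the instance of concept type c nearest (in tokens) to <target>, or None."""
    if TARGET not in s:
        return None
    i_t = s.index(TARGET)
    c_min, d_min = None, None
    for i_c, tok in enumerate(s):  # scanning positions covers find-concepts and index
        if lexicon.get(tok) != c:
            continue
        d = abs(i_c - i_t)
        if d_min is None or d < d_min:  # strict: earlier instance wins ties
            c_min, d_min = tok, d
    return c_min


if __name__ == "__main__":
    print(b_dist("a <target> mm nodule in the liver".split(), "anatomy"))
    print(b_dist("kidney cyst and a <target> mm lesion in the liver".split(), "anatomy"))
```

The second call returns “kidney” because it is one token closer to the target, although the measured lesion is described as being in the liver; this is the kind of proximity error that distinguishes the baseline from pattern-based extraction.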