Example #1 – Word Document

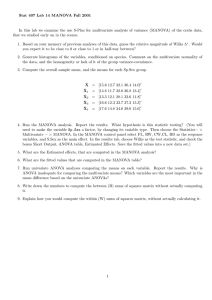

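PRE 905: Spring 2014 – Lecture 9, Example 1

Examples of Adding Predictors to Multivariate Models
Uses of the gls() Function with the factor() Function
Comparisons of Multivariate Models with Classical MANOVA

The data for this example come from http://www.ats.ucla.edu/stat/sas/library/repeated_ut.htm

R Syntax for Data Manipulation – Converting from Wide Format to Long/Stacked Format:

Resulting R Data Set (personID = 1 and personID = 18 shown)

R's gls() function is modeling the vector of outcomes for each person, where $y$ is the pulse rate:

$$\mathbf{y}_p = \begin{bmatrix} y_{p1} \\ y_{p2} \\ y_{p3} \end{bmatrix}$$

For personID = 1 this would be:

$$\mathbf{y}_1 = \begin{bmatrix} 112 \\ 166 \\ 215 \end{bmatrix}$$

Empty multivariate model predicting the mean pulse rate for each intensity level:

Multivariate model becomes (simultaneously):

$$\mathbf{y}_p = \mathbf{X}_p \boldsymbol{\beta} + \mathbf{e}_p$$

$$\mathbf{y}_p = \begin{bmatrix} y_{p1} \\ y_{p2} \\ y_{p3} \end{bmatrix}; \quad
\mathbf{X}_p = \begin{bmatrix} 1 & 0 & 0 \\ 1 & 1 & 0 \\ 1 & 0 & 1 \end{bmatrix}; \quad
\boldsymbol{\beta} = \begin{bmatrix} \beta_0 \\ \beta_{I2} \\ \beta_{I3} \end{bmatrix}; \quad
\mathbf{e}_p = \begin{bmatrix} e_{p1} \\ e_{p2} \\ e_{p3} \end{bmatrix}$$

Where $\mathbf{e}_p \sim N_3(\mathbf{0}, \mathbf{R})$, and

$$\mathbf{R} = \begin{bmatrix}
\sigma^2_{e_1} & \sigma_{e_1,e_2} & \sigma_{e_1,e_3} \\
\sigma_{e_1,e_2} & \sigma^2_{e_2} & \sigma_{e_2,e_3} \\
\sigma_{e_1,e_3} & \sigma_{e_2,e_3} & \sigma^2_{e_3}
\end{bmatrix}$$

(the unstructured error covariance matrix model).

Which leads to:

$$\begin{aligned}
y_{p1} &= \beta_0 + e_{p1} \\
y_{p2} &= \beta_0 + \beta_{I2} + e_{p2} \\
y_{p3} &= \beta_0 + \beta_{I3} + e_{p3}
\end{aligned}$$

Interpret each effect…

Intercept:
observationF2:
observationF3:

R Matrix Estimate

Adding a Predictor to a Multivariate Model

We will add diet (vegetarian or meat eater) as a predictor to the empty model:

Multivariate model becomes:

$$\mathbf{y}_p = \mathbf{X}_p \boldsymbol{\beta} + \mathbf{e}_p$$

In both diet groups the outcome, coefficient, and error vectors are:

$$\mathbf{y}_p = \begin{bmatrix} y_{p1} \\ y_{p2} \\ y_{p3} \end{bmatrix}; \quad
\boldsymbol{\beta} = \begin{bmatrix} \beta_0 \\ \beta_{I2} \\ \beta_{I3} \\ \beta_V \\ \beta_{V*I2} \\ \beta_{V*I3} \end{bmatrix}; \quad
\mathbf{e}_p = \begin{bmatrix} e_{p1} \\ e_{p2} \\ e_{p3} \end{bmatrix}$$

For meat eaters:

$$\mathbf{X}_p = \begin{bmatrix}
1 & 0 & 0 & 0 & 0 & 0 \\
1 & 1 & 0 & 0 & 0 & 0 \\
1 & 0 & 1 & 0 & 0 & 0
\end{bmatrix}$$

For vegetarians:

$$\mathbf{X}_p = \begin{bmatrix}
1 & 0 & 0 & 1 & 0 & 0 \\
1 & 1 & 0 & 1 & 1 & 0 \\
1 & 0 & 1 & 1 & 0 & 1
\end{bmatrix}$$

Where $\mathbf{e}_p \sim N_3(\mathbf{0}, \mathbf{R})$, and $\mathbf{R}$ has the same unstructured form as in the empty model (the unstructured error covariance matrix model).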
This leads to the following simultaneous linear models:

For meat eaters:

$$\begin{aligned}
y_{p1} &= \beta_0 + e_{p1} \\
y_{p2} &= \beta_0 + \beta_{I2} + e_{p2} \\
y_{p3} &= \beta_0 + \beta_{I3} + e_{p3}
\end{aligned}$$

For vegetarians:

$$\begin{aligned}
y_{p1} &= \beta_0 + \beta_V + e_{p1} \\
y_{p2} &= \beta_0 + \beta_{I2} + \beta_V + \beta_{V*I2} + e_{p2} \\
y_{p3} &= \beta_0 + \beta_{I3} + \beta_V + \beta_{V*I3} + e_{p3}
\end{aligned}$$

The $\mathbf{R}$ error covariance matrix has changed by adding the predictor. This is analogous to what happens in a univariate general linear model: the predictor shrinks the error variance.

For intensity #1 (pulse after warm up):
Empty model $\sigma^2_{e_1} = 279.9$; after adding DIET as a predictor: $\sigma^2_{e_1} = 188.6$
Therefore $R^2_{I1} = \dfrac{279.9 - 188.6}{279.9} = 0.326$

For intensity #2 (pulse after jogging):
Empty model $\sigma^2_{e_2} = 473.1$; after adding DIET as a predictor: $\sigma^2_{e_2} = 328.4$
Therefore $R^2_{I2} = \dfrac{473.1 - 328.4}{473.1} = 0.306$

For intensity #3 (pulse after running):
Empty model $\sigma^2_{e_3} = 770.0$; after adding DIET as a predictor: $\sigma^2_{e_3} = 530.1$
Therefore $R^2_{I3} = \dfrac{770.0 - 530.1}{770.0} = 0.312$

These $R^2$ values reflect the proportion of variance accounted for marginally for each variable. What about the covariances? Notice that adding a predictor reduces those, too. What is needed is a joint description of how the combined variation of all three variables is reduced by the predictor. To get that, we can use the generalized sample variance (the determinant of $\mathbf{R}$).

For all three variables jointly:
Empty model generalized variance: $|\mathbf{R}| = 1{,}675{,}026$; after adding DIET as a predictor: $|\mathbf{R}| = 1{,}224{,}462$
Therefore the overall $R^2$ is:

$$R^2 = \frac{1{,}675{,}026 - 1{,}224{,}462}{1{,}675{,}026} = 0.269$$

This value is less than each of the marginal $R^2$ values because of the contributions of the covariances.

Because we are using residual maximum likelihood (REML) as our estimator, we can use a multivariate Wald test to determine if DIET significantly improved model fit. This is essentially testing whether the multivariate $R^2$ change is zero.
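As a check on the variance-reduction arithmetic in this section, here is a minimal Python sketch that recomputes the marginal and overall $R^2$ values from the error variances and determinants quoted in the handout. The variable and function names are illustrative assumptions; only the numbers come from the handout.

```python
# Error variances and generalized variances (|R|) quoted in the handout.
empty_var = {"I1": 279.9, "I2": 473.1, "I3": 770.0}
diet_var = {"I1": 188.6, "I2": 328.4, "I3": 530.1}
empty_det, diet_det = 1_675_026, 1_224_462


def proportion_reduced(before, after):
    """Proportional reduction in a (generalized) variance: (before - after) / before."""
    return (before - after) / before


# Marginal R^2 for each intensity level, rounded as in the handout.
marginal_r2 = {
    key: round(proportion_reduced(empty_var[key], diet_var[key]), 3)
    for key in empty_var
}

# Overall R^2 from the determinants of the two R matrices.
overall_r2 = round(proportion_reduced(empty_det, diet_det), 3)

print(marginal_r2)
print(overall_r2)
```

The same helper serves both cases because the marginal and the joint measure share one form: a proportional reduction relative to the empty model.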
More formally, we are testing the hypothesis:

$$H_0: \beta_V = \beta_{V*I2} = \beta_{V*I3} = 0$$
$$H_A: \text{at least one not equal to zero}$$

We can conclude that DIET significantly improved the fit of the multivariate model. What that does not tell us is whether it improved the fit for each dependent variable marginally.

Interpret each effect…

Intercept:
observationF2:
observationF3:
dietF2:
observationF2:dietF2:
observationF3:dietF2:

What we cannot find from our direct model output are some key hypothesis tests that show up for this type of analysis:

• Within subjects main effect of intensity (do mean pulse rates differ when pulse is taken after different intensities?)
  o Should be 2 degrees of freedom (3 intensity levels - 1)
• Between subjects main effect of diet type (do mean pulse rates differ for vegetarians and meat eaters?)
  o Should be 1 degree of freedom (2 diet types - 1)
• Interaction of within subjects intensity and between subjects diet type (are there differences in pulse rate for varying combinations of intensity and diet?)
  o Should be 2 degrees of freedom: (3 intensity levels - 1) * (2 diet types - 1)

Comparison of PROC MIXED Multivariate Analyses with Classical Multivariate Analysis of Variance (MANOVA)

A semi-frequent request when running multivariate analyses is for some type of “MANOVA” test. Usually, this is because the person requesting the classical tests was trained in a customary multivariate analysis course. This section of the handout describes MANOVA and shows how what we have just done can provide a MANOVA-style test if you are ever asked for one.

We will begin our discussion of MANOVA by starting with the univariate ANOVA (a one-way ANOVA with one categorical IV). In univariate ANOVA, we develop the hypothesis test of group differences in the group mean of the outcome using the concept of sums of squares.
$$H_0: \mu_1 = \mu_2 = \cdots = \mu_G$$
$$H_A: \text{at least one } \mu \text{ not equal to the others}$$

The sums of squares between groups comes from the sum of squared differences of each group mean $\bar{y}_g$ from the overall grand mean $\bar{y}$ (where $N_g$ is the within-group sample size for group $g$):

$$SS_B = \sum_{g=1}^{G} N_g \left(\bar{y}_g - \bar{y}\right)^2$$

The sums of squares within groups (here referred to as the sums of squares for error) comes from the squared difference of each person’s outcome and their group’s mean:

$$SS_E = \sum_{g=1}^{G} \sum_{n_g=1}^{N_g} \left(y_p - \bar{y}_g\right)^2$$

The F-test is the ratio of these two terms, each divided by their respective degrees of freedom:

$$F = \frac{SS_B / df_B}{SS_E / df_E}$$

A MANOVA extends a univariate ANOVA to test hypotheses about mean vectors across categorical independent variables. The hypothesis test is now:

$$H_0: \boldsymbol{\mu}_1 = \boldsymbol{\mu}_2 = \cdots = \boldsymbol{\mu}_G$$
$$H_A: \text{at least one } \boldsymbol{\mu} \text{ not equal to the others}$$

Where

$$\boldsymbol{\mu}_g = \begin{bmatrix} \mu_{g1} \\ \mu_{g2} \\ \vdots \\ \mu_{gV} \end{bmatrix}$$

for $V$ observed outcome variables (i.e., a multivariate analysis). The same concepts of univariate ANOVA still apply, just with vectors and matrices instead of individual means.
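The univariate sums of squares and F-ratio defined above can be sketched in a few lines of Python. The data below are hypothetical (they are not the pulse data), and the degrees of freedom use the standard one-way ANOVA values $df_B = G - 1$ and $df_E = N - G$, which the handout does not spell out.

```python
from statistics import mean

# Hypothetical outcomes for G = 2 groups (not the handout's data).
groups = {
    "g1": [4.0, 5.0, 6.0],
    "g2": [7.0, 8.0, 9.0],
}

all_values = [y for ys in groups.values() for y in ys]
grand_mean = mean(all_values)

# SS_B: group size times the squared distance of each group mean from the grand mean.
ss_between = sum(len(ys) * (mean(ys) - grand_mean) ** 2 for ys in groups.values())

# SS_E: squared distance of each person's outcome from their own group mean.
ss_error = sum((y - mean(ys)) ** 2 for ys in groups.values() for y in ys)

# Standard one-way ANOVA degrees of freedom (assumed, not stated in the handout).
df_between = len(groups) - 1
df_error = len(all_values) - len(groups)

f_ratio = (ss_between / df_between) / (ss_error / df_error)
print(ss_between, ss_error, f_ratio)
```

With these toy values the group means are 5 and 8 and the grand mean is 6.5, so $SS_B = 13.5$, $SS_E = 4$, and $F = 13.5$.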
The matrix analog to the sums of squares between groups is the Between Groups Sums of Squares and Cross Products matrix (in SAS’ notation $\mathbf{H}$, which stands for Hypothesis). It is formed using each group’s mean vector $\bar{\mathbf{y}}_g$ (size $V \times 1$) and the overall grand mean vector $\bar{\mathbf{y}}$ (also size $V \times 1$):

$$\mathbf{H}_{(V \times V)} = \sum_{g=1}^{G} N_g \left(\bar{\mathbf{y}}_g - \bar{\mathbf{y}}\right)\left(\bar{\mathbf{y}}_g - \bar{\mathbf{y}}\right)^T$$

The matrix analog to the sums of squares within groups (here referred to as the sums of squares for error) is the Error Sums of Squares and Cross Products matrix, which comes from comparing each person’s vector of outcomes $\mathbf{y}_p$ with their group mean vector $\bar{\mathbf{y}}_g$:

$$\mathbf{E}_{(V \times V)} = \sum_{g=1}^{G} \sum_{n_g=1}^{N_g} \left(\mathbf{y}_p - \bar{\mathbf{y}}_g\right)\left(\mathbf{y}_p - \bar{\mathbf{y}}_g\right)^T$$

Note: for our multivariate models using maximum likelihood and an unstructured $\mathbf{R}$ matrix, $\mathbf{R} = \dfrac{\mathbf{E}}{N}$.

Now, instead of forming an F-ratio, we can form a matrix that incorporates this ratio:

$$\mathbf{F} = \mathbf{E}^{-1}\mathbf{H}$$

Where MANOVA differs from univariate ANOVA is that there is no one best test statistic for summarizing $\mathbf{F}$. Instead, four popular statistics are formed:

• Wilks’ Lambda $= \dfrac{\det(\mathbf{E})}{\det(\mathbf{H} + \mathbf{E})}$
• Pillai’s trace $= \mathrm{trace}\left(\mathbf{H}(\mathbf{H} + \mathbf{E})^{-1}\right)$
• Hotelling-Lawley trace $= \mathrm{trace}\left(\mathbf{E}^{-1}\mathbf{H}\right)$
• Roy’s greatest (largest) root $=$ largest eigenvalue of $\mathbf{E}^{-1}\mathbf{H}$

(We will show how to get Wilks’ Lambda using a modification of a likelihood ratio test.)

What we seek to say is this: even though these statistics don’t directly come out of PROC MIXED, you can still obtain (at least one of) them. Therefore, the analysis we have done subsumes classical MANOVA (or Multivariate Regression) into a more general framework for investigating multivariate hypotheses.

We will now compare a MANOVA with PROC GLM and show how we can achieve the same result from PROC MIXED. The four MANOVA test statistics are shown in the output below.
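Separately from that PROC GLM output, the definitions of $\mathbf{H}$, $\mathbf{E}$, and the four statistics can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the data are hypothetical (two groups, $V = 2$ outcomes), and the helper functions are hand-written 2 x 2 matrix routines rather than anything from SAS or R.

```python
from math import sqrt

# Hypothetical data: two groups, V = 2 outcomes per person (not the handout's data).
groups = [
    [(1.0, 2.0), (2.0, 1.0), (3.0, 3.0)],
    [(4.0, 5.0), (5.0, 4.0), (6.0, 6.0)],
]


def mean_vector(rows):
    return [sum(r[j] for r in rows) / len(rows) for j in range(2)]


def outer(d):
    return [[d[i] * d[j] for j in range(2)] for i in range(2)]


def add(a, b):
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]


def scale(c, a):
    return [[c * a[i][j] for j in range(2)] for i in range(2)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]


def inverse(a):
    d = det(a)
    return [[a[1][1] / d, -a[0][1] / d], [-a[1][0] / d, a[0][0] / d]]


def trace(a):
    return a[0][0] + a[1][1]


grand = mean_vector([row for g in groups for row in g])
H = [[0.0, 0.0], [0.0, 0.0]]  # between-groups SSCP matrix
E = [[0.0, 0.0], [0.0, 0.0]]  # error (within-groups) SSCP matrix
for g in groups:
    gm = mean_vector(g)
    H = add(H, scale(len(g), outer([gm[j] - grand[j] for j in range(2)])))
    for row in g:
        E = add(E, outer([row[j] - gm[j] for j in range(2)]))

EinvH = matmul(inverse(E), H)
wilks = det(E) / det(add(H, E))
pillai = trace(matmul(H, inverse(add(H, E))))
hotelling_lawley = trace(EinvH)
# Largest eigenvalue of a 2 x 2 matrix from its trace and determinant.
disc = max(trace(EinvH) ** 2 - 4 * det(EinvH), 0.0)
roy = (trace(EinvH) + sqrt(disc)) / 2

print(wilks, pillai, hotelling_lawley, roy)
```

Because there are only two groups in this toy example, $\mathbf{E}^{-1}\mathbf{H}$ has a single nonzero eigenvalue, so Roy's root equals the Hotelling-Lawley trace. With more groups the four statistics generally differ.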
We can obtain Wilks’ Lambda from a model comparison of our previous two multivariate models in PROC MIXED:

Empty Model: $-2LL_{EMPTY} = -2 \times (-198.5) = 397.0$
DIET Model: $-2LL_{DIET} = -2 \times (-186.8) = 373.6$

$$\text{Wilks' Lambda} = \Lambda = \exp\left(\frac{-\left(-2LL_{EMPTY} - \left(-2LL_{DIET}\right)\right)}{N}\right) = \exp\left(\frac{-(397.0 - 373.6)}{18}\right) = 0.273$$
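The calculation above can be reproduced with a short Python sketch. The log-likelihoods and $N = 18$ are the values quoted in the handout; the variable names are illustrative assumptions.

```python
from math import exp

# Log-likelihoods quoted in the handout for the two multivariate models.
ll_empty = -198.5
ll_diet = -186.8
n_persons = 18  # N in the handout's formula

# Convert each log-likelihood to the -2LL (deviance) scale.
neg2ll_empty = -2 * ll_empty
neg2ll_diet = -2 * ll_diet

# Wilks' Lambda from the deviance difference, following the handout's formula.
wilks_lambda = exp(-(neg2ll_empty - neg2ll_diet) / n_persons)
print(round(wilks_lambda, 3))
```

The deviance difference is 23.4, so the exponent is $-23.4 / 18 = -1.3$, and the result rounds to the handout's 0.273.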