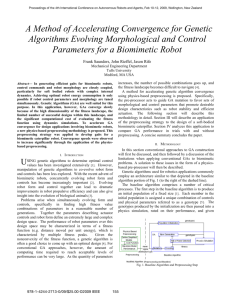

Horizons of evolutionary robotics

Context & Challenges for ER:

ER is a field of research that applies evolutionary computation techniques

to evolve a robot’s overall design, its controllers, or both

ER uses evolutionary computing techniques to automatically develop

some or all of the following properties of a robot:

o Controller

o Body

o Sensor & motor properties

o Layout

Populations of artificial genomes encode properties of autonomous

robots required to carry out a particular task or to exhibit some set of

behaviors

o Genomes are mutated (mutation) & interbred (crossover) to

create new generations of robots (Darwinian scheme – fittest

individuals are more likely to produce offspring)

o Fitness is measured to tell how good a robot is according to some

evaluation criteria

Fitness is usually measured automatically, but can also be

based on the experimenter’s judgement
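The genetic operators described above can be sketched in a few lines. This is a minimal illustration, assuming a genome is a flat list of floats; the function names, the Gaussian mutation, the one-point crossover, and the tournament selection are illustrative choices, not something the notes prescribe.

```python
import random

def mutate(genome, rate=0.1, sigma=0.2, rng=random):
    """Return a copy of the genome with Gaussian noise added to some genes."""
    return [g + rng.gauss(0.0, sigma) if rng.random() < rate else g
            for g in genome]

def crossover(parent_a, parent_b, rng=random):
    """One-point crossover: head of parent_a joined to tail of parent_b."""
    point = rng.randint(1, len(parent_a) - 1)  # needs at least 2 genes
    return parent_a[:point] + parent_b[point:]

def select_parent(population, fitnesses, k=3, rng=random):
    """Tournament selection: the fittest of k randomly drawn individuals wins,
    so fitter individuals are more likely (not guaranteed) to reproduce."""
    contenders = rng.sample(range(len(population)), k)
    best = max(contenders, key=lambda i: fitnesses[i])
    return population[best]
```

Tournament selection is used here only because it is short to write; any scheme that biases reproduction toward fitter individuals fits the Darwinian description.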

Applications of ER:

o Entertainment industries

o Exploration & navigation

o Technology in solving environmental issues

o Robotics (robustness & autonomy are fundamental requirements)

Alan Turing suggested that intelligent machines should be adaptive: they should learn and develop, because programming them by hand is infeasible. Instead, principles of biological evolution should be applied

Brooks and others demonstrated methodologies and techniques to evolve

(not design) control systems

Key elements of ER approach:

o Method to measure fitness of a robot’s behavior generated from

genomes

o Method to apply selection and genetic operators to produce next

generation from current one

1. Create initial population of genotypes & evaluate fitness

2. Select parents & breed

3. Create mutated offspring

4. Evaluate offspring

5. Replace members of population

6. Return to step 2
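The numbered steps above can be sketched as a small loop. This is a minimal sketch under stated assumptions: genomes are lists of floats, `fitness` is any caller-supplied function that scores a genome (in real ER it would run the robot or a simulation), and the steady-state "replace the worst member" policy is one possible replacement scheme among many. All names and parameter values are hypothetical.

```python
import random

def evolve(fitness, genome_len=8, pop_size=20, generations=50, seed=0):
    rng = random.Random(seed)
    # 1. Create the initial population of genotypes and evaluate fitness.
    pop = [[rng.uniform(-1, 1) for _ in range(genome_len)]
           for _ in range(pop_size)]
    fits = [fitness(g) for g in pop]

    def pick():
        # Tournament of 3: fitter individuals are more likely to be chosen.
        i = max(rng.sample(range(pop_size), 3), key=lambda j: fits[j])
        return pop[i]

    for _ in range(generations):  # 6. Return to step 2.
        # 2. Select parents and breed (one-point crossover).
        a, b = pick(), pick()
        cut = rng.randint(1, genome_len - 1)
        child = a[:cut] + b[cut:]
        # 3. Create mutated offspring.
        child = [g + rng.gauss(0, 0.1) if rng.random() < 0.2 else g
                 for g in child]
        # 4. Evaluate offspring.
        child_fit = fitness(child)
        # 5. Replace a member of the population (here: the worst, if beaten).
        worst = min(range(pop_size), key=lambda j: fits[j])
        if child_fit > fits[worst]:
            pop[worst], fits[worst] = child, child_fit

    best = max(range(pop_size), key=lambda j: fits[j])
    return pop[best], fits[best]
```

For example, `evolve(lambda g: -sum(x * x for x in g))` searches for a genome close to all zeros; the toy fitness stands in for a behavioral measurement.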

Genetic encoding:

o Many parts of robot design (also controller) can be evolved

o The most popular controller is an artificial neural network (ANN), feedforward or recurrent

Properties of the ANN (e.g. thresholds/biases) are encoded as fixed-length values

These properties of the ANN are evolved

o Evolutionary algorithms used to manipulate genetic encoding

vary:

Simple GAs

More sophisticated GAs

Specific GAs for evolving robot controllers
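To make the encoding idea concrete, the sketch below decodes a fixed-length genome into the weights and biases of a small feedforward ANN and runs one forward pass. The two-layer layout, the gene ordering (weights then bias for each neuron), and the `tanh` activation are assumptions made for illustration; the notes do not specify a particular encoding.

```python
import math

def decode(genome, n_in, n_hidden, n_out):
    """Split a flat, fixed-length genome into two layers.
    Each neuron is stored as (list_of_input_weights, bias)."""
    expected = n_hidden * (n_in + 1) + n_out * (n_hidden + 1)
    if len(genome) != expected:
        raise ValueError(f"genome must have {expected} genes")
    genes = iter(genome)
    hidden = [([next(genes) for _ in range(n_in)], next(genes))
              for _ in range(n_hidden)]
    output = [([next(genes) for _ in range(n_hidden)], next(genes))
              for _ in range(n_out)]
    return hidden, output

def activate(layers, sensors):
    """Feedforward pass: sensor readings in, motor commands in (-1, 1) out."""
    values = sensors
    for layer in layers:
        values = [math.tanh(sum(w * v for w, v in zip(weights, values)) + bias)
                  for weights, bias in layer]
    return values
```

With this layout, a network with 2 sensors, 3 hidden neurons, and 2 motors needs a genome of 3 * (2 + 1) + 2 * (3 + 1) = 17 genes, and the evolutionary algorithm never needs to know what each gene means.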

Fitness evaluation:

o Transfer genome to robot and measure resulting behavior

Historically, the EA ran on a host computer while each genome was tested on the target robot

It was long assumed that testing on a real robot is better than testing in a simulator, because simulators are not accurate enough. This was proven to be false, which led to many successful simulation-based approaches (e.g. the minimal simulation methodology: only the aspects of the environment that matter to the desired behavior are modeled, and everything else is masked as carefully structured noise)

o Plastic controllers adapt through self-organization. The connection weights themselves are not evolved; instead, “learning rules” for adapting connection strengths are evolved -> controllers continually adapt to changes in their environment (Floreano, Husbands, and Nolfi [2008])

o Explicit fitness function

Rewards specific behavior (e.g. traveling in a straight line) –

shapes overall behavior from a set of specific (hardcoded)

behaviors

o Implicit fitness function

More abstract level – points (fitness) are given for

completing some task, but are not tied to any specific,

hardcoded behavior

Examples:

o Maintain energy levels

o Cover as much area as possible

o etc

o It is possible to define a fitness function that has both

explicit and implicit elements
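The difference between the two kinds of fitness function can be illustrated as follows. This is a sketch with hypothetical inputs: `wheel_speeds` is assumed to be a per-time-step log of (left, right) wheel speeds in [-1, 1], and `visited_cells` a log of grid cells the robot passed through. The specific formulas and the weight `w` are illustrative assumptions.

```python
def explicit_fitness(wheel_speeds):
    """Explicit: rewards a specific behavior (fast, straight forward motion)."""
    if not wheel_speeds:
        return 0.0
    score = 0.0
    for left, right in wheel_speeds:
        speed = (left + right) / 2.0                   # forward component
        straightness = 1.0 - abs(left - right) / 2.0   # 1.0 when wheels match
        score += max(speed, 0.0) * straightness
    return score / len(wheel_speeds)

def implicit_fitness(visited_cells, total_cells):
    """Implicit: rewards the outcome only (fraction of area covered),
    without saying which movements should produce it."""
    return len(set(visited_cells)) / total_cells

def combined_fitness(wheel_speeds, visited_cells, total_cells, w=0.5):
    """A fitness function with both explicit and implicit elements."""
    return (w * explicit_fitness(wheel_speeds)
            + (1.0 - w) * implicit_fitness(visited_cells, total_cells))
```

The explicit version pushes evolution toward one hand-picked movement pattern, while the implicit version leaves the robot free to cover the area in any way that works.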

Evolution can proceed in stages, where competences are built up in

an incremental way (additions to the current solution) – the fitness function may

change as evolution progresses
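One simple way to picture a fitness function that changes as evolution progresses is a schedule of stages. The sketch below is a hypothetical illustration: the `behavior` dictionary, its keys, and the generation thresholds are assumptions, not part of the notes.

```python
def staged_fitness(stages):
    """stages: list of (first_generation, fitness_function) pairs,
    sorted by first_generation. Returns a function that scores a
    behavior with whichever stage is active at the given generation."""
    def fitness_at(generation, behavior):
        active = stages[0][1]
        for start, fn in stages:
            if generation >= start:
                active = fn
        return active(behavior)
    return fitness_at

# Stage 1 rewards moving at all; stage 2 adds a bonus for reaching the goal,
# building on the competence evolved in stage 1.
fitness = staged_fitness([
    (0, lambda b: b["distance"]),
    (50, lambda b: b["distance"] + 10.0 * b["reached_goal"]),
])
```

Here `fitness(10, behavior)` scores only distance traveled, while `fitness(60, behavior)` also rewards reaching the goal.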

Advantages:

o Explore designs that have many free variables (a set of possible

robots is defined rather than a specific robot -> fewer constraints)

o Fine-tune parameters of an already successful design

o Build on an already existing solution

o Take into account multiple and/or conflicting criteria/constraints (e.g. functional: efficiency, cost, weight, power consumption; non-functional: robustness, reliability; etc.)

o Possibly discover non-intuitive and minimal designs

ER specifies the target behavior, but does not specify how this behavior should be

achieved

ER & Neuroscience: