09_Vul0507_compiled - UCSD

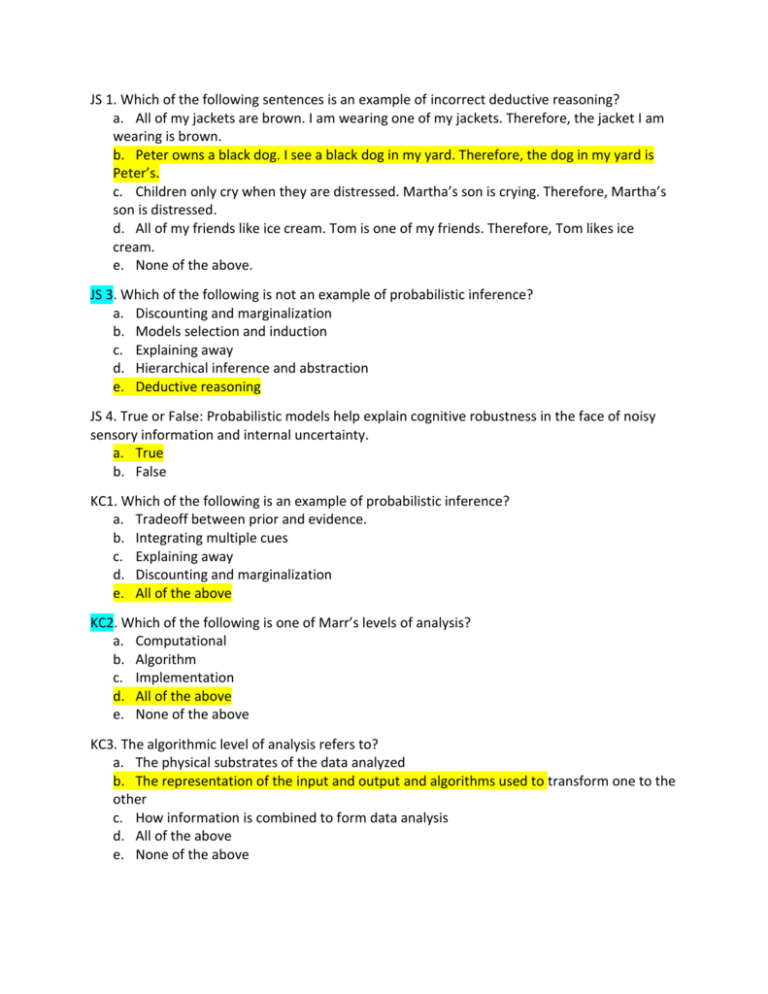

JS 1. Which of the following sentences is an example of incorrect deductive reasoning?
a. All of my jackets are brown. I am wearing one of my jackets. Therefore, the jacket I am wearing is brown.
b. Peter owns a black dog. I see a black dog in my yard. Therefore, the dog in my yard is Peter’s.
c. Children only cry when they are distressed. Martha’s son is crying. Therefore, Martha’s son is distressed.
d. All of my friends like ice cream. Tom is one of my friends. Therefore, Tom likes ice cream.
e. None of the above.

JS 3. Which of the following is not an example of probabilistic inference?
a. Discounting and marginalization
b. Model selection and induction
c. Explaining away
d. Hierarchical inference and abstraction
e. Deductive reasoning

JS 4. True or False: Probabilistic models help explain cognitive robustness in the face of noisy sensory information and internal uncertainty.
a. True
b. False

KC1. Which of the following is an example of probabilistic inference?
a. Tradeoff between prior and evidence
b. Integrating multiple cues
c. Explaining away
d. Discounting and marginalization
e. All of the above

KC2. Which of the following is one of Marr’s levels of analysis?
a. Computational
b. Algorithm
c. Implementation
d. All of the above
e. None of the above

KC3. The algorithmic level of analysis refers to?
a. The physical substrates of the data analyzed
b. The representation of the input and output and algorithms used to transform one to the other
c. How information is combined to form data analysis
d. All of the above
e. None of the above

WC 1. Among Marr’s levels of analysis, which is involved in how the system (i.e., neurons) is physically connected in the brain?
a. Behavioral
b. Computational
c. Environmental
d. Implementational
e. Algorithmic

WC 2. What level of description is most naturally described in terms of probabilistic inference?
a. Behavioral
b. Computational
c. Environmental
d. Implementational
e. Algorithmic

WC4.
If observed data is highly probable when a hypothesis is true, Bayesian probability states that the ____________ is high.
a. Posterior probability
b. Computational probability
c. Likelihood
d. Prior probability
e. Consistency

SD1: True / False: There are multiple ways to answer a “how” question based on the level of description.
a. True
b. False

SD2: True / False: According to Professor Vul’s lecture, there is a single best level of description to understand how the brain works.
a. True
b. False

SD3: What are Marr’s levels of analysis?
a. Computation, Algorithm, Implementation
b. Cognition, Neurons, Ions
c. Statistics, Probability, Inference
d. Problem, Cognition, Action

LS1. Dr. Vul showed an animation of some shapes moving around on a screen (two triangles and a circle). What was the point he was trying to illustrate?
a. Our brain excels at making inferences based on very impoverished data
b. Language is lateralized to the left hemisphere of the brain
c. We have an amazing ability to recognize faces
d. All of the above
e. None of the above

LS 2. Which of the following is NOT one of Marr’s levels of analysis?
a. Algorithm
b. Computation
c. Memory
d. Implementation
e. All of the above are Marr’s levels of analysis.

BT1. Which of the following is not considered a part of Cox’s axioms of probability?
a. Degrees of belief are represented by real numbers
b. Consistency
c. Marginalization and discounting
d. Qualitative correspondence with common sense
e. b and c

BT5. Which of the following is NOT an example of probabilistic inference?
a. Tradeoff between prior and evidence
b. Hierarchical inference and abstraction
c. Deductive planning and action
d. Integrating multiple cues
e. Growing structured representations

DM4. True or false? Bayes’ theorem relates hypotheses and data via conditional probabilities, and is therefore useful for inferring underlying causes for observed data.
a. True
b. False

DM5.
If you’re on drugs and see an elephant in a shopping mall, you’re likely to think that the elephant is a hallucination. If you’re on drugs and see an elephant near a zoo, you’re more likely to think that the elephant is real. What is a natural Bayesian inference formulation for this scenario?
a. The prior probability of seeing an elephant is higher near a zoo than near a mall, so the hypothesis that you’re seeing correctly is accepted more readily.
b. The prior probability of seeing an elephant is higher near a mall than a zoo, so the hypothesis that you’re seeing correctly is accepted more easily.
c. The posterior probability of seeing an elephant is higher near a mall than near a zoo, so the hypothesis that you’re seeing correctly is accepted more easily.
d. b and c
e. a and c

DM6. Which of the following is not a term in Bayes’ Theorem?
a. Prior probability
b. Posterior probability
c. Marginal probability
d. Likelihood
e. Evidence

DO NOT USE

(too specific)

JS 2. Which of the following is one of Cox’s axioms?
a. Degrees of belief are represented by real numbers.
b. There is qualitative correspondence with common sense.
c. If a conclusion can be reached in multiple ways, then every possible way must lead to the same result.
d. All of the above.
e. Two of the above.

(the “wrong” answers are partially correct. Too confusing)

JS 5. What does Bayes’ Theorem do?
a. Combining prior probability and new evidence to compute a posterior probability.
b. Deducing further implications based on a number of true statements.
c. The ability to construct a narrative from very limited stimuli, such as moving shapes.
d. The implementation of an algorithm to predict future outcomes in a data set.
e. None of the above.

(poorly phrased)

JS 6. Which statement below best describes probabilistic models?
a. Probabilistic models are useful statistical tools for analyzing the way the brain functions at a biochemical level.
b.
Probabilistic models help explain cognitive robustness in the face of noisy sensory information and internal uncertainty.
c. Probabilistic models are exact representations of the computational power of the human brain.
d. Probabilistic models primarily indicate only one method of probabilistic inference is employed by human brains.
e. None of the above.

(incorrect)

KC4. Bayes’ rule has the idea that the probability of H (P(h)) is high if the hypothesis is plausible.
a. True
b. False

(not a main focus of this lecture)

KC5. What is the definition of pragmatics?
a. The relationship between context and meaning.
b. The relationship between meaning and letter spacing.
c. The relationship between context and grammar.
d. None of the above.
e. Both a and b

(too subtle a point)

WC3. True or False: All computational models are cast at the computational level.
a. True
b. False

(confusing answer)

WC5. Kemp et al. (2007) found that kids showed a shape bias when making generalizations from newly learned objects. It suggests that:
a. There is a tradeoff between prior and evidence.
b. Probabilistic computation is utilized to integrate multiple cues.
c. Probabilistic decisions are sensitive to the uncertainty of the environment.
d. Hierarchical inference and abstraction are key to the probabilistic cognitive model.
e. Vision can be viewed as probabilistic parsing.

(no good way to ask this question without making it too easy)

SD4: If I find a coin and flip it 5 times and it comes up heads every time, I am more likely to believe that it is not a magic coin given what kind of contextual clue:
a. I found it in a park.
b. I found it outside a magic shop.
c. I found it on the sidewalk.
d. None of the above.

(not a major point in the lecture)

SD5: In the word learning study Professor Vul described in lecture, children are more likely to make generalizations about what object a noun corresponds to using which of the following characteristics?
a. Color
b. Texture
c. Shape
d. Size

(redundant)

LS 3.
Not all computational models are cast at the computational level.
a. True
b. False

(redundant)

LS 4. Which of the following is NOT one of the modes of inference that people make that was discussed by Dr. Vul?
a. Integrating multiple cues
b. Explaining away
c. Probabilistic decisions
d. Hierarchical inference
e. All of the above are modes of inference discussed by Dr. Vul

(not quite right)

LS 5. Which of Marr’s levels of analysis has to do with what the system represents and how the system does what it does?
a. Implementational
b. Computational
c. Algorithmic
d. Probabilistic
e. None of the above

(redundant)

BT2. Which of the following does not contribute toward Marr’s levels of analysis?
a. Algorithms
b. Computations
c. Marginalization
d. Implementation
e. All contribute toward Marr’s levels of analysis

(too subtle)

BT3. True or False: Computational models, such as the Hodgkin-Huxley model or neural networks, all begin on a set computational level.
a. True
b. False

(too subtle)

BT4. According to Bayes’ rule, which of the following does NOT help in making a good scientific argument?
a. Hypothesis must be plausible; P(H) is high.
b. Hypothesis strongly predicts the observed data; P(D|H) is high.
c. Data is surprising; P(D) is low.
d. Posterior probability, P(H|D), is low
e. All of the above help in making a good argument

(next three: avoid questions that are too specifically tied to a speaker’s viewpoint or presentation style)

DM1. According to Ed Vul, what is the significance of the question “how do we do x?”
a. There is no single, easy answer to this question; there are several possible answers that are each useful for different goals, like predicting different phenomena.
b. It is the first question that needs to be asked in any behavior science study.
c. It highlights the importance of starting small, in that it grounds our research on a single topic.
d. b and c.
e. All of the above.

DM2.
Which of the following is not an approach to describing behaviors as described by Vul?
a. Computation
b. Algorithms
c. Electrochemistry
d. Psychological
e. Circuits

DM3. According to Ed Vul, probabilistic approaches are advantaged in that
a. probability is a formalism for belief
b. probability allows for air-tight computation, allowing for the closest we have to deductive analysis
c. probability is very robust because it’s mathematical
d. probabilistic models can evolve very quickly between users, as slight changes in the computation involved can produce huge differences in result
e. probabilistic approaches generalize to many different problems