Speech 3 : Controlling Internet Content: Implications for

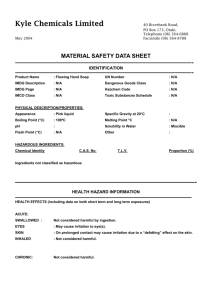

Speech 3: Controlling Internet Content: Implications for Cyber-Speech

by Dr. Yaman Akdeniz, Lecturer in CyberLaw, School of Law, University of Leeds; Director, Cyber-rights & Cyber-Liberties (UK)

As far as content regulation is concerned, it is necessary to distinguish between illegal and harmful content, because these two types of content need to be treated differently. The former is criminalized by national laws, while the latter is considered offensive or disgusting by some people but is generally not criminalized by national laws. Child pornography, for instance, falls under the ‘illegal content’ category, while adult pornography falls under the ‘harmful content’ category. There are also some grey areas, such as hate speech and defamation, which are regarded as criminal offences in some countries but not in others.

In general terms, there are two types of responses to illegal and harmful content: (i) government regulation and (ii) self and co-regulation. Government regulation includes laws at the national level, directives and regulations at the supra-national level (EU), and conventions at the Council of Europe (see CyberCrime Convention) and UN level (see Optional Protocol to the Convention on the Rights of the Child on the sale of children, child prostitution and child pornography). Self and co-regulation comprises measures such as the development of hotlines, codes of conduct, filtering software and rating systems.

Speech/content regulation

Illegal vs. Harmful Content
» Separate policy action is required for illegal and harmful content
» The difference between illegal and harmful content is that the former is criminalised by national laws, while the latter is considered as offensive or disgusting by some people but certainly not criminalised by national laws.
Illegal Content
» Child Pornography, Hate Speech

Harmful Content
» Pornography, Hate Speech

Grey areas – Hate Speech, defamation (competing rights)

Responses to Illegal & Harmful Content
» Government Regulation
– Laws at the national level
– Directives, Regulations at the Supranational Level (EU)
– Conventions at the CoE (CyberCrime) and UN Level (Optional protocol)
» Self and Co-Regulation
– Development of Hotlines, Codes of Conduct, Filtering Software, and Rating Systems

Self and co-regulatory initiatives can provide less costly, more flexible and often more effective alternatives to prescriptive government legislation. A number of international statements and Action Plans at EU level support such initiatives. However, a number of problems remain with self and co-regulatory arrangements. Firstly, they do not apply to organizations that are not members of the scheme. Secondly, only a very limited range of sanctions is available when the rules are breached. Finally, one may question the accountability and impartiality of self-regulatory bodies.

More specific problems appear with rating and filtering systems. They do not offer full protection to citizens. Massive over-blocking and occasional under-blocking are witnessed in many filtering software products. Moreover, there is no consensus on what should be filtered or rated, owing to moral and cultural differences and also depending on whether one is concerned with adult rights or children's rights.

Problems with Rating & Filtering Systems

Both rating and filtering systems are problematic
» Originally promoted as technological alternatives that would prevent the enactment of national laws regulating Internet speech, filtering and rating systems have been shown to pose their own significant threats to free expression. When closely scrutinised, these systems should be viewed more realistically as fundamental architectural changes that may, in fact, facilitate the suppression of speech far more effectively than national laws alone ever could. (Global Internet Liberty Campaign, 1999)
» They do NOT offer full protection to concerned citizens
» They could be defective
» Massive overblocking is witnessed in many filtering software products
» Too much reliance on mindless mechanical blocking through identification of key words and phrases
» They are based upon the morality that an individual company/organisation is committed to: broad and varying concepts of offensiveness, "inappropriateness," or disagreement with the political viewpoint of the manufacturer are witnessed
» Apart from overblocking, underblocking is also witnessed with certain filtering software

The development of Europe-wide ISP Codes of Conduct has been difficult, owing to different national definitions of and approaches to illegal and harmful content. The primary responsibility for Internet content remains with the content providers and not with the ISPs.

Problems with ISP Codes of Conduct Generally

Harmonisation is difficult
» Development of Europe-wide ISP Codes of Conduct has been problematic
» There are different approaches to illegal and harmful content
» Each country may reach its own conclusion in defining the borderline between what is permissible (legal) and not permissible (illegal)
» It is difficult to draw up a pan-European code which sets substantive limits as to illegal content

Market decides? ISPs have different relationships with information
» Not truly self-regulatory: Governments are involved, which slows down the process
» Third party content – they do not want to get involved with policing
» Although no ISP controls third party content or all of the backbones of the Internet, the crucial role they play in providing access to the Internet has made them visible targets for the control of “content regulation” on the Internet.
What happens when there are conflicting rights?
» What happens if an ISP does not join or abide by the Code?
» There are areas in which it is difficult for ISPs to decide (e.g. defamation)
» Complaint mechanisms hard to develop?

In conclusion, a credible self and co-regulatory framework can only work if it is backed not only by government but also by industry and civil society representatives. Fundamental human rights such as freedom of expression and privacy must be respected, and all relevant stakeholders must be involved in the design and operation of the scheme.

A Workable System?

A credible self and co-regulatory framework could only work if
» it is backed not only by government but also by industry and civil society reps.
» it respects fundamental human rights such as freedom of expression and privacy
» it commands public confidence
» there is strong external consultation and involvement with all relevant stakeholders in the design and operation of the scheme
» the operation and control of the scheme are separate from the institutions of the industry (so far as practicable)
» consumer, public interest and other independent representatives are fully represented (if possible, up to 75 per cent or more) on the governing bodies of self-regulatory schemes.
» the scheme is based on clear and intelligible statements of principle and measurable standards – usually in a Code – which address real consumer and user concerns.
» the rules identify the intended outcomes.
» the scheme is well publicised, with maximum education and information directed at consumers and users.
» the scheme is regularly reviewed and updated in the light of changing circumstances and expectations.
» it involves an “independent complaints” mechanism.

For further information see the following published work of the author:

Akdeniz, Y., “Who Watches the Watchmen? The Role of Filtering Software in Internet Content Regulation,” in Organization for Security and Co-Operation in Europe (“OSCE”) Representative on Freedom of the Media (eds), The Media Freedom Internet Cookbook, Vienna: Austria, 2004, pp 101-125 at http://www.osce.org/documents/rfm/2004/12/3989_en.pdf

Akdeniz, Y., “Controlling Illegal and Harmful Content on the Internet,” in Wall, D.S. (ed.), Crime and the Internet, London: Routledge, November 2001, pp 113-140.

Akdeniz, Y., & Strossen, N., “Sexually Oriented Expression,” in Akdeniz, Y., Walker, C., & Wall, D. (eds), The Internet, Law and Society, Addison Wesley Longman, 2000, pp 207-231.

Akdeniz, Y., “Child Pornography,” in Akdeniz, Y., Walker, C., & Wall, D. (eds), The Internet, Law and Society, Addison Wesley Longman, 2000, pp 231-249.

Akdeniz, Y., “Who Watches the Watchmen: Internet Content Rating Systems, and Privatised Censorship,” in EPIC (eds), Filters and Freedom - Free Speech Perspectives on Internet Content Controls, Washington DC: Electronic Privacy Information Center, 1999.

Akdeniz, Y., “Governing pornography and child pornography on the Internet: The UK Approach,” in Cyber-Rights, Protection, and Markets: A Symposium, (2001) University of West Los Angeles Law Review, 247-275 at http://www.cyberrights.org/documents/us_article.pdf

Akdeniz, Y., “UK Government and the Control of Internet Content,” (2001) The Computer Law and Security Report 17(5), pp 303-318 at http://www.cyberrights.org/documents/clsr17_5_01.pdf