Workshop Abstracts - Epistemology of the LHC


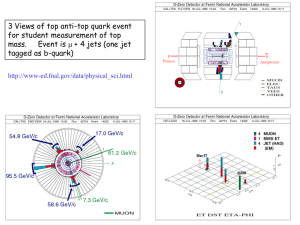

Abstracts for the Wuppertal Workshop Modeling and LHC

Arianna Borrelli (Friday, January 27, 16.20-17.10)

Models "beyond the Standard Model" in research practices

Despite the phenomenological successes of the Standard Model, theoretical physicists have been trying for more than three decades to guess what physics "beyond the Standard Model" (BSM) might look like. Today, finding evidence of such physics is a top priority at the LHC, yet physicists readily admit that the complex, ever-changing landscape of BSM-models defies attempts at gaining an overview, even on the part of the most experienced theorists. In this situation, philosophers of science investigating high energy physics can hardly ignore the question of the function (or functions) which BSM-models perform in actual research activities. What do terms like "supersymmetry" or "warped extra-dimensions" mean in practice to the members of the different subcultures of microphysics? How do physicists with different backgrounds learn about and form an opinion on BSM-models? How do they talk about them? Do they usually have commitments to one model or another? How do they choose one model to work on or to test? In my talk I will present some preliminary results of an empirical investigation addressing these and related issues, based on (1) a classification of relevant HEP-preprints, (2) interviews and (3) an online survey conducted among high energy physicists.

Nazim Bouatta (Saturday, January 28, 11.10-12.00)

Renormalisation group and phase transitions in quantum field theory

In the last forty years, quantum field theory (QFT) has come to be the unifying framework within several branches of physics: in particular, the physics of high energies, of condensed matter, and of the early universe. Accordingly, philosophers of physics have rightly addressed themselves to discussing various conceptual aspects of QFT.
But philosophers have largely ignored phase transitions in QFT: a curious lacuna, since they have discussed phase transitions in quantum statistical mechanics. In this talk we aim to fill this lacuna: or rather, take the first steps toward doing so, since this is a vast field. We will also relate this example to the wider issues, which remain topical within physics, about how best, in a quantum field theory, to define (and so to count, and so to integrate out!) degrees of freedom.

Herbert Dreiner (Friday, January 27, 17.10-18.00)

SUSY Model Building and the LHC

We discuss how various supersymmetry models are constructed, and how they are motivated. We then show how model building has affected the search for supersymmetry at the LHC.

Robert Harlander (Friday, January 27, 15.00-15.50)

The Higgs boson and its alternatives

I will summarize the motivations of, searches for, and restrictions on the Standard Model Higgs boson, and give an overview of the most popular non-Standard Model solutions to electroweak symmetry breaking. These include the realization of the Higgs mechanism in supersymmetric models, as well as dynamical symmetry breaking and composite Higgs models.

Rafaela Hillerbrand (Friday, January 27, 11.40-12.30)

The Hermes effect & the nature of causality

In recent years, computational sciences such as computational hydrodynamics or computational field theory have supplemented theoretical and experimental investigations in many scientific fields. Often, there is a seemingly fruitful overlap between theory, experiment, and numerics. The computational sciences are highly dynamic and seem a fairly successful endeavor, at least if one measures success in terms of publications or engineering applications. However, we know that for theories, success in application and correctness are two very different animals; and just the same holds for “methodologies” like computer simulations.
Taking simulations and experiments within high energy physics as pursued at the Large Hadron Collider (LHC) at CERN as a case study, this paper therefore addresses the added value computer simulations may provide on an epistemological level and discusses possible problems when computer simulation and laboratory experiment are intertwined. Here stochastic methods in the form of Monte Carlo simulations are involved in generating the experimental data. The question is how far a realistic stance can be maintained when such stochastic elements are involved. In particular, the so-called Hermes effect is taken as a case study. I will contend that using these types of experiments as a source of new phenomena or for theory testing must presuppose a certain understanding of causality and thus binds us at least to a weak form of realism.

Hannes Jung (Thursday, Jan 26, 17.10-18.00)

Simulation or Fakery - Modelling with Monte Carlo

I discuss the principle of the Monte Carlo method and its application to simulating processes in high energy physics. I will discuss whether MC modelling is faking or simulating observables, and I will address the limitations of the MC approach.

Koray Karaca (Friday, Jan 27, 9.30-10.20)

Lessons from the data-acquisition of the ATLAS experiment: diagram models, visual representation, and scientific communication

I examine the data-acquisition system of the ATLAS experiment currently underway at the LHC, and I show that its design has been implemented through the use of a visual imagery that consists of a suitable set of “diagram models” borrowed from systems and software engineering. I point out that, in terms of what and how they represent, diagram models and theoretical models differ from each other: the former deliver diagrammatic representations of the processes, data-flows and exchanges of messages within information systems, whereas the latter deliver sentential (propositional) representations of natural phenomena.
Moreover, I argue that diagram models serve as vehicles of scientific communication within the ATLAS collaboration during the design phase of the data-selection system, for they provide a standard of communication that greatly facilitates the communication among the various research groups that are both internal and external to the data-selection system. I point out that diagrammatic representation’s being external and its ability to facilitate communication are closely linked, in that the former feature makes the rules and conventions of diagram models visually accessible to, and thus shared by, all parties involved in the data-selection process. This is exactly what makes diagram models resources of communication in the context of the data-selection system of the ATLAS experiment.

Kevin Kröninger (Friday, Jan 27, 10.20-11.10)

Using data to model background processes in top quark physics

The top quark is the heaviest of all known elementary particles, and several of its properties are currently being studied at the LHC. Most of these studies are based on selecting data events consistent with the production of top quark pairs, the most common production mechanism of top quarks. Inherent sources of background, i.e., processes which mimic top quark pair production, are present in such data samples. The signal process and some sources of background are typically modelled by simulations. For other processes, namely QCD multijet production and the production of W-bosons with additional jets, the data themselves are used as a model. The focus of the talk will be on the modelling of processes without simulations, illustrated by using top quark production at the LHC as an example. Advantages and disadvantages of such modelling will be discussed, and its choice in current data analyses will be motivated.

Chuang Liu (Thursday, Jan 26, 14.10-15.00)

Models and Fiction

This paper gives a critical discussion of the recent literature on the fictional nature of scientific models, focusing on the question of which, if any, models used in science can be properly viewed as fictional. The result of this study is a broadening of our conception of models, and it also sharpens our understanding of “the fictional.” Among the arguments is one that leads us to see that certain scientific models (e.g. models for the unobservables) are closer to myths, which we now regard as fictional or make-believe, than any other types of more realistic conceptions.

Margaret Morrison (Thursday, Jan 26, 16.20-17.10)

Simulation, Experiment and the LHC

Much of the recent philosophical literature on simulation focuses on distinguishing it from experiment and providing reasons why experiment enjoys more epistemic legitimacy. Even in contexts where experiment isn’t possible and our only knowledge of physical systems is via simulation (e.g. astrophysics), the status of simulation results is sometimes called into question. However, even those who champion simulation as capable of providing knowledge that is on a par with experimental results would agree that ontological claims about the existence of particles or particular effects cannot be established via simulation; experiment is the only reliable route to such conclusions. An example is the search for the Higgs boson and the experiments being conducted at the LHC. But a closer look at these experiments reveals an extraordinary reliance on simulation, not only in the design of various aspects of the collider but in the interpretation of data and the very identification of the events themselves. I examine some aspects of simulation as it is used at the LHC and in particular discuss how the methodology of verification and validation, an integral part of simulation practice, is applied in the context of LHC experiments.
I conclude by arguing that, while new physics or the existence of the Higgs boson cannot be established on the basis of simulation, it also cannot be established without it. Consequently, in this context at least, the distinction between experiment and simulation is one that is difficult to maintain in any non-ambiguous way.

Kent Staley (Friday, Jan 27, 14.10-15.00)

Modeling Possible Theoretical Errors: The Problems of Scope and Stability

At least some experimental knowledge depends on the use of theoretical assumptions, a term that refers to propositions used in drawing inferences from data that, to some significant degree, are susceptible to two kinds of problems:

1. Problems of scope: highly general theoretical assumptions are difficult to test exhaustively and sometimes must be applied in domains in which they have not previously been tested.
2. Problems of stability: the possibility of unforeseen, dramatic change in general explanatory theories constitutes some reason to worry about similar upheavals overturning theoretical assumptions that presently seem sound; moreover, conceptual innovation sometimes brings erroneous implicit assumptions to light.

I explore here a strategy for securing theory-mediated inferences from data that uses models of error scenarios to cope with the problems of scope and stability. In particular, I consider the extent to which this strategy can help with the particularly difficult challenge of instability due to unforeseen theoretical change. I will illustrate the challenges and the potential of these strategies with an examination of recent results from the search for supersymmetric particles by the ATLAS collaboration at the LHC. In the end, I defend a guardedly optimistic position: experimenters can avail themselves of methods that allow them to secure their experimental conclusions against the possibility of erroneous theoretical assumptions.
This is because of strategies that can secure experimental conclusions against both anticipated and unanticipated possibilities of error. However, judgments about how well a conclusion has been thus secured are themselves subject to correction, making the provisionality and corrigibility of experimental conclusions crucial.

Jay Wacker (Saturday, Jan 28, 9.30-10.20)

The Post-Model Building Era and Simplified Models

The notion of building models of physics beyond the Standard Model of particle physics has evolved substantially over the past decade. In the era of the LHC, this has motivated a change in perspective on how to search for signatures of unexpected new physics. One proposal that has recently been explored is Simplified Models. I will discuss how Simplified Models fit into the post-model building era of high energy physics.

James Wells (Saturday, Jan 28, 12.00-12.50)

Effective Field Theories and the Preeminence of Consistency in Theory Choice

Promoting a theory with a finite number of terms into an effective field theory with an infinite number of terms worsens simplicity, predictability, falsifiability, and other attributes often favored in theory choice. However, the importance of these attributes pales in comparison with consistency, both observational and mathematical. I show that consistency unambiguously propels the effective theory to be superior to its subset theory of finite terms, whether that theory be renormalizable (e.g., the Standard Model of particle physics) or unrenormalizable (e.g., gravity). Some implications for the Large Hadron Collider and beyond are discussed.

Christian Zeitnitz (Thursday, Jan 26, 15.00-15.50)

Simulation in the ATLAS Experiment

Simulation is a key ingredient in designing, commissioning and operating a high energy physics experiment. The impact on the analysis is substantial, since the simulation programs are used to extract the signal from the background.
The talk gives an overview of the use of simulation and physics models at the different stages of the LHC experiments, using ATLAS as an example.