

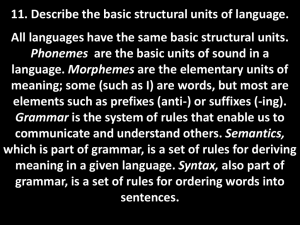

Brain Waves and Button Presses: The Role for Experiments in Theoretical Linguistics

Alec Marantz, Utrecht, August 29, 2003

My title raises the issue of the relevance for so-called theoretical linguistics of experiments in neuro- and psycholinguistics. By theoretical linguistics, I mean the theory of grammar, that is, the theory of the mental representations of language in the minds and brains of speakers. I run an MEG laboratory at MIT, in which we use magnetic sensors to monitor brain responses of subjects, and I’m often asked what the MEG studies might have to do with my theoretical work in morphology and syntax.

Now, on the one hand, it might seem obvious that if theoretical linguistics is about the grammars in the minds and brains of speakers, brain responses from these speakers should be relevant to the enterprise. On the other hand, those of us who grew up linguistically in the ’60s and ’70s were taught to respect the crucial competence/performance distinction. [SLIDE] We study competence, but brain waves would seem to weigh in more on the performance side. In particular, neuro- and psycholinguistic data would be data about language use rather than about linguistic representations.

Well, what about the competence/performance distinction? Recall that Chomsky framed this distinction to explain the difference between cognitive approaches to language and the approaches of the structuralist linguists and the behaviorist psychologists. Performance theories for the structuralists and behaviorists weren’t cognitive theories of language use but accounts of the behavior itself (about corpora of utterances, for example). So a structuralist grammar was supposed to be a compact reduction of a corpus of sentences. That is, a “competence” theory isn’t a theory devised without consideration of linguistic performance; performance is used as the data relevant for deciding between competing theories.
Rather, competence theories, which are about internal representations, are contrasted with performance theories, which are reductions of or models of behavior. Although Chomsky recast the competence/performance distinction as the I-language/E-language contrast to avoid some confusions, the original distinction is still important and should be kept in mind when evaluating the neo-behaviorist theories of some connectionists. A connectionist model of English past-tense formation, for example, is a performance theory. The internal representations of the model aren’t representations of knowledge of language – for example, knowledge of verbs or of tense – but rather are representations geared to modeling performance, the performance of connecting stem and past tense forms of verbs. To the extent that any theory of the knowledge of language is involved in such models, it is embodied in the structure of the input and output nodes of the model, and here the structure is built in and presupposed, not part of the model at issue.

[SLIDE] A well-trained graduate of my Department should tell you that, for linguistic theory, data are data – there is no special status associated with judgments of grammaticality or judgments of logical entailment. Every piece of data used by linguists must be the product of the linguistic knowledge of a speaker interacting with various “performance” systems. We test theories by making assumptions about these performance systems and trying to abstract away from their effects on language use.

Despite a general understanding of the relationship between data and theory in linguistics, there are still some lingering suspicions and misunderstandings. Let’s first address some of the misunderstandings. I work on issues in lexical access, and frequency is an important factor in any behavioral response to words. [SLIDE] In general, of course, the more frequent a word or piece of a word is, the faster a speaker’s response to the word.
I’ve been told by some that frequency effects are irrelevant to competence theory. Alternatively, I’ve been told by others that frequencies must be represented in the grammar to account for behavior. According to these latter critics, linguistic theory is deficient in ignoring frequency in accounting for linguistic knowledge. I hope it’s clear to this audience that nothing’s at stake here with respect to frequencies, at least given our current knowledge of how frequency affects behavior. If we represent a word’s frequency in the grammar by coding the frequency in the size of the representation, would frequency be part of the linguistic representation? Clearly, the important issue for linguistic representation is whether the frequency of the elements in a linguistic computation interacts with something else in an interesting way. If no rules, constraints or principles of grammar make direct reference to frequency, and if the effect of frequency on computation is some function, linear or non-linear, of the frequency of the pieces computed over, then the linguist is free to abstract from frequency in the theory of linguistic representations and computations. And it’s not an issue whether or not frequency is part of linguistic representations. On the other hand, since frequency effects are so robust and consistent, experiments can exploit the frequency variable to probe issues of representation – as I’ll illustrate later.

Other misunderstandings include the notion that since, in some theories of grammar, grammaticality vs. ungrammaticality can be a categorical distinction, linguists treat judgments of grammaticality as a categorical response. Whatever judgments of grammaticality are, they are, like any behavioral data, only indirectly related to the computational system of grammar in speakers and don’t represent special access to the principles or representations of language.
For example, a linguistic theory might claim that the structure shown here for “gloriosity” is ungrammatical while the similar structure here for “gloriousness” is grammatical. However, this doesn’t directly predict that speakers will judge the sound or letter string “gloriosity” as bad and the string “gloriousness” as good. Rather, predictions about speakers’ judgments must involve both the theory of grammar and the theory of the task presented to the speaker. I take this point as rather obvious, but it’s often missed in the literature, at least in the heat of battle. For example, in a recent article, Mark Seidenberg and colleagues fault a linguistic account of the contrast between “rats eater” and “mice eater” because the account of why “rats eater” is ill-formed does not in itself predict that “mice eater” should be less acceptable than “mouse eater.” That is, they take the categorical label “grammatical” for “mice eater” to imply that speakers should judge this compound to be as good as any other grammatical compound.

In general, we can clear away these conceptual impediments to a useful integration of neuro- and psycholinguistic data into theoretical linguistic research. However, someone unfamiliar with the daily work of a linguist might raise a legitimate suspicion concerning the relevance of a linguist’s grammar to predictions of behavior and brain responses in experiments. Language relates sound and meaning. Linguistic grammars are a formal account of this relation. Generative grammars instantiate the mapping between sound and meaning via a particular computational mechanism. Suppose a linguist’s grammar is accurate in that it describes a speaker’s knowledge of the connection between sound and meaning. [SLIDE] Does that mean that the speaker couldn’t also have other computational mechanisms to mediate between sound and meaning, for example, certain performance strategies optimized for particular types of language use?
For example, couldn’t there be separate specialized systems for comprehension and for production that derive sound/meaning connections independently of the grammar? This particular instantiation of a competence/performance distinction would be a rough description of Tom Bever’s position. In particular, I think it would be fair to characterize Bever’s position as one in which listeners use special strategies in sentence comprehension that bypass the grammar on the way to an interpretation, at least initially. His slogan is, I believe, “Syntax Last.”

In the area of morphology – the area for which I’ll present some experimental data during this talk – the notion that speakers can bypass the grammar in language use most often surfaces in the proposal that words or other chunks of structure can somehow be “memorized” and thus that speakers can produce and comprehend these structures as wholes, without composition or decomposition by the computational system of grammar. To be honest, I have never seen a version of this proposal – that we memorize certain complex chunks of structure and access them in production and comprehension as wholes – that I find even coherent. To take a simple case, what would it mean to say that we memorize “gave” as a whole, without always decomposing or composing “gave” into or from a stem GIVE with a past tense morpheme? Unless “gave” has both GIVE and past tense in it, we can’t account for the blocking of *gived. As far as I can tell, people who claim that “gave” is memorized while “walked” is not are simply claiming that the relationship between stem and past tense for “gave” is different from that for “walked,” and that this difference involves things we know about the stem GIVE from experience with “gave.” Of course no one would disagree with that kind of statement, but it doesn’t imply any kind of “dual route” to linguistic representations.
Suppose we try to take seriously the claim that there are multiple routes to linguistic representations, such that the linguist’s grammar would not describe the computational system used in language comprehension or production. Suppose that button-press reaction time data and brain waves lead to a particular theory of linguistic computations during language comprehension and production – say, something like Bever’s strategies. If psycholinguists did devise and empirically support such an alternate route to linguistic representations, the linguist should be both shocked and confused. Shocked, because there would be some alternative computational system for generating linguistic representations that the linguist hadn’t considered him/herself. How did the psycholinguist come up with a system that would be descriptively adequate, in the sense of yielding linguistic representations that successfully connect sound and meaning, but that uses a novel computational mechanism? Confused, because the linguist him/herself has to believe that s/he is using data from comprehension and production in designing and evaluating his/her theory. What special data did the linguist have that the psycholinguist ignored?

To argue for multiple computational systems one would have to show that there are two separable types of data, such that one computational system would be responsible for one and the other system for the other. If one just squints at the issue, one might suppose that comprehension and production could provide these dual data sets requiring separate systems, although as far as I can tell, realistic investigations into this possibility yield the conclusion that comprehension and production are largely computationally equivalent. But there is no way to imagine that there’s a set of data associated with the linguist’s grammar that would be distinct from data connected to computational accounts of language production and comprehension.
The linguist would be very worried if some psycholinguistic theory successfully provided an account of the connection between sound and meaning in language that involved computational mechanisms independent of the linguist’s grammar. If a psycholinguist claimed to have developed such a theory, then the linguist should take it on as an alternative hypothesis about the computational system of language in general and put it in competition with his/her own theory. So, for example, we can argue against Tom Bever’s account of computation during sentence comprehension by arguing that the representations he claims listeners compute using strategies do not in fact capture the information that listeners extract from linguistic input – that is, the representations that the strategies yield are not representations that speakers of a language actually have in their heads.

When we claim, then, that language involves a single generative engine – a single system for linguistic computation necessarily involved in the analysis and/or generation of linguistic representations of all sorts – this claim is meant to cover analysis and generation in language production and comprehension, in fact, in any situation, natural or experimental, in which speakers are required to access or produce language. If someone were to argue for multiple computational systems, the first step would be to show that an alternative computation was descriptively adequate, that is, that it actually described sound/meaning connections of the sort captured by the linguist’s grammar. If a psycholinguist proposed such an alternative computational system, the linguist would take it seriously as a competing theory of grammar. Then the psycholinguist would need to show that in fact one can separate two sorts of data, where each sort should be associated with a particular computational mechanism.
[I believe that Tom Bever’s group is the only group of psycholinguists that appreciates the challenges behind proposing dual-systems models of language knowledge and use.]

I have been arguing that linguists cannot treat psycho- or neurolinguistic data as special in any way, as subject to an account in terms of a computational system other than the one proposed by linguists. Every bit of data related to language potentially implicates the sole computational system for language. Nevertheless, random data of any sort may not be of particular interest. Why do I believe it’s important to have an MEG machine in my linguistics department, and why do I applaud the Utrecht Linguistics Institute’s integration of theoretical and experimental approaches to language? It’s useful to separate the positive impact of experimental approaches to language on linguistic theory into three levels:

(1) A symbolic importance: a reminder of the potential testability of competing analyses.
(2) A constraint on linguistic theory from what might be called the logical problem of language use.
(3) Clarification of the concrete mechanisms of language processing in the brain, which allows straightforward interpretation of brain and behavioral data.

I find the symbolic importance of neurolinguistics to be evoked in the following Wallace Stevens poem. Take “Tennessee” to stand for the MIT linguistics department and the “jar” to be the MEG machine in the Department.

I placed a jar in Tennessee,
And round it was, upon a hill.
It made the slovenly wilderness
Surround that hill.

The wilderness rose up to it,
And sprawled around, no longer wild.
The jar was round upon the ground
And tall and of a port in air.

It took dominion every where.
The jar was gray and bare.
It did not give of bird or bush,
Like nothing else in Tennessee.

Wallace Stevens

The presence of the MEG machine changes how one thinks about alternative theoretical possibilities.
When theoretical alternatives are being considered, the MEG machine stands as a reminder that there can always be a fact of the matter about a choice between theories. That is, to the extent that we want to take our theoretical machinery seriously, the brain either does it this way or it doesn’t. We may not have any idea at the moment about the data that might decide between the alternatives, but we can imagine finding a brain response predicted by one theory but not the other, at least in principle.

We’re used to constructing linguistic theories under the constraint of the logical problem of language acquisition – the representational system of a language must be such that a child could acquire it. Linguists are less accustomed to overtly relying on another “logical problem” – linguistic computations must be such that they are computable by speakers. I’m calling this a “logical problem” in a very loose sense – I don’t mean that linguistic computations must be computable in a mathematical sense. Rather, I mean that we can reach certain conclusions by thinking about what’s necessary for language use, independent of particular experiments. For example, any theory that requires linguistic computation always to operate with all the lexical items in a sentence is hard to reconcile with the fact that people seem to start computation with the first words in a sentence and develop representations to a large extent from first to last – left to right. The locality domain in which linguistic computations connect sound and meaning, then, should be much, much smaller than the sentence. Chomsky’s “phase”-based cyclicity passes this logical test of language use, providing a locality domain that doesn’t obviously fly in the face of what people seem to be doing.

In order to make predictions about button presses or brain waves in experiments, we need to map linguistic computations across time and across brain space.
Even to get started on this enterprise, the theory must be practically mappable, i.e., mappable in practice. So, contemplating doing experiments requires addressing the “logical problem of language use” through an explicit, although partial, commitment to real-time operation of linguistic computations.

Finally, the more we actually learn about linguistic computation in the brain, the more straightforward and natural it becomes to interpret various kinds of behavioral or brain data in connection with linguistic issues. For example, what do we make of reaction time data in lexical decision experiments? Suppose, for example, that hearing “walked” speeds up one’s lexical decision to “walk” while hearing “gave” doesn’t speed up one’s lexical decision to “give.” Without detailed knowledge of how the brain does lexical decision, one might be tempted to interpret this result as indicating that “walk” and “walked” are lexically related while “gave” and “give” are not. When we map out lexical access in time and in brain space, however, we can understand the various priming and inhibitory reactions that might yield no button-press RT priming in the case of gave/give, despite the fact that processing “gave” involves computations with the same stem as appears in “give.”

GO TO POWERPOINT SLIDES.