EXPERIMENTAL DESIGNS AND DATA ANALYSIS

advertisement

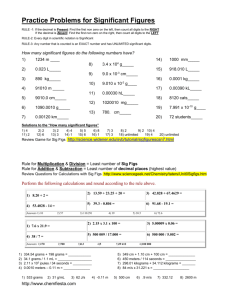

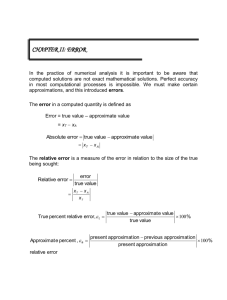

The focus is on experimental design and the methods used for data collection and analysis.

The goal in the chemistry laboratory is to obtain reliable results while recognizing that errors are inherent in any laboratory technique. Some laboratory errors are more obvious than others. Replicating a particular experiment allows an analysis of the reproducibility (precision) of a measurement, while using different methods to perform the same measurement allows a gauge of how close the data are to the true value (accuracy).

There are two types of experimental error: systematic and random error. Systematic error results from a flaw in the experimental design or equipment and can be detected and corrected. This type of error makes measurements consistently differ from the true value, so they are inaccurate. Random error, on the other hand, is always present and cannot be corrected. One example is reading a burette, which is somewhat subjective and therefore varies with the person making the reading. Another is slight variation among the samples or subjects. These errors affect the precision (reproducibility) of the measurement. The goal in a chemistry experiment is to eliminate systematic error and minimize random error so as to obtain a high degree of both accuracy and precision.

Experimental results are best expressed after replicate trials, reporting the average of the measurements (the mean) and the size of the uncertainty (the standard deviation). Both are easily calculated in programs such as Excel. The standard deviation of the trials reflects the precision of the measurements; whenever possible, provide this quantitative estimate of precision. Accuracy is often reported by calculating the experimental (percent) error. You should then reflect on and discuss the possible sources of the random error observed in your measurements.
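As a minimal sketch of the calculation the text suggests doing in Excel, Python's standard `statistics` module can compute the mean and standard deviation of replicate trials. The four burette readings are hypothetical values chosen for illustration. `statistics.stdev` returns the sample standard deviation (dividing by n - 1), which corresponds to Excel's STDEV.S function.

```python
import statistics

# Hypothetical final burette readings (mL) from four replicate titrations.
trials = [24.15, 24.22, 24.18, 24.25]

mean = statistics.mean(trials)    # arithmetic mean of the replicates
stdev = statistics.stdev(trials)  # sample standard deviation (n - 1 in the denominator)

print(f"mean = {mean:.2f} mL, standard deviation = {stdev:.2f} mL")
# mean = 24.20 mL, standard deviation = 0.04 mL
```

Reported as 24.20 ± 0.04 mL, the standard deviation supplies the quantitative estimate of precision described above.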
Sources of random error vary with the specific experimental techniques used. Examples include reading a burette and the error tolerance of an electronic balance. Sources of random error do NOT include calculation errors (a systematic error that can be corrected), mistakes in making solutions (also a systematic error), or your lab partner (who might be saying the same thing about you!).

Basic principles of Error

Three basic principles necessary to provide valid and efficient control of experimental error should be followed in the design and layout of experiments. These are:

Replication - Replication provides an estimate of experimental error, improves the precision of the experiment by reducing the standard error of the mean, and increases the scope of inference of the experimental results. When you replicate a measurement, some results will come out high and others low; taking the mean of the replicates lets these random errors partly cancel.

Randomization - Randomization avoids bias in the estimate of experimental error and ensures the validity of the statistical tests. Your sample should be as random as possible; do not hand-pick it.

Sample size - The sample size for each experiment should be as large as possible while still manageable. The larger the sample, the smaller the impact of individual differences. Smaller samples can be used across multiple trials, but keeping the same number in each trial is advantageous: if the trials have unequal sample sizes and you then average the trial means, each observation in the smaller sample carries more weight, biasing the result toward that sample.

The design of an experiment is the most critical part of any research. Even the very best data analysis is rendered useless by a flawed design. There are three areas to consider during your design.
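The effect of replication on the standard error of the mean can be made concrete with the usual formula SEM = s / sqrt(n), where s is the sample standard deviation of a single reading and n is the number of replicates. This is a minimal sketch; the function name `standard_error_of_mean` and the value of s are hypothetical.

```python
import math


def standard_error_of_mean(s: float, n: int) -> float:
    """Return s / sqrt(n), the standard error of a mean of n replicates."""
    return s / math.sqrt(n)


s = 0.04  # assumed sample standard deviation of one reading, in grams (hypothetical)

for n in (1, 4, 16, 64):
    print(f"n = {n:2d}: standard error of the mean = {standard_error_of_mean(s, n):.3f} g")
```

Because the standard error falls with the square root of n, quadrupling the number of replicates only halves the uncertainty of the mean, which is one reason the sample size must stay manageable.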
Percent Error (Percent Deviation, Relative Error) and Accuracy

When scientists need to compare the results of two different measurements, the absolute difference between the values is of very little use on its own. Being off by 10 cm matters a great deal when measuring a sheet of paper and hardly at all when measuring the distance from New Orleans to Houston. To express the magnitude of the error (or deviation) between two measurements, scientists use percent error. If you are comparing your value to an accepted value, first subtract one from the other and take the absolute value of the difference so that it is positive. Then divide this difference by the accepted value to get a fraction, and multiply by 100% to get the percent error:

$$\%\ \text{error} = \frac{|\text{your result} - \text{accepted value}|}{\text{accepted value}} \times 100\%$$

Several points should be noted when using this equation:

1) When you subtract, note how many significant figures remain after the subtraction, and express your final answer to no more than that number of digits.
2) Treat the % symbol as a unit. The fraction is dimensionless because the units in the values cancel.
3) If you omit the absolute value bars, the sign of the result shows the direction of the error: it is positive if the experimental value is high and negative if the experimental value is low.
4) Usually you can reduce the margin of error by using a larger sample size.
5) The margin (size) of error depends on what you are measuring. An error of 1 cm is minor when measuring the length of a room but large when measuring a worm: the absolute error is 1 cm in both cases, but the relative error for the worm is far larger.

Example of % error: A student measures the volume of a 2.50 liter container to be 2.38 liters. What is the percent error in the student's measurement?

$$\%\ \text{error} = \frac{|2.38\ \text{liters} - 2.50\ \text{liters}|}{2.50\ \text{liters}} \times 100\% = \frac{0.12\ \text{liters}}{2.50\ \text{liters}} \times 100\% = 0.048 \times 100\% = 4.8\%$$

Answer: 4.8% error

Error Reduction

Consider the following questions:
- Were the control and/or experimental groups equal before the beginning of the experiment?
- How are individual differences controlled?
- What was done to control for selection bias?
- How appropriate is the measuring device employed?

Averaging to reduce error

The effects of unusual values (observations) can be reduced by taking an average, referred to as the arithmetic mean. In general, the larger the sample size, the more likely the mean is to be close to the true value. In experiments without groups, averaging is accomplished by conducting numerous trials. Used alone, however, this design cannot reveal an instrument that reads high: every trial will be high, and so will the mean.

Error is generally reduced further by averaging averages. A larger sample would give a better average, but a single lab cannot feasibly use 2000 items for its sample. It is then better to do the experiment 4 times with samples of 50 each time and average those data.

Random assignment means that each subject has an equal chance (probability) of being assigned to the control or the experimental group. This is best accomplished with a computer program that picks random numbers without replacement.

MEASUREMENT

A flawless design will be invalidated by an inappropriate measuring instrument.

DATA COLLECTION, ORGANIZATION AND DISPLAY

INTRODUCTION

The purpose of experimentation or observation is to collect data to test the hypothesis. Data is a plural noun, and datum is its singular form. Data are things that are known, observed, or assumed. Based on the facts and figures collected during an experiment, conclusions about the hypothesis can be inferred. Data may be presented in tables, graphs, illustrations, photographs, and journals.
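The random assignment described earlier, in which each subject has an equal chance of landing in the control or the experimental group, can be sketched with Python's `random.sample`, which draws items without replacement. The function name `randomly_assign`, the 20 subject labels, and the fixed seed are hypothetical; the seed is there only so that a run can be reproduced.

```python
import random


def randomly_assign(subjects, seed=None):
    """Draw subjects without replacement and split them into two groups.

    If the number of subjects is odd, the experimental group receives the extra one.
    """
    rng = random.Random(seed)
    # Sampling all subjects without replacement yields a random ordering
    # in which every subject appears exactly once.
    order = rng.sample(subjects, k=len(subjects))
    half = len(order) // 2
    return order[:half], order[half:]  # (control, experimental)


subjects = [f"S{i:02d}" for i in range(1, 21)]  # 20 hypothetical subjects
control, experimental = randomly_assign(subjects, seed=42)
print("Control:     ", control)
print("Experimental:", experimental)
```

Drawing without replacement guarantees that no subject is placed in both groups, and equal group sizes keep later averages comparable.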
Information should include, but is not limited to, step-by-step procedures, quantities used, formulas, equations, quantitative and qualitative observations, material lists, references, special instructions, diagrams, data tables, and flow charts.

DATA TABLES

The "best" tables provide the most information in the least confusing manner. Tables should:
- contain qualitative or quantitative observations. Qualitative data are descriptive but contain no measurements. Quantitative data are also descriptive but are based on measurements.
- be titled and preceded by consecutive identification references such as "Table 1, Table 2".
- have labeled columns.
- place quantity labels (units) in the column headers, not in the columns themselves.
- use consistent significant digits.

DRAWING CONCLUSIONS

INTRODUCTION

The conclusion should contain the following sections:
- A restatement of the problem, purpose, or hypothesis.
- A rejection of, or failure to reject, the hypothesis.
- The rationale (support) for the decision on the hypothesis.
- A discussion of the significance (value) and implications of the experiment.

When you discuss the "significance" (value) and/or implications of the research, questions to be considered might include the following:
- Why was the research conducted?
- Of what practical value is the research?
- How could the results be applied to a "real" situation or problem?
- What implications could this research have on solving future problems?
- Can the results be used to predict future events, and if so, how accurately?
- What can be inferred about the total population, based on the analysis of the sample population?
- Did the research suggest other avenues for further investigation?

SUPPORTING CONCLUSIONS

Be sure to refer to the data in explaining how the conclusion was drawn. Make direct references to the appropriate illustrations, tables, and graphs. Make comparisons between the control and experimental groups, giving each comparison a paragraph of its own. Point out similarities and differences.
More on Making comparisons

When making comparisons:
- Use quantitative terms. Describe magnitudes, such as more than or less than.
- Rank the results.
- Compare your results with those of other authors.
- Avoid the phrase "significant difference" unless you used a test of significance. Differences among groups, even if numerically large, may not be significant.

MAKING PREDICTIONS

One of the strongest supports for a cause-and-effect relationship is the ability to predict the effects of the independent variable on the dependent variable. Be cautious, however:
- Not everything is the result of a single cause.
- The effect may be due to the interactions of several variables that were not controlled.
- The effect may be a correlation, such as increasing height with increasing age.
- Experimental error or chance can give the appearance of a cause-and-effect relationship.

The strongest cases for cause and effect are those defined by mathematical equations.

EXPLAINING DISCREPANCIES

Flaws in the experimental design should be pointed out, with suggestions for their elimination or reduction. Questions to be considered might be:
- If the experiment were to be repeated, what would be changed and why?
- Is there reason to suspect error as a result of the measuring instrument?
- How were individual variations controlled?
- Is the sample being studied representative of the entire population, and how was it selected?

Discuss any discrepancies in the data or its analysis. Attempt to explain any unusual observations, and refer to the data to build support.

EXPERIMENTAL ERROR AND ITS CONTROL

There will be "real differences" between the control and experimental groups, but error can never be entirely eliminated. Random error occurs in all experimentation, and good experimental design strives to reduce error to its minimum. Systematic error is inherent in all measuring devices; it can be reduced by using an appropriate measuring instrument and/or careful calibration.
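Whether a numerical difference between a control and an experimental group is significant has to be checked with a test of significance. The text does not name one; Welch's two-sample t-test is assumed here as one common choice. This minimal sketch computes only the t statistic and its approximate degrees of freedom with the standard library, using hypothetical data; the resulting t must still be compared with a critical value from a t table (or evaluated in a statistics package) before calling the difference significant.

```python
import math
import statistics


def welch_t(a, b):
    """Return (t, df) for Welch's two-sample t-test (unequal variances allowed)."""
    va = statistics.variance(a) / len(a)  # squared standard error of the mean of a
    vb = statistics.variance(b) / len(b)  # squared standard error of the mean of b
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical plant heights (cm) for a control and an experimental group.
control = [12.1, 13.4, 11.8, 12.9, 13.0]
experimental = [13.2, 14.1, 12.7, 13.8, 14.5]

t, df = welch_t(experimental, control)
print(f"t = {t:.2f} with about {df:.1f} degrees of freedom")
```

A large |t| relative to the critical value suggests the difference is unlikely to be due to chance alone; a numerically large difference with widely scattered data can still give a small t.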
Conclusions can be challenged on the basis of the accuracy and precision of the measuring devices. Sampling errors are generally the result of individual differences and/or the method of selection.

LIMITATIONS OF A STUDY

Do not generalize the results to an entire population; limit the conclusions to what was tested. Experiments done "in vitro" (in the laboratory) may not produce the same results as those done "in situ" (under natural conditions). It is not always possible to control the interactions of variables under natural conditions. Synergistic effects occur when variables interact; these may modify existing effects or create entirely new effects that neither variable would produce if tested alone. Do not propose assumptions that cannot be supported. Avoid editorializing: make only objective observations, not subjective ones.

EXPRESSING MEASUREMENT

Measurements are to be recorded using the primary or alternative metric units of the SI. All measured values, and all values calculated from measurements, must have unit labels. Decimals are to be used in place of fractions. A counted number is not a measurement. When expressing a measured value less than one, place a zero in front of the decimal point (0.43 g, not .43 g).

Rules for the written expression of SI units are as follows:

Capitalization: Symbols for SI units are NOT capitalized unless the unit was derived from a proper name. Unabbreviated units are NEVER capitalized. Numerical prefixes and their symbols are NOT capitalized, except for the symbols of the larger prefixes such as T, G, and M.

Plurals: Unabbreviated unit names form their plurals in the usual way, by adding an "s" as in newtons. SI symbols are ALWAYS WRITTEN in their singular form.

Punctuation: Periods SHOULD NOT be used after an SI unit symbol unless it is the end of a sentence.

SIGNIFICANT DIGITS

Rules to determine significant digits:
1. Digits other than zero are always significant: 23.45 mL (4), 0.43 g (2), 69991 km (5)
2. One or more final zeros used after the decimal point are significant: 8.600 mg (4), 29.0 cm (3), 0.1390 g (4)
3. Zeros between two other significant digits are significant: 10025 mm (5), 3.09 cm (3), 0.704 dc (3)
4. Zeros used for spacing the decimal point or place holding are not significant: 5000 m (1), 0.0001 mL (1), 0.01020 (4)

Rules for determining significant digits when calculations are involved. An answer cannot be more precise than the least precise value used to obtain it.
1. Addition and Subtraction - after making the computation, round the answer to the decimal place of the least precise value in the problem.
2. Multiplication and Division - after making the computation, the answer should have the same number of significant digits as the term with the fewest significant digits in the problem.

When using calculators, significant digits should be determined before placing values in the calculator. Answers must then be rounded to the proper number of significant digits.

SCIENTIFIC NOTATION

Scientific or exponential notation is a method for expressing very large or very small numbers. Every number is expressed as the product of a number M and a power of ten, in the format

$$M \times 10^{n}$$

where $1 \le M < 10$ and $n$ is any integer.

Examples of scientific notation:
1. Avogadro's number = 602 214 076 000 000 000 000 000 molecules = $6.02214076 \times 10^{23}$ molecules
2. Angstrom = 0.000 000 000 1 m = $1 \times 10^{-10}$ m

Calculations with scientific notation: there can be only one nonzero digit to the left of the decimal point in M.
- Multiplication: multiply the values of M and add the values of n. Express the answer in $M \times 10^{n}$ format.
- Division: divide the values of M and subtract the values of n. Express the answer in $M \times 10^{n}$ format.

Calculator rounding

Be aware that rounding inside a calculator or computer depends on how it was programmed. Some programs truncate, meaning they simply "cut off" the end digits without rounding. Start with the full value shown on the calculator display and round it to the proper number of significant digits.
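The significant-digit rules, the rounding step, and scientific notation can be sketched in Python. `count_sig_digits` and `round_sig` are hypothetical helpers. The counter takes the number as a string, because a float such as 8.600 silently drops its trailing zeros, and, following rule 4, it treats trailing zeros in a whole number without a decimal point (5000) as placeholders. The values 1.23 and 4.5 are made up for illustration. Python's `round` works on binary floats and rounds exact ties to even, so a value sitting exactly on a halfway point may not round the way it would by hand.

```python
import math


def count_sig_digits(number: str) -> int:
    """Count significant digits in a number written as a decimal string (no units).

    Leading zeros are never significant. Trailing zeros count only when a decimal
    point is present, so "5000" has 1 significant digit and "8.600" has 4.
    """
    digits = number.strip().lstrip("+-")
    has_point = "." in digits
    digits = digits.replace(".", "").lstrip("0")  # leading zeros only place the decimal point
    if not has_point:
        digits = digits.rstrip("0")  # trailing zeros in a whole number are placeholders
    return len(digits)


def round_sig(x: float, sig: int) -> float:
    """Round a nonzero value x to `sig` significant digits."""
    exponent = math.floor(math.log10(abs(x)))
    return round(x, sig - 1 - exponent)


# Examples taken from the counting rules.
for s in ["23.45", "8.600", "10025", "5000", "0.01020"]:
    print(f"{s:>8} -> {count_sig_digits(s)} significant digits")

# Multiplication: 1.23 (3 digits) x 4.5 (2 digits), so keep 2 significant digits.
print(round_sig(1.23 * 4.5, 2))  # 5.5

# Scientific notation (M x 10^n) using Python's "e" format specifier.
print(f"{602214076000000000000000:.5e}")  # 6.02214e+23
print(f"{0.0000000001:.0e}")              # 1e-10
```

The `e` format always prints one digit before the decimal point, matching the requirement that M lie between 1 and 10.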
ACCURACY IN MEASUREMENT

Accuracy refers to how close a measurement is to the accepted value. It may be expressed in terms of absolute or relative error. Absolute error is the difference between an observed (measured) value and the accepted value of a physical quantity. In the laboratory it is often referred to as experimental error.

$$\text{Absolute error} = \text{Observed} - \text{Accepted}$$

Relative error is the ratio of the absolute error to the accepted value, expressed as a percent. It is generally called the percent error. The accuracy of a measurement can only be determined if the accepted value of the measurement is known.

PRECISION IN MEASUREMENT

Precision relates to the uncertainty (+ or -) in a set of measurements. It is the agreement among the numerical values of a set of measurements taken in the same way. It is expressed as absolute or relative deviation.

EXAMPLE 1: The value read on a triple beam balance is 2.35 grams. This triple beam balance has an accuracy (absolute uncertainty) of plus or minus 0.1 gram. Because the balance cannot measure values smaller than 0.1 gram, the last digit in 2.35 grams is an estimate and is referred to as doubtful: it could be 2.36 grams, 2.34 grams, or even 2.33 grams. The value has three significant digits, but we are uncertain of the exact value of the last one because the measuring device only has an accuracy of 0.1 gram.

READING INSTRUMENTS

Some metric rulers have rounded ends, so be certain to begin reading at the zero mark rather than at the end of the ruler. When reading a meniscus, read the lowest point of a concave meniscus (such as water) and the highest point of a convex meniscus (such as mercury). When reading an analog instrument (one with a dial or meter, as opposed to a digital readout), look "head-on" at the pointer. Looking from the side gives an improper reading known as parallax error. High-quality instruments often have a mirror on the dial face to reduce parallax error.
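The absolute error and relative (percent) error defined above can be sketched as two small functions. The function names are hypothetical; the example reuses the 2.50 liter container that a student measured as 2.38 liters in the percent error section.

```python
def absolute_error(observed: float, accepted: float) -> float:
    """Observed minus accepted; the sign shows whether the measurement is high or low."""
    return observed - accepted


def percent_error(observed: float, accepted: float) -> float:
    """|observed - accepted| / accepted x 100 (the accepted value must be nonzero)."""
    return abs(observed - accepted) / abs(accepted) * 100


# The 2.50 liter container that a student measured as 2.38 liters.
print(f"absolute error = {absolute_error(2.38, 2.50):.2f} L")  # -0.12 L (reading is low)
print(f"percent error  = {percent_error(2.38, 2.50):.1f}%")    # 4.8%
```

The `.1f` format happens to give two significant digits here, matching the 0.12 liter difference; in general the displayed precision should follow the significant-digit rules rather than a fixed number of decimal places.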
Calibrating Instruments

Be consistent when calibrating instruments. Allow the proper warm-up period for electrical instruments, and use the same techniques and standards each time you calibrate.

MEASURES OF CENTRAL TENDENCY

Measures of central tendency summarize data. The most common are:
- mean (arithmetic, harmonic, and geometric)
- median
- mode

Arithmetic Mean

The most common measure is the arithmetic mean, or average. The mean is calculated by summing the values of all observations and dividing by the total number of observations. The arithmetic mean is used when only a single variable is involved; examples are weight, temperature, length, and test scores.

Median

The median is the central observation (measurement) when all observations are arranged in increasing order. It is the value above and below which lie an equal number of observations. If there is an even number of observations, the median is the midpoint between the two central observations. In grouped data, the median lies in the interval that contains the central observation and can be approximated by the mid-point of that interval. The median provides a better measure of central tendency than the mean when the data contain extremely large or small observations.

Mode

The mode is the most frequently occurring value(s) in a set of observations (measurements). For grouped data, the mode is represented by the mid-point of the interval(s) having the greatest frequency. It is possible for a population (group of measurements) to have more than one mode; a population with two modes is bimodal. More than one mode may indicate a potential problem with the experimental design.

SYMMETRY

A frequency curve is said to be symmetrical when the mean, mode, and median are equal. It is asymmetrical when the mean, mode, and median are not equal. Most frequency curves are asymmetrical: they lean to the right or to the left and are said to be skewed.
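Python's standard `statistics` module provides each of these measures. This minimal sketch applies them to a small set of hypothetical test scores chosen so that the set is bimodal. `geometric_mean` and `multimode` require Python 3.8 or later, and the geometric and harmonic means are meaningful here only because all values are positive.

```python
import statistics

# Hypothetical test scores; 72 and 85 each occur twice, so the set is bimodal.
scores = [72, 85, 90, 72, 85, 64, 98]

print("arithmetic mean:", round(statistics.mean(scores), 2))
print("geometric mean: ", round(statistics.geometric_mean(scores), 2))
print("harmonic mean:  ", round(statistics.harmonic_mean(scores), 2))
print("median:         ", statistics.median(scores))     # 85 (middle of 7 sorted values)
print("mode(s):        ", statistics.multimode(scores))  # [72, 85]

# With an even number of observations, the median is the midpoint of the two central values.
print("even-count median:", statistics.median([64, 72, 85, 90]))  # 78.5
```

For positive data the harmonic mean is never larger than the geometric mean, which in turn is never larger than the arithmetic mean.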
Skewed Frequency Curves

If the tail points to the right, the curve is said to be "positively skewed". If the tail points to the left, it is "negatively skewed".
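One way to put a number on the direction of skew (an addition here, not something the text prescribes) is the moment coefficient of skewness, g1 = m3 / m2^1.5, where m2 and m3 are the second and third moments about the mean. A positive g1 corresponds to a tail pointing right and a negative g1 to a tail pointing left. The function name and both data sets are hypothetical, and the function assumes the values are not all identical.

```python
import statistics


def moment_skewness(values):
    """Moment coefficient of skewness g1 = m3 / m2**1.5, using population moments."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((x - mean) ** 2 for x in values) / n  # second moment about the mean
    m3 = sum((x - mean) ** 3 for x in values) / n  # third moment about the mean
    return m3 / m2 ** 1.5


# Hypothetical measurements: one set with a long tail to the right, one to the left.
right_tailed = [1.1, 1.2, 1.2, 1.3, 1.3, 1.4, 2.9]
left_tailed = [1.0, 2.5, 2.6, 2.6, 2.7, 2.7, 2.8]

for name, data in [("right tail", right_tailed), ("left tail", left_tailed)]:
    g1 = moment_skewness(data)
    label = "positively skewed" if g1 > 0 else "negatively skewed" if g1 < 0 else "zero skewness"
    print(f"{name}: g1 = {g1:.2f} ({label}), mean = {statistics.fmean(data):.2f}, "
          f"median = {statistics.median(data):.2f}")
```

In both sets the mean is pulled toward the tail (1.49 against a median of 1.30 for the right-tailed set, 2.41 against 2.60 for the left-tailed set), which is why the mean, median, and mode separate in a skewed curve.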