Textbook's physics versus history of physics: the case of Classical

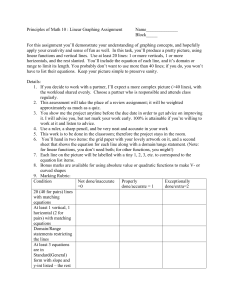
