Notes16

advertisement

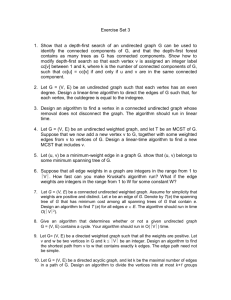

Mark W. Berry

CS 312

Lecture-Notes for 03/04/03

Review:

Comparison Sort –

Definition: Any sort algorithm using comparisons between keys, and nothing else about the keys, to arrange items in a predetermined order. An alternative is a restricted-universe sort, such as counting sort or bucket sort.

Can we answer the question: why is the lower bound $n \lg n$?

Answer: The lower bound, L(n), is a property of the specific problem (here, the sorting problem), not of any particular algorithm.

Lower bound theory says that no algorithm can do the job in fewer than L(n) time units for arbitrary inputs, i.e., that every sorting algorithm must take at least L(n) time in the worst case.

Example: For the problem of comparison-based sorting, if we only know that the input is orderable, then there are n! possible outcomes: each of the n! permutations of n things.

Since, within the comparison-swap model, we can only use comparisons to derive information, information theory tells us that $\lg n!$ is a lower bound on the number of comparisons necessary in the worst case to sort n things.

Proof: For the problem of comparison-based sorting,

The result of a given comparison between two list elements yields a single bit of information (0 = False, 1 = True).

Each of the n! permutations of {1, 2, …, n} has to be distinguished by a correct algorithm.

Thus, a comparison-based algorithm must perform enough comparisons to distinguish among n! possible outcomes.

Since each comparison yields only one bit of information, the question is: what is the minimum number of bits of information needed to allow n! different outcomes? The answer is $\lg n!$ bits.

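[Figure (3 bits): a binary tree of depth 3 whose leaves are the six permutations ⟨1,2,3⟩, ⟨1,3,2⟩, ⟨2,1,3⟩, ⟨2,3,1⟩, ⟨3,1,2⟩, ⟨3,2,1⟩, with bit-string labels running from 111 to 000.]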

For n = 3 there are 3! = 6 possible permutations of the input elements, so 3 bits (comparisons) are needed to tell them apart, since $2^2 = 4 < 6 \le 8 = 2^3$. In general, $\lg n!$ bits, and hence at least $\lg n!$ comparisons, are required to sort n things. Since every one of the n! permutations must appear at a leaf, the number of leaves L in the tree is at least n!. The maximum number of leaves in a binary tree of height h is $2^h$. Thus, $n! \le L \le 2^h$. To find the sorted sequence for a given input, just go down the tree, following the outcome of each comparison, until you reach the leaf for that input.

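So, solve for h:

$$\lg(n!) \le \lg(2^h) = h \lg 2 = h.$$

The bound $n! \le n^n$ only gives $\lg(n!) \le n \lg n$, which points the wrong way for a lower bound on h (indeed $h \ge n \lg n$ fails for n = 3, where h = 3 < 3 lg 3 ≈ 4.75). Instead use $n! \ge (n/2)^{n/2}$, since at least n/2 of the factors of n! are each at least n/2:

$$h \ge \lg(n!) \ge \frac{n}{2}\lg\frac{n}{2} = \Omega(n \lg n).$$

So why is it $\Omega(n \lg n)$ rather than $O(n \lg n)$? Because we need a lower bound: there must be constants c > 0 and $n_0$ such that $\lg(n!) \ge c \cdot n \lg n$ for all $n \ge n_0$, with c fixed for all values of n beyond $n_0$. For example, $c = 1/4$ and $n_0 = 4$ work, since $\frac{n}{2}(\lg n - 1) \ge \frac{n}{4}\lg n$ whenever $\lg n \ge 2$.

If a function is both O and Ω of the same bound, it is Θ of that bound:

$$g(n) = O(f(n)) \text{ and } g(n) = \Omega(f(n)) \;\Longrightarrow\; g(n) = \Theta(f(n)).$$

Since $\lg(n!) \le n \lg n$ as well, $\lg(n!) = \Theta(n \lg n)$.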

The answer is obtained as follows:

m bits of 0-1 information can produce $2^m$ different 0-1 sequences of length m.

Thus, to obtain n! different outcomes, we must have $2^m \ge n!$, i.e., $m \ge \log_2 n!$.

Thus, any comparison-based sorting algorithm must make at least $\log_2 n!$ comparisons to properly distinguish between the n! orderings of inputs of size n, showing (again) that a lower bound for the worst-case complexity of the problem of comparison-based sorting is $\log_2 n!$, which is $\Omega(n \lg n)$.
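To make the counting argument concrete, the short Python sketch below (an illustration, not part of the original notes; the function name `min_comparisons` is hypothetical) computes the smallest m with $2^m \ge n!$ using exact integer arithmetic, which is $\lceil \lg n! \rceil$, the information-theoretic minimum number of comparisons in the worst case.

```python
import math


def min_comparisons(n: int) -> int:
    """Smallest m with 2**m >= n!, i.e. ceil(lg n!), using exact integers."""
    outcomes = math.factorial(n)          # number of possible orderings
    return (outcomes - 1).bit_length()    # bits needed to label that many leaves


if __name__ == "__main__":
    for n in range(1, 11):
        m = min_comparisons(n)
        # m bits give 2**m sequences; one bit fewer would be too few
        assert 2 ** m >= math.factorial(n)
        assert m == 0 or 2 ** (m - 1) < math.factorial(n)
        print(n, math.factorial(n), m)
```

For n = 3 it returns 3, matching the 3 bits needed for the six permutations of three elements.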

If each comparison in a comparison-based solution is represented by a node, and the comparisons that follow each node are called the children of that node, we end up with the decision tree model: a tree that models all the branches the algorithm can take after each comparison.

A decision tree models the hierarchy of all possible branching resulting from decisions made by an algorithm.

The sequence of decisions and resulting branches taken by the algorithm depends on the input and corresponds to a path from the root to a leaf in the decision tree.

Leaf nodes correspond to outputs of the algorithm (solutions to the problem).

Each internal node corresponds to the performance of one or more basic operations (in a comparison-based algorithm, a comparison).

It follows that the depth of the decision tree is a lower bound on the worst-case complexity of the algorithm.

The decision tree method for obtaining lower bounds on the worst-case complexity of a problem consists of determining the minimum depth of a decision tree over all algorithms that solve the problem.

Example (lower bound for sorting): In this model for the sorting problem, any sorting algorithm can be viewed as a binary tree.

Each internal node of the decision tree represents a comparison between two input elements, and its two children correspond to the two possible comparisons made next, depending on the outcome of that comparison.

Each leaf node specifies an input permutation that is uniquely identified by the outcomes of the comparisons along the path from the root of the tree. That is, each leaf node corresponds to one of the n! possible orderings of a list of size n.

There must be at least n! leaf nodes.

Any binary tree having n! leaf nodes must have depth at least $\lg n!$.

That is, some path of the tree must have length at least $\lg n!$.

This follows because the number of nodes at any level of a binary tree can be at most twice the number on the previous level.

[Figure: decision tree whose internal nodes are the comparisons a1:a2 (root), a2:a3, and a1:a3, branching on the outcome of each comparison, with six leaves ⟨1,2,3⟩, ⟨1,3,2⟩, ⟨3,1,2⟩, ⟨2,1,3⟩, ⟨2,3,1⟩, ⟨3,2,1⟩.]

Decision tree for insertion sort operating on 3 elements. There are 3! = 6 possible permutations of the input elements, so the decision tree must have at least 6 leaves.
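One way to see this decision tree without the figure is to run insertion sort on every permutation of three elements and record the outcome of each key comparison; each distinct outcome sequence is one root-to-leaf path. The sketch below assumes a straightforward insertion sort (the helper name `insertion_sort_trace` is hypothetical); it compares element values rather than writing the comparisons as $a_i{:}a_j$, which amounts to the same comparisons between input elements.

```python
from itertools import permutations


def insertion_sort_trace(seq):
    """Insertion sort that also records each comparison outcome.
    Returns (sorted list, path), where path[k] is True if the k-th
    comparison found the element a[j] greater than the key."""
    a = list(seq)
    path = []
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        while j >= 0:
            greater = a[j] > key       # one internal node of the decision tree
            path.append(greater)
            if not greater:
                break
            a[j + 1] = a[j]            # shift the larger element right
            j -= 1
        a[j + 1] = key
    return a, tuple(path)


leaves = {}
for p in permutations([1, 2, 3]):
    result, path = insertion_sort_trace(p)
    assert result == [1, 2, 3]
    leaves[path] = p                   # each distinct path ends at its own leaf

print(len(leaves))                              # 6
print(max(len(path) for path in leaves))        # 3 (height of the tree)
```

It finds 6 distinct paths (one leaf per permutation) and a longest path of 3 comparisons, which meets the bound $\lceil \lg 3! \rceil = 3$.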

The execution of the sorting algorithm corresponds to tracing a path from the root of the decision tree to a leaf. At each internal node, a comparison $a_i \le a_j$ is made. When we come to a leaf, the sorting algorithm has established the ordering $a_{\pi(1)} \le a_{\pi(2)} \le \dots \le a_{\pi(n)}$, where π is the permutation at that leaf.

The length of the longest path from the root of a decision tree to any of its leaves represents the worst-case number of comparisons that the corresponding sorting algorithm performs.

The worst-case number of comparisons for a comparison-based sort corresponds to the height of its decision tree.

A lower bound on the heights of decision trees is therefore a lower bound on the running time of any comparison sort algorithm.

Theorem: Any decision tree that sorts n elements has height $\Omega(n \lg n)$.

Proof:

Consider a decision tree of height h that sorts n elements.

The height of the tree is the height of its root (the number of edges on the longest path from the root to a leaf), which equals the depth of the deepest leaf.

Since there are n! permutations of n elements, each permutation representing a distinct sorted order, the tree must have at least n! leaves.

Since a binary tree of height h has no more than $2^h$ leaves, we have

$$n! \le 2^h \;\Longrightarrow\; h \ge \lg(n!),$$

since the lg function is monotonically increasing.

From Stirling's approximation, we have $n! > (n/e)^n$, where $e = 2.718\ldots$, so

$$h \ge \lg\left(\frac{n}{e}\right)^n = n \lg n - n \lg e = \Omega(n \lg n).$$

Therefore, the worst-case complexity belongs to $\Omega(n \lg n)$.

More accurately,

$$\log_2 n! = n \log_2 n - n \log_2 e + \tfrac{1}{2}\log_2 n + O(1).$$
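The sketch below compares the exact value of $\log_2 n!$ (computed with `math.lgamma`, since $\ln \Gamma(n+1) = \ln n!$) with the first three terms of the series above. The gap should settle near a constant, about $\tfrac12\log_2(2\pi) \approx 1.33$, which is the O(1) term; the function names are illustrative only.

```python
import math


def lg_factorial(n: int) -> float:
    """lg(n!) up to floating-point error, via ln Gamma(n + 1) = ln n!."""
    return math.lgamma(n + 1) / math.log(2)


def stirling_terms(n: int) -> float:
    """n lg n - n lg e + (1/2) lg n: the series with its O(1) term dropped."""
    return n * math.log2(n) - n * math.log2(math.e) + 0.5 * math.log2(n)


for n in (10, 100, 1000, 10**6):
    exact = lg_factorial(n)
    gap = exact - stirling_terms(n)          # tends to (1/2) lg(2*pi), about 1.3257
    ratio = exact / (n * math.log2(n))       # tends to 1 slowly: lg n! = Theta(n lg n)
    print(f"n={n:>7}  lg n! = {exact:14.2f}  gap = {gap:.4f}  ratio = {ratio:.3f}")
```

The ratio column shows why the $-n \lg e$ term matters for moderate n even though $\lg n! = \Theta(n \lg n)$.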

If we consider all possible comparison trees that model algorithms for the searching problem (finding x in an array A of n elements), FIND(n) is bounded below by the length of the longest path from the root to a leaf in such a tree.

There must be at least n internal nodes in each of these trees, corresponding to the n possible successful occurrences of x in A.

If all internal nodes of a binary tree are at levels less than or equal to m (counting the root as level 1; a binary tree of height m has at most $2^{m+1} - 1$ nodes), then there are at most $2^m - 1$ internal nodes.

Thus, $n \le 2^m - 1$, and $\text{FIND}(n) = m \ge \lceil \lg(n+1) \rceil$.

Because every leaf in a valid decision tree must be reachable, the worst-case number of comparisons done by such a tree is the number of nodes on the longest path from the root to a leaf in the binary tree consisting of the comparison nodes.
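As a check on the bound $\text{FIND}(n) \ge \lceil \lg(n+1) \rceil$, the sketch below assumes that A is sorted and uses ordinary binary search, counting each three-way comparison of x against an element (less, equal, or greater) as one internal node of the comparison tree. The helper names are hypothetical. Its worst successful search takes exactly `n.bit_length()` comparisons, which equals $\lceil \lg(n+1) \rceil$, so binary search meets the lower bound.

```python
def binary_search_probes(a, x):
    """Binary search for x in sorted list a. Returns (index or -1, number of
    three-way comparisons, i.e. comparison-tree nodes visited)."""
    lo, hi, probes = 0, len(a) - 1, 0
    while lo <= hi:
        mid = (lo + hi) // 2
        probes += 1                    # one three-way comparison: x <, ==, or > a[mid]
        if x == a[mid]:
            return mid, probes
        if x < a[mid]:
            hi = mid - 1
        else:
            lo = mid + 1
    return -1, probes


def worst_successful(n):
    """Most comparisons binary search needs to find any one of n keys."""
    a = list(range(n))
    return max(binary_search_probes(a, x)[1] for x in a)


for n in (1, 7, 8, 100, 1000):
    # n.bit_length() == floor(lg n) + 1 == ceil(lg(n + 1)), the lower bound above
    print(n, worst_successful(n), n.bit_length())
```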

Graph Theory: We will briefly look at two types of search:

A. Breadth first search.

B. Depth first search.

Note: A tree has a hierarchy. A tree is a graph, but a general graph has no root or leaves, and trees cannot have loops or cycles. Compilers use directed acyclic graphs (DAGs).

Graphs –

1. Directed graph: edges are arrows, which must be followed in their direction.

2. Undirected graph: edges have no arrows and can be followed in either direction.

Breadth-first search: (visits level by level and not usually implemented in a tree)

Breadth-first search (BFS) is a traversal through a graph that touches all of the vertices reachable from a particular source vertex. In addition, the order of the traversal is such that the algorithm will explore all of the neighbors of a vertex before proceeding on to the neighbors of its neighbors. One way to think of breadth-first search is that it expands like a wave emanating from a stone dropped into a pool of water. Vertices in the same "wave" are the same distance from the source vertex. A vertex is discovered the first time it is encountered by the algorithm. A vertex is finished after all of its neighbors are explored. Here's an example to help make this clear. A graph is shown in the figure below, and the BFS discovery and finish orders for its vertices r, s, t, u, v, w, x, and y are listed below it.

Breadth-first search spreading through a graph.

order of discovery: s r w v t x u y

order of finish: s r w v t x u y

We start at vertex s and first visit r and w (the two neighbors of s). Once both neighbors of s are visited, we visit the neighbor of r (vertex v), then the neighbors of w, which are t and x (the discovery order between r and w does not matter). Finally we visit the neighbors of t and x, which are u and y.

For the algorithm to keep track of where it is in the graph, and which vertex to visit next, BFS needs to color the vertices. The place to put the color can either be inside the graph, or it can be passed into the algorithm as an argument.
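A minimal BFS sketch in Python, using a queue and a white/gray/black coloring (undiscovered, discovered, finished) kept in a dictionary separate from the graph, i.e., the "passed in alongside" option. Since the figure is not reproduced, the edge list is an assumption: a small undirected graph with the neighbors named in the walkthrough (s-r, s-w, r-v, w-t, w-x, t-u, x-y) plus a few extra edges (t-x, x-u, u-y) that do not change the visiting order. With adjacency lists in this order it reproduces the discovery order s r w v t x u y.

```python
from collections import deque

# Assumed undirected graph (the lecture figure is not reproduced here).
BFS_GRAPH = {
    "r": ["s", "v"],
    "s": ["r", "w"],
    "t": ["w", "x", "u"],
    "u": ["t", "x", "y"],
    "v": ["r"],
    "w": ["s", "t", "x"],
    "x": ["w", "t", "u", "y"],
    "y": ["x", "u"],
}

WHITE, GRAY, BLACK = "white", "gray", "black"  # undiscovered, discovered, finished


def bfs(graph, source):
    """Breadth-first search from source; returns discovery order, finish order,
    and each reachable vertex's distance (its 'wave') from the source."""
    color = {v: WHITE for v in graph}  # colors kept outside the graph structure
    dist = {source: 0}
    discovered, finished = [source], []
    color[source] = GRAY
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in graph[u]:
            if color[v] == WHITE:          # first time v is encountered
                color[v] = GRAY
                dist[v] = dist[u] + 1      # one wave farther out than u
                discovered.append(v)
                queue.append(v)
        color[u] = BLACK                   # all neighbors of u have been explored
        finished.append(u)
    return discovered, finished, dist


disc, fin, dist = bfs(BFS_GRAPH, "s")
print(" ".join(disc))  # s r w v t x u y
print(" ".join(fin))   # s r w v t x u y
```

Because a vertex is finished as soon as it leaves the queue, BFS finishes vertices in the same order it discovers them, as in the listing above.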

Depth-First Search: (LRP or RLP…preorder, inorder, postorder)

A depth-first search (DFS) visits all the vertices in a graph. When choosing which edge to explore next, this algorithm always chooses to go "deeper" into the graph. That is, it will pick the next adjacent unvisited vertex until reaching a vertex that has no unvisited adjacent vertices. The algorithm will then backtrack to the previous vertex and continue along any as-yet unexplored edges from that vertex. After DFS has visited all the reachable vertices from a particular source vertex, it chooses one of the remaining undiscovered vertices and continues the search. This process creates a set of depth-first trees, which together form the depth-first forest. A depth-first search categorizes the edges in the graph into three categories: tree edges, back edges, and forward or cross edges (it does not specify which). There are typically many valid depth-first forests for a given graph, and therefore many different (and equally valid) ways to categorize the edges.

One interesting property of depth-first search is that the discovery and finish times for each vertex form a parenthesis structure. If we use an open parenthesis when a vertex is discovered, and a close parenthesis when a vertex is finished, then the result is a properly nested set of parentheses. The figure below shows DFS applied to an undirected graph, with the edges labeled in the order they were explored. Below we list the vertices of the graph ordered by discovery and finish time, as well as show the parenthesis structure. DFS is used as the kernel for several other graph algorithms, including topological sort and two of the connected component algorithms. It can also be used to detect cycles.

Depth-first search on an undirected graph.

order of discovery: a b e d c f g h i

order of finish: d f c e b a i h g

parenthesis: (a (b (e (d d) (c (f f) c) e) b) a) (g (h (i i) h) g)
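The recursive sketch below records the discovery order, the finish order, and the parenthesis string. Because the figure is not reproduced, the graph is hypothetical: it contains only the tree edges implied by the parenthesis structure above (a-b, b-e, e-d, e-c, c-f, g-h, h-i), and any other edges of the original figure are omitted. Restarting from each undiscovered vertex yields a depth-first forest of two trees, rooted at a and g.

```python
# Hypothetical undirected graph: only the tree edges implied by the parenthesis
# structure; the original figure's other edges (if any) are not known here.
DFS_GRAPH = {
    "a": ["b"],
    "b": ["a", "e"],
    "c": ["e", "f"],
    "d": ["e"],
    "e": ["b", "d", "c"],
    "f": ["c"],
    "g": ["h"],
    "h": ["g", "i"],
    "i": ["h"],
}


def dfs(graph):
    """Depth-first search over every vertex; returns discovery order, finish
    order, and the parenthesis structure of discover/finish events."""
    visited = set()
    discovered, finished, parens = [], [], []

    def visit(u):
        visited.add(u)
        discovered.append(u)
        parens.append("(" + u)         # open parenthesis when u is discovered
        for v in graph[u]:
            if v not in visited:       # go deeper before trying u's other edges
                visit(v)
        finished.append(u)             # all of u's edges explored: backtrack
        parens.append(u + ")")         # close parenthesis when u is finished

    for u in graph:                    # restart from each undiscovered vertex,
        if u not in visited:           # building the depth-first forest
            visit(u)
    return discovered, finished, " ".join(parens)


disc, fin, parens = dfs(DFS_GRAPH)
print(" ".join(disc))  # a b e d c f g h i
print(" ".join(fin))   # d f c e b a i h g
print(parens)          # (a (b (e (d d) (c (f f) c) e) b) a) (g (h (i i) h) g)
```

The nesting follows directly from the recursion: a vertex's close parenthesis is emitted only after every vertex discovered inside its call has been closed.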

Where do we use graphs in applications? Compilers, databases, video games, networks, etc.

Today’s Topic:

SECURITY, ENCRYPTION, CRYPTOGRAPHY.

Cryptography relies on the fact that some things are easy to do while related things are difficult to do. Given the result of the easy operation, recovering the input is believed to require more than polynomial time, i.e., more than $O(n^k)$ time for any constant k.

Number Theory: Notation: $d \mid a$ (read "d divides a") means that $dk = a$ for some integer $k \in \mathbb{Z}$; this is the definition of divides. So, if d divides a, there is no remainder: $a \bmod d = 0$.

Assume a is an integer greater than 1. Then a is prime if and only if its only positive divisors are 1 and a (that is, $d \mid a$ implies d = 1 or d = a); otherwise a is composite. 1 and 0 are neither prime nor composite, and no number less than 0 is composite either. So how do we find out if a number is prime or composite? We can begin dividing by consecutive numbers until we determine whether it is prime (it suffices to try divisors up to $\sqrt{a}$). This is not very efficient for large numbers. This is one of the bases of cryptography: it is easy to verify a given… but difficult to determine the next.
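A minimal trial-division sketch (the name `is_prime` is illustrative). It stops at $\sqrt{a}$, because a composite a must have a divisor no larger than $\sqrt{a}$. Even so, it can take about $\sqrt{a}$ divisions, which is exponential in the number of digits of a, so it is impractical for the very large numbers used in cryptography.

```python
def is_prime(a: int) -> bool:
    """Trial division: try consecutive divisors d = 2, 3, ... while d*d <= a."""
    if a < 2:                 # 0, 1 and negative numbers are not prime
        return False
    d = 2
    while d * d <= a:         # a composite a has a divisor d <= sqrt(a)
        if a % d == 0:        # d | a, so a is composite
            return False
        d += 1
    return True


print([p for p in range(30) if is_prime(p)])  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```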

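Division Theorem: For any integer $a \in \mathbb{Z}$ and any positive integer $n \in \mathbb{Z}^+$, there are unique integers $q, r \in \mathbb{Z}$ with $0 \le r < n$ such that

$$a = qn + r, \qquad q = \lfloor a/n \rfloor, \qquad r = a \bmod n.$$

($\mathbb{N}$ = natural numbers, $\mathbb{Z}$ = integers, $\mathbb{Z}^+$ = positive integers.)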
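In Python, `divmod` already follows the division theorem for a positive divisor: `//` rounds toward negative infinity, so $q = \lfloor a/n \rfloor$ and $0 \le r < n$ even when a is negative. (C and Java, by contrast, truncate toward zero, so `-4 % 7` is `-4` there.) The wrapper name `division` is hypothetical.

```python
def division(a: int, n: int):
    """Return (q, r) with a = q*n + r and 0 <= r < n, for a positive n."""
    if n <= 0:
        raise ValueError("n must be a positive integer")
    q, r = divmod(a, n)                # // floors, so q = floor(a / n)
    assert a == q * n + r and 0 <= r < n
    return q, r


print(division(10, 7))   # (1, 3)
print(division(-4, 7))   # (-1, 3)
```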

Divide the integers into equivalence classes:

Definition of equivalence class: $[a]_n = \{a + kn : k \in \mathbb{Z}\}$. Taking a = 0, 1, …, n − 1 gives the n equivalence classes modulo n.

Example: $[3]_7 = \{\dots, -4, 3, 10, \dots\}$. The term with k = 0 is 3 itself, and the class contains every integer that leaves remainder 3 when divided by 7. Thus 3 represents the whole set, and any other member represents it equally well.

$a \bmod n \in [a]_n$

$a \equiv b \pmod n$: here mod n returns one number greater than or equal to 0 but less than n.

If this is true, then $a \bmod n = b \bmod n$. For example,

$10 \bmod 7 = 3$, so $[10]_7 = [3]_7 = \{\dots, -4, 3, 10, \dots\}$.
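A small sketch of residue classes (the function names are hypothetical): it lists a finite window of $[a]_n$ and tests congruence by comparing remainders, relying on Python's `%` returning a value in $[0, n)$ for positive n.

```python
def residue_class(a, n, ks=range(-2, 3)):
    """Finite window {a + k*n : k in ks} of the class [a]_n."""
    return [a + k * n for k in ks]


def congruent(a, b, n):
    """a is congruent to b (mod n) exactly when a mod n == b mod n."""
    return a % n == b % n            # for n > 0, % returns a value in [0, n)


print(residue_class(3, 7))           # [-11, -4, 3, 10, 17]
print(congruent(10, 3, 7))           # True
print(congruent(-4, 10, 7))          # True
```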

Thursday’s Topic: COMMON DIVISORS & RSA (Ronald Rivest, Adi Shamir & Leonard Adleman)

Caesar Code:

The military needs to keep information secret and out of enemy hands. Messages are encrypted or hidden, and the messenger doesn't know the message or the code. A classic example of hiding a message (Herodotus attributes it to Histiaeus rather than Caesar): the sender shaved his messenger's head, wrote the message on the scalp, waited for the hair to grow back, and then sent the messenger on his mission.

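Encryption scheme for 26 letters –

M − 3 = 13 − 3 = 10 = J (numbering the letters A = 1, …, Z = 26).

A − 3 = 1 − 3 = −2, so we have to wrap around. Numbering the letters A = 0, …, Z = 25 instead, each letter $a_i$ of the message is encrypted as

$(a_i - 3) \bmod 26$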

Decryption scheme for 26 letters –

$(M_i + 3) \bmod 26$, applied to each letter $M_i$ of the encrypted message.

This works because subtracting 3 and adding 3 (mod 26) are inverse operations. Any operation paired with its inverse will work, but the algorithm cannot be public: anyone who knows it can decrypt the message.
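A minimal sketch of the shift cipher above, assuming 0-based letter numbering (A = 0, …, Z = 25) so that a single `% 26` handles the wrap-around; Python's `%` returns a non-negative result, so A maps to X. The function names are hypothetical, and passing non-letters through unchanged is also an assumption.

```python
import string

ALPHABET = string.ascii_uppercase      # letters numbered A = 0, ..., Z = 25


def shift(text, k):
    """Shift every letter by k positions mod 26; other characters pass through."""
    out = []
    for ch in text.upper():
        if ch in ALPHABET:
            out.append(ALPHABET[(ALPHABET.index(ch) + k) % 26])  # wrap around
        else:
            out.append(ch)
    return "".join(out)


def encrypt(plain):
    return shift(plain, -3)            # (a_i - 3) mod 26


def decrypt(cipher):
    return shift(cipher, 3)            # (M_i + 3) mod 26 undoes the encryption


print(encrypt("MABC"))                 # JXYZ
print(decrypt("JXYZ"))                 # MABC
```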

In RSA we can expose the algorithm because the private key is kept private.