Bayes

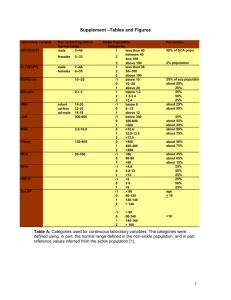

advertisement

Chapter 1 Basic Concepts Thomas Bayes (1702-1761): two articles from his pen published posthumously in 1764 by his friend Richard Price. Laplace (1774): stated the theorem on inverse probability in general form. Jeffreys (1939): rediscovered Laplace’s work. Example 1: yi , i 1, 2,, n : the lifetime of batteries 2 Assume y i ~ N , . Then, p y | , 2 n 1 exp 2 2 n y i 1 i 2 t , y y1 , , yn . To obtain the information about the values of and , two methods 2 are available: (a) Sampling theory (frequentist): and 2 are the hypothetical true values. We can use 2 point estimation: finding some statistics ˆ y and ˆ y to estimate and , for example, 2 n ˆ y y y i 1 n n i 2 , ˆ y y i 1 y 2 i n 1 . interval estimation: finding an interval estimate ˆ1 y , ˆ2 y 1 and ˆ12 y , ˆ 22 y for estimate for and , for example, the interval 2 , s s , Z ~ N (0,1). y z , y z , P Z z 2 2 2 2 n n (b) Bayesian approach: 2 Introduce a prior density , for and . Then, after some 2 manipulations, the posterior density (conditional density given y) f , 2 | y can be obtained. Based on the posterior density, inferences about and 2 can be obtained. Example 2: X ~ b10, p : the number of wins for some gambler in 10 bets, where p is the probability of winning. Then, 10 10 x f x | p p x 1 p , x 1,2, ,10. x (a) Sampling theory (frequentist): To estimate the parameter p, we can employ the maximum likelihood principle. That is, we try to find the estimate p̂ to maximize the likelihood function 10 x 10 x l p | x f x | p p 1 p . x 2 x 10 , For example, as Thus, 10 1010 l p | x l p | 10 p10 1 p p10 . 10 ˆ 1 . It is a sensible estimate. Since we can win all the p time, the sensible estimate of the probability of winning should be 1. On the other hand, as Thus, x 0, 10 100 10 l p | x l p | 0 p 0 1 p 1 p . 0 ˆ 0 . Since we lost all the time, the sensible estimate of p the probability of winning should be 0. In general, as ˆ p xn, n , n 0,1,,10, 10 maximize the likelihood function. (b) Bayesian approach:: p : prior density for p, i.e., prior beliefs in terms of probabilities of various possible values of p being true. Let p r a b a 1 b 1 p 1 p Beta a, b . r a r b Thus, if we know the gambler is a professional gambler, then we can use the following beta density function, p 2 p Beta2,1 , to describe the winning probability p of the gambler. The plot of the density function is 3 1.0 0.0 0.5 prior 1.5 2.0 Beta(2,1) 0.0 0.2 0.4 0.6 0.8 1.0 p Since a professional gambler is likely to win, higher probability is assigned to the large value of p. If we know the gambler is a gambler with bad luck, then we can use the following beta density function, p 21 p Beta1,2 , to describe the winning probability p of the gambler. The plot of the density function is 1.0 0.0 0.5 prior 1.5 2.0 Beta(1,2) 0.0 0.2 0.4 0.6 0.8 1.0 p Since a gambler with bad luck is likely to lose, higher probability is 4 assigned to the small value of p. If we feel the winning probability is more likely to be around 0.5, then we can use the following beta density function, p 6 p1 p Beta2,2 , to describe the winning probability p of the gambler. The plot of the density function is 0.0 0.5 prior 1.0 1.5 Beat(2,2) 0.0 0.2 0.4 0.6 0.8 1.0 p If we don’t have any information about the gambler, then we can use the following beta density function, p 1 Beta1,1 , to describe the winning probability p of the gambler. The plot of the density function is 1.0 0.9 0.8 prior 1.1 1.2 Beta(1,1) 0.0 0.2 0.4 0.6 p 5 0.8 1.0 posterior density of p given x conditiona l density of p given x f x, p joint density of x and p f p | x f x marginal density of x f x | p p f x | p p l p | x p f x Thus, the posterior density of p given x is f p | x p l p | x r a b a 1 b 1 n n x p 1 p p x 1 p r a r b x ca, b, x p x a 1 1 p b 10 x 1 In fact, r a b 10 b 10 x 1 p xa 1 1 p r x a r b 10 x Beta x a, b 10 x f p | x Then, we can use some statistic based on the posterior density, for example, the posterior mean E p | x pf p | x dp 1 0 As xa a b 10 . xn, ˆ E p | n p an a b 10 is different from the maximum likelihood estimate n 10 . Note: f p | x p l p | x the original informatio n about p the informatio n from the data the new informatio n about p given the data 6 Properties of Bayesian Analysis: 1. Precise assumption will lead to consequent inference. 2. Bayesian analysis automatically makes use of all the information from the data. 3. The inferences unacceptable must come from inappropriate assumption and not from inadequacies of the inferential system. 4. Awkward problems encountered in sampling theory do not arise. 5. Bayesian inference provides a satisfactory way of explicitly introducing and keeping track of assumptions about prior knowledge or ignorance. 1.1 Introduction Goal: statistical decision theory is concerned with the making of decisions in the presence of statistical knowledge which sheds lights on some of the uncertainties involved in the decision problem. 3 Types of Information: 1. Sample information: the information from observations. 2.Decision information: the information about the possible consequences of the decisions, for example, the loss due to a wrong decision. 3. Prior information: the information about the parameter. 1.2 Basic Elements : parameter. : parameter space consisti1ng of all possible values of 7 . a: decisions or actions (or some statistic used to estimate : the set of all possible actions. ). L , a : R : loss function L1 , a1 : the loss when the parameter value is 1 a1 and the action is taken. X X 1 , X 2 ,, X n : X 1 , X n are independent observations from a common distribution : sample space (all the possible values of X, usually subset of will be a R n ). f x | dx A f x1 ,, xn | dx1 dxn A X P X A dF x | A f x | A f x1 , , xn | A where F X x | is the cumulative distribution of X. hx f x | dx E h X hx dF X x | . h x f x | Example 2 (continue): Let A 1, 3, 5, 7, 9. Then, 8 10 10 10 x 10 x Pp X A p x 1 p p x 1 p xA x x1, 3, 5, 7 , 9 x 10 10 10 10 10 9 7 5 3 p1 p p 3 1 p p 5 1 p p 7 1 p p 9 1 p 1 3 5 7 9 Let X 10 . a1 the estimate of p Also, let X X h X L p , p loss function 10 10 Then, X X E p h X E p L p, E p p 10 10 10 p X Ep p p0 . 10 10 Example 3: Let X ~ beta ,1 and hx x 2 . Then, E h X hx f x | dx x 2x 1dx 1 0 2 Example 4: 9 x 2 |10 2 . a1 : sell the stock. a2 : keep the stock. 1 : stock price will go down. 2 : stock price will go up. Let L1 , a1 500, L1 , a2 300, L 2 , a1 1000, L 2 , a2 300 The above loss function can be summarized by 1 2 a1 a2 -500 1000 300 -300 Note that there is no sample information from an associated statistical experiment in this example. We call such a problem no-data problem. 1.3 Expected Loss, Decision Rules, and Risk Motivation: In the previous section, we introduced the loss of making a decision (taking an action). In this section, we consider the “expected” loss of making a decision. Two types of expected loss are considered: Bayesian expected loss Frequentist risk (a) Bayesian Expected Loss: Definition: The Bayesian expected loss of an action a is 10 , a E L , a L , a dF L , a d where distribution of and F are the prior density and cumulative , respectively. Example 4 (continue): Let 1 0.99, 2 0.01 . Then, , a1 E L , a1 1 L1 , a1 2 L 2 , a1 0.99 500 0.011000 485 and , a2 E L , a2 1 L1 , a2 2 L 2 , a2 0.99 300 0.01 300 294 (b) Frequentist Risk: Definition: A (nonrandomized) decision rule R . If X is a function from into X x0 is observed, then x0 is the action that will be taken. Two decision rules, 1 and 2 , are considered equivalent if P 1 X 2 X 1, for every . Definition: The risk function of a decision rule X 11 is defined by R , E L , X L , x dF X x | L , x f x | dx Definition: If R , 1 R , 2 , for all , , then the decision rule 1 with strict inequality for some R-better than the decision rule is 2 . A decision rule is admissible if there exists no R-better decision rule. On the other hand, a decision rule is inadmissible if there does exist an R-better decision rule. Note: A rule 1 is R-equivalent to 2 if R , 1 R , 2 , for all . Example 4 (continue): R1 , a1 L1 , a1 500 300 L1 , a2 R1 , a2 and R 2 , a1 L 2 , a1 1000 300 L 2 , a2 R 2 , a2 . Therefore, both a1 and a2 are admissible. Example 5: Let 12 X ~ N ,1, L , a a , 1 X X , 2 X 2 Note that E X and X . 2 Var X 1. Then, R , 1 E L , 1 X E L , X E X 2 Var X 1 and X X R , 2 E L , 2 X E L , E 2 2 2 X X E E 2 2 2 2 X 2 X 2 E 2 2 2 2 2 4 2 2 2 2 X X E E 2 2 4 2 2 2 Var ( X ) 2 X Var 0 4 4 4 2 2 1 2 4 4 Definition: The Bayes risk of a decision rule on with respect to a prior distribution is defined as r , E R , R , a d Example 5 (continue): Let 13 ~ N 0, 2 , Then, 1 2 2 2 e 2 2 r , 1 E R , 1 E 1 1 and 2 1 r , 2 E R , 2 E 4 4 1 E 2 1 Var ( ) 4 4 4 4 1 2 4 4 1.4 Decision Principles The principles used to select a sensible decision are: (a) Conditional Bayes Decision Principle (b) Frequentist Decision Principle. (a) Conditional Bayes Decision Principle: Choose an action a A minimizing a Bayes action and will be denoted a , a . Such a will be called . Example 4 (continue): Let A a1 , a2 , 1 0.99, 2 0.01 . Thus, 14 , a1 485, , a2 294 . Therefore, a a1 . (b) Frequentist Decision Principle: 3 most important frequentist decision principle: Bayes risk principle Minimax principle Invariance principle (1) Bayes Risk Principle: 1 , 2 D , a decision Let D be the class of the decision rules. Then, for rule 1 is preferred to a rule 2 based on Bayes risk principle if r , 1 r , 2 . A decision rule minimizing r , among all decision rules in class D is called a Bayes rule and will be denoted as . The quantity r r , is called Bayes risk for . Example 5 (continue): X ~ N ,1, ~ N 0, 2 , D cx : c is any constant . 15 Let c X cX . Then, R , c E cX E cX E cX c c 1 2 2 2 E cX c 2cX c c 1 c 1 2 2 2 c 2Var X c 1 2 2 c 2 c 1 2 2 and , c E R , c E c 2 c 12 2 c 2 c 1 E 2 2 c 2 c 1 2 2 Note that , c is a function of c. , c attains its 2 minimum as c , 1 2 2 ( f c , c c c 1 , f c 0 c ) 1 2 2 2 2 ' Thus, 2 1 2 2 X X 2 1 is the Bayes estimator. In addition, 16 2 r r , 2 2 2 1 1 2 2 2 2 1 2 1 4 2 2 2 1 1 2 2 2 1 2 1 2 2 2 1 2 Example 4 (continue): Let D a1 , a2 , 1 0.99, 2 0.01. Then, r , a1 E R , a1 E L , a1 485 and r , a2 E R , a2 E L , a2 294 . a1 Thus, is the Bayes estimator. Note: In a no-data problem, the Bayes risk (frequentist principle) is equivalent to the Bayes expected loss (conditional Bayes principle). Further, the Bayes risk principle will give the same answer as the conditional Bayes decision principle. Definition: Let X X 1 , X 2 ,, X n have the probability distribution function (or probability density function) f x | f x1 , x2 ,, xn | with prior density and prior cumulative distribution 17 and F , respectively. Then, the marginal density or distribution X X 1 , X 2 ,, X n of mx mx1 , x2 ,, xn is f x | d f x | dF f x | The posterior density or distribution of given x f | x f | x1 , x2 ,, xn The posterior expectation of g given is f x | mx x . is g f x | d g f | x d m x E f |x g g f | x g f x | m x Very Important Result: Let X X 1 , X 2 ,, X n have the probability distribution function (or probability density function) f x | f x1 , x2 ,, xn | with prior density and prior cumulative distribution and F , respectively. Suppose the following two assumptions hold: (a) There exists an estimator 0 with finite Bayes risk. 18 (b) For almost all x , there exists a value x minimizing L , x f | x d f | x L , x , E L , x f | x Then, (a) if L , a a g 2 , then g f | x d f | x g x E g f | x and, more generally, if L , a w a g 2 , then w g f | x d f | x w f | x E w g x f | x w w g f | x E w f | x (b) if then L , a a x , is the median of the posterior density or distribution 19 f | x given x . Further, if of k 0 a , a 0 L , a , k1 a , a 0 then x is the k0 k0 k1 percentile of the posterior density or f | x distribution of given x . (c) if 0 when a c L , a 1 when a c then, x is the midpoint of the interval I of length 2c which maximizing f | x d I P I | x f | x I [Outline of proof] (a) , a E f |x L , x E f |x w a g 2 E f |x w a 2 2ag g 2 E f |x w a 2 2 E f |x g w a E f |x g 2 w Thus 20 , a 2 E f |x w a 2 E f |x g w 0 a E f |x g w a E f |x w (b) Without loss of generality, assume m is the median of f | x . We want to prove , m , a E f |x L , m L , a 0 , a m . for Since L , m L , a m a m a m a, m a 2 m a, m a a m, m m a a then E f |x L , m L , a m a P m | x 2 m a Pm a | x a m P a | x m a 2 m a m a P m | x a m Pm a | x m a m a a m P a | x m a P m | x a m P m | x ma am 0 2 2 21 [Intuition of the above proof:] a1 a3 a2 c1 c3 c2 3 We want to find a point c such that a i 1 2 i 1 i achieves its c a2 , minimum. As 3 ca ai a2 a1 a2 a2 a2 a3 a3 a1 . As c c1 3 c i 1 ai c1 a3 a3 a1 . 1 As 3 c c2 c2 ai c2 a1 c2 a2 c2 a3 a3 a1 c2 a2 i 1 , a3 a1 As c c3 3 c i 1 3 ai c3 a1 a3 a1 . 3 Therefore, As c a2 , ca i 1 i achieves its minimum. (2) Minimax Principle: A decision rule principle if 1 is preferred to a rule 2 based on the minimax sup R , 1 sup R , 2 . 22 A decision minimizing sup R , among all decision rules in M class D is called a minimax decision rule, i.e., sup R , M inf sup R , . D Example 5 (continue): D cx : c is any constant and R , c c 2 c 1 2 . 2 Thus, 1 if c 1 2 sup R , c sup c 2 1 c 2 . if c 1 Therefore, M 1 X X is the minimax decision rule. Example 4 (continue): D a1 , a2 . Then, sup R , a1 sup L , a1 1000 and sup R , a 2 sup L , a 2 300 Thus, . M a2 . (3) Invariance Principle: If two problems have identical formal structures (i.e., the same sample space, parameter space, density, and loss function), the same decision rule should be obtained based on the invariance principle. 23 Example 6: X: the decay time of a certain atomic particle (in seconds). Let X be exponentially distributed with mean f x | 1 e x Suppose we want to estimate the mean , ,0 x . . Thus, a sensible loss function is L , a 1 2 a 2 a 1 2 . Suppose Y: the decay time of a certain atomic particle (in minutes). Then, X 1 y Y , f y | e , 0 y , 60 60 . Thus, 2 a 2 a 60 a L , a 1 1 1 L , a 60 2 where a a 60 .. Let X : the decision rule used to estimate and Y : the decision rule used to estimate . 24 , Based on the invariance principle, X X Y X 60 Y 60 . 60 60 The above augments holds for any transformation of the form Y cX , c R , based on the invariance principle. Then, 1 c 1 1 X X Y cX X X 1 kX , k 1 c c Thus, X kX is the decision rule based on the invariance principle. 1.5 Foundations There are several fundamental principles discussed in this section. They are : (a) Misuse of Classical Inference Procedure (b) Frequentist Perspective (c) Conditional Perspective (d) Likelihood Principle (a) Misuse of Classical Inference Procedure: Example 7: Let X 1 , X 2 ,, X n ~ N ,1 In classical inference problem, H 0 : 0 v.s. H1 : 0 , the rejection rule is 25 n n x 1.96, x as 0.05. x i i 1 n , 10 24 Assume the true mean 10 , n 10 , and X ~ N 1010 ,1024 Suppose x 10 11 . , then n x 10 24 10 11 10 1.96 and we reject H 0 . Intuitively, x 10 11 seems to strongly indicate H 0 should be true. However, for a large sample size, even as x is very close to 0, the classical inference method still indicates the rejection of H 0 . The above result seems to contradict the intuition. Note: it might be more sensible to test, for example, H 0 : 103 v.s. H1 : 103 . Example 8: Let X 1 , X 2 ,, X 100 ~ N ,1 In classical inference problem, H 0 : 0 v.s. H1 : 0 , 26 the rejection rule is 100 n x 10 x 1.645, x x i 1 i 100 as 0.05. , If x 0.164,. then 10x 1.64 1.645 and we do not reject H 0 . However, as 0.51 , p value PX x PX 1.64 0.0505 0.51 , we then reject H 0 . (b) Frequentist Perspective: Example 9: Let X 1 , X 2 ,, X 100 ~ N ,1 In classical inference problem, H 0 : 0 v.s. H1 : 1 , the rejection rule is 100 n x 10 x 1.645, x x i 1 i 100 , as 0.05. By employing the above rejection rule, about 5% of all rejection of the null hypothesis will actually be in error as H 0 is true. However, suppose the parameter values 0 and 1 occur equally often in repetitive use of the test. Thus, the chance of H 0 being true is 0.5. Therefore, correctly speaking, 5% error rate is only correct for 27 50% repetitive uses. That is, one can not make useful statement about the actual error rate incurred in repetitive use without knowing R , for all (c) . Conditionalt Perspective: Example 10: Frequentist viewpoints: X 1 , X 2 are independent with identical distribution, P X i 1 P X i 1 1 . 2 Then, X1 X 2 X 1 , X 2 2 X 1 1 can be used to estimate if X 1 X 2 if X 1 X 2 . In addition, P X 1 , X 2 P X 1 1, X 2 1 or X 1 1, X 2 1 P X 1 1, X 2 1 1 1 1 1 2 2 2 2 2 0.75 Thus, a frequentist claims 75% confidence procedure. Conditional viewpoints: Given X 1 X 2 , X 1 , X 2 is 100% certain to estimate 28 correctly, i.e., P X1 , X 2 | X1 X 2 1 . Given X 1 X 2 , X 1 , X 2 is 50% certain to estimate correctly, i.e., P X1, X 2 | X1 X 2 0.5 . Example 11: X 1 0.005 0.0051 1.02 f x | 0 f x | 1 f x | 1 f x | 0 2 0.005 0.9849 196.98 3 0.99 0.01 0.01 X 1 : some index (today) indicating the stock (tomorrow) will not go up or go down X 2 : some index (today) indicating the stock (tomorrow) will go up X 3 : some index (today) indicating the stock (tomorrow) will go down 0 : the stock (tomorrow) will go down. 1 : the stock (tomorrow) will go up Frequentist viewpoints: To test H 0 : 0 v.s. H1 : 1 , by the most powerful test with 0.01, we reject H 0 as X 1, 2 since P X 1, 2 | 0 0.005 0.005 0.01. Thus, as X 1 , we reject H 0 and conclude the stock will go up. This conclusion might not be very convincing since the index does not indicate the rise of the stock. 29 Conditional viewpoints: As X 1 , f 1 | 1 1.02 . f 1 | 0 Thus, f 1 | 1 and f 1 | 0 are very close to each other. Therefore, based on conditional viewpoints, about 50% chance, the stock will go up tomorrow. Example 12: Suppose there are two laboratories, one in Kaohsiung and the other in Taichung. Then, we flip a coin to decide the laboratory we will perform an experiment at: Head: Kaohsiung ; Tail: Taichung Assume the coin comes up tail. Then, the laboratory in Taichung should be used. Question: should we need to perform another experiment in Kaohsiung in order to develop report? Frequentist viewpoints: we have to call for averaging over all possible data including data obtained in Kaohsiung. Conditional viewpoints: we don’t need to perform another experiment in Kaohsiung. We can make statistical inference based on the data we have now. The Weak Conditionality Principle: Two experiments E1 or E2 can be performed to draw information about . Then, the actual information about should depend only on the experiment E j j 1 or 2 that is actually 30 performed. (d) Likelihood Principle: Definition: For observed data x, the function l f x | , considered as a function of , is called the likelihood function. Likelihood Principle: All relevant experimental information is contained in the likelihood function for the observed x. Two likelihood functions contain the same information about if they are proportional to each other. Example 13: : the probability that a coin comes up head. Suppose we want to know if the coin is fair, i.e., H0 : 1 1 v.s. H1 : , 2 2 with 0.05. Then, we flip a coin in a series of trials, 9 heads and 3 tails. Let X : the number of heads. Two likelihood functions can be used. They are: 1. Binomial: n n x X ~ B12, p , l1 f1 x | x 1 x In this example, 12 12 9 3 l1 f1 9 | 9 1 220 9 1 9 31 2. Negative Binomial: n x 1 x 1 n X ~ NBn, , l2 f 2 x | x In this example, we throw a coin until 3 tails come up. Therefore, n 3, x 9 and 3 9 1 9 1 3 55 9 1 3 l2 f 2 9 | 9 By likelihood principle, l1 and l2 contain the same information. Thus, intuitively, the same conclusion should be achieved based on the two likelihood functions. However, classical statistical inference would result in bizarre conclusions from frequentist point of view. 1. Binomial: The reject rule is X c, c is some constant. Thus, in this example, p value P X 9 | 1 1 1 f1 9 | f1 10 | f1 11 | 2 2 2 0.075 0.05 1 f 12 | 2 Thus, we do not reject H 0 and conclude the coin is fair. 2. Negative Binomial: The reject rule is X c, c is some constant. Thus, in this example, 32 p value P X 9 | 1 1 f 2 9 | f 2 10 | 2 2 0.0325 0.05 Thus, we reject H 0 and conclude the coin is not fair. Note: The “robust” Bayesian paradigm which takes into account uncertainity in the prior is fundamentally correct paradigm. Chapter 2 Bayesian Analysis 2.1 Posterior Distribution I Discrete case: Motivating example 1: 0 : no AIDS, 1 : AIDS 0 0.99, 1 0.01 Let positive (indicate AIDS) 1 : X 0 : negative (indicate no AIDS) From past experience and records, we know f (0 | 0 ) 0.97, f (1 | 0 ) 0.03, f 0 | 1 0.02, f 1 | 1 0.98 Objective: find f 0 | 1 , where f 0 | 1 : the conditional probability that a patient really has no AIDS given the test indicating AIDS. 33 Note: high f 0 | 0 , f 1 | 1 imply the AIDS is accurate in judging the infection of AIDS. Bayes’s Theorem (two events): P( A | B) P( A B) P( A) P( B | A) P( B) P( A) P( B | A) P( A c ) P( B | A c ) [Derivation of Bayes’s theorem (two events)]: A Ac B B∩A B∩Ac We want to know P( A | B) P( B A) P( B) . Since P( B A) P( A) P( B | A) , and P( B) P( B A) P( B Ac ) P( A) P( B | A) P( Ac ) P( B | Ac ) , thus, P( A | B) P( B A) P( B A) P( A) P( B | A) P( B) P( B A) P( B Ac ) P( A) P( B | A) P( Ac ) P( B | Ac ) Similarly, if the parameter taking two values 0 and 1 and the data X taking values at c1 , c2 ,, cn , , then let 0 A , 1 Ac 34 and X ck B . 0 X= ck (X= c k ) 1 (X= c k ) ∩ 1 0 Since P(X ck 0 ) P( 0 ) P(X ck | 0 ) 0 f ck | 0 , and P(X ck ) P(X ck 0 ) P(X ck 1 ) P( 0 ) P(X ck | 0 ) P( 1 ) P(X ck | 1 ) 0 f ck | 0 1 f ck | 1 , thus, f 0 | c k P ( 0 | X ck ) P (X c k 0 ) P (X ck ) P (X ck 0 ) P (X c k 0 ) P (X c k 1 ) 0 f ck | 0 0 f ck | 0 1 f ck | 1 Motivating example 1 (continue): By Bayes’s theorem, f 0 | 1 P ( 0 | X 1) 0 f 1 | 0 0 f 1 | 0 1 f 1 | 1 0.99 0.03 0.99 0.03 0.01 0.98 0.7519 A patient with test positive still has high probability (0.7519) of no AIDS. 35 Motivating example 2: 1 : the finance of the company being good. 2 : the finance of the company being O.K. 3 : the finance of the company being bad. X 1: good finance assessment for the company. X 2: O.K. finance assessment for the company. X 3: bad finance assessment for the company. From the past records, we know 1 0.5, ( 2 ) 0.2, ( 3 ) 0.3, f (1 | 1 ) 0.9, f (1 | 2 ) 0.05, f (1 | 3 ) 0.05. That is, we know the chances of the different finance situations of the company and the conditional probabilities of the different assessments for the company given the finance of the company known, for example, f (1 | 1 ) 0.9 indicates 90% chance of good finance year of the company has been predicted correctly by the finance assessment. Our objective is to obtain the probability f (1 | 1) , i.e., the conditional probability that the finance of the company being good in the coming year given that good finance assessment for the company in this year. Bayes’s Theorem (general): Let A1 , A2 ,, An be mutually exclusive events and A1 A2 An S , then 36 P( Ai | B) P( Ai B) P( B) .............. , P( Ai ) P( B | Ai ) P( A1 ) P( B | A1 ) P( A2 ) P( B | A2 ) P( An ) P( B | An ) i 1,2, , n . [Derivation of Bayes’s theorem (general)]: A1 A2 B∩A1 B∩A2 B∩A1 B∩A2 B∩A1 B∩A2 ……………….. B …………… An B∩An B∩An B∩An Since P( B Ai ) P( Ai ) P( B | Ai ) , and P( B) P( B A1 ) P( B A2 ) P( B An ) ....... P( A1 ) P( B | A1 ) P( A2 ) P( B | A2 ) P( An ) P( B | An ) , thus, P( Ai | B) P( B Ai ) P( Ai ) P( B | Ai ) P( B) P( A1 ) P( B | A1 ) P( A2 ) P( B | A2 ) P( An ) P( B | An ) Similarly, if the parameter taking n values 1 , 2 , , n and the data X taking values at c1 , c2 ,, cn , , then let 37 1 A1 , 2 A2 ,, n An , and X ck B . Then, f i | c k P( i | X c k ) P(X c k i ) P(X c k ) P( i ) P(X c k | i ) P( 1 ) P(X c k | 1 ) P( n ) P(X c k | n ) i f c k | i 1 f c k | 1 2 f c k | 2 n f c k | n 2 1 X ck B1 ……………….. X ck n X ck B∩A1 B∩A2 12 2 B∩A B∩An B∩A n n …………… Example 2: ( 1 ) f (1 | 1 ) ( 1 ) f (1 | 1 ) ( 2 ) f (1 | 2 ) ( 3 ) f (1 | 3 ) 0.5 * 0.9 ............. 0.95 0.5 * 0.9 0.2 * 0.05 0.3 * 0.05 f 1 | 1 A company with good finance assessment has very high probability (0.95) of good finance situation in the coming year. II Continuous case: hx, f x | : the joint density. 38 m x f x | d hx, d : the marginal density Thus, the posterior is f | x h x, f x | f x | l | x m x m x Example 1: Let X i ~ Poisson , i 1,2,, n and 1 1 ~ gamma , , e . Then, n f x1 ,, xn | x e n i i 1 xi ! xi i 1 e n n xi ! . i 1 Thus, n xi 1 e n f | x1 , , xn e n xi ! i 1 i 1 n xi 1 n n i1 e xi ! i 1 n 1 gamma x , i n i 1 Note: the MLE (maximum likelihood estimate) based on n f x1 ,, xn | l | x1 ,, xn is 39 x x i 1 n i while the posterior n mean (Bayes estimate under square loss function) is x i 1 i . The n posterior mean incorporates the information obtained from the data (sample mean, (prior mean, x) with the information obtained from the prior ) Example 2: Recall: n n x X ~ bn, p , f x | p p x 1 p x and p ~ betaa, b , p Then, a b a 1 b 1 p 1 p . a b f p | x ~ betax a, n b x. Extension: X ,, X ~ muln, ,, , ,, , p 1 p 1 p 1 p i 1 i 1 p f x1 , , x p | n! x 1x1 p p 1 i p i 1 x1! x p ! n xi ! i 1 p n xi i 1 and ~ Dirichlet a1 , , a p , a p 1 , p 1 ai a p 1 1 p a p 1 i 1 a1 1 . p 1 1 p 1 i i 1 a i i 1 40 Then, f | x1 , , x p f x1 , , x p | p p n! xp x1 1 1 p i p i 1 x1! x p ! n xi ! i 1 n xi i 1 p 1 ai a p 1 1 p a 1 p1i 1 1a1 1 p p 1 i i 1 a i i 1 p x1 a1 1 1 x p a p 1 p p 1 i i 1 n xi a p 1 1 i 1 p ~ Dirichlet x1 a1 , , x p a p , n xi a p 1 i 1 Note: the mean of X i is n i and thus the MLE (maximum likelihood estimate) for i based on f x1 ,, xn | l | x1 ,, xn xi is while the posterior mean (Bayes estimate under square loss n function) is xi ai xi ai p 1 p n ak x a n x a k k k p 1 k 1 k 1 k 1 p . The posterior mean incorporates the information obtained from the data xi (MLE estimate, ) with the information obtained from the prior n (prior mean, ai ) p 1 a k 1 k 41 Example 3: n n X 1 , , X n ~ N ,1, f x1 , , xn | and xi 2 1 i 1 e 2 2 ~ N ,1 .Then, f | x1 , , xn f x1 , , xn | n n 1 e 2 xi 2 i 1 2 1 e 2 2 2 n n n 1 1 n 2 2 x x 2 2 2 2 i i 2 i 1 i 1 1 e 2 n n n 1 1 n 1 2 2 x x 2 2 i i 2 i 1 i 1 1 e 2 n 1 2 2 2 n 1 1 e 2 n xi i 1 n 1 1 2 n 1 e xi i 1 n 1 n xi i 1 n 1 2 xi i 1 n 1 2 n n x2 i 2 i 1 n 1 n 1 2 n x2 i n 1 i 1 2 2 n 1 n 1 e n xi 1 N i 1 , n 1 n 1 42 n xi u i 1 n 1 n 2 Note: the MLE (maximum likelihood estimate) based on n f x1 ,, xn | l | x1 ,, xn is x x i 1 i while the posterior n mean (Bayes estimate under square loss function) is n x i 1 i n 1 n 1 x . n 1 n 1 The posterior mean incorporates the information obtained from the data (sample mean, (prior mean, prior is ). x ) with the information obtained from the prior The variance of x is 1/ n 1 . Intuitively, the variation of prior is n and the variance of times of the one of x . Therefore, we put more weights to the more stable estimate. A Useful Result: Let T X 1 , X 2 , , X n be a sufficient statistic for the parameter with density g t | . If, T X 1 , X 2 ,, X n t Then f | x1 ,, xn f | t g t | m t , m t is the marginal density for T X 1 , X 2 ,, X n . Example 3 (continue): 43 n f x1 , , xn | xi 2 n 1 i 1 e 2 n 1 e 2 2 n n 1 n 2 2 xi xi2 2 i 1 i 1 n 1 nx 2 n 2 e e 2 n 1 e 2 1 1 nt n 2 2 n xi2 i 1 2 n xi2 i 1 e 2 t x By factorization theorem, t x is a sufficient statistic. By the above useful result, x 1 n f | x1 ,, xn f | x ~ N , 1 n 1 1 n Definition (Conjugate family): Let denote the class of density function f x | . A class P of prior distribution is said to be a conjugate family for f | x is in the class P for all f x | P . A Useful Result: Let f x | hx e x . 44 if and If the prior of is k , e , then the posterior is f | x k x, 1e x 1 . [proof:] f | x f x | k , e h x e x k , h x e x 1 e x 1 Since d k , e d 1 k , 1 e d , thus k x, 1 1 x 1 e d . Therefore, e x 1 f | x x 1 k x, 1e x 1 d e Note: Some commonly used conjugate families are the following: Normal-normal, Poisson-gamma, Normal-gamma, Binomial-beta, Multinomial-Dirichlet. 45 Negative Gamma-gamma, binomial-beta, 2.2 Bayesian Inference For Bayesian analysis, f | x , the posterior distribution, plays an important role in statistical inferential procedure. Some Bayesians suggest that inference should ideally consist of simply reporting the entire posterior f | x distribution (maybe for a non-informative prior). However, some standard uses of the posterior are still helpful!! Some statistical inference problems are I. Estimation (point estimate), estimation error II. Interval estimate III. Hypothesis testing IV. Predictive inference I. Estimation (point estimate) (a) Estimation is ˆ is the most likely value of given the The generalized maximum likelihood estimate of maximizes f | x . ˆ which prior and the sample X. Example 3 (continue): n n xi 2 1 i 1 X 1 ,, X n ~ N ,1, f x1 , , xn | e 2 and ~ N ,1 .Then, 46 2 x 1 n f | x1 ,, xn ~ N , 1 n 1 1 n ˆ x n 1 1 n ◆ is then the posterior mode (also posterior mean) Other commonly used Bayesian estimates of include posterior mean and posterior median. In Normal example, posterior mode=posterior mean=posterior median. Note: The mean and median of the posterior are frequently better estimates of than the mode. It is worthwhile to calculate and compare all 3 in a Bayesian study. Example 4: X ~ N ,1, 0; 1, 0. Then, f | x f x | I 0, I 0, e 1 e 2 x 2 2 Thus, f | x I 0, e e 0 47 x 2 2 x 2 2 d . x 2 2 Further, E f | x e 0 e x 2 e d 2 x 2 0 e d 2 0 e 0 e x 2 d 2 x 2 2 d 0 x 2 d 2 x 2 x x d 2 0 x e 2 d 2 x e 2 2 x x e d x 1 2 x e 2 2 2 2 d d e x x e 2 d 2 / 2 2 e 2 2 2 2 d d x x 1 x x2 1 e 2 x 2 1 x Note: The classical MLE in this example is x. However, classical MLE might result in senseless conclusion!! (b) Estimation error The posterior variance of x is 48 0. The V x E f | x x The posterior variance is defined as f | x V x E 2 . x 2 , f | x is the posterior mean. where x E Note: V x V x x x 2 Example 3 (continue): x 1 n . f | x1 ,, xn ~ N , 1 n 1 1 n Then, the posterior mean is x1 , , xn x n 1 , 1 n and the posterior variance is V x1 ,, xn 1 . n 1 Suppose the classical MLE x1 , , xn x is used, then V x1 ,, xn V x1 ,, xn x1 ,, xn x1 ,, xn 2 n n xi xi 1 i1 i 1 n 1 n 1 n 49 2 n n x i 1 i 1 n 1 nn 1 1 x n 1 n 1 2 2 Example 5: X ~ N , 2 , 1. Then, f | x ~ N x, 2 . Thus, the posterior mean=the posterior mode=posterior mode=x =classical MLE Note: The Bayesian analysis based on a non-informative prior is often formally the same as the usual classical maximum likelihood analysis. Example 4 (continue): The posterior density is f | x I 0, e e 0 and the posterior mean is 50 x 2 2 x 2 2 d x2 1 e 2 E f | x x x 2 x x , 1 x x2 1 e 2 2 where x . If x x , then 1 x V x V x x x 2 V x x x x 2 V x 2 x Therefore, V x V x 2 x . V x 1 x x x since V x E f | x x 2 x e 2 0 e x 2 x 2 d 2 2 2 0 0 e d 0 xe x e 2 x 2 e e 0 x 2 2 x 2 d 0 x x 1 where 51 2 d x 2 x 2 2 2 d d x e 2 x 2 2 d 2 e 2 2 d de 2 2 2 2 e e d 2 e 2 2 2 e x e 2 2 x 2 2 d e x 2 2 d (c) Multivariate estimation Let 1 , , p t be p-dimensional parameter. Then, the posterior mean is x 1 x 2 x p x t E f ( | x ) 1 E f ( | x ) 2 E f ( | x ) p and the posterior variance is f | x V x E x x t t Further, The posterior variance of x is V x E f | x x x t . V x x x x x t II. Credible sets (interval estimate) (a) Highest posterior density (HPD) A 1001 % credible set C for f | x d C PC | x f | x C is continuous discrete 52 , PC | x 1 . A 1001 % HPD (highest posterior density) credible set C for is CH : f | x k , where k is the largest constant such that PCH | x 1 . Example 3 (continue): x 1 n . f | x1 ,, xn ~ N , 1 n 1 1 n Therefore, a 1001 % HPD credible set for CH n xi i1 z1 2 n 1 n 1 , n 1 x i 1 i is n 1 z1 2 1 n 1 Example 5 (continue): As 2 1 , X ~ N ,1, 1. Then, f | x ~ N x,1 . Thus, a 1001 % HPD credible set for is CH x z1 , x z1 . 2 2 Note that the above HPD credible set is the same as the 1001 % 53 confidence interval based on classical analysis. Note: The classical confidence interval and the Bayesian credible set for are frequently the same. ◆ To find the Bayesian credible set, two approaches can be used. They are (I) using some numerical algorithm. (II) using asymptotic results: approximating f | x by N x , V x , then a 1001 % HPD credible set for is CH x z1 V x , x z1 V x . 2 2 For multivariate case 1 , , p , approximating f t | x by N x , V x , where x 1 x 2 x p x t and V x E f | x x x Then, t . xt V x 1 x ~ p2 . Thus, a 1001 % HPD credible set for is CH : x V x x p2,1 where p2,1 1 t is the 1001 54 , percentile of the chi-square distribution. Example 6: f x | e x I , x , 0, and 1 . 1 2 Then, e x x f | x f x | I 1 2 , e I 0 , x 1 2 e x Thus, the plot of the above posterior density is Posterior density b(a) The 1001 % HPD credible set for x is CH b , x . Let e log . Then, d 1 1 1 log d 1 log 2 Further, f x | e x log Ilog , x e xI log , x 55 and f | x f x | e x 1 I x 1 log 2 1, e 1 e x 1 I x 1 log 2 1, e Thus, the plot of the above posterior density is Posterior density 1 d(a) The 1001 % HPD credible set for x is CH 1, d . Although log , log 1, log d 0, log d b , x . That is the transformation of the 1001 % HPD credible set for not equivalent to the 1001 % HPD credible set for is . (b) General approach to find “optimal” credible set A 1001 % “optimal” credible set C for size with respect to S C , where 56 l has minimum S C l d , C and l is some specific function. For example, as l 1 , S C d the length of an interval C the resulting 1001 % “optimal” credible set is the 1001 % HPD credible set!! III. Hypothesis testing (a) Bayes factor To test H 0 : 0 v.s. H1 : 1 , we need to calculate 0 P 0 | x , 1 P 1 | x . Then, 0 1 reject H 0 0 1 not reject H 0 The conceptual advantage is that 0 and 1 are the actual (subjective) probabilities of the hypothesis based on the information from the data and prior opinions. Example 3 (continue): x 1 n . f | x1 ,, xn ~ N , 1 n 1 1 n Suppose 57 9 n 9, xi 110, 10 . i 1 Then, f | x1 ,, x9 ~ N 12,0.1 . To test H 0 : 10 v.s. H1 : 10 , 10 12 12 | x 0.1 0.1 P Z 6.3245 0 0 P 10 | x P and 1 P 10 | x 1 . Example 7: X ~ Binomial n, p, p 1. Then, f p | x p x 1 p n x That is, . f p | x ~ Betax 1, n x 1 . To test H 0 : 10 v.s. H1 : 10 , 1 1 n 2 n x | x 2 p x 1 p dp 0 x 1 n x 1 2 1 n 2 n x 2 p x 1 p dp x 1 n x 1 0 0 P p n 2 1 x 1 n x 1 2 n1 1 n x ! x! 1 2 n 1! x 1 x 1x 2 Definition (Bayes factor): Denote 58 0 P 0 d , 0 1 P 1 d 1 0 0 H H Let 1 be the posterior odds ratio of 0 to 1 and let 1 be the prior odds ratio. Then, the Bayes factor in favor of 0 is define as 0 B posterior odds ratio 1 0 1 prior odds ratio 1 0 . 0 1 [Intuition of Bayes factor:] Suppose 0 0 , 1 1 H 0 : 0 v.s. H1 : 1 . Then, 0 B 0 1 1 f 0 | x f 1 | x 0 1 0 f x | 0 f x | 0 1 f x | 1 0 f x | 1 1 is the classical likelihood ratio. Note: Bayes factor B can be perceived as a Bayesian likelihood ratio. Example 3 (continue): f | x1 ,, x9 ~ N 12,0.1 . Since ~ N 10,1 59 0 10 d 10 1 1 2 e d 1 2 2 2 10 The Bayes factor then is 0 B 0 1 1 0 1 0 1 . 2 1 2 Note: The use of p-value will sometimes have a similar result to the Bayesian approach especially as the vague prior information is used. For example, for X ~ N ,1, 1. Then, f | x ~ N x,1 . To test H 0 : 0 v.s. H1 : 0 , x 0 x 0 x 1 1 0 P 0 | x P However, for classical approach with data x, since X ~ N ,1 reject H 0 as X c . x 0 X 0 p - value P X x P 1 1 PZ x 0 1 x 0 Z ~ N 0,1 0 x Both p-value and 0 give the same value. 60 (b) Testing a point null hypothesis To test H 0 : 0 v.s. H1 : 0 , the Bayesian answers differ radically from the classical answers. The continuous prior density is not acceptable for the above hypothesis since any such prior will give 0 prior (and hence posterior) probability 0. We can choose the proper prior by the following way. Assume 0 0 P 0 1 1 0 P 0 . A proper prior is 0 , 0 1 g , 0 , where g is some proper density function such that g d 1 . 0 Note that d 0 g d 1 0 1 g d 0 0 0 1 1 Then, the marginal density of X is m x f x | d 0 f x | 0 g f x | d 1 0 0 f x | 0 1 0 g f x | d 0 0 f x | 0 1 0 m1 x where m1 x g f x | d . Thus, 0 61 f 0 | x 0 f x | 0 m x 1 1 0 1 0 0 f x | 0 0 f x | 0 1 0 m1 x m1 x f x | 0 1 0 m1 x 1 0 f x | 0 1 Therefore, the posterior odds ratio is 0 f x | 0 0 0 | x m x 1 1 0 | x 1 0 f x | 0 m x 0 f x | 0 0 f x | 0 m x 0 f x | 0 1 0 m1 x 0 f x | 0 1 0 m1 x and the Bayes factor is 0 B 0 1 1 f x | 0 m1 x . Example 7: Let H 0 : 0 v.s. H1 : 0 , X 1 ,, X n ~ N ,1. Then, 1 X ~ N , . A proper choice of prior is n 62 0 , 1 0 0 2 2 1 e 2 1 0 N ,1, 0 Then, m1 x n 0 2 e n x 2 1 1 2 1 n e 2 1 e 2 2 2 d x 2 2 1 1 n 1 ~ N ,1 n Then, 0 1 0 m1 x f 0 | x 1 0 f x | 0 1 0 1 0 1 2 1 1 n 1 2 1 e e 1 x 2 2 1 1 n x 0 2 1 n 2 n If 0 , then 0 1 0 1 0 1 0 1 0 1 0 1 0 1 1 n 1 1 n 1 1 n 63 e 1 n x 0 2 1 1 n e e n x 0 2 2 1 1 n z2 2 1 1 2 n 1 1 1 z n x 0 where . Further, if we let 0 1 1 1 n e 0 10 z2 2 1 1 n 1 2 , then 1 . The classical approach for the above hypothesis is to reject H 0 as z n x 0 c . Thus, the p-value is p - value P n X 0 n x 0 P Z z 21 z n X 0 Z ~ N 0,1. We have the following comparisons since for fixed z 1.96 : n=20 0.05 0.42 p-value 0 Note that n=50 0.05 0.52 n=100 0.05 0.60 n=1000 0.05 0.80 p value 21 1.96 0.05 . For n 20 , z 1 2 1 1 n 0 1 e 1 n 0.42 2 Similarly, 0 1 1 1 1 20 e 1.96 2 2 1 1 20 evaluated at the other n values can be obtained. 64 1 Theorem: For any prior 0 , 0 1 g , 0 , then 0 1 0 r x f 0 | x 1 0 f x | 0 where 1 r x sup f x | usually r x f x | ˆ . 0 Further, B f x | 0 f x | 0 . m1 x r x Example 7 (continue): 1 X ~ N , . . Then, n f x | 1 2 1 The maximum likelihood estimate is e x 2 2 1 n n ˆ x . Further, r x sup f x | f x | ˆ 0 Therefore, 65 1 2 1 n , 0 1 0 1 0 1 2 1 1 2 1 1 0 1 e 0 n x 0 2 z 1 0 1 e 2 0 2 e n x 0 2 1 n 2 n 2 1 1 Further, 1 2 1 f x | 0 B r x e As e x 0 2 1 n 2 n 2 1 n z2 2 z 1.96 and 0 10 z 0 1 e 2 2 1 1 p value 0.05 , 2 , then, 1.96 1 e 2 and B 1 6.83 . 66 2 1 0.127 1 2.3 Applications Example 8: Yi ~ N i , 2 N xi , 2 N xi11 xi 2 2 xip p , 2 , i 1,, n. , where x11 x 21 X xn1 Let x12 x22 xn 2 x1 p xi1 1 x x2 p i 2 , x t , 2 i xnp x ip p c , f | y f y | c 1 e 2 2 1 e 2 e y Xˆ Xˆ X y Xˆ Xˆ X t 2 1 e 2 2 2 e n y i x i 2 i 1 2 2 y X t y X 1 y Xˆ 2 2 2 n 1 ˆ t y Xˆ ˆ t t X t X ˆ N ˆ , X t X 1 ˆ X X t X ˆ t X 1 Xty X y Xˆ 0 t 2 Thus, posterior mean posterior mode posterior median ˆ X t X 1 Xty is the least square estimate. Further, 1001 % highest probability density (HPD) credible 67 set is 1 : 2 ˆ X X ˆ t t 2 p ,1 , which is the classical 1001 % confidence ellipsoid. Example 9: Y1 , Y2 ,, Yn ~ N , 2 , known. . 2 Let 1 2 .Then, f 2 | y 2 f y | 2 n n y i 2 1 1 i 1 2 2 e 2 2 2 n 2 y ns 2 i 1 2 n 2 e 2 s 2 i 1 n n 2 Inverse Gamma , 2 2 ns The posterior mean is n 2 1 ns 2 n n2 1 2 ns 2 Since 68 y i 1 i n2 2 . 1 n 2 | y ~ Gamma , 2, 2 2 ns 1001 % highest probability density (HPD) credible interval for 2 0 , 12 satisfy 1. 02 | y 12 | y 2. P 02 2 12 | y 1 Example 10: Y1 , Y2 ,, Yn ~ N , 2 , , 2 are unknown. Let , 1 .Then, f , | y , f y | , n 1 1 2 2 n y i 2 i 1 2 2 n y i 2 i 1 1 e 2 2 e n 1 n y i y 2 n y 2 i 1 1 2 2 e n 1 Thus, the posterior mode is n ˆ y , ˆ 2 y i 1 i y n 1 2 , by taking logarithm of the posterior density and solving for 69 . log f , | y 2n y 0 0 2 2 ˆ y log f ˆ, | y 2 0 n y n 1 2 i 1 2 n ˆ 2 1001 % credible set for , y i 1 y 2 i 2 4 0 y 2 i n 1 has the contour , c , where c is some constant. Therefore, 1001 % credible set for , is , : , c, satisfying f , | y dd , : , 1 . c To find c, the large sample result for the log-likelihood ratio is f , | y 2 log ~ 22 . ˆ f , ˆ | y Thus, 1 n 2 2 n 1 log y y n y i 2 2 i1 2 2 ~ 2 n 1log ˆ 1 2 since log ˆ, ˆ | y n 1 log ˆ 1 2 . Thus, 1001 % credible set for , has the contour 70 1 n yi y 2 n y 2 n 1log ˆ 1 1 22,1 , : n 1 log 2 2 i1 2 2 The component distribution f | y is f | y f , | y d 1 n 1 n y i y 2 n y 2 i 1 2 e 2 d n 1 n 2 2 r yi y n y i 1 2 2 n 2 yi y i 1 1 y s n n 1 n 2 2 2 n y 1 n 2 yi y i 1 n 2 n 2 n 2 n yi y 2 2 s i 1 n 1 s2 , the noncentral t distribution Note that f | y ~ t n 1, y , n 2 with location parameter y and s n . Further, y s n n y ~ t n1 . s The posterior mean is y and 1001 % highest probability density (HPD) credible interval for 71 s s y t , y t n1, n1, 2 2 n n . Example 11: Yi ~ N i , 2 N zi , 2 , i 1,, n. is a single parameter. Let , 2 f , 2 | y , 2 1 2 , f y | , 2 1 n 2 2 2 1 ˆ ˆ yi zi zi zi 2 2 i 1 e ˆ 2 1 n yi zi ˆ 2 2 i 1 n2 2 2 e e n yi zi 2 i 1 2 2 z y i i i 1 n 2 z i i 1 n 2 2 z ˆ n zi yi zi ˆ 0 i 1 1 n 1 1 2 2 2 n n 2 i i 1 2 The component distribution f | y is f , f 2 | y 1 2 n2 2 e | y d y i z i ˆ n 2 i 1 y i z i ˆ 2 2 n n2 2 2 i 1 e n i 1 z i2 ˆ 1 2 2 n ˆ 2 2 y z i i 2 2 e 2 i 1 n 2 zi i 1 1 1 2 e 1 1 2 n2 2 1 2 2 2 72 2 n i 1 d z i2 ˆ 1 2 2 d Thus, the posterior mode is y n ˆ 2 i 1 i zi ˆ 2 n 1 Chapter 3 Prior Information 3.1 Subjective Determination of the Prior Density Several useful approach can be used to determine the prior density. They are 1. the histogram approach 2. the relative likelihood approach 3. matching a given functional form 4. CDF determination (1) The histogram approach Divide the parameter space into intervals, determine the subjective probability of each interval, and then plot a probability histogram. (2) The relative likelihood approach Compare the intuitive “likelihoods” of various points in , and directly sketching a prior density from these determinations. (3) Matching a given functional form Assume that is of a given functional form, and to then choose the density of this given form which most closely matches prior beliefs. (4) CDF determination 73 Subjectively determine several percentiles, plot the points z , , sketch a smooth curve joining them, where P z . Note: The histogram and the relative likelihood approaches are the most useful. Note: Untrained or unpredicted people do quite poorly in determining the probability distributions. Through the study of “Bayesian robustness”, we can alleviate this problem. 3.2 Non-informative Priors (a) Introduction Non-informative prior is a prior which contains no information about . Example 1: X ~ N ,1, , . c , c is some constant. ◆ Laplace (1812) used 1. As c d cd . is not a proper density (improper prior). In addition, the 74 other severe criticism of the non-informative prior is the lack of invariance under transformation. Example 2: is the parameter we are interested . Suppose 1. Let e log . Then, the prior for is d 1 1 log d . Therefore, the prior for is not non-informative. (b) Non-informative prior for location and scale problems (i) Location problem The density of X is of form f x x f x (i.e., depends only on x ). The density is then said to be a location density. Example 3: X ~ N , 2 Then, let f z z2 1 2 e 2 2 . The density of X is f x x f x 1 e 2 x 2 2 2 ◆ 75 For any constant c, if Y X c X Y c , then Y has the density f y y f x x dx f x x f x . dy Further, denote c , then Y has the density f y which is identical to the density of X, f x , in structure. Example 3 (continue): N , , 5 . X ~ N , 2 , Y X 5 . Then, Y ~ N 5, 2 2 Y has the density f y y f y 1 e 2 y 2 2 2 ◆ Assume and are the non-informative priors of and c , respectively. Let A be any set. Then, d d A A A c A A c d Ac c d A Thus, c . Let c . c 0 some constant 76 . It is convenient to choose the constant to be 1; that is, c 1 . (ii) Scale problem The density of X is of form x f , 0 1 The density is then said to be a scale density. Example 4: X ~ N 0, 2 . Then, let f z 1 e 2 z2 2 . The density of X is x 1 f 1 1 e 2 1 x 2 2 x2 1 2 e 2 2 ◆ Let Y cX X f y y f x x Y . Then, Y has the density function c dx 1 1 y 1 y x 1 f f f , dy c c c where c . Y has the density to the density of X, y f which is identical 1 y f , in structure. 1 Example 4: 77 Y 5 X , X ~ N 0, 2 . Then, Y ~ N 0,25 2 . . The density of Y is fY y 1 2 5 e y2 1 y 2 2 25 2 2 1 1 e 2 2 x f , 5 ◆ Assume and are the non-informative priors of and c , respectively. Let A be any set. Then, d d A A A c A d A A c c 1 d c c A Thus, 1 . Let c . c c c 1 . 1 c It is convenient to set 1 1. Then, c 1 c Note: 78 1 . 1 is an improper prior since 1 d . 0 Note: The main difficulty in using the non-informative prior is the uniqueness. For example, in location problem, c can be possible choices. There are a variety of different choices. (c) Non-informative priors in general settings 2 log f x | Let I E be the expected Fisher’s 2 information. Jeffreys (1961) proposed the non-informative prior I 1 If 1 2 p t 2 . is a vector of parameter, then 1 , 2 ,, p det I 1 2 , where 2 log f x | 2 log f x | E E 2 1 2 1 2 2 E log f x | E log f x | I 22 2 1 2 2 E log f x | E log f x | p 1 p 2 Example 5: X ~ N ,1. Then, 79 2 log f x | E 1 p 2 log f x | E . 2 p 2 log f x | E p2 X 2 1 2 log e 2 2 I E 2 1 X 2 2 2 2 log E 1 2 . Thus, the non-informative prior is I 2 1 1 Example 6: X ~ N 0, 2 . Then, X 2 1 2 2 log e 2 2 I E 2 2 log E 1 X2 2 2 2 2 1 3X 2 1 3 E 2 2 4 E X2 4 2 2 80 . Then, I 2 1 1 3.3 Maximum Entropy Priors Motivation: Since partial information is available, it is desirable to find a prior that is as non-informative as possible. Definition (Entropy, discrete case): Assume 1 , 2 ,, n , is discrete, and let be a probability on . The entropy of , is denoted by n , n i log i Elog . i Note: If i 0 , then i log i is defined to be 0. Example 7: 0,0.1,0.2,,1, i 1 , i 11 Then, n i 1 1 log log 11 . 11 11 Example 8: 1 , 2 ,, n , i 81 1 , n . . Then, 1 n log log n n i n 1 ◆ The following result can be used to find the maximum entropy as some partial prior information Eg k , k 1,2,, m is available, for example, as g k , Eg k E ; as g k , Eg k E k as k ; g k I ( , z k ] , E g k E I ( , z k ] P zk . Important Result: The prior satisfying Eg k k , k 1,2,, m , has maximum entropy, m ~ i k g k i e k 1 m e k g k i , k 1 i where 1 , 2 , , m can be determined by equations Eg k k , k 1,2,, m 82 Example 9: 0,1,2,, g1 , E 5 . Then, ~ e1 e 1 1 e1 e1 1 p p , p e1 , 0 which is geometric distribution. E 1 p 1 1 5 p e1 1 log . p 6 6 Therefore, 1 1 51 ~ 1 6 6 66 . Definition (Entropy, continuous case): Assume is continuous. The entropy of is log d 0 0 n E log , where 0 is the natural “informative” prior. Important Result: The prior satisfying E g k g d k has maximum entropy, 83 k , k 1,2, , m , m ~ 0 e k g k k 1 m k g k d 0 e k 1 , where 1 , 2 , , m can be determined by equations Eg k k , k 1,2,, m Example 10: 0, and assume is location parameter. Also, let 0 1 Assume we know g1 E 3 . Then, ~ 1 e1 1 1 e d e1 1e1 1 0, 1 0 1 Thus, E 3 1 1 1 1 3 . That is, 1 3 ~ e 3 . Example 11: , R and assume is location parameter. Also, let 0 1 . Assume we know g1 E 3 . Then, 84 ~ 1 e 1 1 1 e d does not exist since 1 e 1 d does not exist. Example 11: , R and assume is location parameter. Also, let 0 1 . Assume we know g1 E 3, g 2 3 E 3 Var 1 . 2 2 Then, ~ 1 e 1 2 3 2 1 e 1 2 3 2 e d e 2 3 1 2 2 2 3 1 2 2 1 1 ~ N 3 , 2 22 2 Thus, E 3 3 Therefore, 1 1 1 , Var 1 1 0, 2 . 2 2 22 2 ~ ~ N 3,1 85 2 2 d Note: Two difficulties arise in trying to use the maximum entropy approach to determine a prior: the need to use a non-informative prior in the derivation of ~ ~ does not exist. 3.4 Using the Marginal Distribution (I) Introduction Recall the margin density of X is f x | d continuous m x discrete f x | Example 12: X ~ N , 2f , ~ N , 2 Then, the marginal density of X is m x f x | d ~ N , 2 2f 1 2 f e x 2 2 2 f 1 2 e 2 2 2 d ◆ Note: m x is sometimes called the predictive distribution for X. 86 Let m c x m w x be the marginal density under the correct prior and be the marginal density under the wrong prior. Then, the statistic obtained from the data should be “close” to the same statistic based on xc m c x , not on m w x . For example, let be the mode of m c x and xw be the mode of m w x . Intuitively, the observed data x should be around xc , not xw . To find the “correct” or “sensible” prior effectively, one could restrict the choice of the priors to some class. Then, based on some criteria, the best prior could be found. Several classes of priors are frequently used. 1. Priors of a given functional form: : g | , , where is some set and is called a hyper-parameter of the prior. Example 13: : ~ N , 2 , 0, 2 0 , , 2 is the hyper-parameter. 2. Priors of a given functional form: p t : 0 i , 0 is any density, 1 ,, p , i 1 87 Example 14: X1, X 2 ,, X p , X i ~ N i , 2f , 2f is a known constant. p : 0 i , 0 ~ N , 2 , , 2 0. i 1 3. Priors close to an elicited prior: Any prior “close” to a sensible prior For example, 0 would also be reasonable. contamination class is : 1 0 q , q L, where L is a class of possible “contaminations” and q is some density function for . (II) Prior selection There are several approaches to select a sensible prior. They are (i) the ML-II approach (ii) the moment approach (iii) the distance approach (i) ML-II approach Let be a class of priors under consideration. ML-II (maximum likelihood-type II) is to find ˆ satisfying mˆ x sup m x . 88 Example 15: X1, X 2 ,, X p , X i ~ N ,1, ~ N , 2 Then, m xi ~ N ,1 2 The ML-II method is to find and 2 . maximizing m x . Thus, p m x 1 2 1 2 i 1 2 1 2 p 2 x i 2 e 2 1 2 x i x 2 2 e 2 1 e p x 2 1 2 2 m x m x 0, 0 2 ˆ x , p ˆ 2 Therefore, x i 1 x 2 i p 1 s 2 1 ˆ 2 max 0, s 2 1 ˆ ~ N x , max 0, s 2 1 . Example 16: : 1 0 q , q L, Then, m x f x | 1 0 q d f x | q d 1 f x | 0 d 1 m 0 x mq x 89 q̂ Now, ML-II prior is to find in L which maximizes is the class of all possible distributions and Let at ˆ mq x . If L maximizes f x | ˆ be the distribution with P ˆ 1 (all mass ˆ ). Since mq x f x | q d f x | ˆ q d f x | ˆ q d f x | ˆ f x | ˆ d m ˆ x then ˆ 1 0 ˆ . (ii) Moment approach Let f and 2f be the conditional mean of variance of X with respect to f x | . Also, let m and m2 be the known marginal mean of variance of X with respect to m x . Then, the following equations can be used to obtain the moment of the prior density such as and 2 : m E f E X E E X | Y m2 E 2f E f m 2 Var X EVar X | Y EE X | Y E X 2 90 One special example is f m E f , 2f 2f m2 2f 2 Example 17: X ~ N ,1, ~ N , 2 Suppose we know . 2 m 1, m 3 . Since f , 2f 1 then m 1 , m2 3 2f 2 1 2 2 2 Thus, ~ N 1,2 is the appropriate prior. (iii) Distance approach Let m̂ x be the marginal density estimate of X obtained from the data. Also, let mˆ x f x | ˆ d be the marginal density when the best prior is found. Then, we try to find ˆ to minimize m ˆ x ˆ , mˆ E mˆ log d m mˆ x m ˆ x m ˆ x dx continuous log m x ˆ m ˆ x ˆ x discrete log m x m ˆ x 91 Note: ˆ x mˆ x d m ˆ , mˆ 0 . m 3.5 Hierarchical Priors Hierarchical prior is called a multistage prior. Let the prior class 1 : 1 | , , where 1 | is to consider prior is of a given functional form. The second stage 2 2 on the hyper-parameter. The second stage is also called a hyper-prior. Note: 1 | 2 d Example 18: X 1 , X 2 ,, X n , X i ~ N i ,1, n 2 2 1 : 1 | 0 i | , , , 0 ~ N , i 1 , 2 0 2 2 , 2 1 e 800 1002 800 1 6 0.0016 2 7 N 100,400 IG 6,0.001 1 1 z Z ~ IG , f z e 1 z 92 e , . 1000 2 Example 19: X 1 , X 2 ,, X n , X i ~ N i ,1, n 2 1 : 1 | 0 i | 2 , 0 ~ N 100, 2 , 2 0. i 1 n 1 | 2 i 1002 i 1 1 2 2 p 2 2 2 2 e 2 1000 1 60.0016 2 7 2 e 1000 1018 120 2 7 e 2 Then, 1 | 2 1 2 d 2 0 n i 1002 0 i 1 1 2 2 n e 2 2 2 10 1 1202 10 1202 n n 2 2 7 2 0 18 2 d 2 n i 1002 2000 i 1 2 2 e d 2 n 6 2 2 120 2 7 e 18 n 1000 1018 1 2 1000 1000 i 2 i 1 n 1 2 1000 1000 i 2 i 1 0 n 6 2 n 6 6 n 2 n 2 n i 1002 2000 i 1 2 2 93 1 n 2 2 6 1 e d 2 18 10 1202 n n 6 2 6 1 2 1000 1000 i 2 i 1 12 n 18 10 1 2 2 n 6 2 2 10 3 6 n n 2 n 2 1 n 2 1 1000 i 2000 i 1 12 n 2 12 n 12 n n 2 2 2 1 1 1000 i n 6 2000 2 2000 i 1 12 n 12 n 1 2 t 1 2 1 1 12 n 2 500 n 12 2 6 3 500 t Multivaria te - t distributi on with 12, 1000,,1000 , I 3 since 1 2 1000 1000 i 2 i 1 n 6 2 n 6 n 2 n i 1002 2000 i 1 1 n 2 2 6 1 2 2 e is the density of n IG 6 , 1000 2 n i 1 100 2 i 2 and for a multivariate-t distribution Y with parameters , 1 2 n t , , 94 1 has the density n 2 1 t 1 f y f y1 ,, yn 1 y y p det 12 2 2 2 2 n 2 3.6 Criticisms I Objectivity Box (1980): it is impossible logically to distinguish between model assumptions and the prior distribution of the parameters. II Misuse of prior distribution Question: When different reasonable priors yield substantially different answers, can it be right to state that there is a single answer? Would it not be better to admit that there is scientific uncertainty, with the conclusion depending on the prior beliefs? Answer: In reporting conclusions from Bayesian analyses, the prior (also, data and loss) should be reported separately, in order to allow others to evaluate the reasonableness of the subjective inputs. III Robustness Question: Slight changes in the prior distribution might cause significant changes in the decision. Answer: Through “robust Bayesian” methodology and choice of “robust priors”, concern can be reduced. 95 3.7 Empirical Bayes Analysis Motivation: For X i i i , i ~ N 0, 2 , i 1,2,, p. Then, the MLE estimate is ˆi X i (higher dimensional model). If 1 2 p , the MLE estimate is p ˆ X X i 1 i (lower dimensional model). p Question to ask: which model is better (lower or higher)? Answer: empirical Bayes analysis is particular desirable in this situation. Empirical “compromise” Bayes between the method model provides where i a are completely unrelated (higher dimensional model) and that where all the i are assume to be equal (lower dimensional model). (a) Introduction There are two types of empirical Bayes methods. One is parametric empirical Bayes (PEB) and the other is nonparametric empirical Bayes (NPEB). Parametric empirical Bayes: the prior is in some 96 parametric class with unknown hyperparameters. Nonparametric empirical Bayes: one typically assume only that i are i.i.d. from some prior . Example 20: Let X i ~ N i , 2 , i ~ N , 2 , where and 2 are unknown hyperparameters. ◆ Two different ways to carry out empirical Bayes analysis are 1. estimating the prior or posterior by data first, then use or ˆ | x ˆ to carry out the standard Bayes analysis. 2. finding the Bayes rule in term of unknown prior, and use the data to estimate the Bayes rule. (b) Parametric empirical Bayes for normal mean Example 15 (continue): X1, X 2 ,, X p , X i ~ N i ,1, ~ N , 2 Then, 1. m xi ~ N ,1 2 The prior using ML-II method is . ˆ ~ N ˆ ,ˆ2 N x , max 0, s 2 1 , where ̂ x and ˆ 2 max 0, s 2 1. 2. 97 The posterior distribution for X i is 2 xi 2 f i | xi ~ N , 2 2 . 1 1 Then, the parameter estimate using Bayes rule under square-loss function is the posterior mean 2 xi 1 2 xi xi ˆ i 1 2 1 2 x i xi 1 2 xi B xi 1 B xi B where B 1 . Further, the posterior variance is 1 2 Vˆi 2 1 B . 1 2 Note: B 0 ˆi xi ˆ B 1 i ◆ Morris (1983, JASA) suggested p 3 1 ˆ B p 1 1 ˆ 2 , and then the empirical Bayes estimate is ˆiEB 1 Bˆ xi Bˆ ˆ 1 Bˆ xi Bˆ x Vˆi where ̂ EB and 2 p 1Bˆ 2 Bˆ 2 xi x 1 p p3 ˆ 2 . are the estimates obtained in step 1. 98 , The estimated posterior distribution is fˆ i | x ~ N ˆiEB ,Vˆi EB Note: B 0 ˆi xi ˆ B 1 i The 1001 % HPD credible set for i is CiEB ˆiEB z1 Vˆi EB ,ˆiEB z1 Vˆi EB 2 2 and The 1001 % HPD credible set for is C EB p i ˆiEB : Vˆi EB i 1 2 2 p ,1 . Example 21: Y1 , Y2 ,, Yn , Yi ~ N i ,1, i xi 1 xi1 2 xi 2 p xip i , i ~ N 0, 2 xi xi1 Then, 1. Since xi 2 xip , 1 2 p t i ~ N xi , 2 m yi ~ N xi ,1 2 . The marginal distribution is then m y m y1 , y2 ,, yn 2 1 2 2 1 2 n 2 99 n 2 n y i x i 2 i 1 e e 2 1 2 y x t 1 y x 2 where x11 x12 y1 x y x22 21 2 y ,X xn1 xn 2 yn ̂ and ˆ 2 minimizing x1 p 1 2 0 x2 p 0 1 2 , xnp 0 0 0 0 1 2 m x also minimize y X t 1 y X . Thus, ˆ X t ˆ 1 X n 1 ˆ 1 y X t y x ˆ i i 2 i 1 ˆ n 2 1 where 1 ˆ 2 0 2 ˆ 0 1 ˆ 0 0 Since ̂ involves ˆ 2 and ˆ 2 0 0 . 1 ˆ 2 involves ̂ , we have to use iterative scheme to solve. 2. The posterior distribution for Yi is 2 yi xi 2 f i | yi ~ N , 2 2 . 1 1 Similar to the previous example, the Empirical Bayes estimates are 100 ˆiEB yi Cˆ i yi xi ˆ ˆ Cˆ ˆ2 n l 2 C EB i i i ˆ Vi 1 yi xi ˆ ˆ n n li 2 where n lˆi 2 1 ˆ Ci 1 ˆ2 n lˆi and ˆ 1 X nX X ˆ li 1 ˆ 2 t 1 , Xt ii (c) Non-parametric empirical Bayes analysis Example 22: X 1 , X 2 ,, X p , X i ~ P i , i are i.i.d. from a common prior 0 Under square loss, the estimate is the posterior mean, f i | x i xi E i i f i | xi d i i f xi | i 0 i d i m 0 xi 1 m 0 x i i 101 ix e i xi ! i 0 i d i 2 x 1 ix 1e 0 i d i m xi xi 1! x 1m xi 1 f xi 1 | i m xi 1 0 i d i m xi x 1m xi 1 f i | xi 1d i m xi x 1m xi 1 m xi i i 0 0 0 0 0 0 0 0 Further, we can estimate the marginal distribution by the empirical distribution p ˆ 0 x m I x i 1 x p i , 1 as xi x I x where x i . Thus, the empirical Bayes estimate for 0 as xi x i is ˆ EB x xi 1 I xi 1 x j p j 1 I xi x j p j 1 102 . Chapter 4 Bayesian Computation (Monte Carlo Methods) Let let y y1 , y 2 ,, y n be the data observed and r E g g f | y d , be of interest. Sometimes, it might be very difficult to find the explicit form the above integral. In such cases, Monte Carlo method might be an alternative choice. (I) Direct sampling The direct sampling is to generate 1 , 2 ,, N from f | y and then use N rˆ g i i 1 N to estimate r. Note that N is usually large. The variance of Varg . N Var rˆ Thus, the standard error of r̂ is N s.erˆ 2 ˆ g r i i 1 N N 1 An approximate 95% confidence interval for r is rˆ 2s.erˆ . Further, the estimate of 103 . r̂ is p Pa g b | y is number of g i a, b . N ˆ p p̂ The standard error of is ˆ 1 p ˆ p . N ˆ s.e p (II) Indirect sampling As 1 , 2 ,, N can not be generated from the posterior directly, the following sampling methods can be used: (a) important sampling (b) rejection sampling (c) the weighted boostrap (a) Important sampling Since r E g | y g f | y d g w h d w h d N g w 1 N w 1 N i i 1 i N i i 1 N g w i i 1 i N w i 1 i 104 g f y | d f y | d where f y | h w and h is a density which the data can be generated from easily and be in generally chosen to approximate the posterior density, i.e., h c f y | , c R . X h is called importance function. Var can be estimated by Y s X2 x sY2 x s XY 2 3 2 y y y , where N x x i 1 N i N ,y N s 2 Y y i 1 i y i 1 N i N , s X2 i 1 i x 2 , N 1 N y N 1 x 2 , s XY x x y i 1 i i y N 1 The accuracy of the important sampling can be estimated by plugging in xi g i w i and yi w i . (b) Rejection sampling Let h be a density which the data can be generated from easily and be in generally chosen to approximate the posterior density. In addition, there is a finite known constant M 0 such that f y | Mh , 105 for every . The steps for the rejection sampling are: 1. generate j from h . 2. generate j independent of j from U ~ Uniform0,1 . 3. If j j f y | j Mh j accept j ; otherwise reject j . 4. Repeat steps 1~3 until the desired sample (accepted j ) 1 , 2 ,, N are obtained. Note that 1 , 2 ,, N will be the data generated from the posterior density. Then, N rˆ g i i 1 N . Note: f y | P j P j | U Mh j f y | Mh h dd 0 f y | Mh h dd 0 j f y | d f y | d 106 Differentiation with respect to j yield j f y | j f y | d , the posterior density function evaluated at j . (c) Weighted boostrap It is very similar to the important sampling method. The steps are as follows: 1. generate 1 , 2 ,, N from h . 2. draw from the discrete distribution over 1 , 2 ,, N which put mass qi wi N w i 1 , wi i f y | i h i , i at i . Then, N rˆ (III) g i i 1 N . Markov chain Monte Carlo method There are several Markov chain Monte Carlo methods. One of the commonly used methods is Metropolis-Hastings algorithm. Let be generated from h f y | which is needed only up to proportionality constant. Given an auxiliary function q , such that q, is a probability density function and q, q , , the Metropolis algorithm is as follows: 1. Draw from the p.d.f. q , 107 , where j is the current state of the Markov chain. h 2. Compute the odds ratio . h 3. If 1 , then j 1 . If 1 , then j 1 with probabilit y j with probabilit y 1 4. Repeat steps 1~3 until the desired sample (accepted j ) 1 , 2 ,, N , are obtained. Note that 1 , 2 ,, N will be the data generated from the posterior density. Then, N rˆ g i i 1 N . Note: For the Metropolis algorithm, under mild conditions, j converge in distribution to the posterior density as j . Note: q , is called the candidate or proposal density. The most commonly used q , is the multivariate normal distribution. Note: Hastings (1970) redefine h q , h q , , where q , is not necessarily symmetric. 108 Appendix: Exams Midterm 2003. 4. 18 1. (30%) Let X have a Binomial 1, distribution, 0,1, A 0, . The loss function is L , a a 2 . Consider decision rules of the form x cx . Assume the prior distribution is Beta2,2 . (a) Calculate Bayesian (prior) expected loss , a , and find the Bayes (prior) action. (Note that this is the optimal Bayes action for the no-data problem in which x is not observed). (b) Find R , . (c) Show that is inadmissible if c 1 . (d) Find the Bayesian (posterior) expected loss E f |x L , x given x 1 and find the Bayesian (posterior) action. (e) Find r , . (f) Find the value of c which minimizes r , 2. (15%) The loss matrix for some business actions is given in the following table. a1 a2 1 2 3 0 3 1 2 3 0 The prior belief is that 1 2 3 1 3 (a) Determine if each action is admissible or inadmissible. (b) What is the Bayes action? (c) What is the minimax action? 109 3. (40%) Let X 1 , X 2 ,, X 5 ~ Negative Binomial 1, and the prior distribution is Beta1,1 . Find the Bayes rule (or Bayes estimate) under loss (a) L , a a 2 (b) L , a a given x1 0, x2 0, x3 0, x4 0, x5 0 (c) L , a a 2 1 a, if a 3a , if a (d) L , a given x1 0, x2 0, x3 0, x4 0, x5 0 m z 1 m 1 z ) Z ~ Negative Binomial m , f z | ( z 4. (40%) If X ~ Exponential and 1 1 1 e ~ Inverse Gamma , . (a) Find the posterior mean. (b) If the loss function is a 2 L , a , find the Bayes estimate. (c) If the loss function is L , a a , find the Bayes estimate. 2 (d) Find the generalized maximum likelihood estimate of . ( If a random variable Z ~ Inverse Gamma , , then E Z 1 1 ,Var Z 1 1 2 2 2 110 , 2; 1 ~ Gamma , ) Z Quiz 2003. 05. 27 1. (60%) Observations yi , i 1,, n , are independent given two parameters and 2 , and normally distributed with respective means xi and variance 2 , where the xi are specified explanatory variables. (a) Suppose and 2 are unknown and the prior is , 2 1 2 . Find the marginal posterior density of 2 . Find the posterior mean based on the above marginal posterior. Find the generalized maximum likelihood estimate of 2 based on the marginal posterior. (b) Suppose 2 1 is known and the prior is 1. Find the marginal posterior density of . Find the generalized maximum likelihood estimate of based on the above estimate. For the following data, xi 1 -1 yi 3 2 0 0 -1 3 1 2 and the hypothesis H 0 : 0.98 v.s. H1 : 0.98 , find the posterior probability for H 0 and the posterior odds ratio. 2. (15%) X 1 , X 2 , , X 10 ~ Poisson , 1 ,0 . The observed data is 0 1 1 0 0 0 1 1 1 0 Find the approximate 90% HPD credible set, using the normal 111 approximation to the posterior. 3. (20%) Find the noninformative prior for the parameters yi ~ Exponential , i 1, , n . yi ~ N , 2 , i 1, , n, 2 and are the parameters of interest. 4. (30%) A large shipment of parts is received, out of which 10 are tested for defects. The number of defective parts, X, is assumed to have a Binomial 10, . From past shipments, it is known that ~ Beta1,10 . Find the Bayes rule (or Bayes estimate) under loss (a) L , a a 2 (b) L a , x 0 (c) L , a a, if a 2a , if a (d) L , a 112 , x 0. a 2 1 Final 2003. 06. 19 1. (20%) Find the noninformative prior for the parameters yi ~ Poisson , i 1, , n . yi ~ N , 2 , i 1, , n, 2 and are the parameters of interest. 2. (30%) Suppose that X ~ N ,1 , 0 ~ N 0,2.19 and : 0.8 0 0.2q , q L. (a) If L is the class of all distributions, find the ML-II prior for any given x. (b) If L q : q ~ N 0, 2 , 2 1 . , find the ML-II prior for any given x. 3. (30%) (a) Assume X ~ Binomial 3, . If the improper prior density As 1 I 0,1 , 1 x 3 , find 1001 % HPD credible set. (b) The waiting time for a bus at a given corner at a certain time of day is known to have a Uniform0, distribution. It is desired to test H 0 : 0 10 versus H1 : 10 . From other similar routes, it is known that has a Pareto6,3 distribution. If waiting times of 7, 8, 9, 10, and 11 and 12 are observed at the given corner, calculate the posterior probability for H 0 : 0 10 , the posterior odds ratio, and the Bayes 113 factor. 4. (30%) (a) Suppose that X 1 , X 2 ,, X n ~ Negative Binomial m, , and the prior distribution is Beta , . Find the posterior distribution of , the posterior mean, the posterior variance, the generalized MLE and the posterior variance of the generalized MLE. (b) Suppose that , and that X X 1 ,, X k ~ Multinomial n,1 ,, k t 1,, k t ~ Dirichlet 1,, k . Find the posterior mean and the generalized MLE. 5. (15%) A insurance company is faced with taking one of the following 3 actions: a1 : increase sales force by 10%; a 2 : maintain present sales force; a 3 : decrease sales force by 10%. Depending on whether or not the economy is good ( 1 ), mediocre ( 2 ), or bad ( 3 ), the company would expect to lose the following amounts of money in each case: a1 a2 a3 1 2 3 -90 -40 -20 -50 -40 -30 20 10 0 (d) Determine if each action is admissible or inadmissible. (e) The company believes that has the probability distribution 1 0.2, 2 0.3, 3 0.5 . Order the action according to their Bayesian expected loss (equivalent to Bayes risk, here), and state the Bayes action. (f) Order the actions according to the minimax principle and find the minimax action. 114