Topic 2 - Pegasus @ UCF

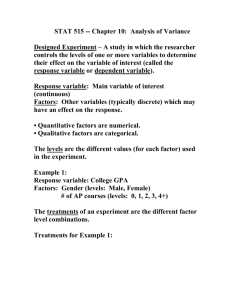

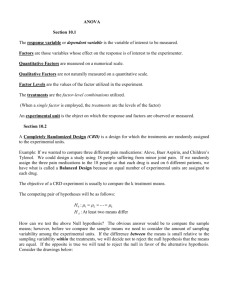

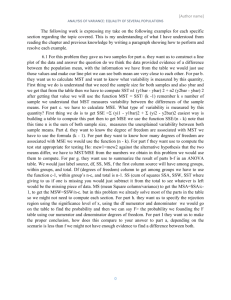

Lecture & Examples Topic 2: Completely Randomized Design

The completely randomized design is the simplest experimental design. In a completely randomized design, treatments are assigned to experimental units completely at random. Although the design is very simple, it has several advantages:

(1) It is very flexible. Any number of treatments and any number of experimental units can be used.

(2) The statistical analysis is easy even if the number of experimental units differs between treatments.

(3) The statistical analysis remains simple in the presence of missing values. One can actually prove that the relative loss of information due to missing data is smaller than in any other experimental design.

The completely randomized design is extremely attractive when the experimental units are homogeneous. For example, it is the method of choice for many laboratory experiments, e.g., in physics, chemistry, or cookery, where a quantity of material, after thorough mixing, is divided into small samples or batches to which the treatments are applied.

Steps for Conducting an Analysis of Variance (ANOVA) for a Completely Randomized Design:

Step 1: Make sure the data come from a completely randomized design. This means that each treatment is assigned to an experimental unit completely by chance.

Step 2: Use a graphical procedure such as box plots or dot plots to check the equal variance assumption. The normality assumption is less of a concern if the data truly come from a completely randomized design, because randomization itself justifies the F-test as an approximation to the randomization test. If the equal variance assumption is not satisfied, you can either use a nonparametric method, discussed in Chapter 15, or consult a statistician. The method discussed in Chapter 15 is just one alternative when the equal variance assumption is not satisfied; it is not the "best" alternative.

Step 3: Create an ANOVA table similar to Table 10.1 below with any statistical package such as SAS (used in this lecture) or SPSS. In Table 10.1, we assume that there are $p$ treatments and $n$ experimental units.

Table 10.1: ANOVA Table for a Completely Randomized Design

| Source | df | SS | MS | F |
|---|---|---|---|---|
| Treatment | $p - 1$ | SST | MST (a) | MST/MSE |
| Error | $n - p$ | SSE | MSE (b) | |
| Total | $n - 1$ | SS(Total) | | |

(a) $\text{MST} = \text{SST}/(p - 1)$

(b) $\text{MSE} = \text{SSE}/(n - p)$

Note:

(1) The degrees of freedom for treatment are $p - 1$ in a completely randomized design with $p$ treatments.

(2) The degrees of freedom for error are $n - p$ in a completely randomized design with $p$ treatments and $n$ experimental units.

(3) SST + SSE = SS(Total)

(4) We can complete the ANOVA table if we know any two of these three quantities: SST, SSE, or SS(Total).

(5) We can complete the ANOVA table if we know both MSE and MST.

(6) A partially completed ANOVA table will always be provided in exams and practice problems.

Step 4: Use the F-test provided in the ANOVA table to perform the following test:

$H_0: \mu_1 = \mu_2 = \cdots = \mu_p$

$H_a$: At least two treatment means are different.

The output from SAS or SPSS always includes the p-value of the above F-test. We can reject the null hypothesis at significance level $\alpha$ when p-value $< \alpha$.

Step 5: If the F-test rejects the null hypothesis, one can then use the multiple comparison procedures discussed in Section 10.3 to find which means differ. However, all of those multiple comparison procedures are very conservative and will not be discussed this semester.

Example 10.4: A manufacturer of television sets is interested in the effect on tube conductivity of four different types of coating for color picture tubes. The following conductivity data are obtained.

| Coating Type | Conductivity | | | |
|---|---|---|---|---|
| 1 | 143 | 141 | 150 | 146 |
| 2 | 152 | 149 | 137 | 143 |
| 3 | 134 | 136 | 132 | 127 |
| 4 | 129 | 127 | 132 | 129 |

(a) Complete the following ANOVA table.

General Linear Models Procedure

Dependent Variable: RESP

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | (1) | 844.68750 | (5) | (7) | .00029 |
| Error | (2) | (4) | (6) | | |
| Corrected Total | (3) | 1080.93750 | | | |

Solution: Here $n = 16$ and $p = 4$, so

- df(Treatment) $= p - 1 = 3$, df(Error) $= n - p = 12$, df(Total) $= n - 1 = 15$
- SSE = SS(Total) − SST $= 1080.93750 - 844.68750 = 236.25000$
- MST = SST$/(p - 1) = 844.68750/3 = 281.56250$
- MSE = SSE$/(n - p) = 236.25000/12 = 19.68750$
- F = MST/MSE $= 281.56250/19.68750 \approx 14.30$

Note: In the above SAS printout, Model = Treatment and Pr > F = p-value.

General Linear Models Procedure

Dependent Variable: RESP

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 3 | 844.68750 | 281.56250 | 14.30 | .00029 |
| Error | 12 | 236.25000 | 19.68750 | | |
| Corrected Total | 15 | 1080.93750 | | | |

(b) Test the null hypothesis that $\mu_1 = \mu_2 = \mu_3 = \mu_4$ against the alternative that at least two of the means differ. Use $\alpha = 0.05$.

Solution:

$H_0: \mu_1 = \mu_2 = \mu_3 = \mu_4$

$H_a$: At least two means differ

Test statistic: $F_c = 14.30$

p-value: 0.00029

Since the p-value $= 0.00029 < \alpha = 0.05$, one can reject the null hypothesis.

Example 10.5: A manufacturer suspects that the batches of raw material furnished by her supplier differ significantly in calcium content. There are a large number of batches currently in the warehouse. Five of these are randomly selected for study. A chemist makes five determinations on each batch and obtains the following data.

| Batch | Calcium content | | | | |
|---|---|---|---|---|---|
| 1 | 23.46 | 23.48 | 23.56 | 23.39 | 23.40 |
| 2 | 23.59 | 23.46 | 23.42 | 23.49 | 23.50 |
| 3 | 23.51 | 23.64 | 23.46 | 23.52 | 23.49 |
| 4 | 23.28 | 23.40 | 23.37 | 23.46 | 23.39 |
| 5 | 23.29 | 23.46 | 23.37 | 23.32 | 23.38 |

(a) Complete the following ANOVA table.

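General Linear Models Procedure

Dependent Variable: RESP

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | (1) | (4) | 0.0242440 | (7) | .00363 |
| Error | (2) | (5) | 0.0043800 | | |
| Corrected Total | (3) | (6) | | | |

Solution: Here $n = 25$ and $p = 5$, so

- df(Treatment) $= p - 1 = 4$, df(Error) $= n - p = 20$, df(Total) $= n - 1 = 24$
- SST = (MST)$(p - 1) = 0.0242440 \times 4 = 0.0969760$
- SSE = (MSE)$(n - p) = 0.0043800 \times 20 = 0.0876000$
- SS(Total) = SST + SSE $= 0.1845760$
- F = MST/MSE $= 0.0242440/0.0043800 \approx 5.54$

General Linear Models Procedure

Dependent Variable: RESP

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 4 | 0.0969760 | 0.0242440 | 5.54 | .00363 |
| Error | 20 | 0.0876000 | 0.0043800 | | |
| Corrected Total | 24 | 0.1845760 | | | |

(b) Is there a significant variation in calcium content from batch to batch? Use $\alpha = 0.05$.

Solution:

$H_0: \mu_1 = \mu_2 = \mu_3 = \mu_4 = \mu_5$

$H_a$: At least two means differ

Test statistic: $F_c = 5.54$

p-value: 0.00363

Since the p-value $= 0.00363 < \alpha = 0.05$, one can reject the null hypothesis that all means are equal. Thus, there is a significant variation in calcium content from batch to batch at $\alpha = 0.05$.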
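
The lecture builds its ANOVA tables with SAS. As a cross-check, the sketch below recomputes the table entries of Examples 10.4 and 10.5 directly from the raw data in plain Python. It is only an illustration: the function name `one_way_anova` and the dictionary keys are hypothetical, and the Example 10.4 data are read as four conductivity values per coating type. It does not compute the p-value, which would need an F-distribution routine from a statistics library.

```python
from statistics import fmean


def one_way_anova(groups):
    """ANOVA table entries for a completely randomized design.

    `groups` holds one list of responses per treatment; group sizes may differ.
    (Hypothetical helper, not part of SAS or of the lecture.)
    """
    p = len(groups)                              # number of treatments
    observations = [y for g in groups for y in g]
    n = len(observations)                        # number of experimental units
    grand_mean = fmean(observations)
    means = [fmean(g) for g in groups]

    # SST: variation of the treatment means around the grand mean.
    sst = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # SSE: variation of each observation around its own treatment mean.
    sse = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    # SS(Total), computed directly; it equals SST + SSE up to rounding error.
    ss_total = sum((y - grand_mean) ** 2 for y in observations)

    mst = sst / (p - 1)
    mse = sse / (n - p)
    return {
        "df_treatment": p - 1, "df_error": n - p, "df_total": n - 1,
        "SST": sst, "SSE": sse, "SS_total": ss_total,
        "MST": mst, "MSE": mse, "F": mst / mse,
    }


# Example 10.4: conductivity, one inner list per coating type (assumed layout).
coating = [
    [143, 141, 150, 146],
    [152, 149, 137, 143],
    [134, 136, 132, 127],
    [129, 127, 132, 129],
]

# Example 10.5: calcium content, one inner list per batch.
calcium = [
    [23.46, 23.48, 23.56, 23.39, 23.40],
    [23.59, 23.46, 23.42, 23.49, 23.50],
    [23.51, 23.64, 23.46, 23.52, 23.49],
    [23.28, 23.40, 23.37, 23.46, 23.39],
    [23.29, 23.46, 23.37, 23.32, 23.38],
]

for name, data in [("Example 10.4", coating), ("Example 10.5", calcium)]:
    t = one_way_anova(data)
    print(f"{name}: df = ({t['df_treatment']}, {t['df_error']}), "
          f"SST = {t['SST']:.5f}, SSE = {t['SSE']:.5f}, F = {t['F']:.2f}")
```

The printed degrees of freedom, sums of squares, and F values should agree with the completed SAS tables above to the precision shown there. Computing SS(Total) separately rather than as SST + SSE gives a simple check of note (3) under Table 10.1.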