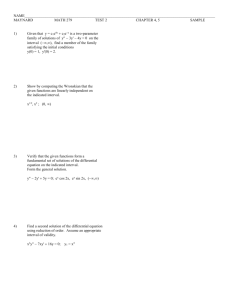

chap3

3. Differential Equations

3.1 Introduction

Almost all the elementary and numerous advanced parts of theoretical physics are formulated in terms of differential equations (DEs). Sometimes these are ordinary DEs in one variable (ODEs). More often they are partial DEs (PDEs) in two or more variables. Since the dynamics of many physical systems involve just two derivatives, e.g., acceleration in classical mechanics and the kinetic energy operator ($\sim\nabla^2$) in quantum mechanics, DEs of second order occur most frequently in physics.

Examples of PDEs

1. Laplace's eq., $\nabla^2\psi = 0$. This very common and important eq. occurs in studies of a. electromagnetic phenomena, b. hydrodynamics, c. heat flow, d. gravitation.
2. Poisson's eq., $\nabla^2\psi = -\rho/\varepsilon_0$. In contrast to the homogeneous Laplace eq., Poisson's eq. is nonhomogeneous, with a source term $-\rho/\varepsilon_0$.
3. The wave (Helmholtz) and time-independent diffusion eqs., $\nabla^2\psi \pm k^2\psi = 0$. These eqs. appear in such diverse phenomena as a. elastic waves in solids, b. sound or acoustics, c. electromagnetic waves, d. nuclear reactors.
4. The time-dependent diffusion eq., $\nabla^2\psi = \dfrac{1}{a^2}\dfrac{\partial\psi}{\partial t}$.
5. The time-dependent wave eq., $\nabla^2\psi = \dfrac{1}{c^2}\dfrac{\partial^2\psi}{\partial t^2}$, or $\partial^2\psi = 0$, where $\partial^2 = \dfrac{1}{c^2}\dfrac{\partial^2}{\partial t^2} - \nabla^2$ is a four-dimensional analog of the Laplacian.
6. The scalar potential eq., $\partial^2\psi = \rho/\varepsilon_0$.
7. The Klein-Gordon eq., $\partial^2\psi = -\mu^2\psi$, and the corresponding vector eqs., in which $\psi$ is replaced by a vector function.
8. The Schrödinger wave eq., $-\dfrac{\hbar^2}{2m}\nabla^2\psi + V\psi = i\hbar\dfrac{\partial\psi}{\partial t}$, and $-\dfrac{\hbar^2}{2m}\nabla^2\psi + V\psi = E\psi$ for the time-independent case.

Some general techniques for solving second-order PDEs:

1. Separation of variables, where the PDE is split into ODEs that are related by common constants which appear as eigenvalues of linear operators, $L\psi = l\psi$, usually in one variable.
2. Conversion of a PDE into an integral eq. using Green's functions. This applies to inhomogeneous PDEs.
3. Other analytical methods, such as the use of integral transforms.
4. Numerical calculations.

Nonlinear PDEs

Notice that the above-mentioned PDEs are linear (in $\psi$). Nonlinear ODEs and PDEs are a rapidly growing and important field.
The simplest nonlinear wave eq.,
$$\frac{\partial\psi}{\partial t} + c(\psi)\frac{\partial\psi}{\partial x} = 0,$$
results if the local speed of propagation, $c$, depends on the wave $\psi$. When a nonlinear eq. has a solution of the form $\psi(x,t) = A\cos(kx - \omega t)$, where $\omega(k)$ varies with $k$ so that $\omega''(k) \neq 0$, it is called dispersive. Perhaps the best-known nonlinear eq. is the Korteweg-de Vries (KdV) eq. (third order in $x$),
$$\frac{\partial\psi}{\partial t} + \psi\frac{\partial\psi}{\partial x} + \frac{\partial^3\psi}{\partial x^3} = 0,$$
which models the lossless propagation of shallow water waves and other phenomena. It is widely known for its soliton solutions. A soliton is a traveling wave with the property of persisting through an interaction with another soliton: after they pass through each other, they emerge in the same shape and with the same velocity and acquire no more than a phase shift. Another important and interesting phenomenon, chaos, can be found numerically in some nonlinear PDEs in certain parameter regimes.

Classes of PDEs and boundary conditions* (Optional Reading)

For a linear PDE in two variables,
$$a\frac{\partial^2\psi}{\partial x_1^2} + 2b\frac{\partial^2\psi}{\partial x_1\partial x_2} + c\frac{\partial^2\psi}{\partial x_2^2} + d\frac{\partial\psi}{\partial x_1} + e\frac{\partial\psi}{\partial x_2} + f\psi = F(x_1, x_2),$$
we say it is
(i) an elliptic PDE if $D = ac - b^2 > 0$ (Laplace, Poisson in $(x, y)$);
(ii) a parabolic PDE if $D = 0$ (diffusion eq. in $(x, t)$);
(iii) a hyperbolic PDE if $D < 0$ (wave eq. in $(x, t)$).

For a physical system, we want to find solutions that match given points, curves, or surfaces (boundary value problems). Eigenfunctions usually are required to satisfy certain imposed boundary conditions. These boundary conditions may take the following forms:

1. Cauchy boundary conditions. The value of a function and its normal derivative are specified on the boundary.
2. Dirichlet boundary conditions. The value of a function is specified on the boundary.
3. Neumann boundary conditions. The normal derivative (normal gradient) of a function is specified on the boundary.

A summary of the relation of these three types of boundary conditions to the three types of 2D PDEs is given in the table for reference. The term boundary conditions includes as a special case the concept of initial conditions.
| Boundary conditions | Surface | Elliptic: Laplace, Poisson in $(x, y)$ | Hyperbolic: wave eq. in $(x, t)$ | Parabolic: diffusion eq. in $(x, t)$ |
|---|---|---|---|---|
| Cauchy | Open | Unphysical results (instability) | Unique, stable solution | Too restrictive |
| Cauchy | Closed | Too restrictive | Too restrictive | Too restrictive |
| Dirichlet | Open | Insufficient | Insufficient | Unique, stable solution in one direction |
| Dirichlet | Closed | Unique, stable solution | Solution not unique | Too restrictive |
| Neumann | Open | Insufficient | Insufficient | Unique, stable solution in one direction |
| Neumann | Closed | Unique, stable solution | Solution not unique | Too restrictive |

3.2 First-order Differential Equations

Physics includes some first-order DEs. They also sometimes appear as intermediate steps for second-order DEs. We consider here DEs of the general form
$$\frac{dy}{dx} = f(x, y) = -\frac{P(x, y)}{Q(x, y)}. \tag{3.1}$$
The eq. is clearly a first-order ODE. It may or may not be linear, although we shall treat the linear case explicitly later.

Separable variables

Frequently, the above eq. will have the special form
$$\frac{dy}{dx} = f(x, y) = -\frac{P(x)}{Q(y)},$$
or
$$P(x)\,dx + Q(y)\,dy = 0.$$
Integrating from $(x_0, y_0)$ to $(x, y)$ yields
$$\int_{x_0}^{x} P(x)\,dx + \int_{y_0}^{y} Q(y)\,dy = 0.$$
Since the lower limits $x_0$ and $y_0$ contribute constants, we may ignore the lower limits of integration and simply add a constant of integration.

Example: Boyle's Law

In differential form Boyle's gas law is
$$\frac{dV}{dP} = -\frac{V}{P},$$
where $V$ is the volume and $P$ the pressure. Separating variables gives $dV/V = -dP/P$, or $\ln V + \ln P = C$. If we set $C = \ln k$, then $PV = k$.

Exact Differential Equations

Consider
$$P(x, y)\,dx + Q(x, y)\,dy = 0.$$
This eq. is said to be exact if we can match its LHS to a differential $d\varphi$,
$$d\varphi = \frac{\partial\varphi}{\partial x}dx + \frac{\partial\varphi}{\partial y}dy.$$
Since the RHS is zero, we look for an unknown function $\varphi(x, y) = \text{const.}$, so that $d\varphi = 0$. We have
$$P(x, y)\,dx + Q(x, y)\,dy = \frac{\partial\varphi}{\partial x}dx + \frac{\partial\varphi}{\partial y}dy$$
and
$$\frac{\partial\varphi}{\partial x} = P(x, y), \qquad \frac{\partial\varphi}{\partial y} = Q(x, y).$$
The necessary and sufficient condition for our eq. to be exact is that the second, mixed partial derivatives of $\varphi$ (assumed continuous) are independent of the order of differentiation:
$$\frac{\partial^2\varphi}{\partial y\,\partial x} = \frac{\partial P(x, y)}{\partial y} = \frac{\partial Q(x, y)}{\partial x} = \frac{\partial^2\varphi}{\partial x\,\partial y}.$$
If such a $\varphi(x, y)$ exists, then the solution is $\varphi(x, y) = C$.

It may well turn out that the eq. is not exact. However, there always exists at least one, and perhaps an infinity, of integrating factors $\alpha(x, y)$ such that
$$\alpha(x, y)P(x, y)\,dx + \alpha(x, y)Q(x, y)\,dy = 0$$
is exact. Unfortunately, an integrating factor is not always obvious or easy to find. Unlike the case of the linear first-order DE to be considered next, there is no systematic way to develop an integrating factor for a general eq.

Linear First-order ODE

If $f(x, y)$ has the form $-p(x)y + q(x)$, then
$$\frac{dy}{dx} + p(x)y = q(x). \tag{3.2}$$
This is the most general linear first-order ODE. If $q(x) = 0$, Eq. (3.2) is homogeneous (in $y$). A nonzero $q(x)$ may represent a source or driving term. The equation is linear: each term is linear in $y$ or $dy/dx$. There are no higher powers, such as $y^2$, and no products, such as $y\,dy/dx$. This eq. may be solved exactly.

Let us look for an integrating factor $\alpha(x)$ so that
$$\alpha(x)\frac{dy}{dx} + \alpha(x)p(x)y = \alpha(x)q(x) \tag{3.3}$$
may be rewritten as
$$\frac{d}{dx}\left[\alpha(x)y\right] = \alpha(x)q(x). \tag{3.4}$$
The purpose of this is to make the left-hand side of Eq. (3.2) a derivative so that it can be integrated by inspection. It also, incidentally, makes Eq. (3.2) exact. Expanding Eq. (3.4), we obtain
$$\alpha(x)\frac{dy}{dx} + \frac{d\alpha}{dx}y = \alpha(x)q(x).$$
Comparison with Eq. (3.3) shows that we must require
$$\frac{d\alpha(x)}{dx} = \alpha(x)p(x).$$
Here is a differential equation for $\alpha(x)$, with the variables $\alpha$ and $x$ separable. We separate variables, integrate, and obtain
$$\alpha(x) = \exp\left[\int^{x} p(x)\,dx\right] \tag{3.5}$$
as our integrating factor. With $\alpha(x)$ known we proceed to integrate Eq. (3.4). This, of course, was the point of introducing $\alpha(x)$ in the first place. We have
$$\int^{x}\frac{d}{dx}\left[\alpha(x)y\right]dx = \int^{x}\alpha(x)q(x)\,dx.$$
Now integrating by inspection, we have
$$\alpha(x)y(x) = \int^{x}\alpha(x)q(x)\,dx + C.$$
The constants from a constant lower limit of integration are lumped into the constant $C$. Dividing by $\alpha(x)$, we obtain
$$y(x) = \left[\alpha(x)\right]^{-1}\left\{\int^{x}\alpha(x)q(x)\,dx + C\right\}.$$
Finally, substituting in Eq. (3.5) for $\alpha$ yields
$$y(x) = \exp\left[-\int^{x}p(t)\,dt\right]\left\{\int^{x}\exp\left[\int^{s}p(t)\,dt\right]q(s)\,ds + C\right\}. \tag{3.6}$$
Here the (dummy) variables of integration have been rewritten to make them unambiguous. Equation (3.6) is the complete general solution of the linear, first-order differential equation, Eq. (3.2). The portion
$$y_1(x) = C\exp\left[-\int^{x}p(t)\,dt\right]$$
corresponds to the case $q(x) = 0$ and is a general solution of the homogeneous differential equation. The other term in Eq. (3.6),
$$y_2(x) = \exp\left[-\int^{x}p(t)\,dt\right]\int^{x}\exp\left[\int^{s}p(t)\,dt\right]q(s)\,ds,$$
is a particular solution corresponding to the specific source term $q(x)$.

The reader might note that if our linear first-order differential equation is homogeneous ($q = 0$), then it is separable. Otherwise, apart from special cases such as $p$ = constant, $q$ = constant, or $q(x) = a\,p(x)$, Eq. (3.2) is not separable.

Example: RL Circuit

For a resistance-inductance circuit Kirchhoff's law leads to
$$L\frac{dI(t)}{dt} + RI(t) = V(t)$$
for the current $I(t)$, where $L$ is the inductance and $R$ the resistance, both constant. $V(t)$ is the time-dependent impressed voltage. From Eq. (3.5) our integrating factor $\alpha(t)$ is
$$\alpha(t) = \exp\int^{t}\frac{R}{L}\,dt = e^{Rt/L}.$$
Then by Eq. (3.6)
$$I(t) = e^{-Rt/L}\left[\int^{t}e^{Rt/L}\,\frac{V(t)}{L}\,dt + C\right],$$
with the constant $C$ to be determined by an initial condition (a boundary condition). For the special case $V(t) = V_0$, a constant,
$$I(t) = e^{-Rt/L}\left[\frac{V_0}{L}\cdot\frac{L}{R}e^{Rt/L} + C\right] = \frac{V_0}{R} + Ce^{-Rt/L}.$$
If the initial condition is $I(0) = 0$, then $C = -V_0/R$ and
$$I(t) = \frac{V_0}{R}\left[1 - e^{-Rt/L}\right].$$

3.3 Separation of Variables

The equations of mathematical physics listed in Section 3.1 are all partial differential equations. Our first technique for their solution splits the partial differential equation of $n$ variables into $n$ ordinary differential equations. Each separation introduces an arbitrary constant of separation. If we have $n$ variables, we have to introduce $n - 1$ constants, determined by the conditions imposed in the problem being solved.

Cartesian Coordinates

In Cartesian coordinates the Helmholtz equation becomes
$$\frac{\partial^2\psi}{\partial x^2} + \frac{\partial^2\psi}{\partial y^2} + \frac{\partial^2\psi}{\partial z^2} + k^2\psi = 0, \tag{3.7}$$
using the Cartesian form of the Laplacian $\nabla^2\psi(x, y, z)$. For the present let $k^2$ be a constant.
Perhaps the simplest way of treating a partial differential equation such as (3.7) is to split it into a set of ordinary differential equations. This may be done as follows. Let
$$\psi(x, y, z) = X(x)Y(y)Z(z) \tag{3.7a}$$
and substitute back into Eq. (3.7):
$$YZ\frac{d^2X}{dx^2} + XZ\frac{d^2Y}{dy^2} + XY\frac{d^2Z}{dz^2} + k^2XYZ = 0.$$
Dividing by $XYZ$ and rearranging terms, we obtain
$$\frac{1}{X}\frac{d^2X}{dx^2} = -k^2 - \frac{1}{Y}\frac{d^2Y}{dy^2} - \frac{1}{Z}\frac{d^2Z}{dz^2}. \tag{3.8}$$
Equation (3.8) exhibits one separation of variables. The left-hand side is a function of $x$ alone, whereas the right-hand side depends only on $y$ and $z$. So Eq. (3.8) is a sort of paradox. A function of $x$ is equated to a function of $y$ and $z$, but $x$, $y$, and $z$ are all independent coordinates. This independence means that the behavior of $x$ as an independent variable is not determined by $y$ and $z$. The paradox is resolved by setting each side equal to a constant, a constant of separation. We choose
$$\frac{1}{X}\frac{d^2X}{dx^2} = -l^2, \tag{3.9}$$
$$-k^2 - \frac{1}{Y}\frac{d^2Y}{dy^2} - \frac{1}{Z}\frac{d^2Z}{dz^2} = -l^2. \tag{3.10}$$
Now, turning our attention to Eq. (3.10), we obtain
$$\frac{1}{Y}\frac{d^2Y}{dy^2} = -k^2 + l^2 - \frac{1}{Z}\frac{d^2Z}{dz^2},$$
and a second separation has been achieved. Here we have a function of $y$ equated to a function of $z$, and the same paradox appears. We resolve it as before by equating each side to another constant of separation, $-m^2$:
$$\frac{1}{Y}\frac{d^2Y}{dy^2} = -m^2, \tag{3.11}$$
$$\frac{1}{Z}\frac{d^2Z}{dz^2} = -k^2 + l^2 + m^2 = -n^2, \tag{3.12}$$
introducing a constant $n^2$ by $k^2 = l^2 + m^2 + n^2$ to produce a symmetric set of equations. Now we have three ordinary differential equations, (3.9), (3.11), and (3.12), to replace Eq. (3.7). Our assumption, Eq. (3.7a), has succeeded and is thereby justified.

Our solution should be labeled according to the choice of our constants $l$, $m$, and $n$, that is,
$$\psi_{lmn}(x, y, z) = X_l(x)Y_m(y)Z_n(z). \tag{3.13}$$
Subject to the conditions of the problem being solved and to the condition $k^2 = l^2 + m^2 + n^2$, we may choose $l$, $m$, and $n$ as we like, and Eq. (3.13) will still be a solution of Eq. (3.7), provided $X_l(x)$ is a solution of Eq. (3.9), and so on.
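As an illustrative check of the Cartesian product solution, the following sketch evaluates the Helmholtz operator on $\sin(lx)\sin(my)\sin(nz)$ with central differences. The sine factors, the sample point, the step size `h`, and the function names are assumptions chosen for the illustration; they are not taken from the notes.

```python
import math

def psi(x, y, z, l, m, n):
    """Product solution X_l(x) Y_m(y) Z_n(z); sine factors are an assumed choice."""
    return math.sin(l * x) * math.sin(m * y) * math.sin(n * z)

def helmholtz_residual(f, x, y, z, k2, h=1e-3):
    """Central-difference estimate of (laplacian f + k2 * f) at the point (x, y, z)."""
    f0 = f(x, y, z)
    lap = (f(x + h, y, z) + f(x - h, y, z)
           + f(x, y + h, z) + f(x, y - h, z)
           + f(x, y, z + h) + f(x, y, z - h) - 6.0 * f0) / h**2
    return lap + k2 * f0

l, m, n = 1.0, 2.0, 3.0
k2 = l**2 + m**2 + n**2          # the constraint k^2 = l^2 + m^2 + n^2

def f(x, y, z):
    return psi(x, y, z, l, m, n)

residual = helmholtz_residual(f, 0.3, 0.7, 1.1, k2)
# Violating the constraint on k^2 leaves a residual of order psi itself.
residual_wrong_k = helmholtz_residual(f, 0.3, 0.7, 1.1, k2 + 1.0)
print(residual, residual_wrong_k)
```

The first residual is limited only by the finite-difference error, while the second is not small, which shows that the three separation constants are tied together by $k^2 = l^2 + m^2 + n^2$.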
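We may develop the most general solution of Eq. (3.7) by taking a linear combination of solutions $\psi_{lmn}$,
$$\Psi = \sum_{l,m,n} a_{lmn}\psi_{lmn}. \tag{3.14}$$
The constant coefficients $a_{lmn}$ are finally chosen to permit $\Psi$ to satisfy the boundary conditions of the problem.

Circular Cylindrical Coordinates

With our unknown function $\psi$ dependent on $\rho$, $\varphi$, and $z$, the Helmholtz equation becomes
$$\nabla^2\psi(\rho, \varphi, z) + k^2\psi(\rho, \varphi, z) = 0, \tag{3.15}$$
or
$$\frac{1}{\rho}\frac{\partial}{\partial\rho}\left(\rho\frac{\partial\psi}{\partial\rho}\right) + \frac{1}{\rho^2}\frac{\partial^2\psi}{\partial\varphi^2} + \frac{\partial^2\psi}{\partial z^2} + k^2\psi = 0. \tag{3.16}$$
As before, we assume a factored form for $\psi$,
$$\psi(\rho, \varphi, z) = P(\rho)\Phi(\varphi)Z(z). \tag{3.17}$$
Substituting into Eq. (3.16), we have
$$\frac{\Phi Z}{\rho}\frac{d}{d\rho}\left(\rho\frac{dP}{d\rho}\right) + \frac{PZ}{\rho^2}\frac{d^2\Phi}{d\varphi^2} + P\Phi\frac{d^2Z}{dz^2} + k^2P\Phi Z = 0. \tag{3.18}$$
All the partial derivatives have become ordinary derivatives. Dividing by $P\Phi Z$ and moving the $z$ derivative to the right-hand side yields
$$\frac{1}{\rho P}\frac{d}{d\rho}\left(\rho\frac{dP}{d\rho}\right) + \frac{1}{\rho^2\Phi}\frac{d^2\Phi}{d\varphi^2} + k^2 = -\frac{1}{Z}\frac{d^2Z}{dz^2}. \tag{3.19}$$
A function of $z$ on the right is equated to a function of $\rho$ and $\varphi$ on the left, so we set each side equal to a constant, $-l^2$. Then
$$\frac{d^2Z}{dz^2} = l^2Z \tag{3.20}$$
and
$$\frac{1}{\rho P}\frac{d}{d\rho}\left(\rho\frac{dP}{d\rho}\right) + \frac{1}{\rho^2\Phi}\frac{d^2\Phi}{d\varphi^2} + k^2 = -l^2. \tag{3.21}$$
Setting $k^2 + l^2 = n^2$, multiplying by $\rho^2$, and rearranging terms, we obtain
$$\frac{\rho}{P}\frac{d}{d\rho}\left(\rho\frac{dP}{d\rho}\right) + n^2\rho^2 = -\frac{1}{\Phi}\frac{d^2\Phi}{d\varphi^2}. \tag{3.22}$$
We may set the right-hand side equal to $m^2$, so that
$$\frac{d^2\Phi}{d\varphi^2} = -m^2\Phi. \tag{3.23}$$
Finally, for the $\rho$ dependence we have
$$\rho\frac{d}{d\rho}\left(\rho\frac{dP}{d\rho}\right) + (n^2\rho^2 - m^2)P = 0. \tag{3.24}$$
This is Bessel's differential equation. The solutions and their properties are presented in Chapter 6. The separation of variables of Laplace's equation in parabolic coordinates also gives rise to Bessel's equation. It may be noted that the Bessel equation is notorious for the variety of disguises it may assume. For an extensive tabulation of possible forms the reader is referred to Tables of Functions by Jahnke and Emde.

The original Helmholtz equation, a three-dimensional partial differential equation, has been replaced by three ordinary differential equations, Eqs. (3.20), (3.23), and (3.24). A solution of the Helmholtz equation is
$$\psi(\rho, \varphi, z) = P(\rho)\Phi(\varphi)Z(z). \tag{3.25}$$
Identifying the specific $P$, $\Phi$, $Z$ solutions by subscripts, we see that the most general solution of the Helmholtz equation is a linear combination of the product solutions:
$$\Psi(\rho, \varphi, z) = \sum_{m,n} a_{mn}P_{mn}(\rho)\Phi_m(\varphi)Z_n(z). \tag{3.26}$$

Spherical Polar Coordinates

Let us try to separate the Helmholtz equation, again with $k^2$ constant, in spherical polar coordinates:
$$\frac{1}{r^2\sin\theta}\left[\sin\theta\frac{\partial}{\partial r}\left(r^2\frac{\partial\psi}{\partial r}\right) + \frac{\partial}{\partial\theta}\left(\sin\theta\frac{\partial\psi}{\partial\theta}\right) + \frac{1}{\sin\theta}\frac{\partial^2\psi}{\partial\varphi^2}\right] = -k^2\psi. \tag{3.27}$$
Now, in analogy with Eq. (3.7a), we try
$$\psi(r, \theta, \varphi) = R(r)\Theta(\theta)\Phi(\varphi). \tag{3.28}$$
By substituting back into Eq. (3.27) and dividing by $R\Theta\Phi$, we have
$$\frac{1}{Rr^2}\frac{d}{dr}\left(r^2\frac{dR}{dr}\right) + \frac{1}{\Theta r^2\sin\theta}\frac{d}{d\theta}\left(\sin\theta\frac{d\Theta}{d\theta}\right) + \frac{1}{\Phi r^2\sin^2\theta}\frac{d^2\Phi}{d\varphi^2} = -k^2. \tag{3.29}$$
Note that all derivatives are now ordinary derivatives rather than partials. By multiplying by $r^2\sin^2\theta$, we can isolate $(1/\Phi)(d^2\Phi/d\varphi^2)$ to obtain
$$\frac{1}{\Phi}\frac{d^2\Phi}{d\varphi^2} = r^2\sin^2\theta\left[-k^2 - \frac{1}{r^2R}\frac{d}{dr}\left(r^2\frac{dR}{dr}\right) - \frac{1}{r^2\sin\theta\,\Theta}\frac{d}{d\theta}\left(\sin\theta\frac{d\Theta}{d\theta}\right)\right]. \tag{3.30}$$
Equation (3.30) relates a function of $\varphi$ alone to a function of $r$ and $\theta$ alone. Since $r$, $\theta$, and $\varphi$ are independent variables, we equate each side of Eq. (3.30) to a constant. Here a little consideration can simplify the later analysis. In almost all physical problems $\varphi$ will appear as an azimuth angle. This suggests a periodic solution rather than an exponential. With this in mind, let us use $-m^2$ as the separation constant. Any constant will do, but this one will make life a little easier. Then
$$\frac{1}{\Phi}\frac{d^2\Phi(\varphi)}{d\varphi^2} = -m^2 \tag{3.31}$$
and
$$\frac{1}{r^2R}\frac{d}{dr}\left(r^2\frac{dR}{dr}\right) + \frac{1}{r^2\sin\theta\,\Theta}\frac{d}{d\theta}\left(\sin\theta\frac{d\Theta}{d\theta}\right) - \frac{m^2}{r^2\sin^2\theta} = -k^2. \tag{3.32}$$
Multiplying Eq. (3.32) by $r^2$ and rearranging terms, we obtain
$$\frac{1}{R}\frac{d}{dr}\left(r^2\frac{dR}{dr}\right) + r^2k^2 = -\frac{1}{\sin\theta\,\Theta}\frac{d}{d\theta}\left(\sin\theta\frac{d\Theta}{d\theta}\right) + \frac{m^2}{\sin^2\theta}. \tag{3.33}$$
Again, the variables are separated. We equate each side to a constant $Q$ and finally obtain
$$\frac{1}{\sin\theta}\frac{d}{d\theta}\left(\sin\theta\frac{d\Theta}{d\theta}\right) - \frac{m^2}{\sin^2\theta}\Theta + Q\Theta = 0, \tag{3.34}$$
$$\frac{1}{r^2}\frac{d}{dr}\left(r^2\frac{dR}{dr}\right) + k^2R - \frac{QR}{r^2} = 0. \tag{3.35}$$
Once more we have replaced a partial differential equation of three variables by three ordinary differential equations.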
Eq. (3.34) is identified as the associated Legendre equation, in which the constant $Q$ becomes $l(l+1)$; $l$ is an integer. If $k^2$ is a (positive) constant, Eq. (3.35) becomes the spherical Bessel equation. Again, our most general solution may be written
$$\psi_{Qm}(r, \theta, \varphi) = \sum_{Q,m} R_Q(r)\Theta_{Qm}(\theta)\Phi_m(\varphi). \tag{3.36}$$
The restriction that $k^2$ be constant is unnecessarily severe. The separation process will be possible for $k^2$ as general as
$$k^2 = f(r) + \frac{1}{r^2}g(\theta) + \frac{1}{r^2\sin^2\theta}h(\varphi) + k'^2. \tag{3.37}$$
In the hydrogen atom problem, one of the most important examples of the Schrödinger wave equation with a closed-form solution, we have $k^2 = f(r)$. Equation (3.35) for the hydrogen atom becomes the associated Laguerre equation.

The great importance of this separation of variables in spherical polar coordinates stems from the fact that the case $k^2 = k^2(r)$ covers a tremendous amount of physics: a great deal of the theories of gravitation, electrostatics, atomic physics, and nuclear physics. And with $k^2 = k^2(r)$, the angular dependence is isolated in Eqs. (3.31) and (3.34), which can be solved exactly.

Finally, as an illustration of how the constant $m$ in Eq. (3.31) is restricted, we note that $\varphi$ in cylindrical and spherical polar coordinates is an azimuth angle. If this is a classical problem, we shall certainly require that the azimuthal solution $\Phi(\varphi)$ be single valued, that is,
$$\Phi(\varphi + 2\pi) = \Phi(\varphi). \tag{3.38}$$
This is equivalent to requiring the azimuthal solution to be periodic in $\varphi$, with $2\pi$ an integer multiple of its period. Therefore $m$ must be an integer. Which integer it is depends on the details of the problem. Whenever a coordinate corresponds to an axis of translation or to an azimuth angle, the separated equation always has the form
$$\frac{d^2\Phi(\varphi)}{d\varphi^2} = -m^2\Phi(\varphi)$$
for $\varphi$, the azimuth angle, and
$$\frac{d^2Z(z)}{dz^2} = \pm a^2Z(z) \tag{3.39}$$
for $z$, an axis of translation in one of the cylindrical coordinate systems. The solutions, of course, are $\sin az$ and $\cos az$ for $-a^2$ and the corresponding hyperbolic functions (or exponentials) $\sinh az$ and $\cosh az$ for $+a^2$.
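As a hedged numerical illustration of the radial equation (3.35) with $Q = l(l+1)$, the sketch below checks that the spherical Bessel function $j_1(kr) = \sin(kr)/(kr)^2 - \cos(kr)/(kr)$ satisfies it for $l = 1$. It uses the identity $\frac{1}{r^2}\frac{d}{dr}\left(r^2\frac{dR}{dr}\right) = R'' + \frac{2}{r}R'$. The value of $k$, the sample radii, the step size, and the function names are assumptions made for this example.

```python
import math

def j1(x):
    """Spherical Bessel function j_1(x) = sin(x)/x**2 - cos(x)/x."""
    return math.sin(x) / x**2 - math.cos(x) / x

def radial_residual(R, r, k, Q, h=1e-3):
    """Central-difference estimate of R'' + (2/r) R' + (k^2 - Q/r^2) R at radius r."""
    d1 = (R(r + h) - R(r - h)) / (2.0 * h)
    d2 = (R(r + h) - 2.0 * R(r) + R(r - h)) / h**2
    return d2 + 2.0 * d1 / r + (k**2 - Q / r**2) * R(r)

k = 1.5                      # assumed wave number for the illustration
l = 1
Q = l * (l + 1)              # separation constant Q = l(l+1)

def R(r):
    return j1(k * r)

residuals = [radial_residual(R, r, k, Q) for r in (0.8, 2.0, 5.0)]
# With the wrong separation constant (l = 2 gives Q = 6) the equation is not satisfied.
residual_wrong_Q = radial_residual(R, 2.0, k, 6)
print(residuals, residual_wrong_Q)
```

For the matching angular factor, $\Theta = \cos\theta$ with $m = 0$ satisfies Eq. (3.34) with the same $Q = 2$, as direct substitution shows.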
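Table: Solutions in Circular Cylindrical Coordinates*

$$\psi = \sum_{m,\alpha} a_{m\alpha}\psi_{m\alpha}, \qquad \nabla^2\psi = 0$$

a. $\psi_{m\alpha} = \begin{Bmatrix} J_m(\alpha\rho) \\ N_m(\alpha\rho) \end{Bmatrix}\begin{Bmatrix} \cos m\varphi \\ \sin m\varphi \end{Bmatrix}\begin{Bmatrix} e^{-\alpha z} \\ e^{\alpha z} \end{Bmatrix}$

b. $\psi_{m\alpha} = \begin{Bmatrix} I_m(\alpha\rho) \\ K_m(\alpha\rho) \end{Bmatrix}\begin{Bmatrix} \cos m\varphi \\ \sin m\varphi \end{Bmatrix}\begin{Bmatrix} \cos\alpha z \\ \sin\alpha z \end{Bmatrix}$

c. If $\alpha = 0$ (no $z$-dependence), $\psi_m = \begin{Bmatrix} \rho^m \\ \rho^{-m} \end{Bmatrix}\begin{Bmatrix} \cos m\varphi \\ \sin m\varphi \end{Bmatrix}$

* The radial functions $J_m(\alpha\rho)$, $N_m(\alpha\rho)$, $I_m(\alpha\rho)$, and $K_m(\alpha\rho)$ are treated in Chapter 6.

For the Helmholtz and the diffusion equation the constant $\pm k^2$ is added to the separation constant $\pm\alpha^2$ to define a new parameter $\gamma^2$ or $-\gamma^2$. For the choice $+\gamma^2$ (with $\gamma^2 > 0$) we get $J_m(\gamma\rho)$ and $N_m(\gamma\rho)$. For the choice $-\gamma^2$ (with $\gamma^2 > 0$) we get $I_m(\gamma\rho)$ and $K_m(\gamma\rho)$, as previously.

3.4 Singular Points

Let us consider a general second-order homogeneous DE (in $y$),
$$y'' + P(x)y' + Q(x)y = 0, \tag{3.40}$$
where $y' = dy/dx$. If $P(x)$ and $Q(x)$ remain finite at $x = x_0$, the point $x = x_0$ is an ordinary point. However, if either $P(x)$ or $Q(x)$ (or both) diverges as $x \to x_0$, then $x_0$ is a singular point. Using Eq. (3.40), we are able to distinguish between two kinds of singular points.

(1) If either $P(x)$ or $Q(x)$ diverges as $x \to x_0$ but $(x - x_0)P(x)$ and $(x - x_0)^2Q(x)$ remain finite, then $x_0$ is called a regular or nonessential singular point.
(2) If either $(x - x_0)P(x)$ or $(x - x_0)^2Q(x)$ still diverges as $x \to x_0$, then $x_0$ is labeled an irregular or essential singularity.

These definitions hold for all finite values of $x_0$. The analysis of $x \to \infty$ is similar to the treatment of functions of a complex variable. We set $x = 1/z$, substitute into the DE, and then let $z \to 0$. By changing variables in the derivatives, we have
$$\frac{dy(x)}{dx} = \frac{dy(z^{-1})}{dz}\frac{dz}{dx} = -\frac{1}{x^2}\frac{dy(z^{-1})}{dz} = -z^2\frac{dy(z^{-1})}{dz}, \tag{3.41}$$
$$\frac{d^2y(x)}{dx^2} = \frac{d}{dz}\left[\frac{dy(x)}{dx}\right]\frac{dz}{dx} = (-z^2)\left[-2z\frac{dy(z^{-1})}{dz} - z^2\frac{d^2y(z^{-1})}{dz^2}\right] = 2z^3\frac{dy(z^{-1})}{dz} + z^4\frac{d^2y(z^{-1})}{dz^2}. \tag{3.42}$$
Using these results, we transform Eq. (3.40) into
$$z^4\frac{d^2y}{dz^2} + \left[2z^3 - z^2P(z^{-1})\right]\frac{dy}{dz} + Q(z^{-1})y = 0. \tag{3.43}$$
The behavior at $x = \infty$ ($z = 0$) then depends on the behavior of the new coefficients
$$\tilde{P}(z) = \frac{2z - P(z^{-1})}{z^2} \quad\text{and}\quad \tilde{Q}(z) = \frac{Q(z^{-1})}{z^4}$$
as $z \to 0$. If these two expressions remain finite, the point $x = \infty$ is an ordinary point.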
If they diverge no more rapidly than $1/z$ and $1/z^2$, respectively, $x = \infty$ is a regular singular point; otherwise it is an irregular singular point.

Example

Bessel's eq. is
$$x^2y'' + xy' + (x^2 - n^2)y = 0.$$
Comparing it with Eq. (3.40), we have
$$P(x) = \frac{1}{x}, \qquad Q(x) = 1 - \frac{n^2}{x^2},$$
which shows that the point $x = 0$ is a regular singularity. As $x \to \infty$ ($z \to 0$), from Eq. (3.43) we have the coefficients
$$\tilde{P}(z) = \frac{1}{z} \quad\text{and}\quad \tilde{Q}(z) = \frac{1 - n^2z^2}{z^4}.$$
Since $\tilde{Q}(z)$ diverges as $z^{-4}$, the point $x = \infty$ is an irregular or essential singularity.

We list, in Table 3.4, several typical ODEs and their singular points.

Table 3.4

| Equation | Regular singularity, $x =$ | Irregular singularity, $x =$ |
|---|---|---|
| 1. Hypergeometric: $x(x-1)y'' + [(1+a+b)x - c]y' + aby = 0$ | $0, 1, \infty$ | none |
| 2. Legendre: $(1-x^2)y'' - 2xy' + l(l+1)y = 0$ | $-1, 1, \infty$ | none |
| 3. Chebyshev: $(1-x^2)y'' - xy' + n^2y = 0$ | $-1, 1, \infty$ | none |
| 4. Confluent hypergeometric: $xy'' + (c-x)y' - ay = 0$ | $0$ | $\infty$ |
| 5. Bessel: $x^2y'' + xy' + (x^2-n^2)y = 0$ | $0$ | $\infty$ |
| 6. Laguerre: $xy'' + (1-x)y' + ay = 0$ | $0$ | $\infty$ |
| 7. Simple harmonic oscillator: $y'' + \omega^2y = 0$ | none | $\infty$ |
| 8. Hermite: $y'' - 2xy' + 2\alpha y = 0$ | none | $\infty$ |

3.5 Series Solutions

In this section, we develop a method of series expansion for obtaining one solution of the linear, second-order, homogeneous DE. This method will always work, provided the point of expansion is no worse than a regular singular point. In physics this very gentle condition is almost always satisfied.

A linear, second-order, homogeneous ODE may be written in the form
$$y'' + P(x)y' + Q(x)y = 0.$$
In this section we develop (at least) one solution. In the next section we develop a second, independent solution and prove that no third, independent solution exists. Therefore, the most general solution may be written as
$$y = c_1y_1(x) + c_2y_2(x).$$
Our physical problem may lead to a nonhomogeneous, linear, second-order DE,
$$y'' + P(x)y' + Q(x)y = F(x).$$
$F(x)$ represents a source or driving force. A specific solution of this eq., $y_p$, could be obtained by some special techniques. Obviously, we may add to $y_p$ any solution of the corresponding homogeneous eq. Hence,
$$y(x) = c_1y_1(x) + c_2y_2(x) + y_p(x).$$
The constants $c_1$ and $c_2$ will eventually be fixed by boundary conditions.

For the present, we assume $F(x) = 0$, and we attempt to develop a solution of the eq. by substituting in a power series with undetermined coefficients. Also available as a parameter is the power of the lowest nonvanishing term of the series. To illustrate, we apply the method to two important DEs. First, the linear oscillator eq.,
$$y'' + \omega^2y = 0, \tag{3.44}$$
with known solutions $y = \sin\omega x$, $\cos\omega x$. We try
$$y(x) = x^k(a_0 + a_1x + a_2x^2 + \cdots) = \sum_{\lambda=0}^{\infty}a_\lambda x^{k+\lambda}, \qquad a_0 \neq 0,$$
with $k$ and $a_\lambda$ still undetermined. Note that $k$ need not be an integer. By differentiating twice, we obtain
$$y'' = \sum_{\lambda=0}^{\infty}a_\lambda(k+\lambda)(k+\lambda-1)x^{k+\lambda-2}.$$
By substituting into Eq. (3.44), we have
$$\sum_{\lambda=0}^{\infty}a_\lambda(k+\lambda)(k+\lambda-1)x^{k+\lambda-2} + \omega^2\sum_{\lambda=0}^{\infty}a_\lambda x^{k+\lambda} = 0. \tag{3.45}$$
From the uniqueness of power series, the coefficients of each power of $x$ on the LHS must vanish individually. The lowest power is $x^{k-2}$, for $\lambda = 0$ in the first summation. The requirement that this coefficient vanish yields
$$a_0k(k-1) = 0.$$
Since, by definition, $a_0 \neq 0$, we have
$$k(k-1) = 0.$$
This eq., coming from the coefficient of the lowest power of $x$, is called the indicial equation. The indicial eq. and its roots are of critical importance to our analysis. Clearly, in this example, we must require either $k = 0$ or $k = 1$. If $k = 1$, the coefficient $a_1(k+1)k$ of $x^{k-1}$ must vanish, so that $a_1 = 0$; if $k = 0$, this coefficient vanishes identically and $a_1$ is arbitrary.

Now, we return to Eq. (3.45) and demand that the remaining net coefficients vanish. We set $\lambda = j + 2$ in the first summation and $\lambda' = j$ in the second. This results in
$$a_{j+2}(k+j+2)(k+j+1) + \omega^2a_j = 0,$$
or
$$a_{j+2} = -a_j\frac{\omega^2}{(k+j+2)(k+j+1)}.$$
This is a two-term recurrence relation. Given $a_j$, we may compute $a_{j+2}$ and then $a_{j+4}$, $a_{j+6}$, and so on, as far as desired. We note that, for this example, if we start with $a_0$, the above eq. leads to $a_2$, $a_4$, and so on, and ignores $a_1$, $a_3$, $a_5$, and so on. Since $a_1$ is arbitrary if $k = 0$ and necessarily zero if $k = 1$, let us set it equal to zero, and then $a_3 = a_5 = \cdots = 0$.

We first try the solution $k = 0$.
The recurrence relation becomes
$$a_{j+2} = -a_j\frac{\omega^2}{(j+2)(j+1)},$$
which leads to
$$a_2 = -a_0\frac{\omega^2}{1\cdot 2} = -\frac{\omega^2}{2!}a_0, \qquad a_4 = -a_2\frac{\omega^2}{3\cdot 4} = +\frac{\omega^4}{4!}a_0, \qquad \ldots, \qquad a_{2n} = (-1)^n\frac{\omega^{2n}}{(2n)!}a_0.$$
So our solution is
$$y(x)_{k=0} = a_0\left[1 - \frac{(\omega x)^2}{2!} + \frac{(\omega x)^4}{4!} - \cdots\right] = a_0\sum_{n=0}^{\infty}(-1)^n\frac{(\omega x)^{2n}}{(2n)!} = a_0\cos(\omega x).$$
If we choose the indicial eq. root $k = 1$, the recurrence relation becomes
$$a_{j+2} = -a_j\frac{\omega^2}{(j+3)(j+2)}.$$
Again, we have
$$a_{2n} = (-1)^n\frac{\omega^{2n}}{(2n+1)!}a_0.$$
For this choice, $k = 1$, we obtain
$$y(x)_{k=1} = a_0x\left[1 - \frac{(\omega x)^2}{3!} + \frac{(\omega x)^4}{5!} - \cdots\right] = \frac{a_0}{\omega}\sum_{n=0}^{\infty}(-1)^n\frac{(\omega x)^{2n+1}}{(2n+1)!} = \frac{a_0}{\omega}\sin(\omega x).$$
This series substitution, known as Frobenius' method, has given us two series solutions of the linear oscillator eq. However, two points must be strongly emphasized:

(1) The series solution should be substituted back into the DE, to see if it works, as a precaution against algebraic and logical errors.
(2) The acceptability of a series solution depends on its convergence (including asymptotic convergence).

Expansion about $x_0$

It is perfectly possible to write
$$y(x) = \sum_{\lambda=0}^{\infty}a_\lambda(x - x_0)^{k+\lambda}, \qquad a_0 \neq 0.$$
Indeed, for the Legendre, Chebyshev, and hypergeometric eqs. the choice $x_0 = 1$ has some advantages. The point $x_0$ should not be chosen at an essential singularity, or our Frobenius method will probably fail.

Symmetry of solutions

It is interesting to note that we obtained one solution of even symmetry, $y_1(x) = y_1(-x)$, and one of odd symmetry, $y_2(x) = -y_2(-x)$. This is not just an accident but a direct consequence of the form of the DE. Writing a general DE as
$$L(x)y(x) = 0,$$
in which $L(x)$ is the differential operator, we see that for the linear oscillator eq. $L(x)$ is even; namely, $L(x) = L(-x)$. Often this is described as even parity. Whenever the differential operator has a specific parity or symmetry, either even or odd, we may interchange $+x$ and $-x$, and have
$$\pm L(x)y(-x) = 0.$$
Clearly, if $y(x)$ is a solution, $y(-x)$ is also a solution.
Then any solution may be resolved into even and odd parts,
$$y(x) = \tfrac{1}{2}[y(x) + y(-x)] + \tfrac{1}{2}[y(x) - y(-x)],$$
the first bracket giving an even solution, the second an odd solution. If we refer back to Section 3.4, we can see that the Legendre, Chebyshev, Bessel, simple harmonic oscillator, and Hermite eqs. all exhibit this even parity; i.e., their $P(x)$ is odd and $Q(x)$ even. Our emphasis on parity stems primarily from its importance in quantum mechanics. We find that wave functions usually are either even or odd, meaning that they have a definite parity. Most interactions (beta decay is the big exception) are also even or odd, and the result is that parity is conserved.

Limitations of Series Approach

This attack on the linear oscillator eq. was perhaps a bit too easy. To get some idea of what can happen, we try to solve Bessel's eq.,
$$x^2y'' + xy' + (x^2 - n^2)y = 0. \tag{3.46}$$
Again, assuming a solution of the form
$$y(x) = \sum_{\lambda=0}^{\infty}a_\lambda x^{k+\lambda},$$
we differentiate and substitute into Eq. (3.46). The result is
$$\sum_{\lambda=0}^{\infty}a_\lambda(k+\lambda)(k+\lambda-1)x^{k+\lambda} + \sum_{\lambda=0}^{\infty}a_\lambda(k+\lambda)x^{k+\lambda} + \sum_{\lambda=0}^{\infty}a_\lambda x^{k+\lambda+2} - \sum_{\lambda=0}^{\infty}a_\lambda n^2x^{k+\lambda} = 0.$$
By setting $\lambda = 0$, we get the coefficient of $x^k$,
$$a_0[k(k-1) + k - n^2] = 0,$$
which gives the indicial equation
$$k^2 - n^2 = 0,$$
with solutions $k = \pm n$. For the coefficient of $x^{k+1}$, we obtain
$$a_1[(k+1)k + k + 1 - n^2] = 0.$$
For $k = \pm n$ ($k \neq -1/2$), the bracket does not vanish and we must require $a_1 = 0$. Proceeding to the coefficient of $x^{k+j}$ for $k = n$, we set $\lambda = j$ in the 1st, 2nd, and 4th terms and $\lambda = j - 2$ in the 3rd term. By requiring the resultant coefficient of $x^{k+j}$ to vanish, we obtain
$$a_j[(n+j)(n+j-1) + (n+j) - n^2] + a_{j-2} = 0.$$
When $j$ is replaced by $j + 2$, this can be written for $j \geq 0$ as
$$a_{j+2} = -a_j\frac{1}{(j+2)(2n+j+2)}, \tag{3.47}$$
which is the desired recurrence relation. Repeated application of this recurrence relation leads to
$$a_2 = -a_0\frac{1}{2(2n+2)} = -\frac{a_0\,n!}{2^2\,1!\,(n+1)!},$$
$$a_4 = -a_2\frac{1}{4(2n+4)} = \frac{a_0\,n!}{2^4\,2!\,(n+2)!},$$
$$a_6 = -a_4\frac{1}{6(2n+6)} = -\frac{a_0\,n!}{2^6\,3!\,(n+3)!},$$
and so on, and in general
$$a_{2p} = (-1)^p\frac{a_0\,n!}{2^{2p}\,p!\,(n+p)!}.$$
Inserting these coefficients in our assumed series solution, we have
$$y(x) = a_0x^n\left[1 - \frac{n!\,x^2}{2^2\,1!\,(n+1)!} + \frac{n!\,x^4}{2^4\,2!\,(n+2)!} - \cdots\right].$$
In summation form,
$$y(x) = a_0\sum_{j=0}^{\infty}(-1)^j\frac{n!\,x^{n+2j}}{2^{2j}\,j!\,(n+j)!} = a_0\,2^n\,n!\sum_{j=0}^{\infty}(-1)^j\frac{1}{j!\,(n+j)!}\left(\frac{x}{2}\right)^{n+2j}. \tag{3.48}$$
The final summation is identified as the Bessel function $J_n(x)$.

When $k = -n$ and $n$ is not an integer, we may generate a second distinct series, to be labeled $J_{-n}(x)$. However, when $-n$ is a negative integer, trouble develops. The recurrence relation is still given by Eq. (3.47), but with $2n$ replaced by $-2n$. Then when $j + 2 = 2n$, $a_{j+2}$ blows up and we have no series solution. This catastrophe can be remedied in Eq. (3.48). Since $m! = \infty$ for a negative integer $m$ (note that $(z-1)! = z!/z$),
$$J_{-n}(x) = \sum_{j=0}^{\infty}(-1)^j\frac{1}{j!\,(j-n)!}\left(\frac{x}{2}\right)^{2j-n} = \sum_{j=0}^{\infty}(-1)^{j+n}\frac{1}{(j+n)!\,j!}\left(\frac{x}{2}\right)^{2j+n} = (-1)^nJ_n(x).$$
The second solution simply reproduces the first. We have failed to construct a second independent solution for Bessel's eq. by this series technique when $n$ is an integer.

By substituting in an infinite series, we have obtained two solutions for the linear oscillator eq. and one for Bessel's eq. (two if $n$ is not an integer). Can we always do this? Will this method always work? The answer is no!

Regular and Irregular Singularities

The success of the series substitution method depends on the roots of the indicial eq. and the degree of singularity. To understand this point clearly, consider four simple eqs.:
$$y'' - \frac{6}{x^2}y = 0, \tag{3.49a}$$
$$y'' - \frac{6}{x^3}y = 0, \tag{3.49b}$$
$$y'' + \frac{1}{x}y' - \frac{a^2}{x^2}y = 0, \tag{3.49c}$$
$$y'' + \frac{1}{x^2}y' - \frac{a^2}{x^2}y = 0. \tag{3.49d}$$
For the 1st eq., the indicial eq. is
$$k(k-1) - 6 = 0,$$
giving $k = 3, -2$. Since the eq. is homogeneous in $x$ (counting $d^2/dx^2$ as $x^{-2}$), there is no recurrence relation. However, we are left with two perfectly good solutions, $x^3$ and $x^{-2}$.

For the 2nd eq., we have $-6a_0 = 0$, with no solution at all, for we have agreed that $a_0 \neq 0$. The series substitution broke down at Eq. (3.49b), which has an irregular singular point at the origin.

Continuing with Eq. (3.49c), we have added a term $y'/x$. The indicial eq. is
$$k^2 - a^2 = 0,$$
but again, there is no recurrence relation.
The solutions are $y = x^a$, $x^{-a}$, both perfectly acceptable one-term series.

For Eq. (3.49d) ($y'/x \to y'/x^2$), the indicial eq. becomes
$$k = 0.$$
There is a recurrence relation,
$$a_{j+1} = +a_j\frac{a^2 - j(j-1)}{j+1}.$$
Unless $a$ is selected to make the series terminate, we have
$$\lim_{j\to\infty}\left|\frac{a_{j+1}}{a_j}\right| = \lim_{j\to\infty}\frac{j(j-1)}{j+1} = \infty.$$
Hence our series solution diverges for all $x \neq 0$.

Fuchs's Theorem

We can always obtain at least one power-series solution, provided we are expanding about a point that is an ordinary point or at worst a regular singular point. If we attempt an expansion about an irregular or essential singularity, our method may fail. Fortunately, the more important eqs. of mathematical physics listed in Section 3.4 have no irregular singularities in the finite plane.

SUMMARY

If we are expanding about an ordinary point or at worst about a regular singularity, the series substitution approach will yield at least one solution (Fuchs's theorem). Whether we get one or two distinct solutions depends on the roots of the indicial equation.

1. If the two roots of the indicial equation are equal, we can obtain only one solution by this series substitution method.
2. If the two roots differ by a nonintegral number, two independent solutions may be obtained.
3. If the two roots differ by an integer, the larger of the two will yield a solution. The smaller may or may not give a solution, depending on the behavior of the coefficients. In the linear oscillator equation we obtain two solutions; for Bessel's equation, only one solution.

The usefulness of the series solution for obtaining actual values of the solution (i.e., numbers) depends on the rapidity of convergence of the series and the availability of the coefficients. Many, probably most, differential equations will not yield nice simple recurrence relations for the coefficients. In general, the available series will probably be useful for $|x|$ (or $|x - x_0|$) very small.
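The two-term recurrence relations of this section are easy to sum numerically. The sketch below is only an illustration: it applies the oscillator recurrence and Eq. (3.47) with a fixed number of terms, and the function names, the term counts, and the normalization $a_0 = 1/(2^n n!)$ (chosen so that the sum equals $J_n$ according to Eq. (3.48)) are assumptions of this example.

```python
import math

def oscillator_series(omega, x, k, terms=30):
    """Frobenius series for y'' + omega^2 y = 0 with indicial root k (0 or 1), a_0 = 1."""
    a = 1.0
    total = 0.0
    for p in range(terms):
        j = 2 * p
        total += a * x ** (k + j)
        # a_{j+2} = -a_j * omega^2 / ((k + j + 2)(k + j + 1))
        a = -a * omega**2 / ((k + j + 2) * (k + j + 1))
    return total

def bessel_series(n, x, terms=40):
    """Frobenius series for Bessel's eq. with k = n (non-negative integer), Eq. (3.47)."""
    a = 1.0 / (2**n * math.factorial(n))   # a_0 such that the sum is J_n(x)
    total = 0.0
    for p in range(terms):
        j = 2 * p
        total += a * x ** (n + j)
        # a_{j+2} = -a_j / ((j + 2)(2n + j + 2))
        a = -a / ((j + 2) * (2 * n + j + 2))
    return total

omega, x = 2.0, 0.7
even_part = oscillator_series(omega, x, 0)   # compare with cos(omega * x)
odd_part = oscillator_series(omega, x, 1)    # compare with sin(omega * x) / omega
j0_at_first_zero = bessel_series(0, 2.404825557695773)
print(even_part - math.cos(omega * x), odd_part - math.sin(omega * x) / omega)
print(j0_at_first_zero)
```

For small and moderate $|x|$ a few dozen terms are ample. For large $|x|$ the alternating terms first grow before they decay, so roundoff cancellation degrades the sum, which is one reason the series is most useful near the expansion point.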
Computers can be used to determine additional series coefficients using a symbolic language such as Mathematica, Maple, or Reduce. Often, however, for numerical work a direct numerical integration will be preferred.

3.6 A Second Solution

In this section we develop two methods of obtaining a second independent solution: an integral method and a power series containing a logarithmic term. First, however, we consider the question of independence of a set of functions.

Linear Independence of Solutions

Given a set of functions $\varphi_\lambda$, the criterion for linear dependence is the existence of a relation of the form
$$\sum_\lambda k_\lambda\varphi_\lambda = 0, \tag{3.50}$$
in which not all the coefficients $k_\lambda$ are zero. On the other hand, if the only solution of Eq. (3.50) is $k_\lambda = 0$ for all $\lambda$, the set of functions $\varphi_\lambda$ is said to be linearly independent.

Let us assume that the functions $\varphi_\lambda$ are differentiable. Then, differentiating Eq. (3.50) repeatedly, we generate a set of eqs.,
$$\sum_{\lambda=1}^{n}k_\lambda\varphi_\lambda' = 0, \qquad \sum_{\lambda=1}^{n}k_\lambda\varphi_\lambda'' = 0, \qquad \ldots, \qquad \sum_{\lambda=1}^{n}k_\lambda\varphi_\lambda^{(n-1)} = 0.$$
This gives us a set of homogeneous linear eqs. in which the $k_\lambda$ are the unknown quantities. There is a solution $k_\lambda \neq 0$ only if the determinant of the coefficients of the $k_\lambda$'s vanishes:
$$\begin{vmatrix} \varphi_1 & \varphi_2 & \cdots & \varphi_n \\ \varphi_1' & \varphi_2' & \cdots & \varphi_n' \\ \vdots & \vdots & & \vdots \\ \varphi_1^{(n-1)} & \varphi_2^{(n-1)} & \cdots & \varphi_n^{(n-1)} \end{vmatrix} = 0.$$
This determinant is called the Wronskian.

1. If the Wronskian is not equal to zero, then Eq. (3.50) has no solution other than $k_\lambda = 0$. The set of functions $\varphi_\lambda$ is therefore independent.
2. If the Wronskian vanishes at isolated values of the argument, this does not necessarily prove linear dependence (unless the set of functions has only two functions). However, if the Wronskian is zero over the entire range of the variable, the functions $\varphi_\lambda$ are linearly dependent over this range.

Example: Linear Independence

The solutions of the linear oscillator eq. are $\varphi_1 = \sin\omega x$, $\varphi_2 = \cos\omega x$. The Wronskian becomes
$$\begin{vmatrix} \sin\omega x & \cos\omega x \\ \omega\cos\omega x & -\omega\sin\omega x \end{vmatrix} = -\omega \neq 0,$$
and $\varphi_1$ and $\varphi_2$ are therefore linearly independent. For just two functions this means that one is not a multiple of the other, which is obviously true in this case.
You know that $\sin x = \pm(1 - \cos^2 x)^{1/2}$, but this is not a linear relation.

Example: Linear Dependence

Consider the solutions of the one-dimensional diffusion eq.: $y'' - y = 0$. We have $\varphi_1 = e^{x}$ and $\varphi_2 = e^{-x}$, and we add $\varphi_3 = \cosh x$, also a solution. Then

$$\begin{vmatrix} e^{x} & e^{-x} & \cosh x \\ e^{x} & -e^{-x} & \sinh x \\ e^{x} & e^{-x} & \cosh x \end{vmatrix} = 0,$$

because the first and third rows are identical. Here, the functions are linearly dependent, and indeed we have $e^{x} + e^{-x} - 2\cosh x = 0$ with $k_\lambda \neq 0$.

A Second Solution

Returning to our 2nd-order ODE

$$y'' + P(x)\,y' + Q(x)\,y = 0,$$

let $y_1$ and $y_2$ be two independent solutions. Then the Wronskian is $W = y_1 y_2' - y_1' y_2$. By differentiating the Wronskian, we obtain

$$\begin{aligned} W' &= y_1' y_2' + y_1 y_2'' - y_1'' y_2 - y_1' y_2' \\ &= y_1\left[-P(x)\,y_2' - Q(x)\,y_2\right] - y_2\left[-P(x)\,y_1' - Q(x)\,y_1\right] \\ &= -P(x)\,(y_1 y_2' - y_1' y_2). \end{aligned} \qquad (3.51)$$

In the special case that $P(x) = 0$, i.e.

$$y'' + Q(x)\,y = 0, \qquad (3.52)$$

we have $W = y_1 y_2' - y_1' y_2 = \text{constant}$. Since our original eq. is homogeneous, we may multiply the solutions $y_1$ and $y_2$ by whatever constants we wish and arrange to have $W = 1$ (or $-1$). This case, $P(x) = 0$, appears more frequently than might be expected. ($\nabla^2$ in Cartesian coordinates and the radial dependence of $\nabla^2(r\psi)$ in spherical polar coordinates lack a first derivative.) Finally, every linear 2nd-order ODE can be transformed into an eq. of the form of Eq. (3.52).

For the general case, let us now assume that we have one solution by a series substitution (or by guessing). We now proceed to develop a 2nd, independent solution for which $W \neq 0$. Rewriting Eq. (3.51) as

$$\frac{dW}{W} = -P(x_1)\,dx_1,$$

we integrate from $x_1 = a$ to $x_1 = x$ to obtain

$$\ln\frac{W(x)}{W(a)} = -\int_a^{x} P(x_1)\,dx_1,$$

or

$$W(x) = W(a)\exp\left[-\int_a^{x} P(x_1)\,dx_1\right]. \qquad (3.53)$$

But

$$W(x) = y_1 y_2' - y_1' y_2 = y_1^2\,\frac{d}{dx}\left(\frac{y_2}{y_1}\right). \qquad (3.54)$$

By combining Eqs. (3.53) and (3.54), we have

$$\frac{d}{dx}\left(\frac{y_2}{y_1}\right) = W(a)\,\frac{\exp\left[-\int_a^{x} P(x_1)\,dx_1\right]}{y_1^2}. \qquad (3.55)$$

Finally, by integrating Eq. (3.55) from $x_2 = b$ to $x_2 = x$ we get

$$y_2(x) = y_1(x)\,W(a)\int_b^{x} \frac{\exp\left[-\int_a^{x_2} P(x_1)\,dx_1\right]}{\left[y_1(x_2)\right]^2}\,dx_2.$$

Here $a$ and $b$ are arbitrary constants, and a term $y_1(x)\,y_2(b)/y_1(b)$ has been dropped, for it leads to nothing new.
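The integral formula for $y_2$ can be checked numerically. The following is a minimal Python sketch, not a production integrator: it evaluates the nested integrals with a composite Simpson rule and applies the formula to an illustrative equation that is not taken from the text, $y'' - 2y' + y = 0$, for which $P(x) = -2$ and $y_1 = e^{x}$ is a known solution. The helper names `simpson` and `second_solution` and the choices $a = b = 0$, $W(a) = 1$ are assumptions made for the example.

```python
import math

def simpson(f, lo, hi, n=200):
    """Composite Simpson rule on [lo, hi] with n (even) subintervals."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(lo + i * h)
    return total * h / 3

def second_solution(y1, P, x, a, b, W_a=1.0):
    """y2(x) = y1(x) W(a) * int_b^x exp(-int_a^{x2} P dx1) / y1(x2)^2 dx2."""
    def integrand(x2):
        return math.exp(-simpson(P, a, x2)) / y1(x2) ** 2
    return y1(x) * W_a * simpson(integrand, b, x)

# Illustrative equation (an assumption, not from the text):
# y'' - 2y' + y = 0, so P(x) = -2, with known solution y1 = exp(x).
y1 = math.exp
P = lambda x: -2.0
a, b = 0.0, 0.0
x = 1.5

y2_numeric = second_solution(y1, P, x, a, b)
y2_exact = x * math.exp(x)  # closed form of the formula for a = b = 0, W(a) = 1
print(y2_numeric, y2_exact)
```

For this choice the exponential factor $e^{2x_2}$ cancels $y_1^2$, so the formula gives $y_2 = x\,e^{x}$, the familiar second solution for a repeated characteristic root. Choosing a different $b$ only adds a multiple of $y_1$ to $y_2$.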
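As mentioned before, we can set $W(a) = 1$ and write

$$y_2(x) = y_1(x)\int^{x} \frac{\exp\left[-\int^{x_2} P(x_1)\,dx_1\right]}{\left[y_1(x_2)\right]^2}\,dx_2. \qquad (3.56)$$

In the important special case of $P(x) = 0$, the above eq. reduces to

$$y_2(x) = y_1(x)\int^{x} \frac{dx_2}{\left[y_1(x_2)\right]^2}.$$

Now we can take one known solution and, by integrating, generate a second independent solution.

Example: A Second Solution for the Linear Oscillator Eq.

From $d^2y/dx^2 + y = 0$ with $P(x) = 0$, let one solution be $y_1 = \sin x$. Then

$$y_2(x) = \sin x\int^{x} \frac{dx_2}{\sin^2 x_2} = \sin x\,(-\cot x) = -\cos x.$$

Series Form of the Second Solution* (Optional Reading)

Further insight may be obtained by the following sequence of operations:

1. Express $P(x)$ and $Q(x)$ as
$$P(x) = \sum_{i=-1}^{\infty} p_i x^{i}, \qquad Q(x) = \sum_{j=-2}^{\infty} q_j x^{j}.$$
2. Develop the first few terms of a power-series solution, as in Section 3.5.
3. Using this solution as $y_1$, obtain $y_2$ with Eq. (3.56), integrating term by term.

Proceeding with Step 1, we have

$$y'' + (p_{-1}x^{-1} + p_0 + p_1 x + \cdots)\,y' + (q_{-2}x^{-2} + q_{-1}x^{-1} + \cdots)\,y = 0, \qquad (3.57)$$

in which $x = 0$ is at worst a regular singular point. If $p_{-1} = q_{-1} = q_{-2} = 0$, it reduces to an ordinary point. Substituting $y = \sum_{\lambda=0}^{\infty} a_\lambda x^{k+\lambda}$ (Step 2), we obtain

$$\sum_{\lambda=0}^{\infty} (k+\lambda)(k+\lambda-1)\,a_\lambda x^{k+\lambda-2} + \sum_{i=-1}^{\infty} p_i x^{i}\sum_{\lambda=0}^{\infty} (k+\lambda)\,a_\lambda x^{k+\lambda-1} + \sum_{j=-2}^{\infty} q_j x^{j}\sum_{\lambda=0}^{\infty} a_\lambda x^{k+\lambda} = 0.$$

Assuming that $p_{-1} \neq 0$ and $q_{-2} \neq 0$, our indicial eq. is

$$k(k-1) + p_{-1}k + q_{-2} = 0,$$

which sets the net coefficient of $x^{k-2}$ equal to zero. We denote the two roots by $k = \alpha$ and $k = \alpha - n$, where $n$ is zero or a positive integer. (If $n$ is not an integer, we expect two independent series solutions by the method of Section 3.5 and there is no problem.) Then

$$(k - \alpha)(k - \alpha + n) = 0, \qquad\text{or}\qquad k^2 + (n - 2\alpha)k + \alpha(\alpha - n) = 0.$$

Comparing the coefficient of $k$ with that in the indicial eq., we have

$$p_{-1} - 1 = n - 2\alpha. \qquad (3.58)$$

The known series solution corresponding to the larger root $k = \alpha$ may be written as

$$y_1 = x^{\alpha}\sum_{\lambda=0}^{\infty} a_\lambda x^{\lambda}.$$

Substituting this series solution into Eq. (3.56) (Step 3) yields

$$y_2(x) = y_1(x)\int^{x} \frac{\exp\left(-\int_a^{x_2}\sum_{i=-1}^{\infty} p_i x_1^{i}\,dx_1\right)}{x_2^{2\alpha}\left(\sum_{\lambda=0}^{\infty} a_\lambda x_2^{\lambda}\right)^{2}}\,dx_2, \qquad (3.59)$$

where the solutions $y_1$ and $y_2$ have been normalized so that the Wronskian $W(a) = 1$. Tackling the exponential factor first, we have

$$\int_a^{x_2}\sum_{i=-1}^{\infty} p_i x_1^{i}\,dx_1 = p_{-1}\ln x_2 + \sum_{k=0}^{\infty}\frac{p_k}{k+1}\,x_2^{k+1} + f(a).$$

Hence

$$\begin{aligned} \exp\left(-\int_a^{x_2}\sum_{i=-1}^{\infty} p_i x_1^{i}\,dx_1\right) &= \exp[-f(a)]\;x_2^{-p_{-1}}\exp\left(-\sum_{k=0}^{\infty}\frac{p_k}{k+1}\,x_2^{k+1}\right) \\ &= \exp[-f(a)]\;x_2^{-p_{-1}}\left[1 - \sum_{k=0}^{\infty}\frac{p_k}{k+1}\,x_2^{k+1} + \frac{1}{2!}\left(\sum_{k=0}^{\infty}\frac{p_k}{k+1}\,x_2^{k+1}\right)^{2} - \cdots\right]. \end{aligned}$$

This final series expansion of the exponential is certainly convergent if the original expansion of the coefficient $P(x)$ was convergent. The denominator in Eq. (3.59) may be handled by writing

$$\left[x_2^{2\alpha}\left(\sum_{\lambda=0}^{\infty} a_\lambda x_2^{\lambda}\right)^{2}\right]^{-1} = x_2^{-2\alpha}\left(\sum_{\lambda=0}^{\infty} a_\lambda x_2^{\lambda}\right)^{-2} = x_2^{-2\alpha}\sum_{\lambda=0}^{\infty} b_\lambda x_2^{\lambda}. \qquad (3.60)$$

Neglecting constant factors that will be picked up anyway by the requirement that $W(a) = 1$, we obtain

$$y_2(x) = y_1(x)\int^{x} x_2^{-p_{-1}-2\alpha}\left(\sum_{\lambda=0}^{\infty} c_\lambda x_2^{\lambda}\right)dx_2. \qquad (3.61)$$

By Eq. (3.58),

$$x_2^{-p_{-1}-2\alpha} = x_2^{-n-1},$$

and we have assumed here that $n$ is an integer. Substituting this result into Eq. (3.61), we obtain

$$y_2(x) = y_1(x)\int^{x}\left(c_0 x_2^{-n-1} + c_1 x_2^{-n} + c_2 x_2^{-n+1} + \cdots + c_n x_2^{-1} + \cdots\right)dx_2. \qquad (3.62)$$

The integration indicated in Eq. (3.62) leads to a coefficient of $y_1(x)$ consisting of two parts:

1. A power series starting with $x^{-n}$.
2. A logarithm term from the integration of $x^{-1}$ (when $\lambda = n$). This term always appears when $n$ is an integer, unless $c_n$ fortuitously happens to vanish.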
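As an illustration of Eqs. (3.59) to (3.62), the following Python sketch works through Bessel's equation of order zero, $y'' + y'/x + y = 0$, chosen here as an example. For this equation $p_{-1} = 1$ and all other $p_i$ vanish, both indicial roots are zero (so $\alpha = 0$, $n = 0$), and the known solution is $y_1 = J_0(x) = \sum_s (-1)^s (x/2)^{2s}/(s!)^2$. The exponential factor is then proportional to $x_2^{-1}$, and the $c_\lambda$ are the coefficients of $1/y_1^2$. The helper names and the truncation order `N` are assumptions of the sketch.

```python
from fractions import Fraction
from math import factorial

N = 8  # number of power-series coefficients kept (truncation order)

def j0_coeffs(n):
    """Coefficients a_0..a_{n-1} of J0(x) = sum_s (-1)^s (x/2)^(2s) / (s!)^2."""
    a = [Fraction(0)] * n
    for s in range((n + 1) // 2):
        a[2 * s] = Fraction((-1) ** s, 4 ** s * factorial(s) ** 2)
    return a

def multiply(u, v):
    """Truncated Cauchy product of two coefficient lists of equal length."""
    return [sum(u[i] * v[m - i] for i in range(m + 1)) for m in range(len(u))]

def reciprocal(u):
    """Coefficients of 1/u(x) as a power series, assuming u[0] != 0."""
    r = [1 / u[0]]
    for m in range(1, len(u)):
        r.append(-sum(u[i] * r[m - i] for i in range(1, m + 1)) / u[0])
    return r

a = j0_coeffs(N)
c = reciprocal(multiply(a, a))  # c_lambda of Eq. (3.61); here alpha = 0

n = 0                 # difference of the two indicial roots
log_coeff = c[n]      # the x2^(-1) term integrates to c_n * ln(x)

# Every other term c_lam * x2^(lam - n - 1) integrates to
# c_lam * x^(lam - n) / (lam - n); keep the nonzero ones, keyed by exponent.
power_part = {
    lam - n: c[lam] / (lam - n)
    for lam in range(N)
    if lam != n and c[lam] != 0
}

print("coefficient of ln x:", log_coeff)
print("power-series part:", power_part)
```

With these assumptions the sketch gives $y_2(x) = J_0(x)\left[\ln x + x^2/4 + 5x^4/128 + \cdots\right]$: since $n = 0$, the power series starts at $x^0$ and the logarithm comes from $c_0 = 1$, which cannot vanish.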