
advertisement

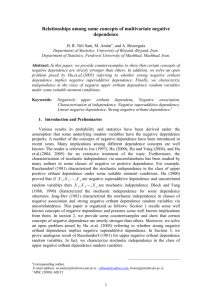

Relationships among some concepts of multivariate negative dependence

M. Amini, H. R. Nili Sani, and A. Bozorgnia

Department of Statistics, University of Birjand, Birjand, Iran.

Department of Statistics, Ferdowsi University of Mashhad, Mashhad, Iran.

Abstract: In this paper, we provide counterexamples showing that some concepts of negative dependence are strictly stronger than others. In addition, we solve an open problem posed by Hu et al. (2005), namely whether strong negative orthant dependence implies negative superadditive dependence. Finally, we characterize independence in the class of negatively upper orthant dependent random variables under suitable moment conditions.

Keywords: Negatively upper orthant dependent, Negative association, Characterization of independence, Negative superadditive dependence, Linear negative dependence, Strong negative orthant dependence.

1. Introduction and Preliminaries

Various results in probability and statistics have been derived under the assumption that the underlying random variables are negatively dependent. A number of concepts of negative dependence have been introduced in recent years, and many implications among them are well known. The reader is referred to Joe (1997), Hu (2000), Hu and Yang (2004) and Hu et al. (2004, 2005) for an extensive treatment of the topic. Furthermore, the characterization of stochastic independence via uncorrelatedness has been studied by many authors in several classes of negative or positive dependence. For example, Rüschendorf (1981) characterized stochastic independence in the class of positively upper orthant dependent random variables under suitable moment conditions. Hu (2000) proved that if $X_1, X_2, \ldots, X_n$ are negatively superadditive dependent and uncorrelated, then they are stochastically independent. Block and Fang (1988, 1990) characterized stochastic independence for some dependence structures, and Joag-Dev (1983) characterized stochastic independence via uncorrelatedness in the classes of negatively associated and strongly negative orthant dependent random variables.

This paper is organized as follows. Section 1 recalls some well-known concepts of negative dependence and the known implications among them. In Section 2, we provide counterexamples showing that some concepts of negative dependence are strictly stronger than others. Moreover, we solve an open problem posed by Hu et al. (2005), namely whether strong negative orthant dependence implies negative superadditive dependence. In Section 3, we prove an analogue of the result of Rüschendorf (1981) for negatively upper orthant dependent random variables; that is, we characterize stochastic independence in this class.

Corresponding author. E-mail addresses: m-amini@ferdowsi.um.ac.ir, nilisani@yahoo.com, bozorg@math.um.ac.ir

MSC (2000): 60E15

Definition 1: A function $f:\mathbb{R}^m \to \mathbb{R}$ is supermodular if

$$f(x \wedge y) + f(x \vee y) \ge f(x) + f(y) \quad \text{for all } x, y \in \mathbb{R}^m,$$

where $x \wedge y = (\min\{x_1, y_1\}, \ldots, \min\{x_m, y_m\})$ and $x \vee y = (\max\{x_1, y_1\}, \ldots, \max\{x_m, y_m\})$.

Note that if $f$ has continuous second partial derivatives, then supermodularity of $f$ is equivalent to

$$\frac{\partial^2 f(x)}{\partial x_i\, \partial x_j} \ge 0 \quad \text{for all } 1 \le i < j \le m \text{ and } x \in \mathbb{R}^m$$

(Müller and Scarsini, 2000). Let $(X_1, X_2, \ldots, X_n)$, $n \ge 3$, be a random vector defined on a probability space $(\Omega, \mathcal{F}, P)$.
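Definition 1 can be checked numerically on a finite grid. The following is a minimal Python sketch, not part of the original paper: the helper name `is_supermodular_on_grid` and the three test functions are illustrative assumptions, and passing a grid check does not prove supermodularity on all of $\mathbb{R}^m$.

```python
from itertools import product


def is_supermodular_on_grid(f, grid, dim):
    """Check f(x ^ y) + f(x v y) >= f(x) + f(y) for all x, y in grid**dim.

    Hypothetical helper: a finite-grid check only, not a proof on R^m.
    """
    points = list(product(grid, repeat=dim))
    for x in points:
        for y in points:
            meet = tuple(min(a, b) for a, b in zip(x, y))  # componentwise minimum
            join = tuple(max(a, b) for a, b in zip(x, y))  # componentwise maximum
            if f(meet) + f(join) < f(x) + f(y):
                return False
    return True


def product_fn(x):
    # x1 * x2 has mixed second partial derivative 1 >= 0
    return x[0] * x[1]


def neg_product_fn(x):
    # -x1 * x2 has mixed second partial derivative -1 < 0
    return -x[0] * x[1]


def hinge_of_sum(x):
    # increasing convex function of the coordinate sum
    return max(sum(x) - 1, 0)


grid = range(3)  # integer grid {0, 1, 2} keeps the arithmetic exact
print(is_supermodular_on_grid(product_fn, grid, 2))
print(is_supermodular_on_grid(neg_product_fn, grid, 2))
print(is_supermodular_on_grid(hinge_of_sum, grid, 3))
```

On this grid the first and third functions pass the check and the second fails, which agrees with the mixed-derivative criterion for the two smooth functions.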

Definition 2: The random variables $X_1, X_2, \ldots, X_n$ are:

(a) (Joag-Dev and Proschan, 1982) Negatively associated (NA) if for every pair of disjoint nonempty subsets $A_1, A_2$ of $\{1, \ldots, n\}$,

$$\mathrm{Cov}\big(f_1(X_i, i \in A_1),\, f_2(X_i, i \in A_2)\big) \le 0,$$

whenever $f_1$ and $f_2$ are coordinatewise nondecreasing functions and the covariance exists.

(b) Weakly negatively associated (WNA) if for all nonnegative and nondecreasing functions $f_i$, $i = 1, 2, \ldots, n$,

$$E\Big(\prod_{i=1}^{n} f_i(X_i)\Big) \le \prod_{i=1}^{n} E\big(f_i(X_i)\big).$$

(c) Negatively upper orthant dependent (NUOD) if for all $x_1, \ldots, x_n \in \mathbb{R}$,

$$P(X_i > x_i,\ i = 1, \ldots, n) \le \prod_{i=1}^{n} P(X_i > x_i), \tag{1}$$

negatively lower orthant dependent (NLOD) if for all $x_1, \ldots, x_n \in \mathbb{R}$,

$$P(X_i \le x_i,\ i = 1, \ldots, n) \le \prod_{i=1}^{n} P(X_i \le x_i), \tag{2}$$

and negatively orthant dependent (NOD) if both (1) and (2) hold.

(d) (Hu, 2000) Negatively superadditive dependent (NSD) if

$$E\, f(X_1, X_2, \ldots, X_n) \le E\, f(Y_1, Y_2, \ldots, Y_n), \tag{3}$$

where $Y_1, Y_2, \ldots, Y_n$ are independent random variables with $X_i \stackrel{st}{=} Y_i$ for each $i$, and $f$ is a supermodular function such that the expectations in (3) exist.

(e) Linearly negative dependent (LIND) if for any disjoint subsets $A$ and $B$ of $\{1, 2, \ldots, n\}$ and $\lambda_j > 0$, $j = 1, \ldots, n$, the sums $\sum_{k \in A} \lambda_k X_k$ and $\sum_{k \in B} \lambda_k X_k$ are NA.

(f) (Joag-Dev, 1983) Strongly negative orthant dependent (SNOD) if for every set of indices $A$ in $\{1, 2, \ldots, n\}$ and for all $x \in \mathbb{R}^n$, the following three conditions hold:

$$P\Big[\bigcap_{i=1}^{n} (X_i > x_i)\Big] \le P[X_i > x_i,\ i \in A] \cdot P[X_j > x_j,\ j \in A^c],$$

$$P\Big[\bigcap_{i=1}^{n} (X_i \le x_i)\Big] \le P[X_i \le x_i,\ i \in A] \cdot P[X_j \le x_j,\ j \in A^c],$$

$$P[X_i > x_i,\ i \in A,\ X_j \le x_j,\ j \in A^c] \ge P[X_i > x_i,\ i \in A] \cdot P[X_j \le x_j,\ j \in A^c].$$
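For a random vector with finite support, conditions (1) and (2) only need to be checked at finitely many thresholds, because both sides are step functions of $(x_1, \ldots, x_n)$. A minimal Python sketch under that assumption follows; the function names and the two example distributions are hypothetical illustrations, not taken from the paper.

```python
from fractions import Fraction
from functools import reduce
from itertools import product
from operator import mul


def prob(pmf, event):
    """Probability of an event (a predicate on outcomes) under a finite pmf."""
    return sum((p for x, p in pmf.items() if event(x)), Fraction(0))


def thresholds(pmf, i):
    """Support values of coordinate i plus one value below the minimum."""
    values = sorted({x[i] for x in pmf})
    return [values[0] - 1] + values


def is_orthant_dependent(pmf, upper):
    """Check condition (1) if upper is True, else (2), at every relevant threshold."""
    n = len(next(iter(pmf)))

    def hit(value, t):
        return value > t if upper else value <= t

    for t in product(*(thresholds(pmf, i) for i in range(n))):
        joint = prob(pmf, lambda x: all(hit(x[i], t[i]) for i in range(n)))
        marginals = [prob(pmf, lambda x, i=i: hit(x[i], t[i])) for i in range(n)]
        if joint > reduce(mul, marginals):
            return False
    return True


def is_nod(pmf):
    """NOD means both the upper (1) and the lower (2) orthant conditions hold."""
    return is_orthant_dependent(pmf, True) and is_orthant_dependent(pmf, False)


third, half = Fraction(1, 3), Fraction(1, 2)
# Assumed example 1: exactly one coordinate equals 1, chosen uniformly.
one_hot = {(1, 0, 0): third, (0, 1, 0): third, (0, 0, 1): third}
# Assumed example 2: three copies of the same fair coin (positively dependent).
copies = {(0, 0, 0): half, (1, 1, 1): half}

print(is_nod(one_hot))
print(is_nod(copies))
```

The one-hot vector passes both orthant checks, while the three identical coins fail the upper one, since $P(X_1 > 0, X_2 > 0, X_3 > 0) = 1/2$ exceeds $1/8$.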

The following implications are well known.

i) If $(X_1, X_2, \ldots, X_n)$ is NA, then it is LIND and WNA, and consequently NUOD.

ii) If $(X_1, X_2, \ldots, X_n)$ is NA, then it is NSD (Christofides and Vaggelatou, 2004).

iii) If $(X_1, X_2, \ldots, X_n)$ is NSD, then it is NUOD (Hu, 2000).

iv) If $(X_1, X_2, \ldots, X_n)$ is NA, then it is SNOD, and if $(X_1, X_2, \ldots, X_n)$ is SNOD, then it is NOD (Joag-Dev, 1983).

It is also well known that some concepts of negative dependence do not imply others.

Remark 1: i) Neither of the two dependence concepts NUOD and NLOD implies the other (Bozorgnia et al., 1996).

ii) Neither NUOD nor NLOD implies NA (Joag-Dev and Proschan, 1982).

iii) NSD implies neither LIND nor NA (Hu, 2000).

iv) NSD does not imply SNOD (Hu et al., 2005).

We use the following lemma, which is important in the theory of negatively dependent random variables.

Lemma 1 (Bozorgnia et al., 1996): Let $X_1, X_2, \ldots, X_n$ be NUOD random variables and let $f_1, f_2, \ldots, f_n$ be a corresponding sequence of monotone increasing Borel functions that are continuous from the right. Then $f_1(X_1), \ldots, f_n(X_n)$ are NUOD random variables.

2. Some counterexamples

In this section, we present counterexamples showing that some concepts of negative dependence are strictly stronger than others.

Lemma 2: Neither of the two dependence concepts SNOD and LIND implies the other.

Proof: i) (LIND does not imply SNOD.) Let $(X_1, X_2, X_3)$ have the following distribution:

$$p(1,1,1) = 0.05,\quad p(1,0,0) = p(0,1,0) = 0.225,\quad p(0,0,1) = 0.22,$$

$$p(0,0,0) = 0.065,\quad p(1,1,0) = 0.08,\quad p(0,1,1) = 0.06,\quad p(1,0,1) = 0.075.$$

It can be checked that the random variables $X_1, X_2, X_3$ are LIND and also NOD, since for all $0 \le a_i < 1$, $i = 1, 2, 3$,

$$P(X_1 > a_1, X_2 > a_2, X_3 > a_3) = 0.05 \le P(X_1 > a_1) \cdot P(X_2 > a_2) \cdot P(X_3 > a_3) \approx 0.07227,$$

$$P(X_1 \le a_1, X_2 \le a_2, X_3 \le a_3) = 0.065 \le P(X_1 \le a_1) \cdot P(X_2 \le a_2) \cdot P(X_3 \le a_3) \approx 0.1984.$$

But the random variables $X_1, X_2, X_3$ are not SNOD, since for all $0 \le a_i < 1$, $i = 1, 2, 3$,

$$P\Big[\bigcap_{i=1}^{3} (X_i > a_i)\Big] = 0.05 > P[X_1 > a_1] \cdot P[X_2 > a_2, X_3 > a_3] = 0.0473.$$

ii) (SNOD does not imply LIND.) Let $(X_1, X_2, X_3, X_4)$ have the joint distribution given in Table 6 of Hu et al. (2005). Then the random variables $X_1, X_2, X_3, X_4$ are SNOD but not LIND, since

$$P[Y_1 \ge 1, Y_2 \ge 2] = \frac{9}{32} > P[Y_1 \ge 1] \cdot P[Y_2 \ge 2] = \frac{8}{32},$$

where $Y_1 = X_1$ and $Y_2 = X_2 + X_3 + X_4$.
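The probabilities quoted in part (i) can be recomputed directly from the eight-point distribution. The sketch below is an illustrative check, assuming the probabilities as listed in part (i); the variable names are not from the paper. Exact rational arithmetic is used so that the rounded values 0.07227 and 0.1984 can be compared with the unrounded products.

```python
from fractions import Fraction

# Joint distribution of (X1, X2, X3) from part (i), as exact fractions.
pmf = {
    (1, 1, 1): Fraction("0.05"),
    (1, 0, 0): Fraction("0.225"),
    (0, 1, 0): Fraction("0.225"),
    (0, 0, 1): Fraction("0.22"),
    (0, 0, 0): Fraction("0.065"),
    (1, 1, 0): Fraction("0.08"),
    (0, 1, 1): Fraction("0.06"),
    (1, 0, 1): Fraction("0.075"),
}


def prob(event):
    """Probability of an event given as a predicate on (x1, x2, x3)."""
    return sum(p for x, p in pmf.items() if event(x))


total = sum(pmf.values())

# For 0 <= a_i < 1, {X_i > a_i} is {X_i = 1} and {X_i <= a_i} is {X_i = 0}.
upper_joint = prob(lambda x: x == (1, 1, 1))
upper_product = (prob(lambda x: x[0] == 1) * prob(lambda x: x[1] == 1)
                 * prob(lambda x: x[2] == 1))
lower_joint = prob(lambda x: x == (0, 0, 0))
lower_product = (prob(lambda x: x[0] == 0) * prob(lambda x: x[1] == 0)
                 * prob(lambda x: x[2] == 0))

# Right-hand side of the first SNOD condition with A = {1}.
snod_split = prob(lambda x: x[0] == 1) * prob(lambda x: x[1] == 1 and x[2] == 1)

print("total mass:", float(total))
print("upper orthant:", float(upper_joint), "vs", float(upper_product))
print("lower orthant:", float(lower_joint), "vs", float(lower_product))
print("SNOD split:", float(upper_joint), "vs", float(snod_split))
```

The check covers only the thresholds $0 \le a_i < 1$ used in the proof; it does not by itself establish LIND or NOD for every threshold.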

The next lemma shows that strong negative orthant dependence does not imply NSD, which answers the question posed by Hu et al. (2005).

Lemma 3: SNOD does not imply NSD.

Proof: Let $(X_1, X_2, X_3)$ have the following distribution:

$$p(1,1,1) = p(0,0,2) = p(0,2,0) = p(2,0,0) = \frac{1}{40},$$

$$p(1,0,0) = p(0,0,1) = p(0,1,0) = \frac{2}{40},\quad p(1,1,0) = p(0,1,1) = p(1,0,1) = \frac{10}{40}.$$

It can be checked that $(X_1, X_2, X_3)$ is SNOD, since for all $0 \le a_i < 1$, $1 \le b_i < 2$, $i = 1, 2, 3$, and distinct $i, j, k$, we have

$$P\Big[\bigcap_{i=1}^{3} (X_i > a_i)\Big] = \frac{1}{40} \le P[X_i > a_i] \cdot P[X_j > a_j, X_k > a_k] = \frac{264}{1600},$$

$$P\Big[\bigcap_{i=1}^{3} (X_i \le b_i)\Big] = \frac{37}{40} \le P[X_i \le b_i] \cdot P[X_j \le b_j, X_k \le b_k] = \frac{1482}{1600},$$

$$P[X_i \le a_i, X_j \le b_j, X_k \le b_k] = \frac{14}{40} \le P[X_i \le a_i] \cdot P[X_j \le b_j, X_k \le b_k] = \frac{608}{1600},$$

$$P[X_i \le a_i, X_j \le a_j, X_k \le b_k] = \frac{2}{40} \le P[X_i \le a_i, X_j \le a_j] \cdot P[X_k \le b_k] = \frac{117}{1600},$$

$$P[X_i > a_i, X_j \le a_j, X_k \le a_k] = \frac{3}{40} \ge P[X_i > a_i] \cdot P[X_j \le a_j, X_k \le a_k] = \frac{72}{1600},$$

$$P[X_i > a_i, X_j \le b_j, X_k \le b_k] = \frac{24}{40} \ge P[X_i > a_i] \cdot P[X_j \le b_j, X_k \le b_k] = \frac{912}{1600},$$

$$P[X_i > a_i, X_j > a_j, X_k \le a_k] = \frac{10}{40} \ge P[X_i > a_i, X_j > a_j] \cdot P[X_k \le a_k] = \frac{176}{1600},$$

$$P[X_i > a_i, X_j > a_j, X_k \le b_k] = \frac{11}{40} \ge P[X_i > a_i, X_j > a_j] \cdot P[X_k \le b_k] = \frac{429}{1600}.$$

Similarly, it is easy to show that all conditions of Definition 2(f) hold. But $(X_1, X_2, X_3)$ is not NSD. Let $f(x_1, x_2, x_3) = \max\{x_1 + x_2 + x_3 - 1, 0\}$; this function is supermodular since it is a composition of an increasing convex real-valued function and an increasing supermodular function. For this function we get

$$E f(X_1, X_2, X_3) = \frac{56000}{64000} > E f(Y_1, Y_2, Y_3) = \frac{50494}{64000},$$

where $Y_1, Y_2, Y_3$ are independent random variables with $X_i \stackrel{st}{=} Y_i$ for all $i = 1, 2, 3$.
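The quantities appearing in this proof can be recomputed exactly from the ten-point distribution. The following Python sketch is an illustrative check under the assumption that the probabilities are read as 1/40, 2/40 and 10/40 as displayed; the helper names are hypothetical. It evaluates the first two SNOD inequalities and then both expectations of $f(x_1, x_2, x_3) = \max\{x_1 + x_2 + x_3 - 1, 0\}$, building the independent vector from the marginals of $(X_1, X_2, X_3)$, so that the results can be compared with the values quoted in the proof.

```python
from fractions import Fraction
from itertools import product

F = Fraction
# Joint distribution of (X1, X2, X3) as read from the proof of Lemma 3.
pmf = {
    (1, 1, 1): F(1, 40), (0, 0, 2): F(1, 40), (0, 2, 0): F(1, 40), (2, 0, 0): F(1, 40),
    (1, 0, 0): F(2, 40), (0, 0, 1): F(2, 40), (0, 1, 0): F(2, 40),
    (1, 1, 0): F(10, 40), (0, 1, 1): F(10, 40), (1, 0, 1): F(10, 40),
}


def prob(event):
    """Probability of an event given as a predicate on (x1, x2, x3)."""
    return sum(p for x, p in pmf.items() if event(x))


# First SNOD condition for 0 <= a_i < 1: {X_i > a_i} is {X_i >= 1}.
upper_joint = prob(lambda x: min(x) >= 1)
upper_split = prob(lambda x: x[0] >= 1) * prob(lambda x: x[1] >= 1 and x[2] >= 1)

# Second SNOD condition for 1 <= b_i < 2: {X_i <= b_i} is {X_i <= 1}.
lower_joint = prob(lambda x: max(x) <= 1)
lower_split = prob(lambda x: x[0] <= 1) * prob(lambda x: x[1] <= 1 and x[2] <= 1)


def f(x):
    """The test function max{x1 + x2 + x3 - 1, 0} used in the proof."""
    return max(sum(x) - 1, 0)


# Marginal distributions of X1, X2, X3 on the values 0, 1, 2.
marginals = [
    {v: prob(lambda x, i=i, v=v: x[i] == v) for v in (0, 1, 2)}
    for i in range(3)
]

expect_dependent = sum(p * f(x) for x, p in pmf.items())
# Independent Y_i with the same marginals as X_i.
expect_independent = sum(
    marginals[0][y[0]] * marginals[1][y[1]] * marginals[2][y[2]] * f(y)
    for y in product((0, 1, 2), repeat=3)
)

print("upper orthant:", upper_joint, "vs", upper_split)
print("lower orthant:", lower_joint, "vs", lower_split)
print("E f(X) =", expect_dependent)
print("E f(Y) =", expect_independent)
```

The script only reports the exact values; it does not verify the remaining conditions of Definition 2(f).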

The following example shows that the converse of the implication NA $\Rightarrow$ LIND fails to hold.

Example 2: Let $(X_1, X_2, X_3)$ have the following distribution:

$$p(0,0,0) = 0,\quad p(0,0,1) = p(1,0,0) = \frac{2}{15},\quad p(1,1,1) = \frac{3}{15},$$

$$p(0,1,0) = p(1,1,0) = p(0,1,1) = p(1,0,1) = \frac{2}{15}.$$

It is easy to show that $X_1, X_2, X_3$ are LIND. Now we define the two monotone functions $f$ and $g$ as follows:

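$$f(x_1, x_2) = \begin{cases} \left(x_1 + \frac{1}{15}\right)\left(x_2 + \frac{1}{15}\right), & x_1 > 0.5,\ x_2 > 0.5, \\ \frac{1}{15^2}, & x_1 \le 0.5,\ x_2 \le 0.5, \end{cases}$$

and

$$g(x_3) = \begin{cases} x_3 + \frac{1}{15}, & x_3 > 0.5, \\ \frac{1}{15}, & x_3 \le 0.5. \end{cases}$$

We have

$$\mathrm{Cov}\big(f(X_1, X_2),\, g(X_3)\big) = \frac{7920}{15^4} - \frac{5940}{15^4} = \frac{1980}{15^4} > 0.$$

This shows that $X_1, X_2, X_3$ are not NA.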

Example 3 (NOD implies neither NA nor LIND): Let $(X_1, X_2, X_3)$ have the following joint distribution:

$$p(0,0,0) = p(1,0,1) = 0,\quad p(0,1,0) = p(0,0,1) = \frac{2}{10},$$

$$p(0,1,1) = p(1,1,0) = p(1,1,1) = \frac{1}{10},\quad p(1,0,0) = \frac{3}{10}.$$

i) It is easy to see that $X_1, X_2, X_3$ are NOD.

ii) If $f(x_1, x_2) = I(x_1 > \frac{1}{2},\ x_2 > \frac{1}{2})$ and $g(x_3) = I(x_3 > \frac{1}{2})$, then

$$E\{f(X_1, X_2) \cdot g(X_3)\} = \frac{1}{10} > E f(X_1, X_2) \cdot E g(X_3) = \frac{8}{100}.$$

Therefore the random variables $X_1, X_2, X_3$ are not NA.

iii) The random variables $X_1, X_2, X_3$ are not LIND, since if $Y_1 = X_1 + X_2$ and $Y_2 = X_3$, then

$$P[Y_1 \le 1, Y_2 \le 0] = \frac{1}{2} > P[Y_1 \le 1] \cdot P[Y_2 \le 0] = \frac{12}{25}.$$

iv) NOD does not imply SNOD, because for $0 \le a_i < 1$, $i = 1, 2, 3$, we have

$$P\Big[\bigcap_{i=1}^{3} (X_i > a_i)\Big] = \frac{1}{10} > P[X_3 > a_3] \cdot P[X_1 > a_1, X_2 > a_2] = \frac{4}{50}.$$

Remark 3: i) Lehmann (1966) proved that NUOD of $X_1$ and $X_2$ is equivalent to $\mathrm{Cov}(f_1(X_1), f_2(X_2)) \le 0$ for all nonnegative and nondecreasing Borel functions $f_1$ and $f_2$. Therefore, for $n = 2$, NUOD is equivalent to weak negative association.

ii) The non-negativity of the functions $f_i$, $i = 1, 2, \ldots, n$, in Definition 2(b) is a necessary condition. To see this, consider Example 3 with

$$f_1(x) = f_2(x) = I\big(x > \tfrac{1}{2}\big) \quad \text{and} \quad f_3(x) = x - \tfrac{1}{4}.$$

Then

$$E\{f_1(X_1) \cdot f_2(X_2) \cdot f_3(X_3)\} = \frac{1}{20} > E f_1(X_1) \cdot E f_2(X_2) \cdot E f_3(X_3) = \frac{3}{80}.$$
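The figures in Example 3 and Remark 3 can be recomputed from the joint distribution. The sketch below is an illustrative check, assuming the probabilities as listed in Example 3; the helper names are hypothetical and exact fractions are used throughout.

```python
from fractions import Fraction

F = Fraction
# Joint distribution of (X1, X2, X3) from Example 3.
pmf = {
    (0, 0, 0): F(0), (1, 0, 1): F(0),
    (0, 1, 0): F(2, 10), (0, 0, 1): F(2, 10),
    (0, 1, 1): F(1, 10), (1, 1, 0): F(1, 10), (1, 1, 1): F(1, 10),
    (1, 0, 0): F(3, 10),
}


def expect(h):
    """Expectation of h(X1, X2, X3) under the joint distribution."""
    return sum(p * h(x) for x, p in pmf.items())


def ind(condition):
    """Indicator: 1 if the condition holds, else 0."""
    return 1 if condition else 0


# ii) f = I(x1 > 1/2, x2 > 1/2) and g = I(x3 > 1/2).
na_joint = expect(lambda x: ind(x[0] > F(1, 2) and x[1] > F(1, 2)) * ind(x[2] > F(1, 2)))
na_product = (expect(lambda x: ind(x[0] > F(1, 2) and x[1] > F(1, 2)))
              * expect(lambda x: ind(x[2] > F(1, 2))))

# iii) Y1 = X1 + X2 and Y2 = X3.
lind_joint = expect(lambda x: ind(x[0] + x[1] <= 1 and x[2] <= 0))
lind_product = expect(lambda x: ind(x[0] + x[1] <= 1)) * expect(lambda x: ind(x[2] <= 0))

# iv) first SNOD condition with A = {3}, thresholds 0 <= a_i < 1.
snod_joint = expect(lambda x: ind(min(x) >= 1))
snod_split = expect(lambda x: ind(x[2] >= 1)) * expect(lambda x: ind(x[0] >= 1 and x[1] >= 1))

# Remark 3 ii) f1 = f2 = I(x > 1/2), f3(x) = x - 1/4 (takes a negative value at x = 0).
wna_joint = expect(lambda x: ind(x[0] > F(1, 2)) * ind(x[1] > F(1, 2)) * (x[2] - F(1, 4)))
wna_product = (expect(lambda x: ind(x[0] > F(1, 2)))
               * expect(lambda x: ind(x[1] > F(1, 2)))
               * expect(lambda x: x[2] - F(1, 4)))

for label, lhs, rhs in [("NA", na_joint, na_product), ("LIND", lind_joint, lind_product),
                        ("SNOD", snod_joint, snod_split), ("WNA", wna_joint, wna_product)]:
    print(label, lhs, "vs", rhs)
```

Each printed pair corresponds to one displayed inequality, with the joint quantity first and the product second.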

3. Characterization of independence

It is well known that for a normally distributed $n$-dimensional random vector $X$, stochastic independence is equivalent to $\mathrm{Cov}(X) = I$, the identity matrix. When $n = 2$, this result is generalized to NUOD random variables in Lehmann (1966). Moreover, Joag-Dev and Proschan (1983) proved that if $(X_1, X_2, \ldots, X_n)$ has an $N(\mu, \Sigma)$ distribution, then $(X_1, X_2, \ldots, X_n)$ is NUOD if and only if $\sigma_{ij} \le 0$ for all $i \ne j$, $i, j = 1, 2, \ldots, n$, where $\Sigma = (\sigma_{ij})$. In the following, we present two theorems: Theorem 1 states that WNA is equivalent to NUOD, and Theorem 2 shows that NUOD together with the condition

$$E \prod_{j \in T} X_j = \prod_{j \in T} E X_j$$

is equivalent to stochastic independence of $X_1, X_2, \ldots, X_n$.

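Theorem 1: The random variables $X_1, X_2, \ldots, X_n$ are WNA if and only if they are NUOD.

Proof: If $X_1, X_2, \ldots, X_n$ are WNA, then taking $f_i(x) = I(x > x_i)$ in Definition 2(b) gives (1), so they are NUOD. Conversely, let $X_1, X_2, \ldots, X_n$ be NUOD and let $f_i$, $i = 1, 2, \ldots, n$, be nonnegative and nondecreasing real-valued functions. Then by Lemma 1, $f_1(X_1), \ldots, f_n(X_n)$ are NUOD. The rest of the proof is a simple generalization of Theorem 1 of Rüschendorf (1981) and the following identity:

$$E\Big(\prod_{i=1}^{n} f_i(X_i)\Big) - \prod_{i=1}^{n} E\big(f_i(X_i)\big) = \int_0^\infty \cdots \int_0^\infty \Big[ P\Big(\bigcap_{i=1}^{n} \{f_i(X_i) > u_i\}\Big) - \prod_{i=1}^{n} P\big(f_i(X_i) > u_i\big) \Big]\, du_1 \cdots du_n \le 0,$$

where $f_i(X_i) = \int_0^\infty I(u_i, f_i(X_i))\, du_i$, $1 \le i \le n$, with $I(u, x) = 1$ if $x > u$ and $I(u, x) = 0$ elsewhere. This completes the proof.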

Corollary: Let $X_1, X_2, \ldots, X_n$ be non-negative NUOD random variables. Then

$$E \prod_{i=1}^{n} f_i(X_i) = \prod_{i=1}^{n} E f_i(X_i)$$

implies independence of $X_1, X_2, \ldots, X_n$.

Now it is easy to prove the following theorem.

Theorem 2: Let $X_1, X_2, \ldots, X_n$ be NUOD random variables and assume that $E \prod_{j \in T} X_j$ exists for all $T \subseteq \{1, 2, \ldots, n\}$. If

$$E \prod_{j \in T} X_j = \prod_{j \in T} E X_j \quad \text{for all } T \subseteq \{1, 2, \ldots, n\},$$

then $X_1, \ldots, X_n$ are stochastically independent.

Conclusions:

The counterexamples presented in this paper show that the following implications among these concepts of dependence are strict for all $n \ge 3$:

$$\mathrm{NUOD} \Leftarrow \mathrm{NOD} \Leftarrow \mathrm{SNOD} \Leftarrow \mathrm{NA} \Rightarrow \mathrm{NSD} \Rightarrow \mathrm{NUOD} \Leftrightarrow \mathrm{WNA}, \qquad \mathrm{NA} \Rightarrow \mathrm{LIND}.$$

Moreover, we characterized stochastic independence in the class of NUOD random variables under the condition $E \prod_{j \in T} X_j = \prod_{j \in T} E X_j$ for all $T \subseteq \{1, 2, \ldots, n\}$. The characterization of stochastic independence in the smaller class LIND is still an open problem.

References

[1]. Bozorgnia, A., Patterson, R.F. and Taylor, R.L. (1996). Limit theorems for dependent random variables. World Congress of Nonlinear Analysts '92, Vol. I-IV (Tampa, FL, 1992), 1639-1650, de Gruyter, Berlin.

[2]. Christofides, T.C. and Vaggelatou, E. (2004). A connection between supermodular ordering and positive/negative association. Journal of Multivariate Analysis, 88, 138-151.

[3]. Joag-Dev, K. and Proschan, F. (1982). Negative association of random variables with applications. Ann. Statist., 11, 286-295.

[4]. Joag-Dev, K. (1983). Independence via uncorrelatedness under certain dependence structures. Ann. Probab., 11(4), 1037-1041.

[5]. Joe, H. (1997). Multivariate models and dependence concepts. Chapman and Hall, London.

[6]. Hu, T., Müller, A. and Scarsini, M. (2004). Some counterexamples in positive dependence. Journal of Statistical Planning and Inference, 124, 153-158.

[7]. Hu, T. and Yang, J. (2004). Further developments on sufficient conditions for negative dependence of random variables. Statistics & Probability Letters, 369-381.

[8]. Hu, T. (2000). Negatively superadditive dependence of random variables with applications. Chinese Journal of Applied Probability and Statistics, 16, 133-144.

[9]. Hu, T., Ruan, L. and Xie, C. (2005). Dependence structures of multivariate Bernoulli random vectors. Journal of Multivariate Analysis, 94(1), 172-195.

[10]. Müller, A. and Scarsini, M. (2000). Some remarks on the supermodular order. Journal of Multivariate Analysis, 73, 107-119.

[11]. Rüschendorf, L. (1981). Weak association of random variables. Journal of Multivariate Analysis, 11, 448-451.