Orthogonal Matrices


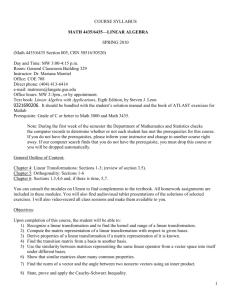

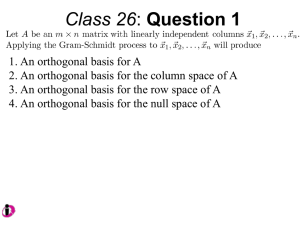

8.2 Orthogonal Matrices

The fact that the eigenvectors of a symmetric matrix $A$ are orthogonal implies that the coordinate system defined by the eigenvectors is an orthogonal coordinate system. In particular, it makes the diagonalization $A = TDT^{-1}$ of the matrix $A$ particularly nice. Recall that $T$ is the matrix whose columns are the eigenvectors $v_1, \ldots, v_n$ of $A$ and $D$ is the diagonal matrix with the eigenvalues $\lambda_1, \ldots, \lambda_n$ of $A$ on the main diagonal and zeros elsewhere.

It is convenient to normalize the eigenvectors before using them as the columns of $T$. Recall that normalizing a vector $v$ means dividing it by its length $|v|$, so that the resulting vector $v/|v|$ has unit length. A normalized eigenvector is still an eigenvector, since multiplying an eigenvector by a nonzero constant gives another eigenvector. If we use the normalized eigenvectors as the columns of $T$, then the columns of $T$ are orthogonal and have length one. Such a matrix is called an orthogonal matrix.

Definition 1. An orthogonal matrix is a square matrix $S$ whose columns are orthogonal and have length one.

Example 1. Find the diagonalization of $A = \begin{pmatrix} 17 & -6 \\ -6 & 8 \end{pmatrix}$ using the normalized eigenvectors as the columns of $T$.

From Example 3 in the previous section, the eigenvalues of $A$ are $\lambda_1 = 5$ and $\lambda_2 = 20$ and the eigenvectors are $v_1 = \begin{pmatrix} 1 \\ 2 \end{pmatrix}$ and $v_2 = \begin{pmatrix} -2 \\ 1 \end{pmatrix}$. One has $|v_1| = |v_2| = \sqrt{5}$. So the normalized eigenvectors are $u_1 = \begin{pmatrix} 1/\sqrt{5} \\ 2/\sqrt{5} \end{pmatrix}$ and $u_2 = \begin{pmatrix} -2/\sqrt{5} \\ 1/\sqrt{5} \end{pmatrix}$. So $T = \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & -2 \\ 2 & 1 \end{pmatrix}$ and the diagonalization of $A$ is

$$A = TDT^{-1} = \left[ \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & -2 \\ 2 & 1 \end{pmatrix} \right] \begin{pmatrix} 5 & 0 \\ 0 & 20 \end{pmatrix} \left[ \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & -2 \\ 2 & 1 \end{pmatrix} \right]^{-1}.$$

Note that $T = \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & -2 \\ 2 & 1 \end{pmatrix}$ is an orthogonal matrix.

Example 2. Let $R = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}$ be the matrix for a rotation by an angle $\theta$. Then $R$ is orthogonal. Let $F = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$ be the matrix for a reflection across the $x$ axis. Then $F$ is orthogonal. Also

$$RF = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix} = \begin{pmatrix} \cos\theta & \sin\theta \\ \sin\theta & -\cos\theta \end{pmatrix}$$

is orthogonal.

Proposition 1. If $S = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$ is orthogonal then $S = R$ or $S = RF$, where $\theta$ is the angle $\begin{pmatrix} a \\ c \end{pmatrix}$ makes with the $x$ axis.

Proof.
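$S$ is orthogonal $\Rightarrow$ $\begin{pmatrix} a \\ c \end{pmatrix}$ is a unit vector $\Rightarrow$ $a^2 + c^2 = 1$ $\Rightarrow$ $r = 1$ and $\theta$ are the polar coordinates of $\begin{pmatrix} a \\ c \end{pmatrix}$ $\Rightarrow$ $a = \cos\theta$ and $c = \sin\theta$. $S$ is orthogonal $\Rightarrow$ $\begin{pmatrix} a \\ c \end{pmatrix}$ and $\begin{pmatrix} b \\ d \end{pmatrix}$ are orthogonal $\Rightarrow$ $\begin{pmatrix} b \\ d \end{pmatrix} = t \begin{pmatrix} -c \\ a \end{pmatrix}$ for some number $t$. $S$ is orthogonal $\Rightarrow$ $\begin{pmatrix} b \\ d \end{pmatrix}$ is a unit vector $\Rightarrow$ $t = 1$ or $t = -1$. If $t = 1$ then $S = R$. If $t = -1$ then $S = RF$. //

An orthogonal matrix is nice because its inverse is easy to compute: the inverse turns out to be equal to the transpose.

Theorem 2. $S$ is orthogonal if and only if $S^{-1} = S^T$.

Proof. Since $S$ is square, $S^{-1} = S^T$ is equivalent to $S^TS = I$. So it suffices to show $S$ is orthogonal $\Leftrightarrow$ $S^TS = I$, i.e. $(S^TS)_{ij} = I_{ij}$ for all $i$ and $j$. One has $(S^TS)_{ij}$ equal to the product of row $i$ of $S^T$ and column $j$ of $S$. However, row $i$ of $S^T$ is the transpose of column $i$ of $S$. So $(S^TS)_{ij} = (S_{\bullet,i})^T (S_{\bullet,j}) = S_{\bullet,i} \cdot S_{\bullet,j}$. Note that $S$ is orthogonal $\Leftrightarrow$ $S_{\bullet,i} \cdot S_{\bullet,j} = 0 = I_{ij}$ if $i \ne j$ and $S_{\bullet,i} \cdot S_{\bullet,j} = 1 = I_{ij}$ if $i = j$. So $S$ is orthogonal $\Leftrightarrow$ $(S^TS)_{ij} = I_{ij}$ for all $i$ and $j$. //

Example 3. Find the inverse of $T = \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & -2 \\ 2 & 1 \end{pmatrix}$.

$T$ is orthogonal so $T^{-1} = T^T = \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & 2 \\ -2 & 1 \end{pmatrix}$. In particular the diagonalization of $A$ in Example 1 is

$$\begin{pmatrix} 17 & -6 \\ -6 & 8 \end{pmatrix} = \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & -2 \\ 2 & 1 \end{pmatrix} \begin{pmatrix} 5 & 0 \\ 0 & 20 \end{pmatrix} \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & 2 \\ -2 & 1 \end{pmatrix}.$$

An application of this would be to compute $A^n$. One has

$$A^n = TD^nT^{-1} = \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & -2 \\ 2 & 1 \end{pmatrix} \begin{pmatrix} 5^n & 0 \\ 0 & 20^n \end{pmatrix} \frac{1}{\sqrt{5}} \begin{pmatrix} 1 & 2 \\ -2 & 1 \end{pmatrix} = \frac{1}{5} \begin{pmatrix} 5^n & -2(20^n) \\ 2(5^n) & 20^n \end{pmatrix} \begin{pmatrix} 1 & 2 \\ -2 & 1 \end{pmatrix} = \frac{1}{5} \begin{pmatrix} 5^n + 4(20^n) & 2(5^n) - 2(20^n) \\ 2(5^n) - 2(20^n) & 4(5^n) + 20^n \end{pmatrix}.$$

Problem 1. Let $A = \begin{pmatrix} 6 & -2 \\ -2 & 3 \end{pmatrix}$ be the matrix in Problem 1 in section 6.1. (a) Normalize the eigenvectors you found in that problem. (b) What is the matrix $T$ whose columns are the normalized eigenvectors? (c) Find the inverse of $T$. (d) Find $A^n$.

Problem 2. Let $A = \begin{pmatrix} 0.98 & 0.12 \\ 0.12 & 1.08 \end{pmatrix}$ be the matrix in Problem 2 in section 6.1. (a) Normalize the eigenvectors you found in that problem. (b) What is the matrix $T$ whose columns are the normalized eigenvectors? (c) Find the inverse of $T$. (d) Find $A^n$. (e) Find the solution, with the initial conditions $s_0 = 1$ and $g_0 = 2$, to the difference equations

$$\begin{aligned} s_{n+1} &= 0.98 s_n + 0.12 g_n \\ g_{n+1} &= 0.12 s_n + 1.08 g_n. \end{aligned}$$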
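The claims in Examples 1 and 3 can be checked numerically. The following is a minimal sketch in plain Python, not part of the original notes: the helper names (`matmul`, `transpose`, `close`, `power_by_diagonalization`, `power_closed_form`, `power_by_repeated_multiplication`) are illustrative choices. It tests whether $T^TT = I$, whether $TDT^T$ reproduces $A$, and whether the closed form obtained by multiplying out $TD^nT^T$ agrees with repeated multiplication for small $n$. It prints one boolean per check.

```python
import math

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transpose(X):
    """Swap rows and columns."""
    return [list(col) for col in zip(*X)]

def close(X, Y, tol=1e-9):
    """Entrywise comparison up to floating-point rounding."""
    return all(math.isclose(x, y, rel_tol=tol, abs_tol=tol)
               for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

s = 1 / math.sqrt(5)
T = [[s, -2 * s], [2 * s, s]]   # columns are the normalized eigenvectors u1, u2
A = [[17, -6], [-6, 8]]         # the matrix from Example 1
I2 = [[1, 0], [0, 1]]

def power_by_diagonalization(n):
    """A^n = T D^n T^T, using T^{-1} = T^T because T is orthogonal."""
    Dn = [[5 ** n, 0], [0, 20 ** n]]
    return matmul(matmul(T, Dn), transpose(T))

def power_closed_form(n):
    """Entries obtained by multiplying out T D^n T^T by hand."""
    a, b = 5 ** n, 20 ** n
    return [[(a + 4 * b) / 5, (2 * a - 2 * b) / 5],
            [(2 * a - 2 * b) / 5, (4 * a + b) / 5]]

def power_by_repeated_multiplication(n):
    """Reference value: multiply A by itself n times (exact integers)."""
    P = I2
    for _ in range(n):
        P = matmul(P, A)
    return P

orthogonal = close(matmul(transpose(T), T), I2)       # T^T T = I ?
diagonalizes = close(power_by_diagonalization(1), A)  # T D T^T = A ?
powers_agree = all(
    close(power_closed_form(n), power_by_repeated_multiplication(n))
    and close(power_by_diagonalization(n), power_by_repeated_multiplication(n))
    for n in range(6)
)
print(orthogonal, diagonalizes, powers_agree)
```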
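Answers to Problem 2: (a) $u_1 = \frac{1}{\sqrt{13}} \begin{pmatrix} 3 \\ -2 \end{pmatrix}$ and $u_2 = \frac{1}{\sqrt{13}} \begin{pmatrix} 2 \\ 3 \end{pmatrix}$. (b) $T = \frac{1}{\sqrt{13}} \begin{pmatrix} 3 & 2 \\ -2 & 3 \end{pmatrix}$. (c) $T$ is orthogonal so $T^{-1} = T^T = \frac{1}{\sqrt{13}} \begin{pmatrix} 3 & -2 \\ 2 & 3 \end{pmatrix}$. (d) $A^n = \frac{1}{13} \begin{pmatrix} 9(0.9)^n + 4(1.16)^n & -6(0.9)^n + 6(1.16)^n \\ -6(0.9)^n + 6(1.16)^n & 4(0.9)^n + 9(1.16)^n \end{pmatrix}$. (e) $\begin{pmatrix} s_n \\ g_n \end{pmatrix} = A^n \begin{pmatrix} 1 \\ 2 \end{pmatrix} = \frac{1}{13} \begin{pmatrix} -3(0.9)^n + 16(1.16)^n \\ 2(0.9)^n + 24(1.16)^n \end{pmatrix}$.

One consequence of Theorem 2 is that the product of two orthogonal matrices is orthogonal and the inverse of an orthogonal matrix is orthogonal.

Proposition 3. If $S$ and $T$ are orthogonal then so are $ST$ and $S^{-1}$.

Proof. $S$ and $T$ are orthogonal $\Rightarrow$ $S^{-1} = S^T$ and $T^{-1} = T^T$ $\Rightarrow$ $(ST)^{-1} = T^{-1}S^{-1} = T^TS^T = (ST)^T$ $\Rightarrow$ $ST$ is orthogonal. $S$ is orthogonal $\Rightarrow$ $S^{-1} = S^T$ $\Rightarrow$ $(S^{-1})^{-1} = (S^T)^{-1} = (S^{-1})^T$ $\Rightarrow$ $S^{-1}$ is orthogonal. //

Proposition 1 has one characterization of orthogonal matrices. Another is the following, which says that the orthogonal matrices are the ones that preserve inner products and lengths.

Proposition 4. (a) $S$ is orthogonal if and only if $(Sx) \cdot (Sy) = x \cdot y$ for all $x$ and $y$. (b) $S$ is orthogonal if and only if $|Sx| = |x|$ for all $x$.

Proof. (a)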