Game Theory: Problems and Solutions

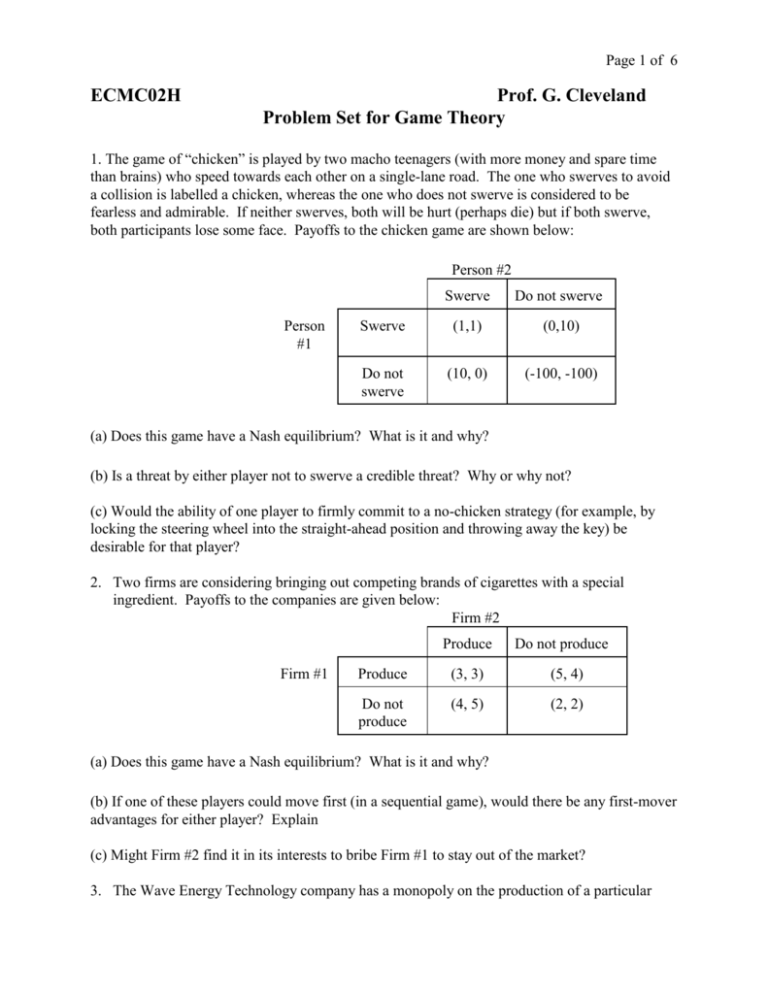

ECMC02H, Prof. G. Cleveland
Problem Set for Game Theory

1. The game of “chicken” is played by two macho teenagers (with more money and spare time than brains) who speed towards each other on a single-lane road. The one who swerves to avoid a collision is labelled a chicken, whereas the one who does not swerve is considered to be fearless and admirable. If neither swerves, both will be hurt (perhaps die), but if both swerve, both participants lose some face. Payoffs to the chicken game are shown below (Person #1's payoff is listed first in each cell):

| | Person #2: Swerve | Person #2: Do not swerve |
|---|---|---|
| Person #1: Swerve | (1, 1) | (0, 10) |
| Person #1: Do not swerve | (10, 0) | (-100, -100) |

(a) Does this game have a Nash equilibrium? What is it and why?

(b) Is a threat by either player not to swerve a credible threat? Why or why not?

(c) Would the ability of one player to firmly commit to a no-chicken strategy (for example, by locking the steering wheel into the straight-ahead position and throwing away the key) be desirable for that player?

2. Two firms are considering bringing out competing brands of cigarettes with a special ingredient. Payoffs to the companies are given below (Firm #1's payoff is listed first in each cell):

| | Firm #2: Produce | Firm #2: Do not produce |
|---|---|---|
| Firm #1: Produce | (3, 3) | (5, 4) |
| Firm #1: Do not produce | (4, 5) | (2, 2) |

(a) Does this game have a Nash equilibrium? What is it and why?

(b) If one of these players could move first (in a sequential game), would there be any first-mover advantages for either player? Explain.

(c) Might Firm #2 find it in its interests to bribe Firm #1 to stay out of the market?

3. The Wave Energy Technology company has a monopoly on the production of a particular type of highly-desirable waterbed. Demand for these beds is relatively inelastic: at a price of $1000 per bed, 25,000 will be sold, while at a price of $600, 30,000 will be sold. The only costs associated with waterbed production are the initial costs of building a plant.
This company has already invested in a plant capable of producing up to 25,000 beds and this is a sunk cost (and therefore irrelevant for current pricing decisions).

(a) Suppose a would-be entrant to this industry could always be assured of getting half the market, but would have to invest $10 million in a plant to accomplish this. Construct a sequential game showing the payoffs to this firm from the entry/no entry decision. What is the Nash equilibrium and why?

(b) Suppose the Wave Energy Technology firm could invest $5 million more to enlarge its existing plant so that it would produce 40,000 beds (another sunk cost). Would this strategy be a profitable way to deter entry by its rival? Explain.

4. Assume now that mixed strategies are possible, not just pure strategies. Take a simple “zero-sum” game. In zero-sum games, the payoffs to both players are assumed to sum to zero, so that one player's gain is inevitably another player's loss. These games correspond to most of the sports which we play and/or watch, since generally one side either wins or loses (or there is a tie). Thus, if we designate the outcome as +1 for a win, 0 for a tie, and -1 for a loss, you can see that two players must always have their results "sum to zero". These games do not allow for collusion, since there is no possible agreement that will make both parties better off.

(a) Begin with a simple game of "matching" in which two players simultaneously put out either one or two fingers. One of the players, Jon, has chosen "evens" in advance; the other player, Sue, has chosen "odds." If the number of fingers put out by the two players matches, then Jon wins; if they do not match, then Sue wins. Set out the payoff matrix for this game. Is there a Nash equilibrium in pure strategies?

(b) Now suppose that we allow each player to choose a mixed strategy. In a mixed strategy, the player chooses to play randomly, with a probability assigned to each "pure" strategy.
Surprisingly, this is often the best alternative. Suppose that Jon plays the mixed strategy of putting out one finger with probability PJ and two fingers with probability (1 - PJ). Show that if Sue can figure out this strategy and respond with the best possible strategy, then Jon's maximin will occur at PJ = 0.5. Then show that if the positions are reversed and Sue chooses PS (defined the same way for her as PJ is for Jon), her best choice is PS = 0.5. Explain the "common sense" of this result.

5. Suppose that in a football game, the offense can choose one of two strategies (run or pass), while the defense can choose one of two strategies (run defense or pass defense). The results of the play are as follows: if the offense runs, it gains 3 yards against a run defense and 9 yards against a pass defense; if the offense passes, it gains 15 yards against a run defense and 0 yards against a pass defense. Show that a mixed strategy is best for both sides. Explain the common sense of this result.

Solutions

1(a). There are actually two Nash equilibria; in each of these equilibrium positions, one player swerves and the other does not. Consider player #1’s incentives in the case that player #2 chooses to swerve. His incentive is to not swerve and get the payoff of 10. Consider player #1’s incentives in the case that player #2 chooses to not swerve. Now player #1’s incentive is to swerve in order to get the payoff of 0, rather than minus 100. We can use the same logic to analyze player #2’s incentives. In the case where player #1 chooses to swerve, player #2 has the incentive to not swerve, to get the payoff of 10. However, if player #1 chooses to not swerve, then player #2 has the incentive to swerve, to get the payoff of 0, rather than minus 100. Either of these two equilibria is stable.

(b) We would expect these players to use any possible threat to try to convince the other player that he (i.e., the one making the threat) is definitely not going to swerve.
This might involve a lot of bragging and looking very tough, and not showing the least doubt. However, the threat is not credible. Each player has enormous incentives to avoid ending up in the southeast cell of the payoff matrix (do not swerve and do not swerve). This will affect the behaviour of the “tough guy” no matter what he threatens before the race.

(c) Locking the steering wheel into position (as long as it kept the car going straight) would be very effective, if and only if the other player knew about this locking. If player #2 knows that player #1 has a locked steering wheel, then player #2 knows that player #1 cannot swerve. So, player #2 will have to decide to swerve (unless he is crazy…). Therefore, player #1 will end up with a payoff of 10 and player #2 will end up with zero.

2(a). Just like the previous question, there are two Nash equilibria; in both of them one firm will produce and the other firm will not produce. Firm #1 has the incentive not to produce if Firm #2 produces, but it has the incentive to produce if Firm #2 does not produce. In similar fashion, Firm #2 is better off not producing if Firm #1 chooses to produce the cigarettes, and Firm #2 is better off producing the cigarettes if Firm #1 decides not to produce.

(b) Consider a sequential game with Firm #1 moving first. The game tree is summarized below, one row per branch:

| Firm #1 moves first | Firm #2 responds | Payoffs (Firm #1, Firm #2) |
|---|---|---|
| Produce | Produce | (3, 3) |
| Produce | Do not produce | (5, 4) |
| Do not produce | Produce | (4, 5) |
| Do not produce | Do not produce | (2, 2) |

Firm #1 knows that if it decides to produce this brand of cigarettes, then Firm #2 will not produce. Firm #1 can also see that if it decides not to produce cigarettes, then Firm #2 will decide to produce cigarettes. In the former case, Firm #1’s payoff will be 5; in the latter case, Firm #1’s payoff will be 4. Therefore, Firm #1 will choose the former move: it will decide to produce cigarettes. The Nash equilibrium will be that Firm #1 produces cigarettes and Firm #2 does not.
In other words, there clearly would be a first-mover advantage for Firm #1: by moving first, it ensures that the Nash equilibrium most favourable to it in the simultaneous game is the one that prevails in the sequential game. If Firm #2 were the first mover, the same advantage would accrue to Firm #2.

2(c). If Firm #2 were able to bribe Firm #1 to stay out of the cigarette market, it could get a payoff of 5 rather than a payoff of 4. Any successful bribe of less than 1 would be worth it from Firm #2’s point of view. However, since Firm #1 loses 1 unit by not producing this brand of cigarettes (a payoff of 4 instead of 5), a bribe of less than 1 does not compensate it, so it is unlikely to accept.

3(a). The table below shows the sequential decision of Firm #1 (the potential entrant) and Firm #2 (the incumbent monopolist), one row per branch of the game tree. If Firm #1 enters, each firm sells to half the market, and the incumbent has the choice of pricing at $1000 (selling 12,500 beds and gaining $12.5 million of revenue) or at $600 (selling 15,000 beds and gaining $9 million). The incumbent will prefer the $1000 price. The entrant's payoff is its revenue less the $10 million cost of its plant. If the potential entrant does not enter, it earns nothing and the incumbent firm gains either $25 million or $15 million.

| Firm #1 (entrant) | Firm #2 (incumbent) price | Payoffs in $ millions (entrant, incumbent) |
|---|---|---|
| Enter | $1000 | (2.5, 12.5) |
| Enter | $600 | (-1, 9) |
| Do not enter | $1000 | (0, 25) |
| Do not enter | $600 | (0, 15) |

Since entry followed by the $1000 price gives the entrant $2.5 million rather than zero, the entrant will enter, and the incumbent will take the path of least resistance and share the market at the most profitable price of $1000.

(b) If the WET company were to expand its existing plant, this would not affect its incentives in the case of entry. Its incentives would still be to sell at the price of $1000, so any threat to expand its output and charge the lower price would not be credible. The $5 million expansion would therefore not deter entry.

4(a). The matching game is summarized in the zero-sum payoff matrix below (where the payoffs to Jon are shown first):

| | Sue: One Finger | Sue: Two Fingers |
|---|---|---|
| Jon: One Finger | +1, -1 | -1, +1 |
| Jon: Two Fingers | -1, +1 | +1, -1 |

There is no Nash equilibrium in pure strategies; this accords with our intuition. Each player will refuse to continue with a simple (pure) strategy if the other person is winning, so no simple strategy can be a stable result.
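The best-response reasoning used for the simultaneous games in questions 1, 2 and 4 can be checked mechanically. The following Python sketch is an illustration only and is not part of the original problem set; the function name `pure_nash_equilibria` and the list-of-lists layout are assumptions made for this example. It marks a cell as a pure-strategy Nash equilibrium when neither player can gain by changing strategy alone.

```python
from itertools import product

def pure_nash_equilibria(payoffs):
    """Return every (row, col) cell where neither player gains by deviating alone.

    payoffs[r][c] is a (row_player_payoff, column_player_payoff) pair.
    """
    n_rows, n_cols = len(payoffs), len(payoffs[0])
    equilibria = []
    for r, c in product(range(n_rows), range(n_cols)):
        row_payoff, col_payoff = payoffs[r][c]
        # Row player compares its payoff with every other row in the same column.
        row_is_best = all(payoffs[alt][c][0] <= row_payoff for alt in range(n_rows))
        # Column player compares its payoff with every other column in the same row.
        col_is_best = all(payoffs[r][alt][1] <= col_payoff for alt in range(n_cols))
        if row_is_best and col_is_best:
            equilibria.append((r, c))
    return equilibria

# Index 0 is the first strategy listed in each payoff table, index 1 the second.
chicken = [[(1, 1), (0, 10)], [(10, 0), (-100, -100)]]
cigarettes = [[(3, 3), (5, 4)], [(4, 5), (2, 2)]]
matching = [[(1, -1), (-1, 1)], [(-1, 1), (1, -1)]]

print(pure_nash_equilibria(chicken))     # [(0, 1), (1, 0)]
print(pure_nash_equilibria(cigarettes))  # [(0, 1), (1, 0)]
print(pure_nash_equilibria(matching))    # []
```

The chicken and cigarette games each return the two off-diagonal cells, matching the answers to 1(a) and 2(a), while the matching game returns an empty list, which is the sense in which it has no equilibrium in pure strategies.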
(b) Suppose instead that Jon plays the mixed strategy of putting out one finger with probability PJ and two fingers with probability (1 - PJ). Then Sue's payoff will be

R1 = (-1)(PJ) + (+1)(1 - PJ) = 1 - 2PJ

if she puts out one finger, and

R2 = (+1)(PJ) + (-1)(1 - PJ) = 2PJ - 1

if she puts out two fingers. If PJ < 0.5, then Sue obtains a positive result by always putting out one finger; if PJ > 0.5, then Sue obtains a positive result by always putting out two fingers. Only if Jon chooses PJ = 0.5 will Sue end up with a zero result (which also means a zero result for Jon, since this is a zero-sum game). Any other choice leaves Jon with a negative expected payoff, so PJ = 0.5 is his maximin.

The argument for Sue is identical, but it is worth going through it. Suppose that Sue plays the mixed strategy of putting out one finger with probability PS and two fingers with probability (1 - PS). Then Jon's payoff will be

R1 = (+1)(PS) + (-1)(1 - PS) = 2PS - 1

if he puts out one finger, and

R2 = (-1)(PS) + (+1)(1 - PS) = 1 - 2PS

if he puts out two fingers. If PS < 0.5, then Jon obtains a positive result by always putting out two fingers; if PS > 0.5, then Jon obtains a positive result by always putting out one finger. Only if Sue chooses PS = 0.5 will Jon end up with a zero result (which also means a zero result for Sue).

The common sense of this result is straightforward. In the game of matching, if you can pick up any pattern in the other player's choices, then you can exploit that pattern. If you are playing "evens" and, at any point, you figure out that the other player is "likely" to put out one finger (meaning that the probability of putting out one finger is greater than 0.5), then of course you put out one finger so as to win most of the time. The only way to avoid being exploited like this is for each player to employ a completely random strategy.

5. Consider first the decisions by the defense. If the defenders always defend against the run, you (the offense) always pass; if the defenders always defend against the pass, you always run.
Of course, no football team would behave so stupidly on defense (that is, no one would reveal exactly how they are going to play). Suppose now that the defense chooses the run defense with probability PRD and thus chooses the pass defense with probability 1 - PRD (of course, the two probabilities must add to one). Then, if the offense runs, the expected gain is

GR = (+3)(PRD) + (+9)(1 - PRD) = 9 - 6PRD

If the offense passes, the expected gain is

GP = (+15)(PRD) + (+0)(1 - PRD) = 15PRD

The two results are equal (that is, GR = GP) if

9 - 6PRD = 15PRD, or PRD = 3/7

When PRD = 3/7, GR = GP = 45/7, or about 6.43 yards.

The defense will always choose PRD = 3/7 because if they do not, the offense can do better than 6.43 (assuming that the offense is only interested in the expected result; football fans know that there are short-yardage situations and end-of-game situations that dictate different strategies!). If the defense chooses PRD > 3/7, then the offense will always pass because GP > GR, and this will hurt the defense because GP > 6.43. On the other hand, if the defense chooses PRD < 3/7, then the offense will always run because GR > GP, and this will hurt the defense because GR > 6.43. Thus the best that the defense can do is to choose PRD = 3/7.

Now consider the decisions by the offense. If the offense always runs, you (the defense) always defend against the run; if the offense always passes, you always defend against the pass. Again, no football team would behave so stupidly on offense.
Suppose now that the offense chooses the run with probability PR and thus chooses the pass with probability 1 - PR. Then, if the defense chooses the run defense, the expected gain is

GRD = (+3)(PR) + (+15)(1 - PR) = 15 - 12PR

If the defense chooses the pass defense, the expected gain is

GPD = (+9)(PR) + (+0)(1 - PR) = 9PR

The two results are equal (that is, GRD = GPD) if

15 - 12PR = 9PR, or PR = 5/7

When PR = 5/7, GRD = GPD = 45/7, or about 6.43 yards.

The offense will always choose PR = 5/7 because if they do not, the defense can hold the expected gain below 6.43. If the offense chooses PR > 5/7, then the defense will always defend against the run because GRD < GPD, and this will hurt the offense because GRD < 6.43. On the other hand, if the offense chooses PR < 5/7, then the defense will always defend against the pass because GRD > GPD, and this will hurt the offense because GPD < 6.43. Thus the best that the offense can do is to choose PR = 5/7.

The common sense of the mixed strategy is that it will generally hurt you in any sport to be too predictable!
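The mixed-strategy answers to questions 4 and 5 both come from the same step: each side picks the probability that leaves the opponent indifferent between its two pure strategies. The Python sketch below is an illustration only and is not part of the original problem set; the function name `solve_2x2_zero_sum` and the argument names `a`, `b`, `c`, `d` are assumptions made for this example, and it handles only 2x2 zero-sum games in which both sides genuinely mix.

```python
from fractions import Fraction

def solve_2x2_zero_sum(a, b, c, d):
    """Mixed-strategy solution of a 2x2 zero-sum game by indifference.

    Payoffs to the row player (the column player receives the negative):
                 col 1   col 2
        row 1      a       b
        row 2      c       d
    Returns (p, q, value): p = P(row 1), q = P(col 1), value = expected payoff.
    """
    a, b, c, d = (Fraction(x) for x in (a, b, c, d))
    denom = a - b - c + d
    if denom == 0:
        raise ValueError("indifference conditions have no unique solution")
    # p makes the column player indifferent: p*a + (1-p)*c == p*b + (1-p)*d
    p = (d - c) / denom
    # q makes the row player indifferent: q*a + (1-q)*b == q*c + (1-q)*d
    q = (d - b) / denom
    if not (0 <= p <= 1 and 0 <= q <= 1):
        raise ValueError("probability outside [0, 1]; check for a pure-strategy solution")
    value = p * a + (1 - p) * c
    return p, q, value

# Football (question 5): rows = offense (run, pass), columns = defense (run D, pass D).
pr, prd, yards = solve_2x2_zero_sum(3, 9, 15, 0)
print(pr, prd, yards)  # 5/7 3/7 45/7

# Matching (question 4): rows = Jon (one, two fingers), columns = Sue (one, two fingers).
pj, ps, result = solve_2x2_zero_sum(1, -1, -1, 1)
print(pj, ps, result)  # 1/2 1/2 0
```

Exact fractions are used so that the output can be compared directly with PR = 5/7, PRD = 3/7 and the expected gain of 45/7 (about 6.43 yards) derived above.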