Finishing up Linear Algebra 101


Linear Algebra 101

Tuesday, November 2

I. Defining Matrices and Vectors

II. Multiplying Two Matrices by Computing Cross-Products

III. Manipulating and Inverting Matrices

I. Defining Matrices and Vectors

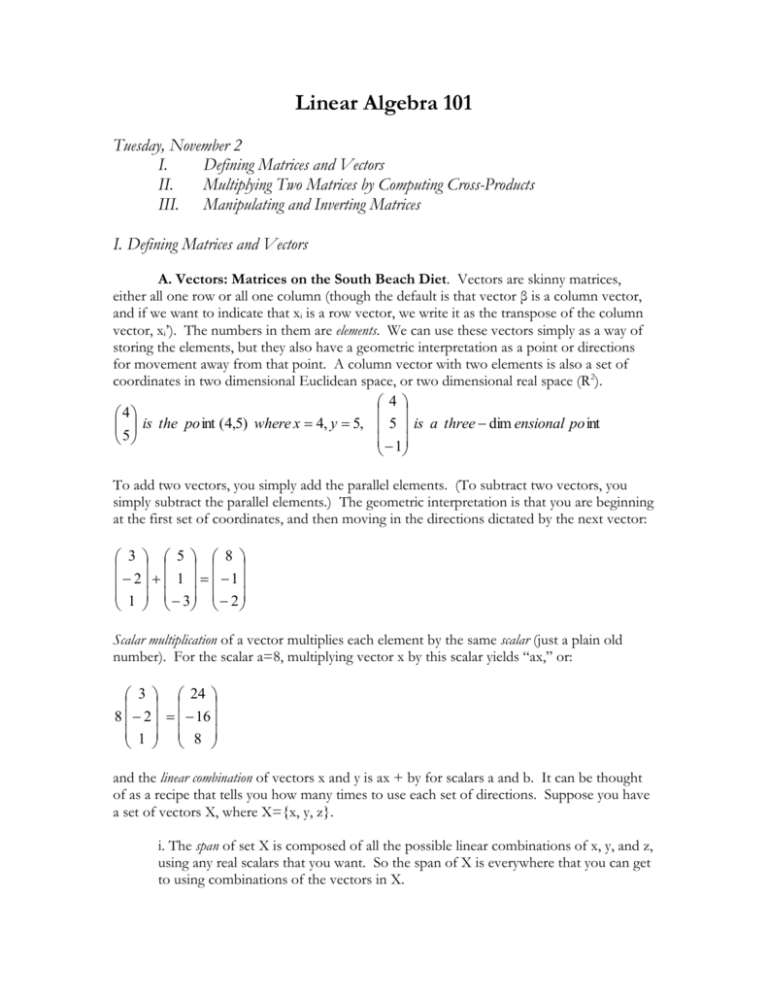

A. Vectors: Matrices on the South Beach Diet. Vectors are skinny matrices, either all one row or all one column (though the default is that vector β is a column vector, and if we want to indicate that $x_i$ is a row vector, we write it as the transpose of the column vector, $x_i'$). The numbers in them are elements. We can use these vectors simply as a way of storing the elements, but they also have a geometric interpretation as a point, or as directions for movement away from a point. A column vector with two elements is also a set of coordinates in two-dimensional Euclidean space, or two-dimensional real space ($\mathbb{R}^2$).

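$$\begin{pmatrix} 4 \\ 5 \end{pmatrix} \text{ is the point } (4,5) \text{ where } x = 4,\ y = 5; \qquad \begin{pmatrix} 4 \\ 5 \\ 1 \end{pmatrix} \text{ is a three-dimensional point.}$$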

To add two vectors, you simply add the parallel elements. (To subtract two vectors, you

simply subtract the parallel elements.) The geometric interpretation is that you are beginning

at the first set of coordinates, and then moving in the directions dictated by the next vector:

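$$\begin{pmatrix} 3 \\ 2 \\ 1 \end{pmatrix} + \begin{pmatrix} 5 \\ -1 \\ -3 \end{pmatrix} = \begin{pmatrix} 8 \\ 1 \\ -2 \end{pmatrix}$$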
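
As a minimal sketch of element-by-element addition and subtraction, the following uses plain Python lists to stand in for vectors. The function names and the sample vectors `start` and `step` are illustrative assumptions, not part of the handout.

```python
def add_vectors(u, v):
    """Add two vectors by adding their parallel elements."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of elements")
    return [a + b for a, b in zip(u, v)]


def subtract_vectors(u, v):
    """Subtract v from u by subtracting the parallel elements."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of elements")
    return [a - b for a, b in zip(u, v)]


# Hypothetical example: begin at the point (1, 2), then follow the directions (3, -1).
start = [1, 2]
step = [3, -1]
print(add_vectors(start, step))       # [4, 1]
print(subtract_vectors(start, step))  # [-2, 3]
```

Geometrically, the first result is where you end up after starting at `start` and moving as `step` dictates.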

Scalar multiplication of a vector multiplies each element by the same scalar (just a plain old

number). For the scalar a=8, multiplying vector x by this scalar yields “ax,” or:

$$8 \begin{pmatrix} 3 \\ 2 \\ 1 \end{pmatrix} = \begin{pmatrix} 24 \\ 16 \\ 8 \end{pmatrix}$$

A linear combination of vectors x and y is ax + by for scalars a and b. It can be thought

of as a recipe that tells you how many times to use each set of directions. Suppose you have

a set of vectors X, where X={x, y, z}.

i. The span of set X is composed of all the possible linear combinations of x, y, and z,

using any real scalars that you want. So the span of X is everywhere that you can get

to using combinations of the vectors in X.

ii. A basis for X is a set of vectors whose span includes X and in which no vector is redundant (none can be built from the others). There are many possible bases for X, and these bases can be thought of as starting points that will get you to the vectors in X. The natural basis of (0,1)’ and (1,0)’ can get you to any two-dimensional point.
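
The "recipe" view of scalar multiplication and linear combinations can be sketched in a few lines of Python. The helper names `scale` and `linear_combination` are assumptions made for this illustration; vectors are plain lists.

```python
def scale(a, x):
    """Multiply every element of vector x by the scalar a."""
    return [a * xi for xi in x]


def linear_combination(scalars, vectors):
    """Return a1*v1 + a2*v2 + ... for vectors of equal length."""
    total = [0] * len(vectors[0])
    for a, v in zip(scalars, vectors):
        total = [t + a * vi for t, vi in zip(total, v)]
    return total


print(scale(8, [3, 2, 1]))  # [24, 16, 8]

# The natural basis reaches any two-dimensional point:
# use the first direction 4 times and the second 5 times.
e1 = [1, 0]
e2 = [0, 1]
point = linear_combination([4, 5], [e1, e2])
print(point)  # [4, 5]
```

Every vector that `linear_combination` can return, over all possible real scalars, is by definition in the span of the vectors passed in.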

A set of vectors is linearly dependent if there is some non-trivial linear combination of the

vectors that is zero (a trivial combination is one where all of the scalars are zero).

Otherwise, the set of vectors is linearly independent. Are the following sets of vectors

linearly dependent?

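$$X = \left\{ \begin{pmatrix} 8 \\ 4 \\ 12 \end{pmatrix}, \begin{pmatrix} 0 \\ 2 \\ 3 \end{pmatrix}, \begin{pmatrix} 4 \\ 0 \\ 3 \end{pmatrix} \right\} \qquad Y = \left\{ \begin{pmatrix} 6 \\ 3 \\ 4 \end{pmatrix}, \begin{pmatrix} 4 \\ 2 \\ 17 \end{pmatrix}, \begin{pmatrix} 8 \\ 4 \\ 3 \end{pmatrix} \right\}$$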

In two-dimensional space, any set of three vectors is linearly dependent, since at least one of them can always be written as a linear combination of the other two. We will see that this implies that you can’t have more variables than cases in a regression.
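
To make the two-dimensional claim concrete, here is a hedged sketch that finds the scalars a and b with a·u + b·v = w whenever u and v do not lie on the same line. The function name and the sample vectors are hypothetical, and the sketch assumes integer entries so that exact fractions can be used.

```python
from fractions import Fraction


def express_in_terms_of(u, v, w):
    """Solve a*u + b*v = w for two-element integer vectors u, v, w.

    Assumes u and v are not collinear; raises ValueError otherwise.
    """
    denom = u[0] * v[1] - u[1] * v[0]
    if denom == 0:
        raise ValueError("u and v are collinear, so they are already linearly dependent")
    a = Fraction(w[0] * v[1] - w[1] * v[0], denom)
    b = Fraction(u[0] * w[1] - u[1] * w[0], denom)
    return a, b


u, v, w = [2, 1], [1, 3], [4, 7]
a, b = express_in_terms_of(u, v, w)
print(a, b)  # 1 2

# The non-trivial combination a*u + b*v - 1*w is the zero vector.
residual = [a * u[i] + b * v[i] - w[i] for i in range(2)]
print(residual == [0, 0])  # True
```

If the first two vectors are collinear, no such a and b need exist, but then those two vectors are already linearly dependent on their own, so the set of three is dependent either way.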

B. Matrices: Vectors Get Together. Matrices are arrays of numbers which look

like a set of vectors all stuck inside the same parentheses. They have rows and columns, and

the dimension of a matrix is its number of rows and columns written in the same order as Royal

Crown cola: R x C. So here are a couple of matrices:

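$$X = \begin{pmatrix} 1 & 9 & 6 \\ 3 & 8 & 12 \\ -4 & 274 & 1 \end{pmatrix} \qquad Y = \begin{pmatrix} 6 & 14 \\ 12 & 9 \\ -4 & 9 \\ 98 & 5 \end{pmatrix}$$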

X is a 3x3 matrix, and Y is a 4x2 matrix. The i,jth element of a matrix is the element in its

ith row and its jth column. The 3,1th element of both matrices is -4. If two matrices have

exactly the same dimensions, we can add or subtract them like we do with vectors simply by

adding or subtracting the parallel elements. We can also do scalar multiplication of matrices

simply, by multiplying each element of matrix X by scalar a to compute aX.
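
A small sketch of these matrix basics, using nested Python lists (one inner list per row). The function names and the matrices `P` and `Q` are assumptions chosen for illustration only.

```python
def shape(m):
    """Return the dimension of a matrix as (rows, columns), i.e. R x C."""
    return (len(m), len(m[0]))


def element(m, i, j):
    """Return the i,jth element using the handout's 1-based row/column indexing."""
    return m[i - 1][j - 1]


def add_matrices(a, b):
    """Add two matrices of identical dimensions element by element."""
    if shape(a) != shape(b):
        raise ValueError("matrices must have exactly the same dimensions")
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def scale_matrix(s, m):
    """Multiply each element of matrix m by the scalar s."""
    return [[s * x for x in row] for row in m]


P = [[1, 2], [3, 4], [5, 6]]          # hypothetical 3 x 2 matrix
Q = [[10, 20], [30, 40], [50, 60]]    # hypothetical 3 x 2 matrix
print(shape(P))            # (3, 2)
print(element(P, 3, 1))    # 5
print(add_matrices(P, Q))  # [[11, 22], [33, 44], [55, 66]]
print(scale_matrix(2, P))  # [[2, 4], [6, 8], [10, 12]]
```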

II. Multiplying Two Matrices by Computing Cross-Products

A. Conformability. The order of the two matrices that you may want to multiply

matters. If you want to compute the product of matrices AB, then you are premultiplying B by

A. Alternatively, premultiplying A by B is denoted by BA. Here’s the important rule:

Matrix B is “conformable for premultiplication” by A iff (if and only if) the number

of columns in A is equal to the number of rows in B. The resulting product AB will

have as many rows as A, and as many columns as B.
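
The conformability rule can be sketched as a check on dimensions alone. The function name `product_shape` is an assumption for this illustration; it takes (rows, columns) pairs rather than the matrices themselves.

```python
def product_shape(shape_a, shape_b):
    """Return the dimension of AB, or None if B is not conformable
    for premultiplication by A."""
    rows_a, cols_a = shape_a
    rows_b, cols_b = shape_b
    if cols_a != rows_b:
        return None
    return (rows_a, cols_b)


print(product_shape((2, 3), (3, 2)))  # (2, 2)
print(product_shape((3, 2), (2, 3)))  # (3, 3)

# A 3 x 3 matrix and a 4 x 2 matrix cannot be multiplied in either order.
print(product_shape((3, 3), (4, 2)))  # None
print(product_shape((4, 2), (3, 3)))  # None
```

The first two calls also show why order matters: the same pair of matrices yields a 2 x 2 product one way and a 3 x 3 product the other way.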

B. Cross-Products. OK, so you have two conformable matrices, now what do you

do? Basically, you take a row of the first matrix, multiply each of its elements by the

elements in the corresponding column of the second matrix, and take the sum. More

formally, to get the i,jth element of the product AB, you compute the sum of the element-by-element products of row i of A and column j of B. An example is:

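$$\begin{pmatrix} 3 & 1 & 2 \\ 4 & -2 & -3 \end{pmatrix} \begin{pmatrix} 4 & 2 \\ -1 & 7 \\ 5 & -1 \end{pmatrix} = \begin{pmatrix} 12 - 1 + 10 & 6 + 7 - 2 \\ 16 + 2 - 15 & 8 - 14 + 3 \end{pmatrix} = \begin{pmatrix} 21 & 11 \\ 3 & -3 \end{pmatrix}$$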
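
The row-times-column procedure can be sketched directly in Python. The function name `matmul` and the matrices `A` and `B` are illustrative choices for this sketch, with matrices stored as nested lists of rows.

```python
def matmul(a, b):
    """Return the product AB, where each i,jth element is the sum of the
    element-by-element products of row i of A and column j of B."""
    if len(a[0]) != len(b):
        raise ValueError("columns of A must equal rows of B")
    n_rows, n_inner, n_cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(n_inner)) for j in range(n_cols)]
        for i in range(n_rows)
    ]


A = [[3, 1, 2],
     [4, -2, -3]]   # 2 x 3
B = [[4, 2],
     [-1, 7],
     [5, -1]]       # 3 x 2

print(matmul(A, B))  # [[21, 11], [3, -3]]
```

The result has as many rows as `A` and as many columns as `B`, as the conformability rule says it should.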

C. The Identity Matrix. This helpful matrix has ones on its diagonal and zeros elsewhere. It is denoted by $I_N$ for N dimensions. It is the multiplicative

identity for any other square matrix (a matrix where R=C). Thus, AI = IA = A. Why?
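
One way to get a feel for the "Why?" is to build an identity matrix and multiply. This is only a numerical check on a hypothetical matrix `A`, not a proof, and the helper names are assumptions.

```python
def identity(n):
    """Return the n x n identity matrix: ones on the diagonal, zeros elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Row-by-column matrix product of conformable matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


A = [[2, 7],
     [-1, 3]]  # hypothetical square matrix
I2 = identity(2)

print(matmul(A, I2) == A)  # True
print(matmul(I2, A) == A)  # True
```

In each sum of products, the single 1 in a row or column of the identity matrix keeps exactly one element of `A`, and the zeros wipe out the rest.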

D. The Rank of a Matrix. The number of linearly independent rows of a matrix is

its row rank, the number of linearly independent columns is its column rank, and if its row and

column ranks equal its number of rows and columns, it is full rank.
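
Rank can be computed by row reduction, which the handout does not cover; the sketch below swaps in Gaussian elimination with exact fractions as one possible way to count linearly independent rows. The function names and test matrices are assumptions.

```python
from fractions import Fraction


def rank(matrix):
    """Count linearly independent rows using Gaussian elimination."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    found = 0  # number of pivot rows found so far
    for col in range(n_cols):
        # Look for a row at or below position `found` with a non-zero entry here.
        pivot = next((r for r in range(found, n_rows) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[found], rows[pivot] = rows[pivot], rows[found]
        # Clear this column in every row below the pivot row.
        for r in range(found + 1, n_rows):
            factor = rows[r][col] / rows[found][col]
            rows[r] = [x - factor * p for x, p in zip(rows[r], rows[found])]
        found += 1
        if found == n_rows:
            break
    return found


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


full = [[1, 2], [3, 4]]
deficient = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]  # second row is twice the first

print(rank(full))                  # 2
print(rank(deficient))             # 2
print(rank(transpose(deficient)))  # 2
```

The last two lines illustrate that the row rank and the column rank of the same matrix agree; `deficient` is 3 x 3 with rank 2, so it is not full rank.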

III. Manipulating and Inverting Matrices

A. Rules for Matrix Manipulation. Using A, B, and C to stand for matrices of

dimension R x C, and A’ to represent the transpose of matrix A, the following rules apply:

i. The commutative property does NOT hold for matrices. In general, AB does not equal BA, even if both matrices are square and thus conformable for premultiplication by each other.

ii. The associative property does hold, so (AB)C=A(BC)

iii. The distributive property also holds, so A(B+C)=AB+AC

iv. You can take the transpose of products of matrices by the following rules:

(AB)’=B’A’ and (ABC)’=C’B’A’.
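
These rules can be spot-checked numerically. The sketch below uses three hypothetical 2 x 2 matrices and assumed helper names; a check on one example illustrates the rules but does not prove them.

```python
def matmul(a, b):
    """Row-by-column matrix product of conformable matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def add(a, b):
    """Element-by-element sum of two matrices with the same dimensions."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 3]]

not_commutative = matmul(A, B) != matmul(B, A)
associative = matmul(matmul(A, B), C) == matmul(A, matmul(B, C))
distributive = matmul(A, add(B, C)) == add(matmul(A, B), matmul(A, C))
# The transpose of a product reverses the order AND transposes each factor.
transpose_rule = transpose(matmul(A, B)) == matmul(transpose(B), transpose(A))

print(not_commutative, associative, distributive, transpose_rule)
```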

B. Finding the “determinant” of a 2 x 2 matrix. As we will see in the next class,

learning how to invert matrices is a very important skill. The first step in inverting a matrix

is finding its determinant, and the determinant of matrix X is denoted as |X|. (This

representation has nothing to do with absolute values.) By definition, for a 2 x 2 matrix with

elements labeled as a, b, c, and d,

$$\text{If } X = \begin{pmatrix} a & c \\ b & d \end{pmatrix}, \text{ then } \det(X) = |X| = ad - bc \text{ and } |X| = |X'|.$$
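
As a minimal sketch of the 2 x 2 determinant, using the same labeling as above (a and c in the top row, b and d in the bottom row); the function name and the sample matrix are assumptions.

```python
def det_2x2(m):
    """Determinant of a 2 x 2 matrix [[a, c], [b, d]]: ad - bc."""
    (a, c), (b, d) = m
    return a * d - b * c


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


X = [[3, 1],
     [2, 4]]  # hypothetical matrix

print(det_2x2(X))             # 10
print(det_2x2(transpose(X)))  # 10
```

Transposing swaps b and c, which leaves the product bc unchanged, so the determinant of the transpose is the same.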

C. Finding the inverse of a 2 x 2 matrix. To find $X^{-1}$, the (multiplicative) inverse

of a 2 x 2 matrix X, you’ll want to first find its determinant. This will become a scalar by

which you will multiply a rearranged version of the matrix. If you’d like to see a proof of

why this yields the inverse, or if you are disappointed in me for not making you learn how to

invert a matrix of larger dimension and want to bring that burden upon yourself, probably

the best source to learn this all is a nice little book called Introduction to Matrix Theory and

Linear Algebra, by Irving Reiner. If not, here’s the definition of the inverse of a 2 x 2 matrix:

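$$\text{If } X = \begin{pmatrix} a & c \\ b & d \end{pmatrix}, \text{ then } X^{-1} = \frac{1}{|X|} \begin{pmatrix} d & -c \\ -b & a \end{pmatrix}.$$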
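
The 2 x 2 inverse formula can be sketched and checked numerically as follows. The function names and the sample matrix are assumptions; exact fractions are used so that multiplying back gives the identity without rounding error.

```python
from fractions import Fraction


def inverse_2x2(m):
    """Inverse of [[a, c], [b, d]]: (1/|X|) * [[d, -c], [-b, a]]."""
    (a, c), (b, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("determinant is zero, so the matrix has no inverse")
    k = 1 / Fraction(det)
    return [[k * d, -k * c], [-k * b, k * a]]


def matmul(a, b):
    """Row-by-column matrix product of conformable matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


X = [[4, 7],
     [2, 6]]  # hypothetical matrix with determinant 10
X_inv = inverse_2x2(X)

print(matmul(X, X_inv) == [[1, 0], [0, 1]])  # True
print(matmul(X_inv, X) == [[1, 0], [0, 1]])  # True
```

Multiplying in either order returns the identity matrix, which is what it means for `X_inv` to be the multiplicative inverse of `X`.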

D. What You Can and Can’t Invert. As you can see, the determinant needs to be

non-zero in order for a matrix to be “invertible.” Even more constraining is the rule that a

matrix needs to be square in order for it to have an inverse. This is a key rule. Its proof has

to do with thinking of matrices as geometric mapping instructions, and seeing that a matrix

must be square in order for two successive transformations to be one-to-one. This is also

the basis of the third rule of matrix inversion that is important for OLS: a square matrix

must have linearly independent columns (full rank) in order to be inverted.
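
For the 2 x 2 case, these conditions can be sketched as a single check. The function name is an assumption, and the sketch covers only 2 x 2 candidates, where a zero determinant and linearly dependent columns amount to the same thing.

```python
def is_invertible_2x2(m):
    """Return True only if m is a 2 x 2 matrix with a non-zero determinant."""
    is_square = len(m) == 2 and all(len(row) == 2 for row in m)
    if not is_square:
        return False
    (a, c), (b, d) = m
    return a * d - b * c != 0


print(is_invertible_2x2([[1, 2], [3, 4]]))        # True
# Second column is twice the first, so the columns are linearly dependent.
print(is_invertible_2x2([[1, 2], [2, 4]]))        # False
# Not square, so no inverse exists.
print(is_invertible_2x2([[1, 2, 3], [4, 5, 6]]))  # False
```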