Notes 10 - Wharton Statistics Department

advertisement

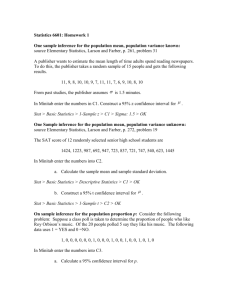

Statistics 512 Notes 10: Bootstrap Procedures

Bootstrap standard errors

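Let $X_1, \ldots, X_n$ be iid with CDF $F$ and variance $\sigma^2$. Then

$$\operatorname{Var}(\bar{X}) = \operatorname{Var}\left(\frac{X_1 + \cdots + X_n}{n}\right) = \frac{1}{n^2}\operatorname{Var}(X_1 + \cdots + X_n) = \frac{n\sigma^2}{n^2} = \frac{\sigma^2}{n},$$

so $SD(\bar{X}) = \sigma/\sqrt{n}$. We estimate $SD(\bar{X})$ by $SE(\bar{X}) = s/\sqrt{n}$, where $s$ is the sample standard deviation.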
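As a quick sanity check of $SD(\bar X) = \sigma/\sqrt{n}$, the R sketch below simulates many samples and compares the spread of their means with the formula. The choice of $F$ (normal with $\sigma = 2$), $n = 25$, and the number of simulated samples are assumptions made only for illustration.

```r
# Monte Carlo check that SD(Xbar) = sigma / sqrt(n).
# Assumption (illustration only): F is N(0, sigma^2) with sigma = 2, and n = 25.
set.seed(1)
sigma <- 2
n <- 25
# Simulate 20000 samples of size n and record each sample mean
xbars <- replicate(20000, mean(rnorm(n, mean = 0, sd = sigma)))
sd(xbars)         # simulated SD of the sample mean
sigma / sqrt(n)   # theoretical value, 0.4

# From a single observed sample, SE(Xbar) = s / sqrt(n) estimates SD(Xbar)
x <- rnorm(n, mean = 0, sd = sigma)
se_xbar <- sd(x) / sqrt(n)
se_xbar
```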

What about $SD\{\mathrm{Median}(X_1, \ldots, X_n)\}$? This SD depends in a complicated way on the distribution $F$ of the $X$'s. How can we approximate it?

Real World: $F \Longrightarrow X_1, \ldots, X_n \Longrightarrow T_n = \mathrm{Median}(X_1, \ldots, X_n)$.

The bootstrap principle is to approximate the real world by assuming that $F = \hat{F}_n$, where $\hat{F}_n$ is the empirical CDF, i.e., the distribution that puts probability $1/n$ on each of $X_1, \ldots, X_n$. We simulate a draw from $\hat{F}_n$ by drawing one point at random from the original data set.
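A small R sketch of what "simulating from $\hat F_n$" means in practice, using the sample 4, 6, 8 from the example in these notes. The number of draws is an arbitrary choice for illustration; `ecdf()` is base R's empirical CDF.

```r
# Simulating from the empirical CDF F_hat_n of the observed sample 4, 6, 8
set.seed(2)
x <- c(4, 6, 8)
# One draw from F_hat_n: choose one observed point, each with probability 1/n
one_draw <- sample(x, size = 1)
# Many draws: the relative frequencies should all be close to 1/n = 1/3
draws <- sample(x, size = 30000, replace = TRUE)
freqs <- table(draws) / length(draws)
freqs
# F_hat_n itself: F_hat_n(t) = (number of x_i <= t) / n
Fhat <- ecdf(x)
Fhat(6)   # 2/3
```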

Bootstrap World: $\hat{F}_n \Longrightarrow X_1^*, \ldots, X_n^* \Longrightarrow T_n^* = \mathrm{Median}(X_1^*, \ldots, X_n^*)$.

The bootstrap estimate of $SD\{\mathrm{Median}(X_1, \ldots, X_n)\}$ is $SD\{\mathrm{Median}(X_1^*, \ldots, X_n^*)\}$, where $X_1^*, \ldots, X_n^*$ are iid draws from $\hat{F}_n$, i.e., $X_1^*, \ldots, X_n^*$ is a sample drawn with replacement from the observed sample $(x_1, \ldots, x_n)$.

Example: Suppose we draw $X_1, X_2, X_3$ iid from an unknown distribution. The observed sample is 4, 6, 8. The distribution of samples of size 3 from the empirical distribution puts probability 1/27 on each of the following 27 samples:

{(4,4,4), (4,4,6), (4,4,8), (4,6,4), (4,6,6), (4,6,8), (4,8,4), (4,8,6), (4,8,8), (6,4,4), (6,4,6), (6,4,8), (6,6,4), (6,6,6), (6,6,8), (6,8,4), (6,8,6), (6,8,8), (8,4,4), (8,4,6), (8,4,8), (8,6,4), (8,6,6), (8,6,8), (8,8,4), (8,8,6), (8,8,8)}

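The distribution of the median puts probability 1/27 on each of the following values of the median (one per sample, in the order listed above):

{4, 4, 4, 4, 6, 6, 4, 6, 8, 4, 6, 6, 6, 6, 6, 6, 6, 8, 4, 6, 8, 6, 6, 8, 8, 8, 8}

That is, the median equals 4 with probability 7/27, 6 with probability 13/27, and 8 with probability 7/27.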

The standard deviation of the median of samples of size three from the empirical distribution is

$$SD\{\mathrm{Median}(X_1^*, X_2^*, X_3^*)\} = \sqrt{56/27} \approx 1.44.$$
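The exact calculation above can be reproduced in R by enumerating all 27 equally likely resamples. This is a sketch for this tiny example only; `expand.grid()` lists the samples in a different order than the list above, which does not affect the distribution of the median.

```r
# Exact bootstrap distribution of the median for the observed sample 4, 6, 8
x <- c(4, 6, 8)
# All 3^3 = 27 ordered samples of size 3 drawn with replacement
samples <- expand.grid(x, x, x)
meds <- apply(samples, 1, median)
table(meds)   # 4: 7 samples, 6: 13 samples, 8: 7 samples
# Each sample has probability 1/27, so divide by 27 (sd() would divide by 26)
sd_exact <- sqrt(mean((meds - mean(meds))^2))
sd_exact      # sqrt(56/27), about 1.44
```

Enumeration is only feasible for very small $n$, since there are $n^n$ ordered resamples.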

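How can we approximate $SD\{\mathrm{Median}(X_1^*, \ldots, X_n^*)\}$ when $n$ is too large to enumerate all $n^n$ resamples? The Monte Carlo method. If $X_1, \ldots, X_m$ are iid copies of a random vector $X$ and $g$ is a function, then by the law of large numbers

$$\frac{1}{m}\sum_{i=1}^m\left(g(X_i) - \frac{1}{m}\sum_{i=1}^m g(X_i)\right)^2 = \frac{1}{m}\sum_{i=1}^m g(X_i)^2 - \left(\frac{1}{m}\sum_{i=1}^m g(X_i)\right)^2 \xrightarrow{P} E[g(X)^2] - \left(E[g(X)]\right)^2 = \operatorname{Var}[g(X)].$$

Here $X$ plays the role of a bootstrap sample $(X_1^*, \ldots, X_n^*)$ and $g$ is the median, so the bootstrap SD can be approximated as closely as we like by taking $m$ large.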
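Applied to the 4, 6, 8 example, the Monte Carlo method should land close to the exact value 1.44. A minimal R sketch; the number of Monte Carlo replications $m = 20000$ is an arbitrary choice.

```r
# Monte Carlo approximation of SD{Median(X1*, X2*, X3*)} for the sample 4, 6, 8
set.seed(3)
x <- c(4, 6, 8)
m <- 20000
# g(X_i): median of the i-th resample of size 3 drawn with replacement
g_vals <- replicate(m, median(sample(x, size = 3, replace = TRUE)))
# (1/m) sum g^2 - ((1/m) sum g)^2, which converges to Var g(X)
mc_var <- mean(g_vals^2) - mean(g_vals)^2
mc_sd <- sqrt(mc_var)
mc_sd   # close to the exact value sqrt(56/27), about 1.44
```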

Bootstrap Standard Error Estimation for Statistic $T_n = g(X_1, \ldots, X_n)$:

1. Draw $X_1^*, \ldots, X_n^*$ as a sample with replacement from the observed sample $x_1, \ldots, x_n$.
2. Compute $T_n^* = g(X_1^*, \ldots, X_n^*)$.
3. Repeat steps 1 and 2 $m$ times to get $T_{n,1}^*, \ldots, T_{n,m}^*$.
4. Let
$$\mathrm{se}_{\mathrm{boot}} = \left\{\frac{1}{m-1}\sum_{i=1}^m\left(T_{n,i}^* - \frac{1}{m}\sum_{r=1}^m T_{n,r}^*\right)^2\right\}^{1/2}.$$
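Steps 1 to 4 can be written once for any statistic by passing the statistic as an R function. The helper name `bootse` is hypothetical (not from the notes), and resampling indices with `sample.int()` rather than calling `sample(X, ...)` is a design choice that sidesteps `sample()`'s special behavior when `X` is a single number.

```r
# Steps 1-4 for a general statistic. 'bootse' is a hypothetical helper name;
# 'statistic' is any R function that maps a numeric vector to one number.
bootse <- function(X, statistic, bootreps) {
  n <- length(X)
  Tstar <- numeric(bootreps)
  for (i in 1:bootreps) {
    # Step 1: resample n indices with replacement (indexing avoids sample()'s
    # special case for a length-one numeric vector)
    Xstar <- X[sample.int(n, size = n, replace = TRUE)]
    # Step 2: compute the statistic on the resample
    Tstar[i] <- statistic(Xstar)
  }
  # Step 3 is the loop above; step 4: sd() uses the 1/(m - 1) divisor
  sd(Tstar)
}

set.seed(4)
se_med <- bootse(c(4, 6, 8), median, 20000)   # close to 1.44
```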

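The bootstrap involves two approximations:

$$SD_F(T_n) \;\underset{\text{not so small approx. error}}{\approx}\; SD_{\hat{F}_n}(T_n) \;\underset{\text{small approx. error}}{\approx}\; \mathrm{se}_{\mathrm{boot}}$$

The first error comes from replacing $F$ by $\hat{F}_n$ and does not shrink as $m$ grows; the second is Monte Carlo error, which can be made as small as desired by taking $m$ large.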

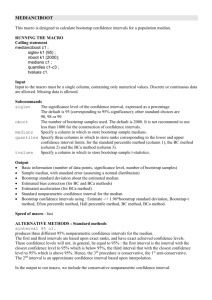
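The two approximations can be seen in a simulation where $F$ is known. In the R sketch below, the choice of $F$ (Exponential(1)), $n = 48$, and the replication counts are assumptions for illustration only. Typically `se_boot_1` and `se_boot_2` are close to each other, since only Monte Carlo error separates them, while both can differ more from `sd_F` because `x` is a single sample and $\hat F_n \ne F$. Note that `sd_F` is itself a Monte Carlo approximation of $SD_F(T_n)$.

```r
# Illustration of the two approximations for the sample median.
# Assumption (illustration only): F is the Exponential(1) distribution, n = 48.
set.seed(5)
n <- 48
# SD_F(T_n): approximated by simulating 20000 samples from the known F
sd_F <- sd(replicate(20000, median(rexp(n))))
# One observed sample from F; SD_{F_hat_n}(T_n) is what the bootstrap targets
x <- rexp(n)
# Two independent bootstrap runs (m = 5000 each) on the same observed sample
se_boot_1 <- sd(replicate(5000, median(sample(x, n, replace = TRUE))))
se_boot_2 <- sd(replicate(5000, median(sample(x, n, replace = TRUE))))
c(sd_F = sd_F, se_boot_1 = se_boot_1, se_boot_2 = se_boot_2)
```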

R function for bootstrap estimate of SE(Median)

```r
bootstrapmedianfunc=function(X,bootreps){
  medianX=median(X);
  # vector that will store the bootstrapped medians
  bootmedians=rep(0,bootreps);
  for(i in 1:bootreps){
    # Draw a sample of size n from X with replacement and
    # calculate median of sample
    Xstar=sample(X,size=length(X),replace=TRUE);
    bootmedians[i]=median(Xstar);
  }
  seboot=var(bootmedians)^.5;
  list(medianX=medianX,seboot=seboot);
}
```

Example: In a study of the natural variability of rainfall, the rainfall of summer storms was measured by a network of rain gauges in southern Illinois for the year 1960.

```r
> rainfall=c(.02,.01,.05,.21,.003,.45,.001,.01,2.13,.07,.01,.01,
+ .001,.003,.04,.32,.19,.18,.12,.001,1.1,.24,.002,.67,.08,.003,
+ .02,.29,.01,.003,.42,.27,.001,.001,.04,.01,1.72,.001,.14,.29,
+ .002,.04,.05,.06,.08,1.13,.07,.002)
> median(rainfall)
[1] 0.045
> bootstrapmedianfunc(rainfall,10000)
$medianX
[1] 0.045

$seboot
[1] 0.02167736
```

Bootstrap for skewness

The skewness of a distribution $F$ is

$$\theta = \frac{\int (x - \mu)^3 \, dF(x)}{\sigma^3},$$

where $\mu$ and $\sigma$ are the mean and standard deviation of $F$. The skewness is a measure of asymmetry; it equals 0 for a symmetric distribution. A natural point estimate of the skewness based on an iid sample $X_1, \ldots, X_n$ is

$$\hat{\theta}_n = \frac{\frac{1}{n}\sum_{i=1}^n (X_i - \bar{X})^3}{s^3}.$$
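A minimal R sketch of $\hat\theta_n$ exactly as written above, dividing the third central moment by $s^3$ with $s$ taken from `sd()` (divisor $n-1$). The helper name `skewhat` and the two toy inputs are assumptions for illustration.

```r
# Sample skewness as defined above: (1/n) sum (X_i - Xbar)^3, divided by s^3,
# where s is the sample standard deviation from sd() (divisor n - 1).
# 'skewhat' is a hypothetical helper name used only for this sketch.
skewhat <- function(X) {
  n <- length(X)
  (sum((X - mean(X))^3) / n) / sd(X)^3
}

skewhat(c(1, 2, 3))   # 0 for a symmetric sample
skewhat(c(0, 0, 1))   # 2 * sqrt(3) / 9, about 0.385
```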

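The R computation for the rainfall data is below. Note that it divides by `var(rainfall)^.5`, i.e. by $s$ rather than $s^3$, so the printed value is $\frac{1}{n}\sum_{i=1}^n (X_i - \bar{X})^3 / s$; replacing `^.5` by `^1.5` gives $\hat{\theta}_n$ as defined above. The bootstrap skewness code that follows uses the same `^.5` scaling.

```r
> thetahat=(sum((rainfall-mean(rainfall))^3)/length(rainfall))/var(rainfall)^.5
> thetahat
[1] 0.5517379
```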

Bootstrap estimate of the standard error of $\hat{\theta}_n$:

```r
bootstrapskewnessfunc=function(X,bootreps){
  # skewness estimate: third central moment divided by var(X)^.5, i.e. by s
  skewnessX=(sum((X-mean(X))^3)/length(X))/var(X)^.5;
  # vector that will store the bootstrapped skewness estimates
  bootskewness=rep(0,bootreps);
  for(i in 1:bootreps){
    # Draw a sample of size n from X with replacement and
    # calculate skewness estimate of sample
    Xstar=sample(X,size=length(X),replace=TRUE);
    bootskewness[i]=(sum((Xstar-mean(Xstar))^3)/length(Xstar))/var(Xstar)^.5;
  }
  seboot=var(bootskewness)^.5;
  list(skewnessX=skewnessX,seboot=seboot);
}
```

```r
> bootstrapskewnessfunc(rainfall,10000)
$skewnessX
[1] 0.5517379

$seboot
[1] 0.3362528
```

Bootstrap confidence intervals

There are several ways of using the bootstrap idea to obtain approximate confidence intervals. Here we present one approach: percentile bootstrap confidence intervals.

Suppose we want to estimate a parameter $\theta$ and have a point estimator $\hat{\theta}(X_1, \ldots, X_n)$.

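To form a $(1-\alpha)$ percentile bootstrap confidence interval, we obtain $m$ bootstrap resamples (typically $m = 3000$ or more),

$$(X_{11}^*, \ldots, X_{1n}^*), \ldots, (X_{m1}^*, \ldots, X_{mn}^*).$$

We calculate $\hat{\theta}_1^*, \ldots, \hat{\theta}_m^*$ based on the bootstrap resamples. Let $\hat{\theta}_{(1)}^* \le \hat{\theta}_{(2)}^* \le \cdots \le \hat{\theta}_{(m)}^*$ denote the ordered values of $\hat{\theta}_1^*, \ldots, \hat{\theta}_m^*$, and let $[\cdot]$ denote the greatest integer function. The percentile bootstrap confidence interval is

$$\left(\hat{\theta}_{([m\alpha/2])}^*,\; \hat{\theta}_{(m+1-[m\alpha/2])}^*\right).$$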
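For a concrete case, the R sketch below computes the two order-statistic indices for $m = 10000$ and $\alpha = 0.05$ and applies them to a sorted vector. The normal draws standing in for $\hat\theta_1^*, \ldots, \hat\theta_m^*$ are placeholders, not bootstrap output. The `percentciboot` functions in these notes compute the cutoff as `floor((alpha/2)*(m+1))`, which gives the same index here.

```r
# Order-statistic indices of the percentile interval for m = 10000, alpha = 0.05
m <- 10000
alpha <- 0.05
lower_index <- floor(m * alpha / 2)           # [m * alpha / 2] = 250
upper_index <- m + 1 - lower_index            # m + 1 - [m * alpha / 2] = 9751
cutoff_notes <- floor((alpha / 2) * (m + 1))  # cutoff used by percentciboot: 250

# Applying the indices to m estimates (placeholder values, not real bootstrap output)
set.seed(7)
thetastar <- rnorm(m)
sorted <- sort(thetastar)
ci <- c(lower = sorted[lower_index], upper = sorted[upper_index])
```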

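Motivation for percentile bootstrap confidence intervals: The interval from the $\alpha/2$ quantile to the $1-\alpha/2$ quantile of the sampling distribution of $\hat{\theta}$ contains $\hat{\theta}$ with probability $1-\alpha$, which heuristically makes it a natural range of plausible values for the true $\theta$. We can view $\hat{\theta}_1^*, \ldots, \hat{\theta}_m^*$ as approximate samples from the sampling distribution of $\hat{\theta}$, and $\hat{\theta}_{([m\alpha/2])}^*$ and $\hat{\theta}_{(m+1-[m\alpha/2])}^*$ as estimates of the $\alpha/2$ quantile and the $1-\alpha/2$ quantile of the sampling distribution of $\hat{\theta}$.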

Let $\hat{\theta}$ be a point estimate of $\theta$ and $\widehat{se}$ be the estimated standard error of $\hat{\theta}$. Suppose

Example: Percentile Bootstrap Confidence Interval for Median

```r
# Function that forms percentile bootstrap confidence
# interval for median. To find the percentile bootstrap
# confidence interval for a parameter other than the median,
# substitute the appropriate function at both calls to the
# median.
percentciboot=function(X,m,alpha){
  # X is a vector containing the original sample
  # m is the desired number of bootstrap replications
  # alpha is 1 minus the confidence level (e.g. .05 for a 95% interval)
  theta=median(X);
  thetastar=rep(0,m); # stores bootstrap estimates of theta
  n=length(X);
  # Carry out m bootstrap resamples and estimate theta for
  # each resample
  for(i in 1:m){
    Xstar=sample(X,n,replace=TRUE);
    thetastar[i]=median(Xstar);
  }
  thetastarordered=sort(thetastar); # order the thetastars
  # requires (alpha/2)*(m+1) >= 1 so that cutoff is at least 1
  cutoff=floor((alpha/2)*(m+1));
  lower=thetastarordered[cutoff]; # lower CI endpoint
  upper=thetastarordered[m+1-cutoff]; # upper CI endpoint
  list(theta=theta,lower=lower,upper=upper);
}
```

```r
> percentciboot(rainfall,10000,.05)
$theta
[1] 0.045

$lower
[1] 0.01

$upper
[1] 0.1
```

Percentile bootstrap confidence interval for skewness: replace the median in the R function above by the skewness estimate.

```r
percentciboot=function(X,m,alpha){
  # X is a vector containing the original sample
  # m is the desired number of bootstrap replications
  # alpha is 1 minus the confidence level (e.g. .05 for a 95% interval)
  theta=(sum((X-mean(X))^3)/length(X))/var(X)^.5;
  thetastar=rep(0,m); # stores bootstrap estimates of theta
  n=length(X);
  # Carry out m bootstrap resamples and estimate theta for
  # each resample
  for(i in 1:m){
    Xstar=sample(X,n,replace=TRUE);
    # as written, this divides by the ORIGINAL sample's var(X)^.5,
    # not by the resample's var(Xstar)^.5 as bootstrapskewnessfunc does
    thetastar[i]=(sum((Xstar-mean(Xstar))^3)/length(Xstar))/var(X)^.5;
  }
  thetastarordered=sort(thetastar); # order the thetastars
  cutoff=floor((alpha/2)*(m+1));
  lower=thetastarordered[cutoff]; # lower CI endpoint
  upper=thetastarordered[m+1-cutoff]; # upper CI endpoint
  list(theta=theta,lower=lower,upper=upper);
}
```

```r
> percentciboot(rainfall,10000,.05)
$theta
[1] 0.5517379

$lower
[1] 0.05368078

$upper
[1] 1.079607
```

Validity of bootstrap CIs: This is a complicated subject with a huge literature, but for most problems bootstrap CIs have asymptotically correct coverage. Davison and Hinkley (1997, Bootstrap Methods and Their Application) is a good reference.

Bootstrap summary

1. The bootstrap is a powerful method for estimating standard errors and obtaining approximate confidence intervals for point estimators.

2. Besides the percentile bootstrap confidence interval, there are several other methods for using the bootstrap to obtain approximate confidence intervals. One idea is to standardize the estimator $\hat{\theta}$ by an estimate of scale (see Problem 5.9.5). Efron and Tibshirani, An Introduction to the Bootstrap, is an excellent book on the bootstrap.
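One common way to carry out the standardization idea in point 2 is the bootstrap-t (studentized) interval. The R sketch below does this for a population mean, using $s/\sqrt{n}$ as the scale estimate; the function name `boottci_mean`, the choice of the mean as the parameter, and the simulated data are assumptions for illustration, not taken from these notes or from Problem 5.9.5.

```r
# Bootstrap-t interval for a population mean (illustrative sketch).
# Each resample gives t* = (mean(X*) - mean(X)) / (sd(X*) / sqrt(n)); the
# interval is (thetahat - t*_{1-alpha/2} * se, thetahat - t*_{alpha/2} * se).
boottci_mean <- function(X, m, alpha) {
  n <- length(X)
  thetahat <- mean(X)
  sehat <- sd(X) / sqrt(n)
  tstar <- numeric(m)
  for (i in 1:m) {
    Xstar <- X[sample.int(n, size = n, replace = TRUE)]
    # If every value in a resample is equal, sd(Xstar) is 0 and t* is not
    # finite (quantile() below then fails on NaN); this is rare unless n is
    # very small or the data are heavily tied.
    tstar[i] <- (mean(Xstar) - thetahat) / (sd(Xstar) / sqrt(n))
  }
  q <- quantile(tstar, c(alpha / 2, 1 - alpha / 2), names = FALSE)
  c(lower = thetahat - q[2] * sehat, upper = thetahat - q[1] * sehat)
}

set.seed(6)
x <- rnorm(50, mean = 10, sd = 1)
ci <- boottci_mean(x, 2000, 0.05)
ci
```

Note the reversal: the upper quantile of $t^*$ sets the lower endpoint, and vice versa, because the interval inverts $(\bar X - \mu)/\widehat{se}$.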