EXAM 2 MATERIAL


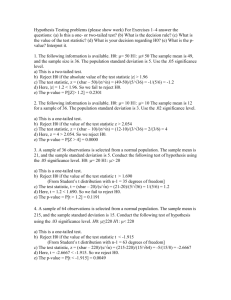

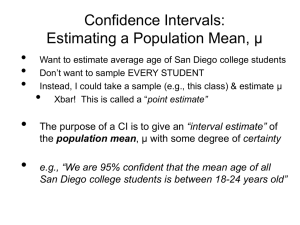

Stat303 - EXAM 2 MATERIAL
Exam Date: March 25, 26 or 27

Week 4: Probability and Inference (IPS - Ch 4)
I) Inference - using information in a sample to make a guess about a population
  A) Parameters - associated with the population
    1) Do not vary - fixed
    2) Common parameters
      a) μ - population mean
      b) σ - population standard deviation
      c) π - population proportion
  B) Statistics - associated with a sample
    1) Sampling variability - statistics can change from sample to sample
    2) Common statistics
      a) xbar - sample mean
      b) s - sample standard deviation
      c) p - sample proportion
  C) Probability - how we make statements about populations using samples
    1) Probability of an event = proportion of the time the event occurs in the population
    2) Notation: P(event) = probability of an event

Week 5: Normal Distributions (IPS - Ch 1.3)
II) Normal Distribution Revisited
  A) Recall - Empirical Rule: if a distribution is approximately normal
    1) 68% probability of being within 1 SD of the mean
    2) 95% probability of being within 2 SDs of the mean
    3) 99.7% probability of being within 3 SDs of the mean
  B) Notation: X ~ N(μ, σ²) = the variable X is normal with mean = μ and SD = σ
    1) Special case
      a) Standard normal - N(0, 1²)
      b) Notation: Z = standard normal variable
  C) Z table - gives exact probabilities for normal variables
    1) Using the table to find probabilities for standard normal variables
      a) z = column heading plus row heading
      b) The table gives P(standard normal variable is less than the value z)
      c) Notation: P(Z < z) = P(standard normal variable is less than the value z)
    2) Finding probabilities for X ~ N(μ, σ²)
      a) P(your variable is less than the value x) = P(Z < z), where z = (x - μ)/σ,
         since Z = (X - μ)/σ is a new variable with a N(0, 1) distribution
      b) Notation: P(X < x) = P(your variable is less than the value x)
      c) Intuitive interpretation of the table
         z = (x - μ)/σ is the number of SDs x is from the mean
         P(Z < z) = probability a normal variable falls below the point z SDs from the mean (above the mean if z > 0, below it if z < 0)
         If z > 0: P(Z < z) = P(normal variable no more than z SDs above the mean)
                   P(Z > z) = P(normal variable more than z SDs above the mean)
         If z < 0: P(Z < z) = P(normal variable more than |z| SDs below the mean)
                   P(Z > z) = P(normal variable no more than |z| SDs below the mean)
    3) Useful properties of the normal distribution
      a) Symmetric -> P(Z > z) = P(Z < -z)
      b) Total probability under the curve equals 1 -> P(Z > z) = 1 - P(Z < z)
  D) Types of problems you need to solve using the Z table
    1) Value, z, that corresponds to x: z = (x - μ)/σ
    2) Value, x, that corresponds to z: x = μ + zσ
    3) Probabilities for a variable X ~ N(μ, σ²)
      a) P(X < x) = P(X is less than the value x)
         Find how many SDs x is from μ: z = (x - μ)/σ
         Get P(Z < z) directly from the table
      b) P(X > x) = P(X is greater than the value x)
         Find how many SDs x is from μ: z = (x - μ)/σ
         Get P(Z > z) as 1 - P(Z < z)
      c) P(X is less than x1 or greater than x2) = P(X < x1 or X > x2)
         Find how many SDs x1 and x2 are from μ: z1 = (x1 - μ)/σ and z2 = (x2 - μ)/σ
         Get P(Z < z1 or Z > z2) as P(Z < z1) + P(Z > z2) = P(Z < z1) + 1 - P(Z < z2)
      d) P(X is between x1 and x2) = P(x1 < X < x2)
         Find how many SDs x1 and x2 are from μ: z1 = (x1 - μ)/σ and z2 = (x2 - μ)/σ
         Get P(z1 < Z < z2) as P(Z < z2) - P(Z < z1)
    4) Values of X ~ N(μ, σ²) or Z satisfying given probabilities
      a) The value x* satisfying P(X < x*) = α
         Find α in the body of the table
         Add row and column headings to get z* satisfying P(Z < z*) = α
         x* = μ + z*σ
      b) The value x* satisfying P(X > x*) = α
         Find 1 - α in the body of the table
         Add row and column headings to get z* satisfying P(Z > z*) = α
         x* = μ + z*σ
      c) The value z* satisfying P(Z < -z* or Z > z*) = P(Z is more than z* from 0) = α
         Find α/2 in the body of the table
         Add row and column headings to get -z*; z* itself is denoted z*α/2
         To get the x*'s corresponding to -z* and +z*: x1* = μ - z*σ and x2* = μ + z*σ
      d) The value z* satisfying P(-z* < Z < z*) = P(Z within z* of 0) = 1 - α
         Find z* satisfying P(Z more than z* from 0) = α (see part c above)

Week 6: Sampling Distributions (IPS - Ch 5)
I) Sampling distributions - distribution of a statistic calculated from a sample
  A) Overview
    1) Meaning of a sampling distribution
      a) Associated with each sample is a value of the statistic
      b) The value of the statistic changes from sample to sample
      c) Distribution - what values of the statistic occur and how often each value occurs
    2) Why sampling distributions are important
      a) If the distribution of X is unknown - we can't make probability statements about X
      b) Under certain circumstances, many statistics (like xbar and p) are normal
         -> we can make probability statements about Xbar and p
  B) Sampling distribution of the sample proportion, p
    1) Meaning - what values the sample proportion takes on, and how often each value occurs
    2) Under certain conditions p is approximately normally distributed
      a) Mean = population proportion, π
      b) Standard error of p: SE(p) = sqrt[π(1 - π)/n] (sometimes called SD(p))
      c) Z = (p - π)/sqrt[π(1 - π)/n] is approximately N(0, 1)
      d) P(Z < z) = P(sample proportion is less than z SE(p)'s away from π)
         If z > 0: P(Z < z) = P(sample proportion no more than z SE(p)'s above π)
                   P(Z > z) = P(sample proportion more than z SE(p)'s above π)
         If z < 0: P(Z < z) = P(sample proportion more than |z| SE(p)'s below π)
                   P(Z > z) = P(sample proportion no more than |z| SE(p)'s below π)
    3) Properties of the standard error of p
      a) Bigger samples -> better estimates more often -> less spread -> smaller SE
    4) Conditions that must hold
      a) All n observations in a sample are independent
      b) Large sample size and π not close to 0 or 1: nπ ≥ 10 AND n(1 - π) ≥ 10
  C) Sampling distribution of the sample mean, Xbar
    1) Interpretation - what values the sample mean takes on, and how often each value occurs
    2) Under certain conditions, Xbar is approximately normally distributed
      a) Mean = population mean, μ
      b) Standard error of Xbar: SE(Xbar) = σ/sqrt(n) (sometimes called SD(xbar) or σxbar)
      c) Z = (Xbar - μ)/[σ/sqrt(n)] is approximately N(0, 1)
      d) P(Z < z) = P(sample mean is less than z SEs away from μ)
         If z > 0: P(Z < z) = P(sample mean no more than z SEs above μ)
                   P(Z > z) = P(sample mean more than z SEs above μ)
         If z < 0: P(Z < z) = P(sample mean more than |z| SEs below μ)
                   P(Z > z) = P(sample mean no more than |z| SEs below μ)
      e) Properties of the standard error of Xbar
         Bigger samples -> better estimates more often -> less spread -> smaller SE
    3) Conditions that must hold
      a) Variable you're sampling from is normally distributed (check a normal quantile plot of the sample) OR sample size is large (n > 30)
      b) All n observations in a sample are independent
    4) t distribution - when s is used in place of σ
      a) s/sqrt(n) is an estimate of SE(Xbar)
      b) T = (Xbar - μ)/[s/sqrt(n)] has a t distribution with n - 1 df
      c) P(T < t) = P(sample mean is less than t estimated SEs away from μ)
         If t > 0: P(T < t) = P(sample mean no more than t estimated SEs above μ)
                   P(T > t) = P(sample mean more than t estimated SEs above μ)
         If t < 0: P(T < t) = P(sample mean more than |t| estimated SEs below μ)
                   P(T > t) = P(sample mean no more than |t| estimated SEs below μ)
      d) Properties of the estimated standard error of Xbar
         Changes from sample to sample, even for the same sample size
         Bigger samples -> better estimates of σ more often -> t more like Z
         Bigger samples -> better estimates more often -> less spread -> smaller SE
      e) Conditions that must hold
         i) Variable you're sampling from is normally distributed (check a normal quantile plot of the sample) OR sample size is large (n > 30)
         ii) All n observations in a sample are independent
      f) Using the t table on the web
         Find the row for df = n - 1
         The table is set up the same as a Z table
      g) Types of problems you need to solve using t tables
         Same as those for the Z table

Week 7: Confidence Intervals (IPS - Ch 6)
I) Confidence intervals
  A) Overview
    1) Purpose: estimate a parameter with a range of plausible values (an interval)
    2) Why use an interval - sampling variability
    3) Basic idea: suppose X ~ N(μ, σ²)
      a) 95% of the X's are within 1.96 SDs of μ (the exact value behind the empirical rule's "2 SDs")
         -> for 95% of the x's, the interval (x - 1.96σ, x + 1.96σ) contains μ
      b) Choose just one observation, x
      c) "95% confident" that x is one of the values within 1.96 SDs of μ
         -> "95% confident" that μ is within (x - 1.96σ, x + 1.96σ)
      d) Change 1.96 to z*α/2 for other levels of confidence
         See section II) Normal Distribution Revisited, D) 4) c and d for finding z*α/2
  B) Confidence intervals for the probability/proportion in the population, π
    1) Recall: under certain conditions p ~ N(π, π(1 - π)/n), i.e. SE(p) = sqrt[π(1 - π)/n]
      a) 95% of the p's are within 1.96 SEs of π
         -> for 95% of samples, (p - 1.96 SE(p), p + 1.96 SE(p)) contains π
      b) Choose just one sample proportion, p
      c) 95% confident that p is one of the sample proportions within 1.96 SEs of π
         -> 95% confident that π is in the interval (p - 1.96 SE(p), p + 1.96 SE(p))
      d) Estimate SE(p) = sqrt[π(1 - π)/n] with sqrt[p(1 - p)/n]
      e) Change 1.96 to z*α/2 for other levels of confidence
    2) When to use
      a) Large sample size and π not close to 0 or 1
         Estimate π with p
         Check that np ≥ 10 AND n(1 - p) ≥ 10
      b) All n observations are independent
  C) Confidence intervals for the mean in the population, μ
    1) Recall: under certain conditions, Xbar ~ N(μ, σ²/n)
      a) 95% of the xbar's are within 1.96 SEs of μ
         -> for 95% of the xbar's, (xbar - 1.96 SE(Xbar), xbar + 1.96 SE(Xbar)) contains μ
      b) Choose just one sample mean, xbar
      c) 95% confident that xbar is one of the sample means within 1.96 SEs of μ
         -> 95% confident that μ is in (xbar - 1.96 SE(Xbar), xbar + 1.96 SE(Xbar))
      d) Change 1.96 to z*α/2 for other levels of confidence
    2) When to use
      a) Original variable is normally distributed OR sample size is large (n > 30)
      b) All n observations are independent
      c) True SD of the variable, σ, is known
    3) Recall: when estimating σ with s, T = (Xbar - μ)/[s/sqrt(n)] has a t distribution with n - 1 df
      a) 95% confident μ is in (xbar - t*n-1,0.025 s/sqrt(n), xbar + t*n-1,0.025 s/sqrt(n))
      b) Change t*n-1,0.025 to t*n-1,α/2 for other levels of confidence
      c) When to use
         Variable you're sampling from is normally distributed (check a normal quantile plot of the sample) OR sample size is large (n > 30)
         All n observations are independent
         True SD of the variable is NOT known

Week 8: Hypothesis Testing (IPS Ch 7.1)
I) Hypothesis tests - answering questions about parameter(s) in population(s)
  A) Overview
    1) Logic behind hypothesis testing
      a) Make a claim about a parameter in the population (usually the alternate hypothesis, Ha)
      b) Assume to the contrary that your claim is false (null hypothesis, Ho)
      c) See if the data are too unlikely given your assumption to the contrary
         Too unlikely - reject Ho (your claim is supported)
         Not too unlikely - can't reject Ho (your claim is NOT supported)
    2) Steps in hypothesis tests
      a) Make a claim about the population (usually the alternate hypothesis, Ha)
         Construct the null hypothesis, Ho, as the opposite of the alternate hypothesis, Ha
      b) Decide on a probability that will be called "too unlikely" (α)
         Commonly used: 0.05, 0.01
      c) Collect a sample
      d) Form a test statistic
      e) See how likely the data are if Ho really is true (p value)
      f) Reject Ho if your data are too unlikely (p value < α), or fail to reject Ho if the data are not too unlikely (p value NOT < α)
  B) Deciding on α
    1) Types of errors that can be made
      a) Rejecting Ho when you shouldn't have - Type I
      b) Failing to reject Ho when you should have - Type II
    2) If Type I is more critical - choose a small α
    3) If Type II is more critical - choose a large α
II) Questions about single populations
  A) Z tests
    1) Two tail
      a) Ho: μ = μo   Ha: μ ≠ μo
      b) Decide on α = probability that will be considered "too unlikely"
      c) Test statistic: z = (xbar - μo)/[σ/sqrt(n)] = # of SEs xbar is from μo
      d) p value = P[seeing data at least this extreme if Ho is true (μ = μo)]
                 = P(Xbar is at least this many SEs away from μo)
                 = 2 P(Z < -z) if z is positive, or 2 P(Z < z) if z is negative
      e) Reject Ho (accept your claim, Ha) if p value < α, or fail to reject Ho (fail to accept your claim, Ha) if p value is NOT < α
      f) Type I error - reject Ho when really μ = μo
         Type II error - fail to reject Ho when really μ ≠ μo
    2) One tail
      a) Ho: μ ≤ μo   Ha: μ > μo (or switch inequalities to test Ho: μ ≥ μo)
      b) Set α
      c) Test statistic: z = (xbar - μo)/[σ/sqrt(n)]
      d) p value = P[seeing data at least this extreme if Ho is true (μ ≤ μo)]
                 = P(Xbar is more than z SEs above μo) (or below if Ho: μ ≥ μo)
                 = P(Z > z) (or switch to < if Ho: μ ≥ μo)
      e) Reject Ho (accept your claim, Ha) if p value < α, or fail to reject Ho (fail to accept your claim, Ha) if p value is NOT < α
      f) Type I error - reject Ho when really μ ≤ μo
         Type II error - fail to reject Ho when really μ > μo (or switch inequalities)
    3) When to use Z tests
      a) True SD of the variable is known
      b) Variable you're sampling from is normally distributed (check a normal quantile plot of the sample) OR sample size is large (n > 30)
      c) All n observations are independent
  B) t tests
    1) Two tail
      a) Ho: μ = μo   Ha: μ ≠ μo
      b) Decide on α = probability representing "too unlikely"
      c) Test statistic: t = (xbar - μo)/[s/sqrt(n)], df = n - 1
      d) p value = probability of seeing data at least this extreme if Ho is true
                 = P(Xbar is at least this many estimated SEs away from μo)
                 = 2 P(T < -t) if t is positive, or 2 P(T < t) if t is negative
      e) Reject Ho (accept your claim, Ha) if p value < α, or fail to reject Ho (fail to accept your claim, Ha) if p value is NOT < α
      f) Type I error - reject Ho when really μ = μo
         Type II error - fail to reject Ho when really μ ≠ μo
    2) One tail
      a) Ho: μ ≤ μo   Ha: μ > μo (or switch inequalities to test Ho: μ ≥ μo)
      b) Set α
      c) Test statistic: t = (xbar - μo)/[s/sqrt(n)], df = n - 1
      d) p value = probability of seeing data at least this extreme if Ho is true
                 = P(Xbar is more than t estimated SEs above μo) (or below if Ho: μ ≥ μo)
                 = P(T > t) (or switch to < if Ho: μ ≥ μo)
      e) Reject Ho (accept your claim, Ha) if p value < α, or fail to reject Ho (fail to accept your claim, Ha) if p value is NOT < α
      f) Type I error - reject Ho when really μ ≤ μo
         Type II error - fail to reject Ho when really μ > μo (or switch inequalities)
    3) When to use t tests
      a) True SD of the variable is NOT known
      b) Variable you're sampling from is normally distributed (check a normal quantile plot of the sample) OR n is large (n > 30)
      c) All n observations are independent
  C) Situations where z and t tests should NOT be used
    1) Observations are not independent
    2) Data are not sampled from a normal distribution AND the sample size is small (n ≤ 30)

YOU SHOULD KNOW FOR EXAMS/QUIZZES:

How to calculate:
  z tables
    1. P(Z < z), P(Z > z), P(Z < -z), P(Z > -z)
    2. P(Z more than z from 0) = P(Z < -z or Z > z)
    3. P(Z within z of 0) = P(-z < Z < z)
    4. z* given P(Z < z*) = α
    5. z* given P(Z > z*) = α
    6. z* given P(Z farther than z* from 0) = α
    7. z* given P(Z within z* of 0) = 1 - α
    8. The z corresponding to a given x
    9. The x corresponding to a given z
    10. P(a variable, X, is farther than z SDs from μ) given X ~ N(μ, σ²)
    11. P(X < x), P(X > x), P(X < -x), P(X > -x) given x and X ~ N(μ, σ²)
    12. P(X is within z SDs of μ) given z and X ~ N(μ, σ²)
    13. P(Xbar < xbar), P(Xbar > xbar), P(Xbar < -xbar), P(Xbar > -xbar) given xbar, n, and X ~ Normal or n > 30
    14. P(Xbar is farther than z SE(xbar)'s from μ) given xbar, n, and X ~ Normal or n > 30
    15. P(Xbar is within z SE(xbar)'s of μ) given xbar, n, and X ~ Normal or n > 30
    16. P(p < some#), P(p > some#), P(p < -some#), P(p > -some#) given some#, π, and n
    17. P(p is farther than z SE(p)'s from π) given π and n
    18. P(p is within z SE(p)'s of π) given π and n
  t tables
    19. P(T < t), P(T > t), P(T < -t), P(T > -t) for some given t and n
    20. P(T farther than t from 0) for some given t and n
    21. P(T within t of 0) for some given t and n
    22. t* given n and P(T < t*) = α
    23. t* given n and P(T > t*) = α
    24. t* given n and P(T farther than t* from 0) = α
    25. t* given n and P(T within t* of 0) = 1 - α
    26. t corresponding to xbar, given n and s and X ~ Normal with mean = μ
    27. xbar corresponding to t, given n and s and X ~ Normal with mean = μ
    28. P(Xbar < xbar), P(Xbar > xbar), P(Xbar < -xbar), P(Xbar > -xbar) given xbar, n, s, and X ~ Normal or n > 30
    29. P(Xbar farther than t estimated SEs from μ) given t, n, s and X ~ Normal or n > 30
    30. P(Xbar within t estimated SEs of μ) given t, n, s and X ~ Normal or n > 30
  Standard errors
    31. Standard error of xbar, given σ and n
    32. Estimated standard error of xbar, given s and n
    33. Standard error of p, given π and n
    34. Estimated standard error of p, given p and n
  Confidence intervals
    35. 95% confidence interval for μ given xbar, n, and s
    36. 95% confidence interval for π given p and n

How to perform hypothesis tests (given a description of the problem):
  1. Decide which test to use (if any)
  2. Know how to check whether the conditions of the test are satisfied
  3. Decide on Ho and Ha
  4. Choose between two given values of α
  5. Compute the test statistic
  6. Compute and interpret the p value of the test statistic (one tail, two tail)
  7. Reject Ho or fail to reject Ho based on α and the p value
  8. State the conclusion in terms of the problem

Facts:
  1. Conditions for the sample mean to be normally distributed
  2. Conditions for the sample proportion to be normally distributed
  3. When to construct a confidence interval for π using the z table
  4. When to construct a confidence interval for μ using the z table
  5. When to construct a confidence interval for μ using the t table
  6. When to use each test
  7. When not to use z or t tests

How to identify:
  1. A Type I or Type II error in a given problem
  2. Reasons why Xbar might not have a normal distribution in a given problem
  3. Reasons why p might not have a normal distribution in a given problem
  4. Reasons why z or t tests should not be used in a given problem

The definition of:
  sample, population, parameter, statistic, xbar, s, p, normal distribution, standard normal distribution, probability of an event, sampling distribution, standard error of Xbar, standard error of p, t distribution, degrees of freedom, independent observations, sampling variability, confidence interval, null hypothesis, alternate hypothesis, Type I error, Type II error, one tail, two tail, p value
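To check Z-table answers for the problem types in Week 5, section D, the lookups can be reproduced numerically. The sketch below is a minimal study aid, assuming Python 3.8+, where `statistics.NormalDist` provides P(Z < z) (`cdf`) and the reverse lookup (`inv_cdf`) in place of the printed table. The function names are hypothetical helpers, and printed-table answers may differ slightly because the table rounds z to two decimals.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal, N(0, 1^2); stands in for the printed Z table


def z_score(x, mu, sigma):
    """D 1): number of SDs x is from mu, z = (x - mu)/sigma."""
    return (x - mu) / sigma


def x_from_z(z, mu, sigma):
    """D 2): value x that is z SDs from mu, x = mu + z*sigma."""
    return mu + z * sigma


def prob_less(x, mu, sigma):
    """D 3 a): P(X < x) = P(Z < z)."""
    return Z.cdf(z_score(x, mu, sigma))


def prob_greater(x, mu, sigma):
    """D 3 b): P(X > x) = 1 - P(Z < z)."""
    return 1 - Z.cdf(z_score(x, mu, sigma))


def prob_outside(x1, x2, mu, sigma):
    """D 3 c): P(X < x1 or X > x2) = P(Z < z1) + 1 - P(Z < z2)."""
    return Z.cdf(z_score(x1, mu, sigma)) + 1 - Z.cdf(z_score(x2, mu, sigma))


def prob_between(x1, x2, mu, sigma):
    """D 3 d): P(x1 < X < x2) = P(Z < z2) - P(Z < z1)."""
    return Z.cdf(z_score(x2, mu, sigma)) - Z.cdf(z_score(x1, mu, sigma))


def x_star_below(alpha, mu, sigma):
    """D 4 a): x* with P(X < x*) = alpha, i.e. x* = mu + z*sigma."""
    return mu + Z.inv_cdf(alpha) * sigma


def x_star_above(alpha, mu, sigma):
    """D 4 b): x* with P(X > x*) = alpha; look up 1 - alpha."""
    return mu + Z.inv_cdf(1 - alpha) * sigma


def z_star_two_sided(alpha):
    """D 4 c/d): z* with P(Z more than z* from 0) = alpha.
    The lookup of alpha/2 gives -z*, so negate it."""
    return -Z.inv_cdf(alpha / 2)
```

For example, with a hypothetical X ~ N(100, 15²), `prob_less(130, 100, 15)` is P(Z < 2), about 0.9772, and `z_star_two_sided(0.05)` is about 1.96, the multiplier used in the 95% confidence intervals of Week 7.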
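The standard-error formulas of Week 6 and the z-based 95% intervals of Week 7 (sections B and C 1-2) can be sketched the same way. This is a minimal illustration, assuming Python 3.8+ `statistics.NormalDist` for z*α/2, σ known for the interval on μ, and the large-sample checks nπ ≥ 10 and n(1 - π) ≥ 10 (or np ≥ 10 and n(1 - p) ≥ 10 when π is estimated by p). All function names are hypothetical. The t-based interval for unknown σ is left out because the Python standard library has no t quantile function.

```python
from math import sqrt
from statistics import NormalDist


def se_mean(sigma, n):
    """Standard error of Xbar: SE(Xbar) = sigma/sqrt(n)."""
    return sigma / sqrt(n)


def se_prop(pi, n):
    """Standard error of p: SE(p) = sqrt[pi(1 - pi)/n]."""
    return sqrt(pi * (1 - pi) / n)


def check_large_sample(pi, n):
    """Condition for p to be approximately normal: n*pi >= 10 and n*(1 - pi) >= 10."""
    if n * pi < 10 or n * (1 - pi) < 10:
        raise ValueError("need n*pi >= 10 and n*(1 - pi) >= 10")


def prob_p_less(value, pi, n):
    """P(sample proportion < value) via Z = (p - pi)/SE(p)."""
    check_large_sample(pi, n)
    return NormalDist().cdf((value - pi) / se_prop(pi, n))


def z_star(confidence):
    """z*_{alpha/2} for confidence level 1 - alpha."""
    alpha = 1 - confidence
    return NormalDist().inv_cdf(1 - alpha / 2)


def ci_mean_known_sigma(xbar, sigma, n, confidence=0.95):
    """(xbar - z* SE(Xbar), xbar + z* SE(Xbar)); assumes sigma known,
    X normal or n > 30, and independent observations."""
    margin = z_star(confidence) * se_mean(sigma, n)
    return xbar - margin, xbar + margin


def ci_prop(p, n, confidence=0.95):
    """(p - z* SE, p + z* SE) with SE(p) estimated by sqrt[p(1 - p)/n]."""
    check_large_sample(p, n)  # uses p in place of pi, as in Week 7 B 2)
    margin = z_star(confidence) * se_prop(p, n)
    return p - margin, p + margin
```

With hypothetical numbers, `ci_mean_known_sigma(50, 10, 25)` gives roughly (46.08, 53.92): SE(Xbar) = 10/sqrt(25) = 2 and 1.96 × 2 ≈ 3.92.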
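The Week 8 test recipes (compute the statistic, get a one- or two-tail p value, compare it with α) can also be sketched in code. Assumption: because the Python standard library has no t distribution, the t CDF below integrates the t density numerically with Simpson's rule, a swapped-in stand-in for the web t table that is accurate enough for study checks but is not a library-grade implementation. The function names and the `tail` labels are hypothetical.

```python
import math
from statistics import NormalDist


def t_pdf(x, df):
    """Density of the t distribution with df degrees of freedom (lgamma avoids overflow)."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def t_cdf(x, df, steps=2000):
    """P(T < x): 0.5 plus/minus the area from 0 to |x| (Simpson's rule, even steps),
    using the symmetry of the t distribution about 0."""
    a = abs(x)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def p_value(stat, tail, cdf):
    """tail: 'two' (Ha: mu != mu0), 'upper' (Ha: mu > mu0), 'lower' (Ha: mu < mu0)."""
    if tail == "two":
        return 2 * cdf(-abs(stat))  # 2 P(Z < -|z|), same as the two cases in the notes
    if tail == "upper":
        return 1 - cdf(stat)  # P(Z > z)
    if tail == "lower":
        return cdf(stat)  # P(Z < z)
    raise ValueError("tail must be 'two', 'upper' or 'lower'")


def z_test(xbar, mu0, sigma, n, tail="two"):
    """Z test: sigma known; returns (z, p value)."""
    z = (xbar - mu0) / (sigma / math.sqrt(n))
    return z, p_value(z, tail, NormalDist().cdf)


def t_test(xbar, mu0, s, n, tail="two"):
    """t test: sigma estimated by s, df = n - 1; returns (t, p value)."""
    t = (xbar - mu0) / (s / math.sqrt(n))
    df = n - 1
    return t, p_value(t, tail, lambda v: t_cdf(v, df))
```

With hypothetical numbers xbar = 52, μo = 50, σ = 10, n = 25, `z_test(52, 50, 10, 25)` gives z = 1.0 and a two-tail p value of about 0.317, so Ho is not rejected at α = 0.05 (0.317 is NOT < 0.05). The decision step itself is just `p < alpha`.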