Least Squares Fitting of Polynomials and Exponentials,

advertisement

Least Squares Fitting of Polynomials and Exponentials,

With Programming Examples

Timothy J. Rolfe 1

The University of Chicago

Chicago, Illinois

Mathematics and Computer Education, Vol. 16 (Spr'82), pp. 122-132.

I. INTRODUCTION

In chemistry, as indeed in all of the sciences, one may have a collection of data points to

which he wishes to fit some curve. There are several situations in which this arises. For instance:

a. We may wish to investigate the appropriate functionality for a set of data. Thus in

chemical kinetics we may wish to determine the order of a reaction. Is it first order with

respect to some reactant (dc/dt = -kc), or second order (dc/dt = -kc2), or perhaps some

non-integral order (e. g., dc/dt = -k√c).

b. Given the (theoretical) functionality of the data, we wish to determine the coefficients for

that function. Again in kinetics an example would be the determination of the rate

coefficients (the "k"s above).

c. We wish to determine the best equation for interpolation/extrapolation, given a set of data

for which there need not be any expected functionality on theoretical grounds. For

instance, we may wish to calibrate a thermometer (e.g., thermocouple or thermistor)

against standard points such as phase transitions, or against another thermometer (perhaps

a gas thermometer).

II. DERIVATION OF THE "LEAST SQUARES" FIT

A descriptive model for curve fitting is found in the simple fitting of data to a straight

line: we plot the points on a graph, then drop a (presumably transparent) straight edge on the

graph and move it around until a "best" straight line is seen — the line may not pass through any

of the points, yet it is "close" to almost all of them. A person may be tempted to describe this

process as finding the line that minimizes the sum of deviations of the data from the fitted line.

While such a description is attractive, when phrased mathematically it is found to have a serious

difficulty; namely that any straight line will give a "perfect" fit by this criterion subject to one

condition: f(xi) = yi

If we back up, however, to our initial descriptive model, we see that what we are in fact

attempting to achieve is a minimum in the sum of absolute deviations. But when this is a

minimum, unless the distribution of deviations is perverse, so also will the sum of deviations

squared be near minimal, this latter criterion is easier to handle mathematically.

1

Timothy J. Rolfe is a graduate student in theoretical physical chemistry at the University of Chicago. He

enjoys gymnastics and recreational projects on the computer.

Printed 2016-Feb-06 at 01:20

Page 1

T. Rolfe, Least Squares Fitting . . .

Page 2

Thus, the criterion for the "best fit" of a function to the tabular data will be that for some

f(x) there is a minimum in the function

Χ2 = ∑[f(xi) – yi]2

For a given set of data, we will consider this Χ2 to be a function not of x or y (since for

these data x and y are fixed), but rather of the coefficients of f(x), which are what we in fact vary

to find the best fit. Thus we have Χ2({a}) for a set of coefficients ak in f(x; {a}).

From calculus, we have the necessary condition for an extremum of a function with

respect to a variable: that the derivative with respect to that variable disappear (provided that the

function and its derivative are continuous). Since in general we can choose such inappropriate

aks that Χ2 goes to infinity, the extremum that we find will be a minimum (note that Χ2 > 0). In

short, ∂ Χ2 / ∂ak = 0 finds the best value for ak. From this we proceed thus:

X2

a k

[ f ( x i ) y i ]2

=

a k

[ f ( xi )] 2 2

[ f ( xi ) y i ]

=

a k

a k

[ f ( xi )] yi

[ f ( xi )]

= f ( xi )

a k

a k

Rearranging, we obtain

0

=

[ f ( xi )] f ( xi )

[ f ( xi )]

(1)

a k

a k

This is the basic equation for least-squares fitting of any functional form to any data. As

examples we will apply it to two different functional forms: the generalized polynomial or power

series, and the exponential function.

y

i

III. THE GENERALIZED POLYNOMIAL

As the generalized polynomial we will consider all functions that we can write as:

f(x) = a1 xp1 + a2 xp2 + . . . + an xpn

(2)

where the pi as well as the ai are any real numbers. Then equation (1) can easily be applied, since

∂f(x)/ ∂ak = xpk. So we may write

y

xi k a1 xi 1 xi k a2 xi 2 xi k

p

i

p

a1 xi

p

( p1 pk )

p

a 2 xi

p

( p2 pk )

Similar equations can be written for all coefficients of f(x;{a}). But since the (x,y) pairs

Printed 2016-Feb-06 at 01:20

T. Rolfe, Least Squares Fitting . . .

Page 3

are given, the sums yi xi k and xi 1 k can be computed from these data, and we end up

with a system of n linear equations in n unknowns (the {a}).

(p p )

p

y

i

xi

pk

a x

k

( p1 pk )

i

a 2 xi

( p2 p k )

1 < k < n

(3)

k

The system of equations in (3) is particularly useful if we have access to a computer since

various well documented methods exist for solving systems of linear equations, some of which

can be found preprogrammed in software libraries. (See Appendix I for such a FORTRAN

program.)

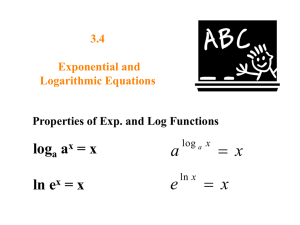

IV. THE EXPONENTIAL FUNCTION

We will consider the exponential function without offset: i.e.,

f(x)= aebx

(4)

(See Appendix II for the method of Guggenheim to reduce evenly spaced points of an

exponential with offset to an exponential without offset.) Applying (I) to (4) we get the two

equations:

∂ Χ2 / ∂a = 0:

y

∂ Χ2 / ∂b = 0:

x y

i

i

ebxi a e 2bxi

ebxi a xi e 2bxi

i

These maybe rearranged to give two equations for a:

a1

ye

e

a2

x y e

x e

bxi

i

2 bxi

i

bxi

i

2 bxi

i

Unfortunately, there is no analytic expression for b, even though we have two analytic

expressions for a. If, however, we have an initial estimate for b, we may search through various

values of b until a1 goes to a2. Therefore, let us consider the function.

e(b) = a1(b) – a2(b)

(5)

Finding the appropriate b then becomes the problem of finding the zero of a function. Again, this

is a well-characterized problem in numerical analysis, and methods for solving it may be found

preprogrammed in software libraries (see Appendix I for such a FORTRAN program).

The advantage of zeroing (5) to find the appropriate exponential function rather than

doing a linear fit of ln(y) vs. x is that no individual observation errors contribute

Printed 2016-Feb-06 at 01:20

T. Rolfe, Least Squares Fitting . . .

Page 4

disproportionately, while the operation of taking a logarithm magnifies errors at small values of

y. An example of this excessive weighting of small ys is shown in the figure.

The experimental variable, the volume of gas evolved, is fit to a decaying exponential.

The offset is removed by the method of Guggenheim (cf. Appendix II), and then the data are fit,

first by linearization (ln(y) vs. x) and then by zeroing (5). As a final check, a "brute force" fit was

performed, explicitly varying a, b, and the offset to find the minimum in Χ2. The following table

shows the results:

Where V(t) = v0 + a [ 1 – e-bt]

method

v0

linearized (29 pts)*

linearized (30 pts)*

zeroing (5) (36 pts)

brute force (36 pts)

0.1000

0.1000

0.1000

0.2903 .

a

b

Χ2

75.38

72.97

77.44

77.54

0.07384

0.06701

0.07693

0.07593

158.6

1031.

4.235

3.309

*Note: After 31 observations the change in volume is slightly negative; hence data points

from here out cannot be used in the linearized fit.

While the reader may find it an interesting exercise to derive the appropriate equations

Printed 2016-Feb-06 at 01:20

T. Rolfe, Least Squares Fitting . . .

Page 5

for the exponential function with offset, he is warned that the equation comparable to (5) above

does not turn out to be well-behaved with respect to finding its zero since it passes through a

positive and negative infinity near its zero, and also is singular at the origin — with

lim[b=0] e(b) = 0.

V. CONCLUSION

The above represents a brief introduction to what is a broad field on which entire books

are written. It is hoped that it will aid the reader in understanding the reasoning behind leastsquares data fitting and provide useful equations for two functional forms of interest in

Chemistry: y = ax+n, and y = ae±bx. Those wishing to read further may well start with a book such

as Bevington's or Meyer's.

REFERENCES

1. Bevington, Philip R. Data Reduction and Error Analysis for the Physical Sciences. New

York: McGraw-Hill Book Company, 1969.

2. Meyer, Stuart L. Data Analysis for Scientists and Engineers. New York: John Wiley & Sons,

Inc., 1975.

APPENDIX I: PROGRAM EXAMPLES

[Omitted]

APPENDIX II: THE METHOD OF GUGGENHEIM2

Consider the exponential function with offset:

g(x) = a ebx + c

It is still possible to obtain an exponential function without offset provided that the

observations are evenly spaced; i.e., xj = x0 + jh:

f(jh) = g(xj) – g(xj–l)

= g(x0 + jh) – g(x0 + jh – h)

= a exp[b(x0 + jh)] + c – a exp[b(x0 + jh – h)] – c

= a exp[bx0](exp[bjh] – exp[bjh]exp[–bh])

= a exp[bx0](1 – exp[–bh])exp[bjh]

= k exp[b(jh)]

where k = a exp[bx0](1 – exp[–bh]).

F. Dahlquist, University of Oregon Chem. 447 (Physical Chemistry Laboratory) Lectures,

Winter. 1974.

2

Printed 2016-Feb-06 at 01:20

T. Rolfe, Least Squares Fitting . . .

Page 6

Thus the exponential factor b may be found directly in the fit. If the pre-exponential

factor a is desired, it may be obtained as follows:

a

k exp[ bx0 ]

( 1 exp[ bh ])

Note that the offset c is not found, since it is in fact subtracted out in arriving at the

function f.

The above method is of particular value where one's data show a drift in offset, perhaps

due to instrumental limitations, or where the process being observed is so slow that it is not

possible or practical to continue observations until an infinity point (offset) is found. Even where

such an offset is observed, the subtracting of the observed offset from the experimental data has

the difficulty of extending the possible error of that observation through all of the experimental

data.

Printed 2016-Feb-06 at 01:20