national research institutions ranks observations

advertisement

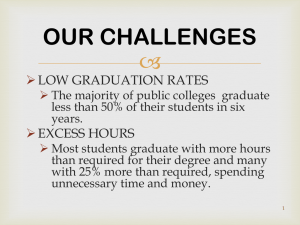

NATIONAL PUBLIC RESEARCH INSTITUTIONAL QUALITY RANKS DATA AND OBSERVATIONS
FALL 2009
BY DR. HARRY C. STILLE
HIGHER EDUCATION RESEARCH/POLICY CENTER, INC.
P.O. BOX 203, DUE WEST, S.C. 29639
(864-379-3080)
<STILLEHR@LIVE.COM>

NATIONAL RESEARCH INSTITUTIONS RANKS OBSERVATIONS

Introduction

As the Higher Education Research/Policy Center (HER/PC) looks at the undergraduate academic admissions quality of the 518 public senior (4-year) institutions in the United States by state, we attempted to isolate the major national research institutions in order to compare their undergraduate academic admissions quality with one another, and to assess their undergraduates' academic potential to later support the research process as graduate students at those institutions. It is our opinion, from data we have examined for twenty years, that no public senior institution (research, comprehensive, or teaching) is any better than the full-time freshmen it enrolls over a period of years. These freshman enrollment data tend to be stable from year to year; dramatic changes over short periods of time are rare in these public institutions. It is also our opinion that, for these selected national research institutions, the academic quality of enrolled undergraduates affects the quality of research that may be conducted on campus. It is these academically qualified undergraduates who continue into graduate school and help graduate faculty with their research. Lower academic quality among a campus's undergraduates (reflected in low four- or six-year graduation rates) limits the number of students able to continue into graduate studies and assist in campus research. The only other option for an institution with weaker academically prepared undergraduates is to import higher-quality graduate students from other campuses for research purposes.
The majority of graduate students should come from undergraduates who earned their degrees on that campus.

Process

The HER/PC has selected 109 national research institutions to rank: at least one from each state, plus others recognized in the Center for Measuring University Research Performance's 2009 Annual Report as among the Top 200 Institutions for Total Research Expenditures (2007) (pp. 174-178). The HER/PC's process for ranking these national research institutions is similar to the other admissions-criteria rankings we do within states (public and private institutions) and to our rankings of average data for public institutions by state. Our method ranks institutions' freshman classes by the average 25th percentile SAT/ACT scores of enrolled full-time freshmen, and combines those test-score ranks with ranks for the average percent of enrolled full-time freshmen who come from the top 10% of their high school class (based on grade point average, GPA). These two independent ranks are averaged, and an overall Academic Quality Rank for the institution is established from the result. The higher the test scores and the higher the percent of freshmen from the top 10% of their high school class, the better the academic quality of the institution and, over a period of years, the better the quality of all undergraduate students on campus. Other HER/PC research indicates that these two criteria for senior higher education institutions have a Pearson correlation of .908 with the U.S. News Best Colleges rankings (fifteen criteria) of top-tier national institutions. We believe the HER/PC rankings to be a simple and reliable ranking method, and rankings are valuable for showing differences in student academic admissions quality between institutions. We use SAT scores for continuity; for states that are primarily ACT-testing states, we converted 25th percentile ACT scores to the SAT scale using the conversions recommended by American College Testing.
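The rank-averaging method described in the Process section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not HER/PC's actual tooling: the institution names and figures are hypothetical, ACT scores are assumed to be already converted to the SAT scale, and tied values within one criterion are assumed to share the mean of their positions (the report does not say how ties are handled).

```python
from dataclasses import dataclass


@dataclass
class Institution:
    name: str
    sat_25th: int      # 25th percentile SAT of enrolled full-time freshmen (ACT already converted)
    pct_top10: float   # percent of enrolled full-time freshmen from the top 10% of their HS class


def rank_desc(values):
    """Rank so the largest value gets 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend `end` across a run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1  # 1-based mean position of the tied run
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


def academic_quality_ranking(insts):
    """Average the test-score rank and the top-10% rank; a lower average is a better rank."""
    score_ranks = rank_desc([i.sat_25th for i in insts])
    top10_ranks = rank_desc([i.pct_top10 for i in insts])
    averaged = [(s + t) / 2 for s, t in zip(score_ranks, top10_ranks)]
    return sorted(zip([i.name for i in insts], averaged), key=lambda pair: pair[1])


if __name__ == "__main__":
    sample = [  # hypothetical figures, for illustration only
        Institution("Univ A", 1300, 85.0),
        Institution("Univ B", 1150, 72.0),
        Institution("Univ C", 1220, 70.0),
        Institution("Univ D", 1000, 40.0),
        Institution("Univ E", 1180, 30.0),
    ]
    for place, (name, avg_rank) in enumerate(academic_quality_ranking(sample), start=1):
        print(place, name, avg_rank)
```

With these made-up figures, Univ B ranks fourth on test scores but second on top-10% share, so its averaged rank (3.0) places it between Univ C (2.5) and Univ E (4.0). Because Python's `sorted` is stable, any ties in the averaged rank keep their input order; a real ranking would need an explicit tie-break rule.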
For our purpose, converting to the SAT scale simplified the ranking process, and the ranks would be no different if we had converted all 25th percentile scores to ACT.

Findings

When HER/PC looks at the rankings of these 109 national four-year research institutions, the potential for substantive research is evident in them. Institutions with higher input data (test scores and class ranks) also have higher output data: sophomore retention and four- and six-year graduation rates. The reverse holds for the non-graduation rate percent, the number of non-graduates per institution, and the annual cost of these non-graduates to the state. Looking at the total rankings, the data show clear implications: high input data leads to high output data when student academic quality is part of the admissions process. As the input scores and percentages decline, so do the output percentages. For example, comparing the top fifteen institutions with the bottom fifteen on freshman admissions quality (input to output) reveals a great disparity in the data. It is clear that the top institutions, with better-quality freshmen enrolled, are the ones more likely to produce substantive research findings and results. There are rare exceptions, but these may be explained by very high enrollment in engineering and technology majors compared with institutions that have a broadly oriented curriculum. The research expenditures column for these institutions also supports this thesis: higher-quality undergraduate students seem to be associated with higher research expenditures. We realize that quality research faculty at any institution can produce substantive findings, but this is generally not the case when there are academically weaker undergraduates on campus.
Higher-quality research faculty are drawn to institutions that admit higher-quality undergraduates and where research funds are expended. Quality in the research area tends to promote quality everywhere on campus. In general, quality research on a campus is supported by quality undergraduates advancing into graduate studies and assisting in the process. The quality of students admitted and enrolled equals the quality of degree recipients. HER/PC also sees quality similarities among some of these research institutions. The rankings are based on the quality of the freshman input data for the academic quality rank, but these institutional profiles also show higher-quality output data for sophomore retention and four- and six-year graduation rates. Two other data sets shown are the percent of students at these institutions who are in-state and the number of freshmen who enroll from the bottom 50% of their high school class. Looking at the output data for these institutions, the relationship is clear: the higher the sophomore retention and four- and six-year graduation rates, and the fewer students enrolled from the bottom 50% of their high school class, the higher the academic admissions profile of the institution. The institutions ranking higher in this data table have a much better chance of conducting better overall research than those ranking lower, and for the most part their research expenditures bear this out. It is also HER/PC's opinion that, of the two academic criteria we use (25th percentile SAT/ACT scores and the percent of students from the top 10% of their high school class), test scores are the more valid.
We understand the debate over test scores, but on the day the test is administered, all students in the United States sit down and take the same test, with the same answer choices in front of them on the verbal and math portions. In our logic, the top 10% class rank portion of our rankings is of slightly less value because of grade inflation in high school grading, differences in the size of high school student populations, and the fact that some students drop out and are not part of the data mix. These factors may also inflate the size of the top 10% population and who may be counted in it. One other study of SAT results that we considered very reliable was by Dr. Peter Salins, Provost of the State University of New York (SUNY) System from 1997 to 2006. Dr. Salins's research indicated that higher SAT scores for enrolled freshmen improved retention and graduation rates at those institutions. There were over 200,000 students at the sixteen institutions compared. Nine campuses chose to increase selectivity; these nine had higher average test scores for enrolled freshmen and, as a result, also increased their graduation rate percentages. The seven campuses that chose not to increase selectivity remained about the same or deteriorated in their graduation rates.

Sophomore retention is the first output measure to indicate the overall quality of the freshmen enrolled the prior year. In our data set, 84% is the median sophomore retention rate for these 109 institutions. The median should be 90% or better for research institutions, and the current median should be the lowest rate any of them has. Four institutions have 97% sophomore retention: Ca. Berkeley, U. of Virginia, UCLA, and UNC-Chapel Hill. Another 29 institutions have sophomore retention of 90% or better.
Twenty-six of the top twenty-eight are in this group, and these institutions should be commended for the quality of their admissions. Twenty-nine of the remaining eighty-one are in the 80% range. No research institution should be below the median sophomore retention of 84%, yet 54 of them in our data set are. Other HER/PC research shows a high correlation between sophomore retention percentages and six-year graduation rate percentages when comparing similar average state data for all 518 public senior institutions by state.

For these 109 national research institutions, the median four-year graduation rate is 35%, with a range from 84% down to 7%. This median is well below one half of the median sophomore retention rate. Not good. Only forty-five institutions have a four-year graduation rate above 42%, one half of the 84% median sophomore retention. One of the major problems in our senior public institutions is the lack of concern for students graduating in four years. Institutions do not seem concerned about this and actually encourage students to stay on campus for more than four years to attain a degree. Campus life is a great social atmosphere, and it keeps money flowing into the institution and supports the need for more faculty. We doubt most parents assume their child will spend more than four years attaining a degree. Of the top 29 institutions in this study, only five had a four-year graduation rate below 50%: Ohio State at 46%, Colorado School of Mines at 41%, Georgia Tech at 31%, and Missouri U. of Science and Technology and Cal. State-SLO, both at 25%. Three of these have a high share of engineering and technology majors. Only six of the bottom 80 institutions have four-year rates over 50%. In all, 76 of the 109 institutions have four-year graduation rates below 50%. This is a travesty for institutions of this type.
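The half-of-median comparison above is simple arithmetic; a sketch with Python's standard `statistics.median` follows. The function names and the (retention, four-year rate) pairs are hypothetical placeholders, not the report's data.

```python
from statistics import median


def half_median_threshold(retention_rates):
    """Half of the median sophomore retention rate, in percentage points."""
    return median(retention_rates) / 2


def count_above(four_year_rates, threshold):
    """Number of institutions whose four-year graduation rate exceeds the threshold."""
    return sum(1 for rate in four_year_rates if rate > threshold)


# Hypothetical (sophomore retention %, four-year graduation rate %) pairs.
sample = [(97, 84), (92, 60), (88, 45), (84, 35), (80, 30), (75, 20), (70, 7)]
retention = [r for r, _ in sample]
four_year = [g for _, g in sample]

threshold = half_median_threshold(retention)       # median retention is 84, so 42.0
print(threshold, count_above(four_year, threshold))  # 42.0 3
```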
Thirty-seven institutions have four-year graduation rates below 30%. Now that the national standard for senior institutions is the six-year graduation rate, it is interesting to look at the six-year rates for these 109 national research institutions. The median six-year graduation rate is 66%, ranging from 93% at U. of Virginia to 25% at U. of Alaska-Anchorage. Of the top 28 institutions, all but two (Colorado School of Mines and Missouri U. of Science and Technology) have six-year graduation rates above 70%; both of these institutions are high in engineering majors. Thirty-nine of these institutions have six-year graduation rates below 60%, with twenty-two below 50% and nine below 40%. Of the institutions in this study ranked 53rd or below (56), all but six had from 11% to 43% of enrolled freshmen coming from the bottom 50% of their high school graduating class. The median percent of students from the bottom 50% of their high school class was 9%. None of these students need to be on a national public research institution campus for any reason.

Twelve institutions studied have no students enrolled from the bottom half of their high school class; fourteen have 1%; four have 2%; four have 3%; four have 4%; three have 5%; two have 6%; five have 7%; one has 8%; and four have 9%. Given what these institutions represent, none of these bottom-50% students should be on these campuses under any circumstances, yet fifty institutions had 11% or more. For the enrollment prospects of these 109 national research institutions, the HER/PC's premise is supported by the National Center for Public Policy in Higher Education (NCPPHE) and the Southern Regional Education Board (SREB), whose report “Beyond the Rhetoric – Improving College Readiness Through Coherent State Policy” stated that “Increasingly, it appears that states or post-secondary institutions may be enrolling students under false pretences”.
What a dramatic statement by two nationally recognized agencies about the crisis in admissions in our colleges and universities. These institutions seemingly enroll these students only for the funds they bring and do not care about results, because they simply roll over a new group the next year. In analyzing the four- and six-year graduation rates above, we need to emphasize that they describe students who enrolled on these campuses four or six years earlier. We also have to consider the large number of students on campus who will never graduate. From our prior research, there is very little improvement over time in output data without an increase in test scores and top 10% class rank data. When quality initiatives begin with the input data, it takes at least five to seven years to see results in the output data. The HER/PC has a twelve-year track of admissions input, retention, and four- and six-year graduation rates for all the institutions in this study, based on the data they sent to our selected sources. In the past we have seen only one or two of the 518 institutions with much change in input or output data; the latter does not come without the former. The highest figure we have seen for the ultimate national graduation rate is 62%, reported by the Higher Education Research Institute at UCLA ten years after students began college. In HER/PC's national study of 2008 data, our national six-year graduation rate was 59%, including intrastate transfers. These non-graduation numbers have been consistent for years. This gives HER/PC what we call the non-graduation rate for each institution; we then apply the State Higher Education Executive Officers (SHEEO) SHEF Project figure for average state allocation to post-secondary institutions for 2009. From this we calculate the non-graduation cost per institution or per state.
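The non-graduation cost calculation can be sketched as follows. This is an interpretation, not HER/PC's published formula: it assumes the non-graduation rate is 100% minus the six-year graduation rate, that the non-graduate count is that rate applied to total undergraduate enrollment, and that the SHEEO SHEF figure is a per-student state allocation. The optional `research_premium` multiplier (for example 4/3) reflects the report's estimate that research-institution costs may be at least one third higher than the statewide average shows. The class and function names and all inputs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CampusCosts:
    name: str
    undergrads: int            # total undergraduate enrollment (assumed basis for the count)
    six_year_grad_rate: float  # percent
    allocation: float          # assumed state allocation per student, in dollars


def non_graduation_rate(six_year_grad_rate):
    """Assumed definition: percent of students not graduating within six years."""
    return 100.0 - six_year_grad_rate


def non_graduates(campus):
    """Estimated number of non-graduates: non-graduation rate applied to enrollment."""
    return round(campus.undergrads * non_graduation_rate(campus.six_year_grad_rate) / 100)


def non_graduation_cost(campus, research_premium=1.0):
    """State cost of non-graduates; research_premium > 1 scales up the statewide average."""
    return non_graduates(campus) * campus.allocation * research_premium


def total_non_graduation_cost(campuses, research_premium=1.0):
    """Sum across institutions, as for a state or a group of institutions."""
    return sum(non_graduation_cost(c, research_premium) for c in campuses)


if __name__ == "__main__":
    # Hypothetical institution, for illustration only.
    x = CampusCosts("Univ X", undergrads=20_000, six_year_grad_rate=60.0, allocation=7_000.0)
    print(non_graduates(x))                 # 8000
    print(non_graduation_cost(x))           # 56000000.0
    print(non_graduation_cost(x, 4 / 3))    # roughly 74.7 million
```

Summing `non_graduation_cost` over all institutions in a table is how a group total, such as the report's figure for the 109 institutions, would be aggregated under these assumptions.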
Note that the SHEEO-SHEF Project state allocation is a statewide average across all two- and four-year institutions. The state allocation cost for these 109 public research institutions would therefore be higher than we show, because research institutions are far more costly to operate than other types of public institutions within a state; these costs may be at least one third higher than we show.

Another issue affecting graduation rate percentages is grade inflation on campus. Our study in South Carolina, using five data years from 1989-90 through 2003-04, shows increases ranging from about 11% to 32%, depending on institution. This is a shift to A and B grades from C and D grades: as one set of grades rises, the other falls in proportion. Also, Stuart Rojstaczer, in a report at gradeinflation.com dated March 2009, indicates a 5.6% rise in GPAs (2.85 to 3.01) from 1991 to 2006 at the 43 public senior institutions studied. This is an excellent source for individuals who want to access the data.

A counterargument against the importance of academic preparedness is that students face several issues beyond academics that cause dropouts and lower graduation rates. But every freshman class at any national research institution is a random sample of students except for its academic profile. It is clear that better academically prepared students (by test scores and class rank) return and graduate at a higher rate than students with lower test scores and class rank, social issues, economics, jobs, and other factors notwithstanding, at these higher-profile institutions. When looking at the number of non-graduates at each institution, it is difficult to compare institutions directly, because these numbers depend on the total undergraduate enrollment on campus.
What is important is the non-graduation rate percentages shown above and their relationship to the number of students enrolled from the bottom 50% of their high school class. All but one of the 17 bottom-ranked institutions, on page 4 of the Table, have non-graduation rates of 40% or more and more than 13% of their students from the bottom 50% of their high school class; on average, about 26% of their students come from the bottom half. We also note that some institutions have 10,000 or more non-graduates on campus. All have high enrollments, but also high non-graduation rates: U. of Central Florida at 32% (10,510 non-graduates); U. of South Florida at 47% (10,672); U. of Arizona at 37% (10,028); Arizona State U. at 39% (17,783); and U. of Wisconsin-Milwaukee at 50% (10,503). The state allocation costs for these non-graduates are high as well: $71.6 million at UCF, $70.1 million at USF, $73.2 million at U. of Arizona, $129.8 million at ASU, and $68.6 million at UW-M. Remember, these figures are essentially constant from year to year, given the current admissions policies of these institutions.

Consider these data for just these five institutions alongside the NAEP results we have seen for the 4th, 8th, and 12th grades, which show that students' critical knowledge declines from 4th to 8th grade and again from 8th to 12th grade. How, then, can we expect the large number of underqualified students entering college to be prepared for undergraduate or graduate studies, especially if they come from the bottom half of their high school class? The total non-graduation cost for these 109 national research institutions is $3.7 billion. The undergraduate state costs can be calculated from the data presented in the attached table.

Three other institutions we single out for comment are Indiana U./Purdue U.-Indianapolis with 9,575 non-graduates (61%) at a $45.5 million cost; Virginia Commonwealth U. with 8,440 non-graduates (44%) at $48.1 million; and U. of New Mexico with 8,346 non-graduates (52%) at $69.8 million. As HER/PC looks at all these research institutions, we see general cutoff points that divide them into groups of greater and lesser value in admissions, and in research funding as well. The first group is the top 29 institutions; the second is the next 23 institutions, through #52; the third is 13 institutions, through #65; the fourth is 16 institutions, through #80; and the final group is 28 institutions, to the end of the list. All institutions should strive for the admissions data of the top 29 institutions. All other institutions should at least strive to have fewer than 5% of students coming from the bottom 50% of their high school class, and eventually none. We understand there may be modest discrepancies in the data groups. The important point HER/PC wants to make is that no institution should have students on campus who do not have a reasonable chance to graduate with a degree in four, or at most six, years. It should not be about the funding students bring by their presence, but about the quality of students in the process. This way everyone wins in the end.

Recommendations

If cost controls are to be implemented at senior research institutions, the following should be considered. No institution listed in this Table should enroll any students from the bottom 50% of their high school class. This may even apply to states like Iowa, Minnesota, Washington, or Wisconsin, which have the highest SAT/ACT average ranks. For states with lower SAT/ACT results (the bottom two-thirds of states), not enrolling students from the bottom 50% of their high school class should be an absolute.
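The numeric recommendations in this section (an individual admissions floor of SAT 950 or ACT 20 with a minimum GPA, and a cut to the next freshman class equal to half the percentage-point shortfall from a retention standard) can be sketched in Python. The function names are hypothetical; the 2.5 GPA default is the lower end of the proposed 2.5 to 2.75 range; treating either test as sufficient is an assumption; and dropping the lowest SAT scorers first follows the report's note that the bottom test scorers are the ones not enrolled.

```python
def class_reduction_pct(standard, actual):
    """Percent cut to the next freshman class: half the shortfall below the standard, else 0."""
    return max(0.0, (standard - actual) / 2)


def next_class_size(planned_size, standard, actual):
    """Apply the cut to a planned class size, truncating to whole students."""
    return int(planned_size * (100 - class_reduction_pct(standard, actual)) / 100)


def meets_admissions_floor(gpa, sat=None, act=None, min_gpa=2.5):
    """Proposed individual floor: SAT >= 950 or ACT >= 20, and HS GPA >= min_gpa."""
    test_ok = (sat is not None and sat >= 950) or (act is not None and act >= 20)
    return test_ok and gpa >= min_gpa


def drop_lowest_scorers(applicants, keep):
    """Keep the `keep` highest SAT scorers, so the bottom scorers are the ones not enrolled."""
    return sorted(applicants, key=lambda a: a["sat"], reverse=True)[:keep]


if __name__ == "__main__":
    # The report's own example: standard of 88%, recorded sophomore retention of 70%.
    print(class_reduction_pct(88, 70))           # 9.0
    # A planned class of 4,000 is hypothetical.
    print(next_class_size(4000, 88, 70))         # 3640
    print(meets_admissions_floor(2.6, sat=960))  # True
```

The `max(0.0, ...)` guard encodes the report's statement that the cuts continue only until the institution meets the standard; an institution at or above the standard sees no reduction.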
Set a minimum admissions score for any research institution of 950 on the SAT or 20 on the ACT, with a freshman-class 25th percentile score of at least 1060 to 1100 and a high school GPA of 2.5 to 2.75 or better. Set tuition for undergraduates and graduate students so that no undergraduate fees support any graduate teaching faculty or their research; graduate students should pay tuition covering all graduate school teaching or research costs. Students should pay only for the services they elect to use on campus, with no universal fees for student services, athletics, wellness centers, etc. Many of these services could be privatized, and students could select those they desire. Set minimum retention or graduation rates. When an institution falls below the selected mark, its next class is reduced by one half of the percentage-point difference between the rate it records and the set standard, and this continues until it meets the standard. For example, if the standard for sophomore retention is 88% and an institution's sophomore retention is 70% that year, the next freshman class must be reduced by 9% absolute. The students not enrolled would be the lowest test scorers. Average admissions scores will increase slowly, and the quality of all students will improve (as in the SUNY research noted earlier). No research institution should enroll students needing remedial/developmental studies under any circumstances unless these students have successfully completed those R/D studies at a two-year institution; these types of programs should never occur on a research institution campus. Also, separate completion rate data should be maintained for these students as well as for transfer students.

Conclusion

HER/PC clearly understands the role all public higher education institutions play in our state economic climate, especially the national research institutions in our study.
We also understand how parochial concerns make it difficult to get state legislators to look at the broad picture of their state and the overall value institutions provide for the students, parents, and taxpayers who pay these costs. Individuals reviewing our data may draw conclusions other than those we have drawn for these 109 national research institutions. We are open to any comments or questions on our data and conclusions. Research is an open-ended process.

Dr. Harry C. Stille
Researcher
February 8, 2011

Bibliography

Center for Measuring University Research Performance. 2009 Annual Report (pp. 174-177). <Center for Measuring University Research Performance.com>, 2009.
Higher Education Research Institute, UCLA. “Graduation Data after Ten Years.” Alexander Astin. 3005 Moore Hall/Box 951521, Los Angeles, Ca. 90095-1521.
Higher Education Research/Policy Center, Inc. “State Ranks for Public Senior Higher Education Institutions – Academic Admissions Criteria with Retention and Graduation Rates with Cost for Non-Graduates.” Fall 2008. P.O. Box 203, Due West, S.C. 29639. stillehr@live.com. July 30, 2010.
Higher Education Research/Policy Center, Inc. “Various State Input and Output Data on Admissions Criteria with Retention and Graduation Rates.” Fall 2009. P.O. Box 203, Due West, S.C. 29639. stillehr@live.com.
Minding the Campus, Reforming Our Universities. “Does SAT Predict College Success.” Dr. Peter Salins. October 15, 2008. <Minding the Campus.com /originals/2008/10>.
National Center for Public Policy in Higher Education/Southern Regional Education Board. “Beyond the Rhetoric – Improving College Readiness Through Coherent State Policy.” SREB, June 2010. 592 10th Street NW, Atlanta, Ga. 30318.
Rojstaczer, Stuart. <Gradeinflation.com>. “Grade Inflation at American Colleges and Universities – Recent GPA Trends Nationwide.” March 10, 2009.
State Higher Education Executive Officers. SHEEO-SHEF Project Report, 2010. 3035 Center Green Drive, Suite 100, Boulder, Co. 80301.
The College Board. “College Handbook,” 48th Edition (2011). 45 Columbus Avenue, New York, N.Y. 10023-6992.
The Princeton Review. “Complete Book of Colleges,” 2011 Edition. 111 Speen Street, Suite 550, Framingham, Ma. 01701.
U.S. News – Ultimate College Guide, 2011, 8th Edition. Sourcebooks, Inc., P.O. Box 4410, Naperville, Il. 60567-4410.