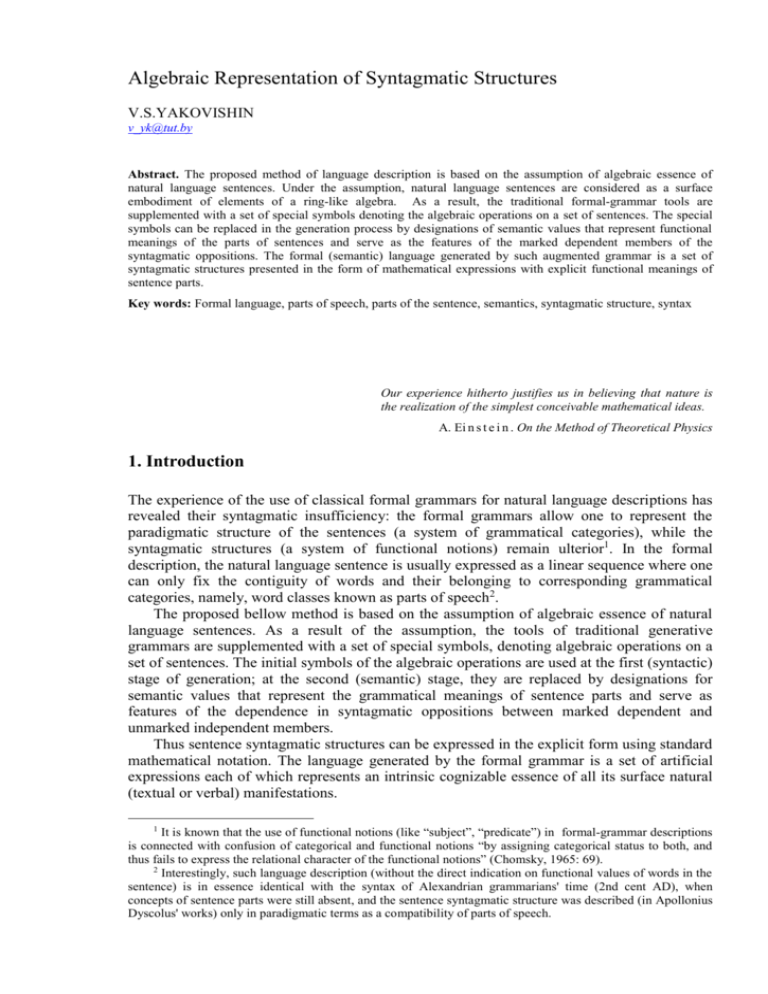

Algebraic Representation of Syntagmatic Structures


V.S.YAKOVISHIN

v_yk@tut.by

Abstract. The proposed method of language description is based on the assumption of algebraic essence of

natural language sentences. Under the assumption, natural language sentences are considered as a surface

embodiment of elements of a ring-like algebra. As a result, the traditional formal-grammar tools are

supplemented with a set of special symbols denoting the algebraic operations on a set of sentences. The special

symbols can be replaced in the generation process by designations of semantic values that represent functional

meanings of the parts of sentences and serve as the features of the marked dependent members of the

syntagmatic oppositions. The formal (semantic) language generated by such augmented grammar is a set of

syntagmatic structures presented in the form of mathematical expressions with explicit functional meanings of

sentence parts.

Key words: Formal language, parts of speech, parts of the sentence, semantics, syntagmatic structure, syntax

Our experience hitherto justifies us in believing that nature is

the realization of the simplest conceivable mathematical ideas.

A. Einstein. On the Method of Theoretical Physics

1. Introduction

The experience of the use of classical formal grammars for natural language descriptions has

revealed their syntagmatic insufficiency: the formal grammars allow one to represent the

paradigmatic structure of the sentences (a system of grammatical categories), while the

syntagmatic structures (a system of functional notions) remain hidden.¹ In the formal

description, the natural language sentence is usually expressed as a linear sequence where one

can only fix the contiguity of words and their belonging to corresponding grammatical

categories, namely, word classes known as parts of speech.²

The method proposed below is based on the assumption of algebraic essence of natural

language sentences. As a result of the assumption, the tools of traditional generative

grammars are supplemented with a set of special symbols, denoting algebraic operations on a

set of sentences. The initial symbols of the algebraic operations are used at the first (syntactic)

stage of generation; at the second (semantic) stage, they are replaced by designations for

semantic values that represent the grammatical meanings of sentence parts and serve as

features of the dependence in syntagmatic oppositions between marked dependent and

unmarked independent members.

Thus sentence syntagmatic structures can be expressed in the explicit form using standard

mathematical notation. The language generated by the formal grammar is a set of artificial

expressions each of which represents an intrinsic cognizable essence of all its surface natural

(textual or verbal) manifestations.

1

It is known that the use of functional notions (like “subject”, “predicate”) in formal-grammar descriptions

is connected with confusion of categorical and functional notions “by assigning categorical status to both, and

thus fails to express the relational character of the functional notions” (Chomsky, 1965: 69).

2

Interestingly, such language description (without the direct indication on functional values of words in the

sentence) is in essence identical with the syntax of Alexandrian grammarians' time (2nd cent AD), when

concepts of sentence parts were still absent, and the sentence syntagmatic structure was described (in Apollonius

Dyscolus' works) only in paradigmatic terms as a compatibility of parts of speech.

2. Formal Language of Sentences

The necessary extension of the formal-grammar tools can be obtained on the basis of the

following assumption - hypothesis of algebraic essence of natural language sentences

(Yakovishin, 1999:77).

Ring-like algebra. Every natural language sentence is a surface manifestation of a

certain element of the ring-like dibasic algebra with a set of words (free monoid defined

over the alphabet) and a set of sentences on which one unary operation and a pair of

binary operations – coordination (“addition”) and determination (“multiplication”) - are

defined. The operations satisfy the following conditions: each of them can be denoted by

different symbols that represent its semantic values; coordination is commutative and

associative; determination is non-commutative, non-associative, and one-sided

distributive over coordination.

All the properties of the binary operations manifest themselves in the presence or absence of

semantic identity in pairs of phrases such as:

books and journals = journals and books (commutativity of coordination);

books and journals as well as newspapers = books as well as journals and newspapers (associativity of coordination);

the author of the book ≠ the book of the author (non-commutativity of determination);

new books of the author ≠ books of the new author (non-associativity of determination);

new and old books = new books and old books (one-sided distributivity of determination over coordination).

Note that the distributivity of determination becomes apparent in case of coordinated

dependent members. (In the formal representation, this one-sided property is expressed as

left-hand distributivity.) As regards the distributivity over coordinated independent (head)

members (or right-hand distributivity), we suppose that it is a weak (“non-algebraic”)

property. It is known that the right distributivity cannot be fulfilled in the case of the “plural

predication”.³ One can suppose that the right distributivity is also not fulfilled in the case of

the attribute and all other “non-distributive” parts of sentence, e.g.:

new books and journals (new journals?) ≠ new books and new journals.
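The identities and non-identities listed above can be checked mechanically. The following is a minimal sketch under an assumed term encoding that is not part of the source: `coord` builds a coordination as an unordered, flattened set of members, `det` builds a determination as an ordered (head, dependent) pair, and `distribute_left` expands a determination whose dependent member is a coordination. All three names are hypothetical, and the use of a set also collapses repeated members, which the text does not claim.

```python
def coord(*members):
    """Coordination: flatten nested coordinations and ignore order."""
    flat = set()
    for m in members:
        if isinstance(m, frozenset):
            flat |= m          # associativity: nested groups merge
        else:
            flat.add(m)
    return frozenset(flat)     # commutativity: order is not stored


def det(head, dependent):
    """Determination: an ordered, non-flattened (head, dependent) pair."""
    return ("det", head, dependent)


def distribute_left(term):
    """Expand det(head, coord(a, b)) into coord(det(head, a), det(head, b))."""
    _, head, dependent = term
    if isinstance(dependent, frozenset):
        return coord(*(det(head, d) for d in dependent))
    return term


# "new and old books" versus "new books and old books"
lhs = distribute_left(det("books", coord("new", "old")))
rhs = coord(det("books", "new"), det("books", "old"))
print(lhs == rhs)
```

In this encoding, right-hand distributivity is deliberately left unimplemented, in line with the remark that it is a weak property.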

The mentioned operations give support for expression of all the grammatical (functional)

meanings of words in the sentence. The unary operation is used for expression of the common

(sentential) grammatical meanings such as modality, negation, question, exclamation, etc. The

binary operations are used for expression of the meanings of coordinate connection

(conjunctive, disjunctive, adversative, etc.) and the meanings of subordinate connection

(attributive, predicative, etc.). At the same time, the algebraic operations serve as markers to

separate adjacent words in the sentence. Thus the natural language can be considered as a

surface material embodiment of some formal language that represents a set of abstract

sentences closed under the given algebraic operations.

In order to obtain the necessary extension of the formal-grammar tools we need some set

of special symbols (we shall call it the auxiliary alphabet) used to represent given algebraic

operations. In the presence of the special symbols (that are not in the basic alphabet), the formal language is defined as a set of compound strings, sentences, derived over a set of words (vocabulary) by means of algebraic operations.

3

E.g., the predicate are students is distributive over coordination of subjects (A and B are students = A is a student and B is a student), whereas the predicate are shipmates is non-distributive (McKay, 2006).

Let $A$ and $\Omega$ be respectively the basic and auxiliary alphabets ($A \cap \Omega = \varnothing$); then the formal language $L$ is in the general case a set $L \subseteq L(A^* \cup \Omega)$ derived over a vocabulary $A^*$ with some collection of algebraic operations $\Omega$. Since the whole vocabulary can consist of single-letter words ($A^* \supseteq A$), and the collection of operations can be an empty set ($\Omega = \varnothing$), the various versions of formal languages are distinguished:

$L \subseteq A^* \cup L(A \cup \Omega) \cup L(A^* \cup \Omega);$

one of them is a traditional language of words $L(A) \subseteq A^*$, and the others are languages of sentences: $L(A \cup \Omega)$ is a language of syntactic structures, and $L(A^* \cup \Omega)$ is a language of semantic structures.

Thus, the units of the formal language can represent together a three-level hierarchy, with

symbols, elements of the basic and auxiliary alphabets, i.e., letters (graphemes), phonemes,

words, elements of the set $A^*$, i.e., strings over $A$, finite sequences formed from symbols by means of concatenation, and

sentences, elements of the set $L(A^* \cup \Omega)$, i.e., finite sequences formed from words by means of certain algebraic operations.

Interestingly enough, the formal language whose units represent together a three-level

hierarchy (all sentences consist of words and these of symbols) is obviously a typical

representation of various natural (“not made by hands”) languages.⁴

3. Syntax vs. Semantics

It seems that every real language can be characterized as some semantically rich algebra (semi-group, group, ring, etc.) loaded with sense elements representing the semantic force of

the given language. In the semantically rich algebra, each of the operations and each of the

operands can be denoted by sets of different symbols.

So we can assert that the grammar of any real language contains two parts, namely,

syntax and semantics: syntax is a set of rules that generate purely algebraic (extremely

abstract) structures, and semantics is a set of rules that realize the sense interpretation of the

obtained syntactic structures.

The purely abstract structures generated at the syntactic level are evaluated at the

semantic level following the replacement of variable operands by their values – numeric

values (in arithmetic expressions), propositional constants “true” or “false” (in logic

expressions). In case of natural language, all the symbols of syntactic structures can be

“evaluated” as various semantic distinctions (values) known as lexical and grammatical

meanings. The distinctions between operands (terms) can be denoted as lexical meanings by

usual (natural) word stems, while the semantic distinctions between operations are expressed

as grammatical meanings by special (artificial) affixes of generated word forms.

We can suppose that syntactic structures are generated by rules which are identical (or

similar) to those used for description of mathematical (logical) formulas and known as

recursive definitions of well-formed formulas (wffs). It is obvious that the recursive definitions like “if $A$ and $B$ are wffs, so are $\neg A$, $\neg B$, $(A \wedge B)$, $(A \vee B)$” are in essence the usual rewrite rules in which the left-hand part is a symbol (designation of a syntactic category) and each of the right-hand parts is an atomic formula: $A \to \neg A$, $B \to \neg B$, $A \to (A \wedge B)$, $B \to (A \vee B)$.

4

It looks as if the three-level hierarchy of language units is a characteristic feature of both usual natural (human) languages and some other natural semiotic systems. Such a three-level hierarchy can also be observed in molecular-biological encoding, likewise known as a cell language. The cell language also uses symbols (four different nucleotides), words (genes), and sentences (strings of genes). Thus, both human and cell languages can be represented by grammars with sets of rules governing the formation of sentences from words and the formation of words from letters (Ji, 1997).

The common property of the syntactic rules is their recursivity. The antecedent of every

rule can be used also on its right-hand side as the rightmost symbol (right recursivity) or

leftmost symbol (left recursivity). The antecedent can also appear twice on right-hand side

(bilateral recursivity). The recursion property of the rules allows syntactic structures to be

expanded.

Thus the grammar rules that generate syntactic structures may be generalized as

productions of the following forms:

$S \to \ast S \mid (X \circ S) \mid \dots \mid X,$

$X \to (X \circ S) \mid \dots,$

where $S$ (“sentence”) is the initial symbol; $X$ (“word”) is the start symbol for word derivation; $\ast$ is the symbol of any unary operation; $\circ$ is the symbol of any binary operation ($S, X \in A$; $\ast, \circ \in \Omega$); here and in the following, the “$\mid$” sign (with the mark of ellipsis) is used to denote multiple rules for the same antecedent.

The syntax of the formal language can be represented on the basis of properties of the given algebraic operations. So, the commutative and associative coordination ($\oplus$) is expressed in the syntactic rules as the atomic formula $X \oplus X$ in which the same symbol is used for both members in parentheses-free notation. The coordination in the rule with parentheses $S \to (X \oplus X)$ is necessary for expression of the left-distributive property of determination ($\otimes$) over a coordinated row in strings like $X \otimes (X \oplus X)$. The non-commutative and non-associative determination is expressed as an obligatory use of the different symbols and parentheses in the rules $S \to (X \otimes S)$, $X \to (X \otimes S)$. The parentheses must certainly be used to indicate the “evaluation” order of the non-associative operation. And so the atomic formula $(X \otimes S)$ is represented twice: in case $S \to (X \otimes S)$, the operation is “evaluated” from right to left in $(X \otimes (X \otimes S))$, and in case $X \to (X \otimes S)$, it is “evaluated” from left to right in $((X \otimes S) \otimes S)$.
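The effect of these rules can be observed by enumerating the strings they generate. The following is a minimal sketch, assuming the natural-language rules read as S → (X+X) | (X *S) | X and X → X+X | (X *S), with the ASCII stand-ins `+` for coordination and `*` for determination; the function name `derive` and the step bound are hypothetical choices made for illustration.

```python
# Assumed ASCII transcription of the rules: "+" is coordination,
# "*" is determination, written directly before the dependent member.
RULES = {
    "S": ["(X+X)", "(X *S)", "X"],
    "X": ["X+X", "(X *S)"],
}


def derive(start="S", max_steps=2):
    """Return all S-free forms reachable in at most max_steps rewrites."""
    seen = {start}
    frontier = {start}
    for _ in range(max_steps):
        nxt = set()
        for form in frontier:
            # Rewrite every occurrence of every non-terminal in turn.
            for i, sym in enumerate(form):
                for rhs in RULES.get(sym, []):
                    nxt.add(form[:i] + rhs + form[i + 1:])
        frontier = nxt - seen
        seen |= nxt
    return sorted(f for f in seen if "S" not in f)


for form in derive(max_steps=2):
    print(form)
```

With a bound of three steps the right-nested form `(X *(X *X))` appears; left-nested forms such as `((X *X) *X)` need one more step because the head `X` must first be rewritten and both `S` symbols closed.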

In the following table, we can compare the syntax of the natural language algebra

(NL ring) with syntax of other algebraic structures that have a pair of binary operations.

| Algebraic structures with two binary operations | Syntax |
| --- | --- |
| Ring: addition ($+$) and multiplication ($\cdot$) are commutative and associative; multiplication is distributive over addition. | $S \to (X + X) \mid S \cdot S \mid X$, $X \to X + X \mid S \cdot S$ |
| Boolean ring: union ($\cup$) and intersection ($\cap$) are commutative and associative; union is distributive over intersection, and intersection is distributive over union. | $S \to (X \cup X) \mid S \cap S \mid X$, $X \to X \cup X \mid (S \cap S)$ |
| NL ring: coordination ($\oplus$) is commutative and associative; determination ($\otimes$) is non-commutative, non-associative, and left-distributive over coordination. | $S \to (X \oplus X) \mid (X\ {\otimes}S) \mid X$, $X \to X \oplus X \mid (X\ {\otimes}S)$ |

The semantic interpretation of the generated syntactic expressions can be realized by

usual grammar rules. Hence, the grammar generating sentence languages is considered as an

integration of well-known tools: the recursive definitions of wffs (they will be called the

syntactic rules) are integrated with the traditional productions (they will be called the

semantic rules). That is to say, one can propose to supplement the traditional formal-grammar

tools with a set of special operation symbols and a set of special rules that generate formulas,

i.e., the expanded formal grammar G is defined as an ordered six-tuple:

$G = \langle A_T, A_N, \Omega_T, \Omega_N, S, P \rangle,$

where $A_T$ and $A_N$ are subsets of terminal and non-terminal symbols of the basic alphabet; $\Omega_T$, $\Omega_N$ are subsets of terminal and non-terminal symbols of the auxiliary alphabet; $S \in A_N$ is a start symbol; $P$ is a finite set of productions (syntactic and semantic rules).

A formal generative grammar possessing non-empty sets of both syntactic and semantic

rules (in case $\Omega \neq \varnothing$) will be called the grammar of sentences, in contrast to the classic

grammar of words, in which there are no syntactic rules (in case $\Omega = \varnothing$).
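The six-tuple and the distinction between the two kinds of grammar can be written down as a small data structure. This is only a sketch: the class name `Grammar`, its field names, and the `kind` method are assumptions introduced for illustration, and productions are stored as plain (left-hand side, right-hand side) string pairs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grammar:
    basic_terminals: frozenset      # terminal symbols of the basic alphabet
    basic_nonterminals: frozenset   # non-terminal symbols of the basic alphabet
    aux_terminals: frozenset        # terminal symbols of the auxiliary alphabet
    aux_nonterminals: frozenset     # non-terminal symbols of the auxiliary alphabet
    start: str                      # start symbol S
    productions: tuple              # finite set P of (lhs, rhs) pairs

    def kind(self):
        """Classify by whether the auxiliary alphabet is empty."""
        if self.aux_terminals or self.aux_nonterminals:
            return "grammar of sentences"
        return "grammar of words"


words = Grammar(frozenset("01"), frozenset({"S"}), frozenset(), frozenset(),
                "S", (("S", "0S"), ("S", "1S"), ("S", "0"), ("S", "1")))
sentences = Grammar(frozenset({"book", "read"}), frozenset({"S", "X"}),
                    frozenset({"p", "o"}), frozenset({"*"}),
                    "S", (("S", "(X *S)"), ("S", "X")))
print(words.kind(), "/", sentences.kind())
```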

We thus obtain several versions of generated languages $L(G)$ representing various kinds of derivable terminal strings (words, abstract formulas, and algebraic expressions):

$L(G) = \{\omega \mid \omega \in A_T^* \cup L(A)_T \cup L(A^*)_T,\ S \Rightarrow^* \omega\}.$

The presence of the three versions (using all possible grammar means) allows us to obtain adequate descriptions for various semiotic objects. So the language of words $L(G) \subseteq A_T^*$ is a set of simple strings such as numbers in a scale of notation; the syntactic language $L(G) \subseteq L(A)_T$ is a set of pure symbolic formulas (that do not contain words); and the semantic language $L(G) \subseteq L(A^*)_T$ is a set of algebraic expressions, i.e., a set of strings that include terms (variables or constants), each of which can be a word (separated by the operation symbols).

4. Abstract Syntagmatic Structures

The syntactic rules allow one to represent the well known concepts of abstract syntagmatic

structures such as syntagmatic markedness (the “agreement” of dependent members), the

kinds of connections between words, the absolutely independent member (so-called

“grammatical subject”), the main attribute of the sentence (known, in particular, as “topic” or

“thema”), homogeneous parts of the sentence.

Syntagmatic markedness. Every dependent member of the syntagmatic structure is

marked in the opposition to a corresponding head member.

The atomic formula $(X{\otimes}X)$ may be considered as an elementary syntagmatic structure (minimal phrase) well known as the syntagme, namely, a two-word string in which $X$ (the first word) is the independent (head, governing) member of the syntagme, and ${\otimes}X$ (the second word) is the dependent (non-head) member. In syntagmatic notation, the words can be, for clearness, separated by a blank character: $(X\ {\otimes}X)$.

Indeed, the dependent member ${\otimes}X$ contains a sign of determination ($\otimes$) used as a start for the derivation of the meaning of the part of the sentence. Thus the determination serves in the role of a formal feature of dependence and is expressed in the generated structures as the sign $\otimes$ in syntagmatic oppositions between marked dependent and unmarked head members. As is evident, the dependent word-form is (as a marked element) grammatically more informative than its head member is: it must express not just its "own" grammatical information, but must also possess data about the grammatical properties of its governing word.⁵

Stepwise and collateral subordination. In syntagmatic structures, two types of syntactic

connections known as stepwise and collateral subordinations are defined.

5

The grammatical informativeness of a dependent member is clearly apparent in inflectional-type

languages, where syntagmatic markedness is manifested as agreement features: the dependent (marked) word-form is defined by the presence of the received agreement features (see Blevins, 2000).

In the stepwise (consecutive) subordination, the dependent member ${\otimes}X$ of the preceding syntagme serves as the independent member $X$ of the succeeding syntagme (as in the book of the new author). The structure with stepwise subordination is expressed by using the right-recursive rule $S \to (X\ {\otimes}S)$ as right syntagmatic expansion:

$S \Rightarrow^* (X\ {\otimes}(X\ {\otimes}(X \dots))).$

In the collateral (parallel) subordination, several dependent members ${\otimes}X$ are subordinated to a single independent member $X$ (as in the new book of the author). The structure with collateral subordination is expressed by using the left-recursive rule $X \to (X\ {\otimes}S)$ as left syntagmatic expansion:

$S \Rightarrow^* ((\dots X\ {\otimes}X)\ {\otimes}X).$

The two kinds of syntagmatic structures with left and right expansions are usually expressed by using both the right- and the left-recursive rules, i.e., $S \to (X\ {\otimes}S)$ and $X \to (X\ {\otimes}S)$:

$(X\ {\otimes}S) \Rightarrow^* ((\dots X\ {\otimes}X)\ {\otimes}(X\ {\otimes}(X\ {\otimes}X \dots))),$

where one part of the structure is joined in parallel, while the other part is consecutive (simultaneously collateral and stepwise subordination).

It is necessary to pay special attention to the quantitative distinction between the left- and the right-expansion structures. The distance from the extreme dependent member (the terminal node of a tree structure) to its governing word (the root node) increases in the case of left expansion $(((\dots X\ {\otimes}X)\ {\otimes}X)\ {\otimes}X)$, while the dependent member always has an immediate connection with its governing word in the case of right expansion $(X\ {\otimes}(X\ {\otimes}(X\ {\otimes}X \dots)))$. So, the syntactic structures with collateral and stepwise subordination are potentially asymmetric in the sense that the left and the right syntagmatic expansions differ in their real capabilities. The left expansion is limited by the structure depth⁶, while the right expansion admits endless expressions (as in the well-known sentence with attributive clauses This is the dog, that worried the cat, that killed the rat).
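The asymmetry between the two expansions can be made visible by building both bracketed forms and counting how many brackets are opened before the first word is reached. This is a minimal sketch with assumed names (`right_expansion`, `left_expansion`, `opening_depth`) and with `*` as an ASCII stand-in for the determination sign; the bracket count is only a crude proxy for the structure depth discussed here, not Yngve's own measure.

```python
def right_expansion(n):
    """Stepwise subordination: each new syntagme nests in the dependent."""
    s = "X"
    for _ in range(n):
        s = f"(X *{s})"
    return s


def left_expansion(n):
    """Collateral subordination: each new syntagme nests in the head."""
    s = "X"
    for _ in range(n):
        s = f"({s} *X)"
    return s


def opening_depth(s):
    """Number of brackets opened before the first word is read."""
    return len(s) - len(s.lstrip("("))


for n in (1, 3, 5):
    r, l = right_expansion(n), left_expansion(n)
    print(n, opening_depth(r), opening_depth(l))
```

The count stays at one for the right expansion and grows with every step of the left expansion.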

Absolutely independent part. For any syntagmatic structure, there exists a unique head

word, called the absolutely independent part, which does not contain the meaning of the

part of the sentence.

Indeed, a single syntagmatically unmarked part (so-called “grammatical subject”), placed in

the first position of generated structures, never has a governing member. Hence,

it does not contain the determination sign denoting the meaning of the part of the sentence.

The presence of a single member that does not contain any meaning of the part of the

sentence (uniqueness property) is confirmed by observed phenomena. So in many languages,

there are special syntactically neutral word forms to express such a member, namely, the noun

form of the nominative case (in languages with a nominative structure) and the infinitive verb

form. It is typical that nominative case form is usually expressed by the pure stem of the

word (“casus indefinitus”) or uses affixes that have lost their meanings. Among such

desemantized affixes is, in particular, the ending of the Indo-European nominative case in -s,

which, as it is believed, derives from the ergative case-marker denoting a previously agentive

meaning of a dependent member.⁷ The main head of a sentence can also be expressed by the infinitive verb form⁸ as well as the finite verb form in which the grammatical person (the subject) is reflected by the inflection of the verb (in so-called “null subject languages”).

6

According to Yngve’s depth hypothesis (Yngve, 1960; see also Sampson, 1997), the left expansion (left-branching structure) demands increasing memory resources as the sentence lengthens; since human operative memory is limited (by the structure depth 7±2), the left expansion is also limited.

7

The ergative construction of a sentence is now interpreted as a syntagmatic structure lacking the nounal (non-verbal) grammatical subject as absolutely independent member. All case forms in such a syntagmatic structure are determined by the verb; and so it can be asserted that Tesnière's verb-centred structure with a verbal absolutely independent member is best suited to the description of the ergative construction (Dressler, 1971).

General dependent part. There exists a possibility to express a main attribute of the

sentence, namely, the general dependent part that relates to the whole sentence.

The general dependent part can be represented in the formal syntagmatic structure as the

distance-marked element. It can be supposed that the degree of grammatical informativeness

(“markedness degree”) of a dependent member increases in proportion to the distance from its

governing element: the dependent member is the more informative the further it is situated

from the governing member. So, known information content of the predicate in a sentence

derives from the fact that this marked member follows the entire subject group and occupies

the more distant position relative to absolutely independent (governing) member with its

adjuncts. Thus, the general dependent part may be defined as the most distant dependent

member. The syntagmatic structure with the general dependent part can be considered as a

hypersyntagme: the general dependent part is a dependent member of the structure, and the

remaining part of the sentence is its independent member.

Note that this syntagmatic structure is often considered on the basis of semantic or morphological distinctions and is known in linguistics as the dichotomies topic/comment, theme/rheme, known/unknown, etc. Of course, syntactically, the topic (theme) is the marked dependent member⁹, and the comment (rheme) is the unmarked independent member. Topic structures can be represented as bracketed expressions: cf. the topic structure like $((X\ {\otimes}X)\ {\otimes}(X\ {\otimes}X))$ as in This book, he reads and the topicless structure like $(X\ {\otimes}(X\ {\otimes}(X\ {\otimes}X)))$ as in He reads this book. The same method is suited to the description of sentences with so-called locative inversion. So, the locative-inversion examples (see Bresnan, 1994) may also be represented as “topic” structures: $((X\ {\otimes}X)\ {\otimes}X)$ as in In the corner was a lamp; cf. the structure of the canonical order sentence $(X\ {\otimes}(X\ {\otimes}X))$ as in A lamp was in the corner.

Homofunctional parts. There exists a possibility to express the syntagmatic structure

with homofunctional parts of the sentence.

The syntagmatic structure with homofunctional parts is derived from the initial syntagme by using the rules $S \to (X \oplus X)$, $X \to X \oplus X$:

$(X\ {\otimes}S) \Rightarrow^* (X\ {\otimes}(X \oplus X \oplus X)).$

The determination sign in this syntagmatic structure, called a multisyntagme, represents a common functional meaning of the parts of the sentence, whereas the coordination sign serves as a means of joining the identical parts together by extracting the common functional meaning of these members outside the brackets.

Note that the operation of determination is only left-distributive but not right-distributive, and so only the homogeneity of dependent members can be expressed by syntactic means. So there are two kinds of homogeneous parts, which are expressed in language by different grammatical means: the homogeneous dependent members are expressed by universal syntactic means as a coordination of the identical parts of sentence (functional homogeneity), while the homogeneous independent members can be expressed only by individual semantic means as a coordination of the identical parts of speech (categorical homogeneity).

8

The infinitive verb form is also a syntagmatically unmarked member (“null form”) expressing only a lexical meaning (meaning of process) outside the syntagmatic relations (Karcevski, 1927:18).

9

As is well known, the topic (“the thing being talked about”) can be marked in various languages by special (topic-indicating) grammatical means (see Li and Thompson, 1976). For example, Japanese marks it with the special particle wa: the part of the sentence preceding wa is the topic, and the part following wa is a “comment” about the topic. English employs word order (the topic is placed at the beginning of the sentence), lexical means (As for, Regarding), and prosodic marks.

In natural languages, the difference in these two kinds of homogeneity can become

apparent as a difference in the used means of expression: the functional homogeneity is

expressed by a usual (unmarked) connection, while the categorical homogeneity needs some

special (marked) means. So, in the grammar of Chinese, the categorical homogeneity is

expressed by means of special generalizing words like dōu ‘all’, quán ‘whole’, e.g.:

Wŏ mǎile shū, bĕnz, bĭ ‘I have bought books, writing-books, pencils’;

Shū, bĕnz, bĭ dōu shì wŏd ‘Books, writing-books, pencils are mine’.

In the first example, the functional homogeneity (coordinated objects) is expressed by usual

means as the immediate connection of direct objects to the transitive verb mǎi ‘to buy’; in

the second example, the connection of the categorical homogeneity (coordinated nouns) is

realized by a special distributivity marker dōu.

5. Semantic Interpretation

A real content of generated sentences is derived from syntactic structures by the rules of

semantic interpretation. These rules allow one to replace the word symbol X and the operation

symbols by designations of lexical and grammatical meanings.

The word symbol X is at first replaced by designations of word categories. We suppose

that these categories (traditionally called the syntactic parts of speech) are sets of words

possessing a certain common context-governing potential, namely, a possibility to attach their

“own” determinant. That is, we say that $X_i$ represents a syntactic part of speech if the appropriate part-of-sentence meaning $i$ exists. Obviously, at most three syntactic parts of speech (existing in English and other languages) may be distinguished: the Noun ($X_n$), the Verb ($X_v$), and the category of Quality ($X_q$)¹⁰, i.e.,

$X \to X_n \mid X_v \mid X_q.$

The necessary indication of the given syntactic parts of speech is the possibility to attach their

individual determinants (part-of-sentence meanings): the adjectival attribute, adverbial

attribute, and quality attribute.

The symbol of the unary operation (used in $S \to \ast S$) is replaced by designations denoting the

syntactically independent meanings such as interrogation, exclamation, general-negation

meaning, and all the general-sentence modality expressed in natural language by parenthetical

words, interjections. These grammatical meanings can also be expressed by the well-known

signs, e.g.: ? !

The replacement of coordination and determination symbols allows us to represent all the

conjunction meanings and the traditional part-of-sentence meanings (known also as “semantic

cases”, “semantic roles”), i.e., the meanings of predicate and secondary notional parts

(attribute, adverbial modifier, object). All of the grammatical meanings can be represented by

special designations (grammatical codes) that can consist of several components: an initial letter, which denotes the common (semantically neutral) meaning, and several abbreviations, which denote more specific meanings (each component is separated by a dot), i.e.,

$\oplus \to \mathrm{c} \mid \mathrm{c.dj} \mid \mathrm{c.adv} \mid \mathrm{c.cns},$

$\otimes \to A \mid O \mid P,$

$A \to \mathrm{a} \mid \mathrm{a.prs} \mid \mathrm{a.abs} \mid \mathrm{a.tm} \mid \mathrm{a.pl} \mid \mathrm{a.cs},$

$O \to \mathrm{o} \mid \mathrm{o.cmt} \mid \mathrm{o.dst} \mid \mathrm{o.mdt},$

$P \to \mathrm{p} \mid \mathrm{p.pt} \mid \mathrm{p.ft} \mid \mathrm{p.ct} \mid \mathrm{p.pt.ct} \mid \mathrm{p.pf}.$

Here symbols $A, O, P \in \Omega_N$ denote the categories of attributive, objective, and predicative meanings; the abbreviations can be considered as terminal symbols (elements of $\Omega_T$) that denote various grammatical meanings, namely:

meanings of coordination: conjunctive (c), disjunctive (c.dj), adversative (c.adv), consecutive (c.cns), etc.;

attributive meanings: neutral adjective or adverbial (a), meaning of presence (a.prs), of absence (a.abs), of time (a.tm), of place (a.pl), of cause (a.cs), etc.;

objective meanings: neutral (direct) objective (o), comitative (o.cmt), destinative (o.dst), mediative (o.mdt), etc.;

predicative meanings: the predicate in the present tense (p), past tense (p.pt), future tense (p.ft), present continuous tense (p.ct), past continuous tense (p.pt.ct), present perfect tense (p.pf), etc.

10

The word classes such as the Adjective and the Adverb, which are usually distinguished in known syntax systems (e.g., in Fries's four-member system), are not distinguished as syntactic parts of speech because these classes share one common attachable attribute (e.g., the attribute very in very quick, very quickly). It is clear that these word classes differ only at the surface level as morphologized parts of the sentence, i.e., morphological (but not syntactic) word categories.
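Since each grammatical code is a dot-separated sequence with a category letter first, it can be decoded component by component. The sketch below is an illustration only: the function name `decode` and the two lookup tables are assumptions, and the glosses for individual components (for example `ct` as "continuous") are read off the listed codes rather than given in this form by the source.

```python
# Initial letter: the common (semantically neutral) meaning.
CATEGORY = {
    "c": "coordination",
    "a": "attributive",
    "o": "objective",
    "p": "predicative",
}

# Further components: more specific meanings (assumed glosses).
SPECIFIC = {
    "dj": "disjunctive", "adv": "adversative", "cns": "consecutive",
    "prs": "presence", "abs": "absence", "tm": "time",
    "pl": "place", "cs": "cause",
    "cmt": "comitative", "dst": "destinative", "mdt": "mediative",
    "pt": "past", "ft": "future", "ct": "continuous", "pf": "perfect",
}


def decode(code):
    """Split a code such as 'p.pt.ct' into (category, [specific meanings])."""
    head, *rest = code.split(".")
    return CATEGORY[head], [SPECIFIC[part] for part in rest]


print(decode("p.pt.ct"))
print(decode("o.cmt"))
print(decode("a"))
```

A code with no further components, such as `a` or `p`, decodes to the neutral meaning of its category.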

Of course, the said grammatical meanings are expressed by diverse context-sensitive

variants at the level of a surface (morphological) interpretation. So, the neutral attributive is

expressed by “agreed” word forms such as the adjective (a big house), the adverb (to run

quickly), as well as by “non-agreed” word forms (the leg of the table); similarly, the neutral

objective meaning is expressed by various “agreed” (governed) word forms (to read the book,

to ask for a book, to knock at the door, to consist of parts, etc.); the same predicative meaning

is expressed both by affixes and syntactic words (he writes, he is a teacher, he is red). And

vice versa, the diverse meanings can be expressed by the same word form: cf. a book with

pictures (attributive meaning of presence), to live with parents (objective comitative

meaning), to write with a pen (objective mediative meaning), and so on.

The use of the syntactic parts of speech and designations of various grammatical

meanings allows us to represent all possible sentence patterns. So it is possible to represent

the simple unexpanded and expanded sentences, the compound sentences, the sentences with

homogeneous parts, the complex sentences with all types of subordinate clauses:

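$((X\ {\otimes}X)\ {\otimes}(X\ {\otimes}X)) \Rightarrow^* ((X_n\ \mathrm{a}X_q)\ \mathrm{p}(X_v\ \mathrm{o}X_n)),$

$(X\ {\otimes}X) \Rightarrow^* (X_v\ \mathrm{p}X_q),$

$(X\ {\otimes}(X\ {\otimes}(X\ {\otimes}X))) \Rightarrow^* (X_n\ \mathrm{p}(X_v\ \mathrm{o}(X_n\ \mathrm{p.pf}X_v))),$

$(X\ {\otimes}(X\ {\otimes}(X\ {\otimes}X))) \Rightarrow^* (X_n\ \mathrm{p}(X_v\ \mathrm{a.pl}(X_n\ \mathrm{p}X_v))).$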

Here the derived syntagmatic structures represent the well-known sentence patterns: a simple

expanded sentence with a verbal predicate, adjectival attribute, and neutral (direct) object; an

infinitive sentence; a complex sentence with an object clause; a complex sentence with an

adverbial clause of place.

The replacement of syntactic parts of speech by concrete lexical meanings presupposes the

use of semantic parts of speech, i.e., categories of word root meanings. The membership of

words in a given semantic part of speech is manifested in their ability to attach common

lexical modifiers, i.e., derivational meanings. Here it is noteworthy that there is a formal

distinction between derivational (“word-formative”) and relational (“inflectional”) meanings,

i.e., there is a rigorous criterion for the delimitation of the two different types of grammatical

meanings: the derivational meaning can appear in any syntactic position of the sentence,

while the relational meaning (contained only in inflected word forms) does not appear in the

position of the syntactically neutral (absolutely independent) part.

So Xn, Xv, Xq can be replaced by categories of root and derivational meanings:

Xn → Rn | R Dn | Pn | Rc | Pc,

Xv → Rv | R Dv,

Xq → Rq | Rq Dq | Pq,

R → Rn | Rv | Rq | Rc.

Here the derived symbols denote: general category of word root meanings (R), the categories

of root meanings of nouns (Rn, Pn), of verbs (Rv), of quality (Rq, Pq), of numerals (Rc, Pc), and

the categories of derivational meanings (Dn, Dv, Dq).
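The rewriting rules for Xn, Xv, and Xq can be sketched as a small table of alternatives. The sketch assumes that each rule is read as "left side, then a list of alternatives" (for example, Xv rewrites either to Rv or to the pair R Dv); the names `RULES` and `expand` are hypothetical and serve only this illustration.

```python
# Each category maps to its alternatives; an alternative is a sequence of categories.
# Reading the rules as lists of alternatives is an assumption of this sketch.
RULES = {
    "Xn": [["Rn"], ["R", "Dn"], ["Pn"], ["Rc"], ["Pc"]],
    "Xv": [["Rv"], ["R", "Dv"]],
    "Xq": [["Rq"], ["Rq", "Dq"], ["Pq"]],
    "R": [["Rn"], ["Rv"], ["Rq"], ["Rc"]],
}


def expand(symbol):
    """Return every sequence of non-rewritable categories derivable from symbol."""
    if symbol not in RULES:
        return [[symbol]]  # nothing to rewrite: Rn, Pn, Dn, ...
    results = []
    for alternative in RULES[symbol]:
        partial = [[]]
        for part in alternative:
            # Combine every prefix built so far with every expansion of this part.
            partial = [head + tail for head in partial for tail in expand(part)]
        results.extend(partial)
    return results


for sequence in expand("Xv"):
    print(" ".join(sequence))
```

Under this reading, Xv yields five category sequences (Rv alone, and each of the four root categories followed by Dv), and Xn yields eight.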

The categories of root meanings can be substituted by usual morphemes (from AT*):

Rn → book | man | …,

Pn → he | we | …,

Rv → know | read | …,

Rq → little | red | …,

Pq → so | such | …,

Rc → one | two | …,

Pc → few | many | … .

The categories of derivational meanings are substituted by special designations (from AN):

Dn → Ag | Pl | …,

Dv → Exc | Rv | …,

Dq → Cp | Sp | …,

where designations Ag (agent), Pl (plural), Exc (excessive), Rv (revertive), Cp (comparative),

Sp (superlative), etc., indicate the derivational meanings, e.g.: read Ag → reader, man Pl → men,

load Exc → overload, turn Rv → return, little Cp → lesser, little Sp → least.
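Because several of the quoted derivations are irregular (man and Pl give men, little and Cp give lesser), the step from a root with a derivational designation to a surface word is most simply pictured as a lexical lookup. The sketch below lists only the six pairs quoted in the text; the table `DERIVED_FORMS` and the function `derive` are hypothetical names introduced for illustration.

```python
# (root, derivational designation) -> surface word.
# Only the six pairs quoted in the text are listed; the table is illustrative.
DERIVED_FORMS = {
    ("read", "Ag"): "reader",
    ("man", "Pl"): "men",
    ("load", "Exc"): "overload",
    ("turn", "Rv"): "return",
    ("little", "Cp"): "lesser",
    ("little", "Sp"): "least",
}


def derive(root, designation=None):
    """Return the surface word for a root, optionally with a derivational meaning."""
    if designation is None:
        return root  # a bare root is realized as itself
    try:
        return DERIVED_FORMS[(root, designation)]
    except KeyError:
        raise KeyError(f"no surface form recorded for {root} + {designation}") from None


print(derive("read", "Ag"))
print(derive("little", "Sp"))
print(derive("book"))
```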

The final result of the generative process can be shown by the following examples:

((Xn aXq) p(Xv oXn)) ⇒* ((boy a.little) p(read o.book))

‘The little boy reads a book’;

(Xv pXq) ⇒* (read p.pleasant)

‘To read is pleasant’;

(Xn p(Xv o(Xn p.pfXv))) ⇒* (We p(know o(he p.pf.return)))

‘We know he has returned’;

(Xn p(Xv a.pl(Xn pXv))) ⇒* (house p(stand a.pl(road p.turn)))

‘The house stands where the road turns’.
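The bracketed structures lend themselves to a nested data representation. The sketch below assumes that each syntagm can be stored as a triple (head, functional meaning, marked dependent), where head and dependent are either words or further triples; this encoding and the function name `render` are assumptions made for illustration and are not taken from the grammar itself.

```python
def render(node):
    """Render a word or a (head, meaning, dependent) triple in bracket notation."""
    if isinstance(node, str):
        return node
    head, meaning, dependent = node
    if isinstance(dependent, str):
        # A single marked word is written as meaning.word, e.g. p.turn
        marked = f"{meaning}.{dependent}"
    else:
        # A marked sub-structure is written as meaning(...), e.g. a.pl(road p.turn)
        marked = f"{meaning}{render(dependent)}"
    return f"({render(head)} {marked})"


# 'The house stands where the road turns'
sentence = ("house", "p", ("stand", "a.pl", ("road", "p", "turn")))
print(render(sentence))  # (house p(stand a.pl(road p.turn)))
```

Storing the structure as nested triples rather than as a flat string keeps the functional meaning of every dependent member directly accessible, which is what a later transition to a surface sentence or to a knowledge representation would need.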

One can assert that the generated formal syntagmatic structures represent the whole

semantic content of sentences, i.e., they are sufficient for accurate transition to adequate

natural manifestations. Certainly, the transition from the generated artificial (semantic-syntactic) sentences to their natural (textual or verbal) manifestations requires some special

algorithmic techniques that do not belong to the grammatical tools11.

11

Experience in the development of transformational-generative grammars showed that the transition from the

generated expressions (“deep structures”) to their natural manifestations (“surface structures”) requires special

(“non-grammatical”) actions - such as the simultaneous replacement of more than one symbol, the transposition

of symbols, etc. (see Shaumyan, 1962: 399).

6. Conclusion

It is shown that the formal language generated by the proposed grammar tools is a set of

formal syntagmatic structures expressed in the explicit form using standard mathematical

notation.

In the formal description outlined above, we attempted to unite the tools of formal

grammars with the known notions of traditional linguistics. The proposed formal-grammar

tools make it possible to represent the sentence parts, the parts of speech, and the other

existing grammatical categories, i.e., that which can be called the grammatical system of a

language.

The produced grammatical system may be considered a result of genuinely

scientific (experimental) cognition of linguistic reality. It must be further refined,

investigated, and specified in each particular language description. The grammatical system

should also become an object of comparative reconstruction and typological verification.

The formal language proposed above can be used as a linguistic notation (grammatical

interlingua) and a semantic record for knowledge representation. The transition from input

text messages to the internal representation of knowledge may be implemented by means of

the formal language serving as an intermediate link between the text and the growing

conceptual structure (Yakovishin, 1999). The possibility of an automatic transition from input

messages to the internal knowledge representation allows knowledge extracted from

large volumes of electronic documentation to be accumulated.

References

Blevins, J.P., 2000, Markedness and agreement, Transactions of the Philological Society 98 (2), 233-262.

Bresnan, J., 1994, Locative inversion and the architecture of universal grammar, Language 70 (1), 72-131.

Chomsky, N., 1965, Aspects of the Theory of Syntax, Cambridge, MA: The MIT Press.

Dressler, W., 1971, Über die Rekonstruktion der indogermanischen Syntax, Zeitschrift für Vergleichende

Sprachforschung 85 (1), 5-22.

Ji, S., 1997, Isomorphism between cell and human language: molecular biological, bioinformatic and linguistic

implications, BioSystems, 44 (1), 17-39.

Karcevski, S., 1927, Système du Verbe Russe: Essai de Linguistique Synchronique, Prague: Plamja.

Li, Ch. N. and Thompson, S. A., 1976, Subject and topic, pp. 457-489 in Syntax and Semantic: Subject and

Topic, Charles Li (ed.), New York: Academic Press. (Translation in Новое в зарубежной лингвистике.

Вып. XI. – M.: Прогресс, 1982.)

McKay, Th., 2006, Plural Predication, Oxford: Clarendon Press.

Sampson, G. R., 1997, Depth in English grammar, Journal of Linguistics, 33, 131-151.

Shaumyan, S. K., 1962, Theoretical foundations of transformational grammars [in Russian], pp. 391-411 in

Новое в лингвистике. Вып. II. – M.: ИЛ.

Yakovishin, V. S., 1999, Transformation of syntagmatic structures into a form of knowledge representation [in

Russian], Автоматика и вычислительная техника, 1, 76-83. (Translation in Automatic Control and

Computer Sciences, New York: Allerton Press, Inc., 33 (1), 64-69.)

Yngve, V. N., 1960, A model and hypothesis for language structure, Proceedings of the American Philosophical

Society, 104, 444-466.