Lecture 25


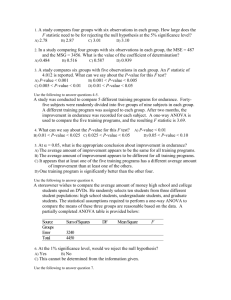

Chapter 9

Types of Studies

i. Controlled experiments: the X's are controlled through a designed experiment. Therefore, no selection methods are needed.

ii. Controlled experiments with supplemental variables: most of the X's come from the designed experiment, but in addition there are supplemental variables, e.g. gender, which was not originally included. The goal is to reduce the error variance (MSE).

iii. Confirmatory observational studies: based on an observational study as opposed to an experiment, and intended to test hypotheses derived from previous studies. The data consist of covariates from previous studies, which are called controlled variables or risk factors. In addition to these, there are new variables, which are called primary variables. E.g. suppose Y is the incidence of a type of cancer (categorical) and the risk factors are Age, Gender, Race, etc., but the primary covariate is the amount of vitamin E taken daily.

iv. Exploratory observational studies: the investigators don't know in advance what they are looking for, but they have a large amount of data. They then search for covariates that may be related to Y.

v. Preliminary model investigation: identify the functional forms of the covariates. That is, do we want X, X², or log X? Also look for important interactions.

Reduction of Explanatory Variables (by type of study)

i. Controlled experiments: this is not a concern.

ii. Controlled experiments with supplemental variables: you may want to reduce the supplemental variables.

iii. Confirmatory observational studies: do NOT reduce the controlled variables or primary variables; no reduction is used.

iv. Exploratory observational studies: covariate reduction.

Methods of Model Selection

1. All-possible-regressions procedures, which use some criteria to identify a small group of good regression models.
2. Automatic subset selection methods, which identify the "best" overall model. This best model may vary with the selection method used.

Criteria Method: Minitab

Using Stat > Regression > Best Subsets, Minitab produces 4 criteria:

1. R²
2. R²adj
3. Mallows Cp
4. S

If you use Stat > Regression > Stepwise, under Options you can also get the following criteria statistics:

5. PRESS (Predicted Sum of Squares)
6. Predicted R²

Here n is the number of observations and p is the number of parameters estimated in the model (i.e. the number of predictors plus the intercept).

$R^2 = SSR/SST = 1 - SSE/SST$

$R^2_{adj} = 1 - \left(\frac{n-1}{n-p}\right)\frac{SSE_p}{SST} = 1 - \frac{MSE}{SST/(n-1)}$

$C_p = \frac{SSE_p}{MSE_k} - (n - 2p)$, where $MSE_k$ is the MSE with all predictors in the model.

$S = \sqrt{MSE}$

$PRESS = \sum_{i=1}^{n}\left(\frac{e_i}{1-h_i}\right)^2$, where $e_i$ is the residual and $h_i$ is the leverage value for the ith observation. The leverage $h_i$ is the ith diagonal element of the hat matrix $H = X(X^TX)^{-1}X^T$.

$\text{Predicted } R^2 = 1 - PRESS/SST$

Model Selection Based on Criteria

If using criteria:

a. 1, 2 or 6 (R², R²adj, predicted R²): select the model(s) with the highest value(s). Keep in mind that as variables are added, R² will always stay the same or increase, whereas R²adj may decrease.

b. 4 (S): select the model(s) with the lowest value(s). These are the models providing the smallest error.

c. 3 (Cp): select a model where Cp ≈ p (the number of parameters in the model) and the Cp value is small. Cp > p suggests possible bias in the model, while Cp < p is attributed to random error.

d. 5 (PRESS): select the model(s) with low PRESS value(s).

Percent Weights: X1 = Tricalcium Aluminate; X2 = Tricalcium Silicate; X3 = Tetracalcium Alumino Ferrite; X4 = Dicalcium Silicate; Y = amount of heat evolved during curing, in calories per gram of cement.

| X1 | X2 | X3 | X4 | Y |
|---|---|---|---|---|
| 7 | 26 | 6 | 60 | 78.5 |
| 1 | 29 | 15 | 52 | 74.3 |
| 11 | 56 | 8 | 20 | 104.3 |
| 11 | 31 | 8 | 47 | 87.6 |
| 7 | 52 | 6 | 33 | 95.9 |
| 11 | 55 | 9 | 22 | 109.2 |
| 3 | 71 | 17 | 6 | 102.7 |
| 1 | 31 | 22 | 44 | 72.5 |
| 2 | 54 | 18 | 22 | 93.1 |
| 21 | 47 | 4 | 26 | 115.9 |
| 1 | 40 | 23 | 34 | 83.8 |
| 11 | 66 | 9 | 12 | 113.3 |
| 10 | 68 | 8 | 12 | 109.4 |

Best Subsets Regression: Y versus X1, X2, X3, X4

    MTB > BReg 'Y' 'X1'-'X4';
    SUBC> NVars 1 4;
    SUBC> Best 2;
    SUBC> Constant.

(Best 2 prints the best two models for each number of predictors.)
Response is Y

| Vars | R-Sq | R-Sq(adj) | Mallows C-p | S | X1 | X2 | X3 | X4 |
|---|---|---|---|---|---|---|---|---|
| 1 | 67.5 | 64.5 | 138.7 | 8.9639 | | | | X |
| 1 | 66.6 | 63.6 | 142.5 | 9.0771 | | X | | |
| 2 | 97.9 | 97.4 | 2.7 | 2.4063 | X | X | | |
| 2 | 97.2 | 96.7 | 5.5 | 2.7343 | X | | | X |
| 3 | 98.2 | 97.6 | 3.0 | 2.3087 | X | X | | X |
| 3 | 98.2 | 97.6 | 3.0 | 2.3121 | X | X | X | |
| 4 | 98.2 | 97.4 | 5.0 | 2.4460 | X | X | X | X |

Based on the criteria, the best models are those including X1, X2 and X4 or X1, X2 and X3.

Forward Selection: Y versus X1, X2, X3, X4

    MTB > Stepwise 'Y' 'X1'-'X4';
    SUBC> Forward;
    SUBC> AEnter 0.25;
    SUBC> Best 0;
    SUBC> Constant;
    SUBC> Press.

(AEnter 0.25 is the default alpha value.)

Forward selection. Alpha-to-Enter: 0.25
Response is Y on 4 predictors, with N = 13

| Step | 1 | 2 | 3 |
|---|---|---|---|
| Constant | 117.57 | 103.10 | 71.65 |
| X4 | -0.738 | -0.614 | -0.237 |
| T-Value | -4.77 | -12.62 | -1.37 |
| P-Value | 0.001 | 0.000 | 0.205 |
| X1 | | 1.44 | 1.45 |
| T-Value | | 10.40 | 12.41 |
| P-Value | | 0.000 | 0.000 |
| X2 | | | 0.42 |
| T-Value | | | 2.24 |
| P-Value | | | 0.052 |
| S | 8.96 | 2.73 | 2.31 |
| R-Sq | 67.45 | 97.25 | 98.23 |
| R-Sq(adj) | 64.50 | 96.70 | 97.64 |
| Mallows C-p | 138.7 | 5.5 | 3.0 |
| PRESS | 1194.22 | 121.224 | 85.3511 |
| R-Sq(pred) | 56.03 | 95.54 | 96.86 |

The best model is reached when step 3 concludes: Y = B0 + B1X1 + B2X2 + B4X4. Remember that alpha-to-enter is 0.25, so X4 is entered at step 1. Forward selection never removes a variable once it has entered, so X4 stays in the model even though its p-value at step 3 is 0.205.

Step 1 starts by calculating the p-value for regressing Y on each variable separately. It keeps the variable with the lowest p-value, provided that p-value is less than the alpha-to-enter value (here 0.25). In this case the initial variable is X4. Step 2 computes partial statistics for adding each remaining predictor to the model containing the variable from step 1. That is, Minitab finds the partial statistics for adding X1 to a model containing X4, then for adding X2 to a model containing X4, and finally for adding X3 to a model containing X4. From these partials it selects the variable whose p-value is lowest and less than the criterion alpha of 0.25. For step 2 this variable is X1. Step 3 then finds the partial statistics for each remaining variable added individually to the model already containing the previously selected variables.
That is, Minitab finds the partials for adding X2 to a model containing X4 and X1, and the partials for adding X3 to a model containing X4 and X1. From these partial statistics, it enters the variable whose p-value is lowest and less than 0.25. In this step the variable entered is X2. Step 4 repeats the previous step, now using the model with the 3 variables already selected. That is, it finds the partials for adding X3 to the model already containing X1, X2 and X4. The p-value for this partial F is not less than 0.25, so the process stops at step 3 with the best forward-selected model.

Backward Selection Regression: Y versus X1, X2, X3, X4

    MTB > Stepwise 'Y' 'X1'-'X4';
    SUBC> Backward;
    SUBC> ARemove 0.1;
    SUBC> Best 0;
    SUBC> Constant;
    SUBC> Press.

(ARemove 0.1 is the default alpha value.)

Backward elimination. Alpha-to-Remove: 0.1
Response is Y on 4 predictors, with N = 13

| Step | 1 | 2 | 3 |
|---|---|---|---|
| Constant | 62.41 | 71.65 | 52.58 |
| X1 | 1.55 | 1.45 | 1.47 |
| T-Value | 2.08 | 12.41 | 12.10 |
| P-Value | 0.071 | 0.000 | 0.000 |
| X2 | 0.510 | 0.416 | 0.662 |
| T-Value | 0.70 | 2.24 | 14.44 |
| P-Value | 0.501 | 0.052 | 0.000 |
| X3 | 0.10 | | |
| T-Value | 0.14 | | |
| P-Value | 0.896 | | |
| X4 | -0.14 | -0.24 | |
| T-Value | -0.20 | -1.37 | |
| P-Value | 0.844 | 0.205 | |
| S | 2.45 | 2.31 | 2.41 |
| R-Sq | 98.24 | 98.23 | 97.87 |
| R-Sq(adj) | 97.36 | 97.64 | 97.44 |
| Mallows C-p | 5.0 | 3.0 | 2.7 |
| PRESS | 110.347 | 85.3511 | 93.8825 |
| R-Sq(pred) | 95.94 | 96.86 | 96.54 |

The best model is reached when step 3 concludes: Y = B0 + B1X1 + B2X2.

Step 1 starts with the full model. It removes X3 first because X3 has the largest p-value (0.896), which is greater than the criterion alpha = 0.1. In step 2, Y is regressed on X1, X2 and X4. The p-value for X4 (0.205) is the largest and is greater than 0.1, so X4 is removed. The next step regresses Y on X1 and X2. In this model no p-value is greater than 0.1, so the process stops.

Stepwise Selection Regression: Y versus X1, X2, X3, X4

    MTB > Stepwise 'Y' 'X1'-'X4';
    SUBC> AEnter 0.15;
    SUBC> ARemove 0.15;
    SUBC> Best 0;
    SUBC> Constant;
    SUBC> Press.

(AEnter and ARemove of 0.15 are the default alpha criteria.)
(Press requests the PRESS statistic, which is another criterion measure like R², Cp, S and R²adj.)

Stepwise regression. Alpha-to-Enter: 0.15 Alpha-to-Remove: 0.15
Response is Y on 4 predictors, with N = 13

| Step | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Constant | 117.57 | 103.10 | 71.65 | 52.58 |
| X4 | -0.738 | -0.614 | -0.237 | |
| T-Value | -4.77 | -12.62 | -1.37 | |
| P-Value | 0.001 | 0.000 | 0.205 | |
| X1 | | 1.44 | 1.45 | 1.47 |
| T-Value | | 10.40 | 12.41 | 12.10 |
| P-Value | | 0.000 | 0.000 | 0.000 |
| X2 | | | 0.416 | 0.662 |
| T-Value | | | 2.24 | 14.44 |
| P-Value | | | 0.052 | 0.000 |
| S | 8.96 | 2.73 | 2.31 | 2.41 |
| R-Sq | 67.45 | 97.25 | 98.23 | 97.87 |
| R-Sq(adj) | 64.50 | 96.70 | 97.64 | 97.44 |
| Mallows C-p | 138.7 | 5.5 | 3.0 | 2.7 |
| PRESS | 1194.22 | 121.224 | 85.3511 | 93.8825 |
| R-Sq(pred) | 56.03 | 95.54 | 96.86 | 96.54 |

The best model is reached when step 4 concludes: Y = B0 + B1X1 + B2X2.

Step 1 starts by calculating the p-value for regressing Y on each variable separately. It keeps the variable with the lowest p-value, provided that p-value is less than the alpha-to-enter value (here 0.15). In this case the initial variable is X4. Step 2 computes partial statistics for adding each remaining predictor to the model containing the variable from step 1. That is, Minitab finds the partials for adding X1 to a model containing X4, then for adding X2 to a model containing X4, and finally for adding X3 to a model containing X4. From these partials it selects the variable with the lowest p-value, provided that p-value is less than the criterion alpha of 0.15. At the same time it compares p-values and removes a variable if its p-value is the highest and greater than 0.15. Step 2 results in X1 being added to the model with X4. Once X1 has been added, the procedure checks whether any variable already in the model (here X4) should be dropped now that X1 is also in the model. This is analogous to running conditional t-tests, which is why you see the T-values in the output.
The T-values and resulting p-values for both variables are less than 0.15, which satisfies the condition for keeping a variable in the model, so both variables remain. Step 3 goes to the remaining unused variables to see whether one could be added to the model from step 2, and if so repeats the conditional t-tests. In this step X2 is added to the model. However, the p-value for X4 (0.205) is now greater than 0.15, so X4 is removed, resulting in step 4. This final step leaves a model containing X1 and X2. Adding either of the other variables does not produce a p-value less than 0.15, which would allow that variable to enter. Nor does any previously selected variable have a partial F with a p-value greater than 0.15, which would call for its removal.

SPECIAL NOTE: If the data set used to select the model is centered, the validation set must be centered using the means from the model-building set. This keeps the definitions of the variables the same as in the fitted model.

Model Validation

Once a model or set of models has been determined, the final step is to validate the selected model(s). The best method is to gather new data, apply the model(s) to this new set, and compare the estimates and other results. However, gathering new data is often not feasible due to constraints such as time and money. A more popular technique is to split the data into two sets: a model-building set and a model-validation set. The data are typically split randomly into two equal sets. If that is not possible, the model-building set is usually the larger one. The random split should be done so as to meet any study requirements. For instance, suppose a certain percentage of the population is known to be male and gender is a variable on which the data are gathered. Then the two data sets should be split to reflect this percentage. Once the data are separated into the model-building and validation sets, the researcher uses the model-building set to develop the best model(s).
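The random, percentage-preserving split and the centering rule in the special note can be sketched together. The sketch below is a minimal Python illustration under stated assumptions, not a Minitab procedure. The record layout (dicts with a hypothetical "gender" field and one numeric "x" field) and the helper names `stratified_split`, `column_means` and `center` are all made up for the example. Each gender group is shuffled and cut separately, so both sets keep the population's male/female mix. The validation set is then centered with the model-building means, not its own means.

```python
import random
from collections import defaultdict


def stratified_split(rows, stratum, build_frac=0.5, seed=1):
    """Randomly split rows into (building, validation) lists.

    Each stratum (e.g. gender) is shuffled and cut separately, so both sets
    keep roughly the same share of each stratum as the full data set.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for row in rows:
        groups[stratum(row)].append(row)
    building, validation = [], []
    for members in groups.values():
        members = list(members)              # shuffle a copy, not the caller's data
        rng.shuffle(members)
        cut = round(len(members) * build_frac)
        building.extend(members[:cut])
        validation.extend(members[cut:])
    return building, validation


def column_means(rows, names):
    """Mean of each named numeric column."""
    return {name: sum(r[name] for r in rows) / len(rows) for name in names}


def center(rows, means):
    """Subtract the given means; pass the model-building means for BOTH sets."""
    return [{**r, **{name: r[name] - m for name, m in means.items()}} for r in rows]


# Hypothetical data: 30 males and 20 females (60% male), one predictor x.
rows = [{"gender": "M", "x": float(i)} for i in range(30)]
rows += [{"gender": "F", "x": float(i)} for i in range(20)]

build, valid = stratified_split(rows, stratum=lambda r: r["gender"])
means = column_means(build, ["x"])           # computed from the building set only
build_c = center(build, means)
valid_c = center(valid, means)               # NOT centered on the validation set's own mean

print(len(build), len(valid))                # both sets get 15 males and 10 females
print(sum(r["gender"] == "M" for r in build) / len(build))
```

For an odd-sized stratum, one set necessarily gets the extra observation. The fixed `seed` only makes the illustration repeatable.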
Once the model(s) are selected, they are applied to the validation set. From here one considers measures of internal and external validity of the best model(s).

Internal Validity

The models are fit to the model-building data set. Within each model, PRESS is compared to SSE (they should be reasonably close), and the Cp value is compared to its respective p.

External Validity

The models are fit to the validation data set, and the beta estimates and their standard errors are compared between the two sets. One problem indicator is an estimate or standard error from the model-building set that is much larger or smaller than the corresponding value from the validation set. Another is an estimate whose sign changes. A good way to gauge the predictive validity of the selected model(s) is to compare the Mean Squared Prediction Error (MSPR) from the validation set to the MSE of the model-building set:

$MSPR = \frac{\sum_{i=1}^{n^*}(Y_i - \hat{Y}_i)^2}{n^*}$

Here $Y_i$ are the observed values in the validation set. $\hat{Y}_i$ are the predicted values obtained by entering the validation set's X values as new observations in the model fitted to the model-building set. $n^*$ is the number of observations in the validation set. The process is:

1. Copy the X variables for the selected model from the validation set and paste them into the model-building set.
2. Run a regression for one of the selected model(s). Use the Options feature to enter the newly copied X variables under "Prediction intervals for new observations", and BE SURE TO CLICK THE STORE FITS OPTION IN THIS WINDOW!
3. Copy the stored fits (PFITS) and paste them into the validation set. These are the $\hat{Y}_i$ in the formula above.
4. Calculate MSPR as shown and compare it to the MSE of the selected model fitted to the model-building set.

Points to Consider

Consider 1: Many statistical packages perform line-item (listwise) removal of missing data before beginning a stepwise procedure.
That is, the software creates a complete data set before the analysis by deleting any row of observations that contains a missing data point. Variable(s) with many missing data points can therefore greatly influence the final model selected. If your data contain many missing data points, you may want to run the procedure, return to the original data set, and create a second data set consisting of only the variables selected. This new data set will usually retain more rows. Using it, repeat your stepwise procedure.

Consider 2: Your data set may have a small number of observations relative to the number of variables. One rule of thumb is fewer than ten times as many observations as explanatory variables. In that case, the stepwise procedure may select a model containing all of the variables, i.e. the full model. This can also occur if a high degree of multicollinearity exists among the variables. This result is especially likely when either or both of these conditions exist and you opt to use low threshold values for F-to-enter and F-to-remove.

Consider 3: Most importantly, remember that the computer is just a tool to help you solve a problem. The statistical package has no intuition to apply to the situation, so the model selected by the software should not be treated as absolute. Apply your knowledge of the data and the research to the selected model, and revise it if you deem it necessary.

Choosing between Forward and Backward: When your data set is very large (many candidate variables) and you are not sure what to expect, use the Forward method to select a model. On the other hand, if you have a medium-sized set of variables from which you would prefer to eliminate a few, apply Backward. Don't be afraid to try both, especially if you started with Forward. In that case, the Forward method supplies a set of variables that you may then use as the starting model for a Backward process.
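The Forward-then-Backward idea can be sketched on the cement data from this lecture. This is a hedged, minimal Python illustration, not Minitab's implementation. To stay within the standard library, it compares partial F statistics against fixed F-to-enter and F-to-remove thresholds (an assumed value of 4.0) instead of Minitab's alpha-to-enter and alpha-to-remove p-values. All function names are hypothetical. For a single variable, the partial F equals the square of that variable's t-value in the Minitab output. On this data set, the thresholds should therefore lead to the same models as the Minitab runs above.

```python
# Hald cement data from the lecture (13 observations).
DATA = {
    "X1": [7, 1, 11, 11, 7, 11, 3, 1, 2, 21, 1, 11, 10],
    "X2": [26, 29, 56, 31, 52, 55, 71, 31, 54, 47, 40, 66, 68],
    "X3": [6, 15, 8, 8, 6, 9, 17, 22, 18, 4, 23, 9, 8],
    "X4": [60, 52, 20, 47, 33, 22, 6, 44, 22, 26, 34, 12, 12],
}
Y = [78.5, 74.3, 104.3, 87.6, 95.9, 109.2, 102.7, 72.5, 93.1, 115.9, 83.8, 113.3, 109.4]
N = len(Y)


def centered(v):
    m = sum(v) / len(v)
    return [a - m for a in v]


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [a - f * pc for a, pc in zip(M[r], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def sse(names):
    """SSE of Y regressed on an intercept plus the named predictors.

    Centering Y and every X absorbs the intercept and improves conditioning.
    """
    yc = centered(Y)
    cols = [centered(DATA[nm]) for nm in names]
    if not cols:
        return sum(a * a for a in yc)        # intercept-only model: SSE = SST
    xtx = [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(a * b for a, b in zip(ci, yc)) for ci in cols]
    beta = solve(xtx, xty)
    return sum((yc[i] - sum(bj * c[i] for bj, c in zip(beta, cols))) ** 2 for i in range(N))


def partial_f(model, var):
    """Partial F for `var` given the other variables in `model` (equals t squared)."""
    full = sse(model)
    reduced = sse([v for v in model if v != var])
    df_error = N - (len(model) + 1)          # n - p, where p counts the intercept
    return (reduced - full) / (full / df_error)


def forward(candidates, f_enter=4.0):
    """Add the variable with the largest partial F until none reaches f_enter."""
    model = []
    while len(model) < len(candidates):
        scores = {v: partial_f(model + [v], v) for v in candidates if v not in model}
        best = max(scores, key=scores.get)
        if scores[best] < f_enter:
            break
        model.append(best)
    return model


def backward(start, f_remove=4.0):
    """Drop the variable with the smallest partial F while it is below f_remove."""
    model = list(start)
    while model:
        scores = {v: partial_f(model, v) for v in model}
        worst = min(scores, key=scores.get)
        if scores[worst] >= f_remove:
            break
        model.remove(worst)
    return model


ALL = ["X1", "X2", "X3", "X4"]
fwd = forward(ALL)
print("forward:", fwd)
print("backward from full model:", backward(ALL))
print("backward from forward result:", backward(fwd))
```

Running Backward on the Forward result drops X4, whose partial F is about 1.37² given X1 and X2. This mirrors the suggestion above of feeding a Forward model into a Backward pass.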
All possible regressions: Different software packages will supply various criteria for making a selection. However, adjusted R² and Mallows' Cp are two very commonly provided statistics. The two are related, with Cp being more "punishing" to models where an additional variable is of little consequence. When selecting a model using the criteria, remember that you will very rarely get a model that ranks best on all indicators. Temper your decision by considering models that rank high on several of the criteria presented.

Again, remember to apply your judgment. The computer does not know how difficult it is to gather data on the different variables. For instance, the variable Weight in humans, especially when self-reported, can lead to reporting errors (men tend to over-report and women to under-report) or missing data (women are typically less likely than men to report their weight). A model selected by any of the methods discussed here might involve Weight. Another model with similar statistics but slightly less simple (i.e. including more variables) could be easier in terms of data gathering.
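To see how the criteria compare across all possible regressions, the formulas from the start of this chapter can be computed for every subset of the cement data. This is a hedged Python sketch, not Minitab's code. The helper names (`fit`, `criteria`) are hypothetical. The sketch centers the columns so the intercept drops out of the normal equations. It then uses the identity $h_i = 1/n + x_i^T(X_c^TX_c)^{-1}x_i$ for the leverages of a centered design with an intercept. Up to rounding, its R², R²adj, Cp, S and PRESS values should agree with the Best Subsets and Stepwise output shown earlier.

```python
import math
from itertools import combinations

# Hald cement data from the lecture (13 observations).
DATA = {
    "X1": [7, 1, 11, 11, 7, 11, 3, 1, 2, 21, 1, 11, 10],
    "X2": [26, 29, 56, 31, 52, 55, 71, 31, 54, 47, 40, 66, 68],
    "X3": [6, 15, 8, 8, 6, 9, 17, 22, 18, 4, 23, 9, 8],
    "X4": [60, 52, 20, 47, 33, 22, 6, 44, 22, 26, 34, 12, 12],
}
Y = [78.5, 74.3, 104.3, 87.6, 95.9, 109.2, 102.7, 72.5, 93.1, 115.9, 83.8, 113.3, 109.4]
N = len(Y)
VARS = ["X1", "X2", "X3", "X4"]


def centered(v):
    m = sum(v) / len(v)
    return [a - m for a in v]


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [a - f * pc for a, pc in zip(M[r], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit(names):
    """Return (SSE, PRESS) for Y regressed on an intercept plus the named predictors."""
    yc = centered(Y)
    cols = [centered(DATA[nm]) for nm in names]
    xtx = [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]
    beta = solve(xtx, [sum(a * b for a, b in zip(ci, yc)) for ci in cols])
    sse = press = 0.0
    for i in range(N):
        xi = [c[i] for c in cols]
        e = yc[i] - sum(bj * x for bj, x in zip(beta, xi))      # residual e_i
        # Leverage for a centered design plus intercept: h_i = 1/n + x_i'(Xc'Xc)^-1 x_i
        h = 1.0 / N + sum(a * b for a, b in zip(xi, solve(xtx, xi)))
        sse += e * e
        press += (e / (1.0 - h)) ** 2
    return sse, press


SST = sum(a * a for a in centered(Y))
MSE_K = fit(VARS)[0] / (N - len(VARS) - 1)          # MSE with all predictors in the model


def criteria(names):
    p = len(names) + 1                               # predictors plus intercept
    sse, press = fit(names)
    mse = sse / (N - p)
    return {
        "vars": names,
        "R2": 100 * (1 - sse / SST),
        "R2adj": 100 * (1 - mse / (SST / (N - 1))),
        "Cp": sse / MSE_K - (N - 2 * p),
        "S": math.sqrt(mse),
        "PRESS": press,
        "R2pred": 100 * (1 - press / SST),
    }


results = [criteria(list(c)) for k in range(1, len(VARS) + 1) for c in combinations(VARS, k)]

# Similar to Minitab's "Best 2": the two highest-R2 models for each number of variables.
for k in range(1, len(VARS) + 1):
    size_k = sorted((r for r in results if len(r["vars"]) == k), key=lambda r: r["R2"], reverse=True)
    for r in size_k[:2]:
        print(f"{'+'.join(r['vars']):12} R2={r['R2']:5.1f} R2adj={r['R2adj']:5.1f} "
              f"Cp={r['Cp']:6.1f} S={r['S']:.4f} PRESS={r['PRESS']:8.2f} R2pred={r['R2pred']:5.1f}")
```

Printing every criterion side by side makes it easy to see that no single model ranks best on all of them, which is the point made above.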