Statistics 512 Notes 11: - Wharton Statistics Department


Motivation for percentile bootstrap confidence intervals:

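Suppose there exists a monotone increasing transformation $U = m(\hat\theta)$ such that $U \sim N(\phi, c^2)$, where $\phi = m(\theta)$. We do not suppose that we know the transformation, only that one exists. Let $U_b^* = m(\hat\theta_b^*)$, where $\hat\theta_b^*$ is the estimate of $\theta$ from the $b$th bootstrap resample. Let $u_\beta^*$ be the $\beta$ sample quantile of the $U_b^*$'s, and let $\theta_\beta^*$ be the $\beta$ sample quantile of the $\hat\theta_b^*$'s. Since a monotone transformation preserves quantiles, we have $u_{\alpha/2}^* = m(\theta_{\alpha/2}^*)$. Also, since $U \sim N(\phi, c^2)$, the $\alpha/2$ quantile of $U$ is $\phi - z_{\alpha/2}c$. In the bootstrap world the observed $U$ plays the role of $\phi$, so $U_b^*$ is approximately $N(U, c^2)$ and hence $u_{\alpha/2}^* \approx U - z_{\alpha/2}c$. Similarly, $u_{1-\alpha/2}^* \approx U + z_{\alpha/2}c$.

Therefore,

$$
\begin{aligned}
P\big(\theta^*_{\alpha/2} \le \theta \le \theta^*_{1-\alpha/2}\big)
&= P\big(m(\theta^*_{\alpha/2}) \le m(\theta) \le m(\theta^*_{1-\alpha/2})\big) \\
&= P\big(u^*_{\alpha/2} \le \phi \le u^*_{1-\alpha/2}\big) \\
&\approx P\big(U - c z_{\alpha/2} \le \phi \le U + c z_{\alpha/2}\big) \\
&= P\left(-z_{\alpha/2} \le \frac{U - \phi}{c} \le z_{\alpha/2}\right) \\
&= 1 - \alpha.
\end{aligned}
$$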

An exact normalizing transformation will rarely exist, but there may exist approximate normalizing transformations. The advantage of the percentile method is that it automatically makes this transformation. We don’t need to know the correct transformation; all we assume is that such a transformation exists.
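The property behind this argument is that percentile endpoints commute with monotone increasing transformations: the percentile interval for $m(\theta)$ is just $m$ applied to the percentile interval for $\theta$. The R sketch below checks this numerically for a sample mean with $m = \log$. The simulated exponential data, the seed, and the names `percentile_ci` and `boot_means` are illustrative assumptions. Quantiles are taken with `type = 1` so that each endpoint is an actual order statistic of the bootstrap replicates.

```r
# Percentile endpoints commute with a monotone increasing transformation.
set.seed(1)
x <- rexp(50, rate = 1)   # hypothetical skewed sample (illustration only)
B <- 2000

# Nonparametric bootstrap replicates of the sample mean
boot_means <- replicate(B, mean(sample(x, replace = TRUE)))

# Percentile interval; type = 1 returns order statistics of the replicates
percentile_ci <- function(reps, alpha) {
  unname(quantile(reps, c(alpha / 2, 1 - alpha / 2), type = 1))
}

ci_theta <- percentile_ci(boot_means, 0.05)       # interval for the mean
ci_log   <- percentile_ci(log(boot_means), 0.05)  # interval for log(mean)

# Back-transforming the log-scale interval reproduces the original one
print(ci_theta)
print(exp(ci_log))
all.equal(exp(ci_log), ci_theta)
```

Because both intervals are built from the same order statistics, no knowledge of a normalizing transformation is needed; any monotone increasing $m$ would show the same agreement.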

Improvements on percentile bootstrap confidence intervals:

This has been a major topic of research during the last twenty years; see An Introduction to the Bootstrap by Efron and Tibshirani.

It is often a good idea to standardize the estimator $\hat\theta$ by an estimator of its scale, such as its estimated standard error. See Problem 5.9.5.
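One common way to act on this advice is the bootstrap-t (studentized) interval: for each resample compute $t_b^* = (\hat\theta_b^* - \hat\theta)/\widehat{se}_b^*$, then use the sample quantiles of the $t_b^*$'s in place of normal quantiles, giving $(\hat\theta - t^*_{1-\alpha/2}\,\widehat{se},\ \hat\theta - t^*_{\alpha/2}\,\widehat{se})$. The sketch below does this for a population mean, assuming $s/\sqrt{n}$ as the scale estimator; the function name `bootstrap_t_ci` and the simulated data are hypothetical, and this is not necessarily the construction intended in Problem 5.9.5.

```r
# Bootstrap-t (studentized) interval for a population mean.
bootstrap_t_ci <- function(x, B, alpha) {
  n <- length(x)
  theta_hat <- mean(x)
  se_hat <- sd(x) / sqrt(n)                 # scale estimate from the original sample
  t_star <- numeric(B)
  for (b in 1:B) {
    xb <- sample(x, n, replace = TRUE)
    # studentize each bootstrap estimate by its own scale estimate
    t_star[b] <- (mean(xb) - theta_hat) / (sd(xb) / sqrt(n))
  }
  q <- quantile(t_star, c(alpha / 2, 1 - alpha / 2), names = FALSE)
  # the upper t quantile gives the lower endpoint and vice versa
  c(lower = theta_hat - q[2] * se_hat, upper = theta_hat - q[1] * se_hat)
}

set.seed(2)
x <- rexp(40)                               # hypothetical skewed sample
ci <- bootstrap_t_ci(x, 2000, 0.10)         # 90% interval
print(ci)
```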

Parametric Bootstrap

Suppose we assume that $X_1, \ldots, X_n$ are iid with density/pmf $f(x;\theta)$, $\theta \in \Omega$. To obtain a bootstrap estimate of the standard error of a statistic $T_n(X_1, \ldots, X_n)$ or a confidence interval for a parameter, we have so far taken resamples from the empirical distribution $\hat F_n$. For a parametric model, a more accurate approach (provided the model is correct) is to take resamples from $f(x;\hat\theta)$, where $\hat\theta$ is a point estimate of $\theta$ based on $X_1, \ldots, X_n$.
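As a minimal sketch of the difference, the R code below assumes an exponential model $f(x;\theta) = \theta e^{-\theta x}$, estimates $\theta$ by the MLE $\hat\theta = 1/\bar X$, and computes a parametric bootstrap standard error of the sample median by drawing resamples from $f(x;\hat\theta)$ rather than from $\hat F_n$. The model, the statistic, and the helper name `parametric_boot_se` are illustrative choices, not part of the notes.

```r
# Parametric bootstrap standard error under an assumed exponential model.
parametric_boot_se <- function(x, stat, B) {
  n <- length(x)
  rate_hat <- 1 / mean(x)                      # MLE of the exponential rate
  # each resample is drawn from f(x; rate_hat), not from the empirical distribution
  reps <- replicate(B, stat(rexp(n, rate = rate_hat)))
  sd(reps)
}

set.seed(3)
x <- rexp(30, rate = 2)                        # simulated data for illustration
se_param <- parametric_boot_se(x, median, 5000)

# Nonparametric bootstrap (resampling from the empirical distribution) for comparison
se_nonparam <- sd(replicate(5000, median(sample(x, replace = TRUE))))
c(parametric = se_param, nonparametric = se_nonparam)
```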

Example: Odds ratio.

Linus Pauling, recipient of Nobel Prizes in Chemistry and in Peace, advocated the use of vitamin C for preventing the common cold. A Canadian experiment examined this claim, using 818 volunteers. At the beginning of the winter, subjects were randomly divided into two groups. The vitamin C group received a supply of vitamin C pills adequate to last through the entire cold season at 1,000 mg per day. The placebo group received an equivalent amount of inert pills. At the end of the cold season, each subject was interviewed by a physician who did not know the group to which the subject had been assigned. On the basis of the interview, the physician determined whether the subject had or had not suffered a cold during the period.

|           | Cold | No Cold | Total |
|-----------|------|---------|-------|
| Placebo   | 335  | 76      | 411   |
| Vitamin C | 302  | 105     | 407   |
| Total     | 637  | 181     | 818   |

An important quantity in comparing two groups with a binary outcome is the odds ratio. Let $X$ denote the event that an individual is exposed to a treatment (or lack of treatment) or harmful agent, and let $D$ denote the event that an individual becomes diseased. The odds of an individual contracting the disease given that she is exposed is

$$\text{Odds}(D \mid X) = \frac{P(D \mid X)}{1 - P(D \mid X)}.$$

Let $\bar X$ denote the event that the individual is not exposed to the treatment or harmful agent. The odds ratio

$$\Delta = \frac{\text{Odds}(D \mid X)}{\text{Odds}(D \mid \bar X)}$$

is a measure of the influence of exposure on subsequent disease. Here let $D$ = catching a cold, $X$ = receiving placebo, and $\bar X$ = receiving vitamin C.

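A natural point estimate of the odds ratio is

$$\hat\Delta = \frac{\hat P(D \mid X)\,/\,\big(1 - \hat P(D \mid X)\big)}{\hat P(D \mid \bar X)\,/\,\big(1 - \hat P(D \mid \bar X)\big)} = \frac{(335/411)\,/\,(1 - 335/411)}{(302/407)\,/\,(1 - 302/407)} \approx 1.53.$$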

In other words, the odds of catching a cold on the placebo regimen are estimated to be 1.53 times as large as the odds of catching a cold on the vitamin C regimen.
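The arithmetic simplifies because each group's total cancels within its odds: the estimated odds in a group are just (number with a cold)/(number without a cold). Written out with the counts from the table, the estimated odds ratio $\hat\Delta$ is the cross-product ratio:

$$
\hat\Delta = \frac{335/76}{302/105} = \frac{335 \cdot 105}{76 \cdot 302} = \frac{35175}{22952} \approx 1.5325.
$$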

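Parametric bootstrap confidence interval: The parametric model is that the number of colds in the placebo group has a binomial($n = 411$, $p = p_1$) distribution and the number of colds in the vitamin C group has a binomial($n = 407$, $p = p_2$) distribution. Point estimates for $p_1$ and $p_2$ are $\hat p_1 = 335/411 \approx 0.815$ and $\hat p_2 = 302/407 \approx 0.742$. Each bootstrap resample draws $X_1^* \sim \text{binomial}(411, \hat p_1)$ and $X_2^* \sim \text{binomial}(407, \hat p_2)$ and recomputes the odds ratio; the $\alpha/2$ and $1-\alpha/2$ sample quantiles of the resampled odds ratios are the endpoints of the percentile interval.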

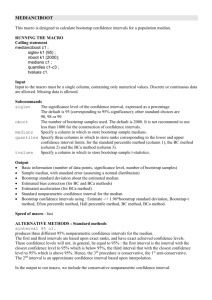

```r
# Parametric percentile bootstrap confidence interval for the odds ratio
bootstrapcioddsratiofunc=function(X1,n1,X2,n2,m,alpha){
  bootoddsratiosvector=rep(0,m);
  oddsratio= ((X1/n1)/(1-X1/n1))/((X2/n2)/(1-X2/n2));
  for(i in 1:m){
    # Resample X1, X2 using X1/n1 and X2/n2 as estimates of p1, p2
    X1boot=rbinom(1,n1,X1/n1);
    X2boot=rbinom(1,n2,X2/n2);
    bootoddsratiosvector[i]=((X1boot/n1)/(1-X1boot/n1))/((X2boot/n2)/(1-X2boot/n2));
  }
  bootoddsratiosordered=sort(bootoddsratiosvector);
  # Positions of the alpha/2 and 1-alpha/2 order statistics
  cutoff=floor((alpha/2)*(m+1));
  lower=bootoddsratiosordered[cutoff]; # lower CI endpoint
  upper=bootoddsratiosordered[m+1-cutoff]; # upper CI endpoint
  list(oddsratio=oddsratio,lower=lower,upper=upper);
}
```

```
> bootstrapcioddsratiofunc(335,411,302,407,10000,.05)
$oddsratio
[1] 1.532546

$lower
[1] 1.104468

$upper
[1] 2.175473
```

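A 95% parametric percentile bootstrap confidence interval for the odds ratio is (1.10, 2.18). Because the resamples are random, the endpoints will differ slightly from run to run.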

Coverage study: How well does the percentile bootstrap confidence interval work? Suppose $n_1 = 100$, $p_1 = 0.5$, $n_2 = 100$, $p_2 = 0.5$, so that the true odds ratio is $\Delta = 1$. We can use the Monte Carlo method to estimate how often the percentile bootstrap confidence interval will contain the true odds ratio $\Delta = 1$.

1. Generate two binomials $X_1 \sim \text{Binomial}(n_1 = 100, p_1 = 0.5)$ and $X_2 \sim \text{Binomial}(n_2 = 100, p_2 = 0.5)$.
2. Using $X_1, X_2$, construct a percentile bootstrap confidence interval for $\Delta$.
3. Check whether the true $\Delta = 1$ belongs to the confidence interval.
4. Repeat $m^*$ times and estimate the true coverage rate of the confidence interval as the proportion of times that the true $\Delta = 1$ belongs to the confidence interval.

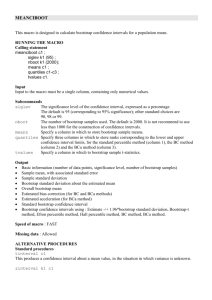

```r
# Monte Carlo estimate of coverage rate for a 90% confidence interval
mstar=1000;
n1=100;
p1=.5;
n2=100;
p2=.5;
deltaincivector=rep(0,mstar);
for(i in 1:mstar){
  X1=rbinom(1,n1,p1);
  X2=rbinom(1,n2,p2);
  bootci=bootstrapcioddsratiofunc(X1,n1,X2,n2,1000,.10);
  # 1 if the true odds ratio (Delta = 1) lies inside the interval, else 0
  deltaincivector[i]=(bootci$lower<1)*(bootci$upper>1);
}
estcoveragerate=sum(deltaincivector)/mstar;
```

```
> estcoveragerate
[1] 0.891
```
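Because the estimated coverage is itself a proportion based on $m^* = 1000$ independent Monte Carlo trials, its Monte Carlo standard error is approximately $\sqrt{\hat p(1-\hat p)/m^*}$, where $\hat p$ is the estimated coverage. A short R check of how far 0.891 is from the nominal 0.90 (the helper name `mc_se` is hypothetical):

```r
# Monte Carlo standard error of an estimated coverage rate (a binomial proportion).
mc_se <- function(phat, mstar) sqrt(phat * (1 - phat) / mstar)

phat <- 0.891                       # estimated coverage reported above
se <- mc_se(phat, 1000)
round(se, 4)                        # about 0.0099
c(lower = phat - 2 * se, upper = phat + 2 * se)
```

The nominal 0.90 lies within roughly two Monte Carlo standard errors of 0.891, so this run is consistent with close to nominal coverage in this setting; detecting small departures would require a larger $m^*$.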

Bootstrap hypothesis testing

To do a hypothesis test of $H_0: \theta = \theta_0$ vs. $H_1: \theta \neq \theta_0$ at significance level (size) $\alpha$ using the bootstrap, we can use the duality between confidence intervals and hypothesis tests: reject $H_0$ if and only if $\theta_0$ is not in a $(1-\alpha)$ bootstrap confidence interval.
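As a sketch of the duality, the R code below tests whether the odds ratio (denoted $\Delta$ here) equals $\Delta_0$, against the two-sided alternative, for the vitamin C data by forming a parametric percentile interval and rejecting when $\Delta_0$ falls outside it. It uses R's `quantile` instead of the order-statistic indexing in `bootstrapcioddsratiofunc`, and the names `odds_ratio` and `boot_test_by_ci` are hypothetical.

```r
# Level-alpha bootstrap test of H0: Delta = delta0 vs H1: Delta != delta0,
# carried out by checking whether delta0 lies in a (1 - alpha) percentile CI.
odds_ratio <- function(x1, n1, x2, n2) (x1 / (n1 - x1)) / (x2 / (n2 - x2))

boot_test_by_ci <- function(x1, n1, x2, n2, delta0, alpha, B = 10000) {
  # parametric resamples using x1/n1 and x2/n2 as estimates of p1, p2
  reps <- odds_ratio(rbinom(B, n1, x1 / n1), n1, rbinom(B, n2, x2 / n2), n2)
  ci <- quantile(reps, c(alpha / 2, 1 - alpha / 2), names = FALSE)
  list(ci = ci, reject = (delta0 < ci[1]) || (delta0 > ci[2]))
}

set.seed(4)
res <- boot_test_by_ci(335, 411, 302, 407, delta0 = 1, alpha = 0.05)
res$ci
res$reject
```

With these data the interval lies entirely above 1, so the hypothesis of no effect ($\Delta = 1$) is rejected at level 0.05, matching the interval (1.10, 2.18) obtained earlier.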

How about p-values?

The definition we have used:

For a test statistic $W(X_1, \ldots, X_n)$, consider a family of critical regions $\{C_\alpha\}$, indexed by $\alpha$, each with a different size. For the observed value $W_{obs}$ of the test statistic from the sample, consider the subset of critical regions for which we would reject the null hypothesis, $\{C_\alpha : W_{obs} \in C_\alpha\}$. The p-value is the minimum size of the tests in the subset $\{C_\alpha : W_{obs} \in C_\alpha\}$:

$$\text{p-value} = \min_{\{C_\alpha :\, W_{obs} \in C_\alpha\}} \text{Size}(\text{test with critical region } C_\alpha).$$

Equivalent definition:

Suppose we are using a test statistic $W(X_1, \ldots, X_n)$ for which we reject $H_0: \theta \in \omega_0$ for large values of the test statistic. The p-value is

$$\max_{\theta \in \omega_0} P_\theta\big(W(X_1, \ldots, X_n) \ge W_{obs}\big),$$

where $W_{obs}$ is the test statistic for the observed data.

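Using the bootstrap, we can estimate $\max_{\theta \in \omega_0} P_\theta\big(W(X_1, \ldots, X_n) \ge W_{obs}\big)$, where $\omega_0$ is the set of parameter values in the null hypothesis. However, we need to make sure that the distribution we resample from satisfies the null hypothesis. See Section 5.9.2.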
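A minimal sketch for the vitamin C data, testing $H_0: p_1 = p_2$ (equivalently, odds ratio 1) with the statistic $W = |\log(\text{estimated odds ratio})|$, which is large when the data disagree with $H_0$. To resample from a distribution in the null hypothesis, both groups are drawn from a binomial with the pooled estimate $\hat p = (X_1 + X_2)/(n_1 + n_2)$. This plugs in a single null value rather than maximizing over every $(p_1, p_2)$ with $p_1 = p_2$, so it is an approximation; the names `log_or` and `boot_pvalue` are hypothetical.

```r
# Bootstrap p-value for H0: p1 = p2 vs H1: p1 != p2, resampling under H0.
log_or <- function(x1, n1, x2, n2) log((x1 / (n1 - x1)) / (x2 / (n2 - x2)))

boot_pvalue <- function(x1, n1, x2, n2, B = 10000) {
  w_obs <- abs(log_or(x1, n1, x2, n2))   # large |log odds ratio| is evidence against H0
  p0 <- (x1 + x2) / (n1 + n2)            # pooled estimate: a distribution inside H0
  w_star <- abs(log_or(rbinom(B, n1, p0), n1, rbinom(B, n2, p0), n2))
  mean(w_star >= w_obs)                  # estimated P(W >= W_obs) under H0
}

set.seed(5)
pval <- boot_pvalue(335, 411, 302, 407)
pval
```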

Review of Chapter 5

Goal of statistics: A parameter $\theta$ (e.g., the mean) of a population is unknown; all we know is that $\theta \in \Omega$. Figure out something (make inferences) about $\theta$ based on a sample $X_1, \ldots, X_n$ from the population.

I. Three types of inferences:

A. Point estimation. Best estimate of $\theta$ based on $X_1, \ldots, X_n$. Criterion for evaluating point estimators: mean squared error.

B. Confidence intervals. Range of plausible values for $\theta$ based on $X_1, \ldots, X_n$.

C. Hypothesis testing: Decide whether $\theta \in \omega_0$ or $\theta \in \omega_1$.

II. Monte Carlo method: Method for estimating how well methods that are supposed to work well in large samples actually work in finite samples.

III. Bootstrap procedures: Methods for estimating standard errors and forming confidence intervals for complicated point estimates.
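As a small illustration that ties I.A and II together, the R sketch below uses the Monte Carlo method to estimate the mean squared error of the sample mean and the sample median as estimators of $\theta$ for $N(\theta, 1)$ data with $n = 25$. The model, sample size, and number of replications are illustrative assumptions.

```r
# Monte Carlo estimate of the MSE of two point estimators of a normal mean.
set.seed(6)
n <- 25           # sample size
M <- 10000        # Monte Carlo replications
theta <- 0        # true parameter value

# Each column holds (mean, median) computed from one simulated sample
est <- replicate(M, {
  x <- rnorm(n, mean = theta, sd = 1)
  c(mean = mean(x), median = median(x))
})

mse <- rowMeans((est - theta)^2)
print(mse)        # the mean's exact MSE is 1/n = 0.04
```

The median's estimated MSE comes out larger, consistent with the sample mean being the more efficient estimator for normal data.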