Finite Difference Method

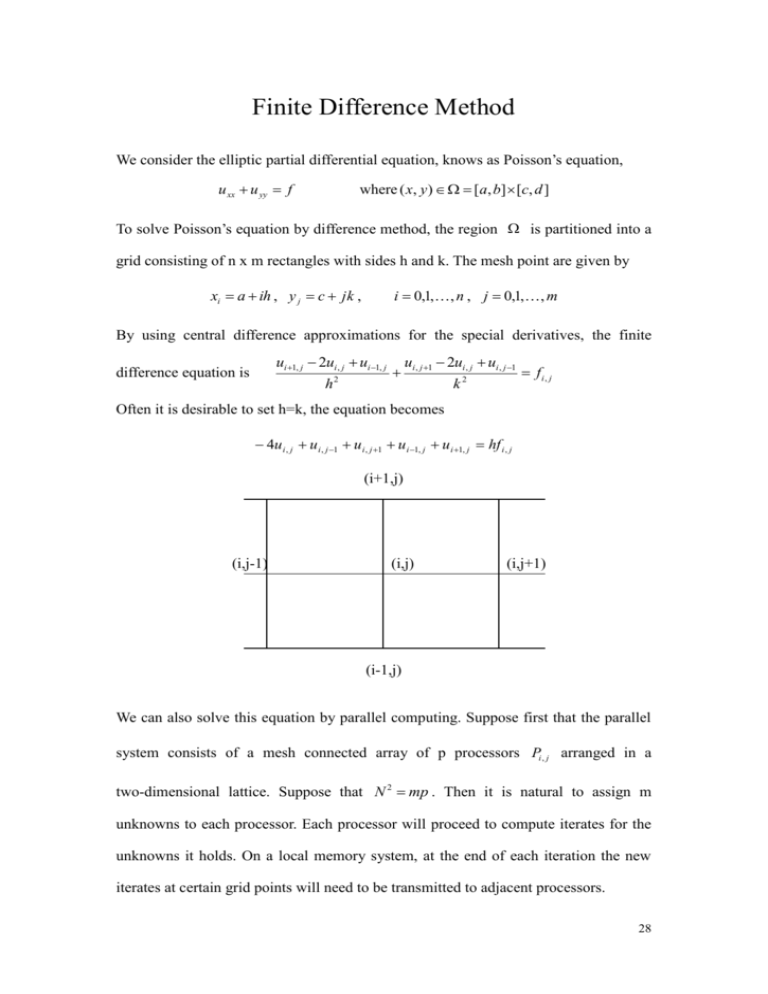

We consider the elliptic partial differential equation known as Poisson's equation,

$$u_{xx} + u_{yy} = f, \qquad (x, y) \in [a, b] \times [c, d].$$

To solve Poisson's equation by the finite difference method, the region is partitioned into a grid consisting of $n \times m$ rectangles with sides $h$ and $k$. The mesh points are given by

$$x_i = a + ih, \quad y_j = c + jk, \qquad i = 0, 1, \ldots, n, \quad j = 0, 1, \ldots, m.$$

Using central difference approximations for the spatial derivatives, the finite difference equation is

$$\frac{u_{i+1,j} - 2u_{i,j} + u_{i-1,j}}{h^2} + \frac{u_{i,j+1} - 2u_{i,j} + u_{i,j-1}}{k^2} = f_{i,j}.$$

Often it is desirable to set $h = k$, in which case the equation becomes

$$4u_{i,j} - u_{i,j+1} - u_{i,j-1} - u_{i+1,j} - u_{i-1,j} = -h^2 f_{i,j}.$$

[Figure: five-point stencil centred at (i, j), with neighbours (i+1, j), (i-1, j), (i, j+1) and (i, j-1).]

We can also solve this equation by parallel computing. Suppose first that the parallel system consists of a mesh-connected array of $p$ processors $P_{i,j}$ arranged in a two-dimensional lattice. Suppose that $N^2 = mp$. Then it is natural to assign $m$ unknowns to each processor. Each processor computes the iterates for the unknowns it holds. On a local-memory system, at the end of each iteration the new iterates at certain grid points must be transmitted to adjacent processors. We call "internal boundary values" those values of $u_{ij}$ that other processors need at the next iteration and that must therefore be transmitted.

[Figure: a processor $P_{ij}$ holding a rectangular block of grid points.]

For example, consider 16 unknowns distributed over four processors, with $P_{21}$ and $P_{22}$ in the upper row and $P_{11}$ and $P_{12}$ in the lower row:

| | Left | Right |
|---|---|---|
| Upper | $P_{21}$: $u_{41}, u_{42}, u_{31}, u_{32}$ | $P_{22}$: $u_{43}, u_{44}, u_{33}, u_{34}$ |
| Lower | $P_{11}$: $u_{21}, u_{22}, u_{11}, u_{12}$ | $P_{12}$: $u_{23}, u_{24}, u_{13}, u_{14}$ |

For this situation, the computation in processor $P_{22}$ would be:

compute $u_{33}^{k+1}, u_{34}^{k+1}$; send them to $P_{12}$;
compute $u_{43}^{k+1}$; send $u_{33}^{k+1}, u_{43}^{k+1}$ to $P_{21}$.

From the above, we see that there are different types of connection between the processors. The type of connection is chosen according to the structure of the matrix. Examples: a star, a ring, a cube.

Example 9

We have the Poisson equation

$$u_{xx} + u_{yy} = 1, \qquad (x, y) \in [0, 1] \times [0, 1].$$

The region is partitioned into a grid consisting of 240 × 240 rectangles.
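To make the iteration for Example 9 concrete, the following is a minimal single-processor sketch of the Jacobi update derived from the five-point equation with h = k. It is not the program used for the measurements in this report. The function name `jacobi_poisson`, the stopping tolerance and the zero boundary values are assumptions introduced for illustration, since the text does not state the boundary conditions.

```python
def jacobi_poisson(n, f=1.0, tol=1e-8, max_iter=100_000):
    """Jacobi iteration for u_xx + u_yy = f on [0,1] x [0,1], n x n squares (h = k = 1/n).

    Assumption (not stated in the text): u = 0 on the whole boundary.
    Returns the grid values u[i][j] and the number of sweeps performed.
    """
    h = 1.0 / n
    u = [[0.0] * (n + 1) for _ in range(n + 1)]  # mesh points, boundary included
    sweep = 0
    for sweep in range(1, max_iter + 1):
        new = [row[:] for row in u]  # Jacobi reads only the previous iterate
        change = 0.0
        for i in range(1, n):
            for j in range(1, n):
                # Rearranged five-point equation:
                # 4 u_ij - (sum of the four neighbours) = -h^2 f
                new[i][j] = 0.25 * (u[i + 1][j] + u[i - 1][j]
                                    + u[i][j + 1] + u[i][j - 1] - h * h * f)
                change = max(change, abs(new[i][j] - u[i][j]))
        u = new
        if change < tol:
            break
    return u, sweep


if __name__ == "__main__":
    # Small grid for a quick run; n = 240 is slow in pure Python.
    u, sweeps = jacobi_poisson(8)
    print(f"centre value {u[4][4]:.4f} after {sweeps} sweeps")
```

Because every new value depends only on the previous iterate, the double loop can be split into blocks of grid points, one block per processor, provided the values along block edges are exchanged after each sweep.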
Result:

| No. of processors | 1 | 4 | 9 | 16 |
|---|---|---|---|---|
| Time used in each processor calculating x (s) | 17.64 | 12.17 | 5.57 | 3.27 |
| Total time used in this function (s) | 21.08 | 13.4 | 7.42 | 4.51 |

(1) In the Scientific Computing Lab, the results show that the total time used by this method decreases as more processors are used. For example, when 9 computers are used, the total time is 7.42 s, about three times faster than with a single processor. The time needed to calculate x is shortened to 5.57 s (reported as 5.24 + 0.23), because each computer only needs to calculate 1/9 of x. The time spent in 'Send' and 'Receive' is very small, and most of the time is spent in calculation. So we find that a parallel algorithm can be applied to this method.

The speedup of a parallel algorithm is

$$S_p = \frac{\text{execution time for a single processor}}{\text{execution time using } p \text{ processors}},$$

and the efficiency is

$$E_p = \frac{S_p}{p}.$$

| p | 1 | 4 | 9 | 16 |
|---|---|---|---|---|
| Speedup, $S_p$ | 1 | 1.5731 | 2.841 | 4.6741 |
| Efficiency, $E_p$ | 1 | 0.3933 | 0.3157 | 0.2921 |

We find that the efficiency of the algorithm decreases slowly. Since the number of processors in our network of workstations is limited, we need to use a PC cluster to see how the curve develops when more processors are used. The upper line shows the ideal case where $E_p = 1$ (that is, $S_p = p$).

(2) In the TDG Cluster, the result is similar. The number of processors is increased, and we can use up to 100 CPUs.

| No. of processors, p | 1 | 4 | 9 | 16 | 25 | 36 | 64 | 100 |
|---|---|---|---|---|---|---|---|---|
| Total time used in this function (s) | 39.68 | 16.17 | 7.8 | 4.25 | 2.86 | 2.28 | 1.92 | 1.85 |
| Speedup, $S_p$ | 1 | 2.4539 | 5.0872 | 9.3365 | 13.874 | 17.4035 | 20.0667 | 21.4486 |
| Efficiency, $E_p$ | 1 | 0.6135 | 0.5652 | 0.5835 | 0.555 | 0.4834 | 0.3135 | 0.2145 |

From the table, we see that the efficiency decreases gradually, which means that $S_p$ grows slowly. We notice that the speedup curve becomes nearly horizontal at the end. This is because, when more than 70 processors are used, the time used in calculation stays around 2 seconds and the time used in message passing does not change, so the total time is almost the same.
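The speedup and efficiency figures follow directly from the measured total times. As a small worked check, the sketch below recomputes them for the network-of-workstations measurements (p = 1, 4, 9, 16). The helper name `speedup_and_efficiency` and the dictionary layout are assumptions made for this illustration only.

```python
def speedup_and_efficiency(times):
    """Map each processor count p to (S_p, E_p).

    `times` maps p -> measured total time in seconds and must contain p = 1.
    S_p = T_1 / T_p and E_p = S_p / p.
    """
    t1 = times[1]
    return {p: (t1 / t, t1 / (t * p)) for p, t in sorted(times.items())}


# Total times (s) measured on the network of workstations, from the report.
workstation_times = {1: 21.08, 4: 13.4, 9: 7.42, 16: 4.51}

for p, (s, e) in speedup_and_efficiency(workstation_times).items():
    print(f"p = {p:3d}  S_p = {s:.4f}  E_p = {e:.4f}")
```

The same function can be applied to the cluster timings to see how quickly the efficiency falls once the total time stops decreasing.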
Conclusion

In conclusion, a parallel algorithm can be applied to the Jacobi method. We can connect several processors to calculate x at the same time, which decreases the time used. The Gauss-Seidel method, however, needs the most recent values as soon as they are available, so the processors cannot run the program at the same time and a parallel algorithm cannot be applied to it.

For the Jacobi method, we need to pay attention to the time used in message passing, because the time needed to broadcast or gather x is very long. The solution is to exploit the structure of the matrix. For example, for a sparse matrix we only need to send the necessary data to the adjacent processors, which decreases the time used in transferring data.

In addition, we should use this parallel algorithm only if the program needs extensive time on one processor, i.e. the matrix is very large or many iterations are needed to converge. In those cases the gain in efficiency is more evident.

The main factors that cause degradation from perfect speedup are:

(i) Lack of a perfect degree of parallelism in the algorithm and/or lack of perfect load balance;
(ii) Communication, contention, and synchronization time.

By load balancing we mean the assignment of tasks to the processors of the system so as to keep each processor doing useful work as much as possible. We expect to need a parallel machine only for those problems too large to run in a feasible time on a single processor.

Appendix

Total time used in different methods

Example 1: Jacobi method (matrix size: 6x6); Gauss-Seidel method (matrix size: 6x6)
Example 2: Jacobi method (matrix size: 6x6). Comparing the cases when 1, 3 clusters are used.
Example 3: Jacobi method (sparse matrix: 57600x57600). Comparing the cases when 1, 2, 4, 8 clusters are used.
Example 4: Jacobi method (sparse matrix: 921600x921600). Comparing the cases when 1, 2, 4, 8 clusters are used.
Example 5: Gauss-Seidel method (matrix size: 6x6). Comparing the cases when 1, 3 clusters are used.
Example 6: Gauss-Seidel method (sparse matrix: 57600x57600). Comparing the cases when 1, 5 clusters are used.
Example 7: Conjugate gradient method (sparse matrix, diagonal = 5). Comparing the cases when 1, 2, 4 clusters are used.
Example 8: Conjugate gradient method (sparse matrix, diagonal = 4). Comparing the cases when 1, 2, 4, 8 clusters are used.
Example 9: Finite difference method (Poisson's matrix). Comparing the cases when 1, 4, 9, 16, 25, 36, 64, 100 clusters are used.

Profile Report

(1) Network of workstations
(2) PC cluster