On the batch means approach by R. H. Storer, Lehigh Univ.

advertisement

IE 305 Simulation

Distributed 1/29/03

Robert H. Storer

http://www.lehigh.edu/~rhs2/ie305/ie305.html

Output Analysis of a Single System

Single Run Estimates

It may be desirable to estimate performance (and assess estimate

accuracy) from one single (probably long) run of a simulation. We

have seen that ARENA can do this, but that it often fails in its

attempts.

There are a couple of reasons why we might want to use only a

single run for this.

1.We will not have to “throw away” data from many initial

transients, thus saving computer time in steady state

simulations.

2.We may be able to provide useful statistics to people who

do not have the statistical background to understand

replicates, correlation, etc.

Recall the basic problem:

Suppose we are interested in average time in queue. Each

customer incurs a time in queue. We “write” each customer’s time

in queue to a data file.

Let Xi be the time in queue of customer i.

We could calculate and average X and sample variance S2 from

this data. We could theoretically calculate a confidence interval.

What is wrong?

The data points are not independent, but rather are “correlated”.

The estimate S2 will be biased, and the confidence interval wrong.

Actually, the Xi values are “correlated with themselves”. Thus we

refer to this phenomenon as “autocorrelation”. In particular, Xi

will be highly correlated with Xi+1.

We would expect to see much lower correlation between, say, Xi

and Xi+200 .

How can we deal with this “autocorrelation” problem?

Batch Means

Suppose we divide the run into batches of data points. Then we

take the average of the data points in each batch. For example, in

the graph below, we have plotted time in queue over time, grouped

in batches, and found the average of each batch.

Batch 1

Warm-up

Batch 2

Batch 3

Batch 4

Discard

The idea is that if the batches are big enough, the batch averages

will not be highly correlated.

The batch averages will be correlated somewhat.

For example, the last observation in batch one will be correlated to

the first observation in batch two. However, the idea is that the

correlation “washes out”.

Thus while there is correlation between batch averages, it will be

small, and thus can (hopefully) be ignored.

Once we have the batch averages, we can treat them in the same

fashion as if they were averages from independent replicates.

Of course we have to make sure that the batches are big enough so

that there is little correlation between batch averages.

How big is big enough?

Before we answer this question, we need to know a little more

about autocorrelation.

Review of Covariance and Correlation

Given two random variables X and Y, and a Joint distribution,

fXY(x,y), we know how to find E(X), E(Y), V(X), and V(Y).

We simply calculate them as before from the marginal

distributions of X and Y.

The means and variances of the two random variables alone do not

capture an important behavioral feature, namely the degree to

which the two random variables are related.

i.e. are they independent, or how dependent are they? There are

two essentially equivalent measures that attempt to capture this

relationship, covariance and correlation.

The covariance between two random variables X and Y is given

by:

COV(X,Y) = E{[X-E(X)](Y-E(Y)]}

=

(x-x)(y-y)fXY(x,y)dxdy

Note that the covariance between a random variable and itself is:

COV(X,X) = E{[X-E(X)](X-E(X)]} = E{[X-E(X)]2} = V(X)

The correlation between X and Y is related to the covariance and is

given by:

XY = COV(X,Y) / V ( X )V (Y )

The numerical value of covariance is not of much use in

determining to what degree the random variables are related. The

correlation is a “scaled” version of covariance.

Independent random variables have a correlation of zero indicating

that they are unrelated. If two random variables have a correlation

of 1, then they are “perfectly correlated”. For example, if X=Y

then the correlation is 1. If the correlation is -1, then they are

perfectly negatively correlated as would be the case if X = -Y.

Imagine we took observations from the X and Y, and then plotted

the observations on a scatterplot. Each observation is represented

by one point in the plot.

y

y

x

0<XY<1

x

-1<XY<0

y

y

XY = 1

x

XY = -1

x

The above plots show what it is about a joint distribution that

correlation measures: the degree to which the two random

variables are related.

Example

For each of the following real world random variables, indicate

what you think the correlation will be:

a) Let X and Y represent the IQ and height in inches of a randomly

selected person. It seems clear that these two random variables are

unrelated and thus independent. If two random variables are

independent, then = 0.

b) X = persons height in inches, and Y = there height in feet.

If you know the value of X, the value of Y can be determined

precisely. As X increases, Y increases, thus = 1.

c) X = the height of a tractor trailer, Y = the clearance of the

tractor trailer under a 14 foot bridge.

Again knowing X allows you to calculate Y precisely. However,

as X increases, Y decreases. Thus = -1.

d) X = a person’s height, Y = a person’s weight.

Taller people tend to be heavier, but it is not a deterministic

relationship.

Thus 0<<1.

e) X = the number of IE 305 classes skipped, Y = the final grade in

IE 305.

I assure you that -1 < < 0 !

Sample Correlation Coefficient

Given a data set X1,...Xn and Y1,...Yn we can estimate the

correlation using the sample correlation coefficient given by:

N

Y Y X

i

rXY

i

X

i 1

N

N

2

Y

Y

X

X

i i

2

i 1

i 1

Autocovariance and Autocorrelation

The “autocovariance at lag k” is denoted by k and is defined as

COV(Xi , Xi+k)

Thus 0 is the variance of X. 1 is the covariance between

consecutive observations in the series, etc.

Similarly, the autocorrelation at lag k is k = k / 0

A “correlogram” is a plot of k vs. k. A typical correlogram might

look like this:

k

k

We can construct a correlogram in Excel (see posted example).

Variance of the Average of Autocorrelated Data

A general result from statistics (which I have no intention of

proving now) is that if we take the average of the Xi values, the

variance of the average is given by:

V( X ) = (0/n)[ 1 + 2 (1 - k/n)k]

k

Example

Suppose we have data on the time in queue for 100 consecutive

customers. Suppose the time in queue random variable has the

following parameters:

= 50

2 = 0 = 4

1 = .94, 2 = .86, 3 = .71, 4 = .65, 5 = .55,

6 = .43, 7 = .28, 8 = .19, 9 = .05

All other autocorrelations are 0

Then we can compute the true variance of X using the formula

above:

n

V( X ) = (0/n)[ 1 + 2 (1 - k/n)k]

i 1

= (4/100){ 1 + 2[( .94)(.99) + ( .86)(.98) + ( .71)(.97)

+ ( .65)(.96) + ( .55)(.95) + ( .43)(.94) +( .28)(.93) +( .19)(.92)

+( .05)(.91) ]}

=(4/100)(1 + 2(4.4935)) = (4/100)(9.987)

If we ignored correlation we would use the usual

V( X ) = 2/n = 4/100 = 0.04

Thus our estimate ignoring correlation is off by a factor of

almost 10 (an order of magnitude!)

We form the usual confidence interval X k StandardError( X )

(Incorrectly) assuming independence we would get roughly:

50 (1.96)Sqrt(4/100) = 50 0.392

When the correct interval would be

50 (1.96)Sqrt((4/100)(9.987)) = 50 0.632

Note that if we computed S2 using the usual formula, it estimates

only 0. Thus we see that S2 can seriously under estimate the true

variance as we alluded to before. This again illustrates why

autocorrelation can really mess up the standard approach!

Aside: You may observe that the equation above will give a good,

unbiased estimate of the true variance. We would have to plug our

estimates of correlation rk into the equation in place of the k

(which are unknown). Using this variance estimate, we can

construct a fairly valid confidence interval on the mean. This

general approach forms the basis of what is called the

“Standardized Time Series” approach to output analysis.

From the correlogram picture above we can see that after roughly

lag 10, the correlation has died out. This gives us some indication

how big our batches have to be! Certainly batches of 10 is not big

enough.

Rule of Thumb

It is recommended that the number of observations per batch be at

least 4 times the number of observations before correlation dies

out.

Thus in the above example, we need at least 40 observations per

batch.

In general, 4*Number of lags till washout is a rule of thumb

minimum number of observations per batch.

Time Persistent Variables

The method of batch means works pretty much the same way for

time persistent variables as it does for observation based (i.e. tally)

variables.

Imagine the plot of the time persistent variable over time. We

divide time into intervals. Within each interval, we take the

average by integrating over the interval. We then use each average

as the batch mean.

To decide how long (i.e. how much time) each batch should be, the

rule of thumb is that the batch should be long enough so that the

variable changes value at least 40 times.

Correlation Test and ARENA Output

You may have observed that if you run a single replicate of a

simulation, ARENA attempts to provide a confidence interval on

certain results.

The way ARENA does this is by the method of batch means.

ARENA divides the output data into 20 batches, and then uses the

20 batch means to calculate a confidence interval.

It is possible to examine the autocorrelation in the data, and

determine if it is too high for the method of batch means (with a

specified batch size) to yield accurate results.

If your run is too short so that the 20 batches are not sufficient to

“wash out” the correlation, ARENA will print a message under the

“confidence interval half width” column indicating this.

Example (Question 9 from review problems)

Batch means on the number in queue from a simulation are

collected and shown below. As this is a steady state simulation,

you are interested in estimating the mean number in queue.

2.1 5.6 8.0 7.5 7.2 7.0 7.4 7.8 8.3 8.8 8.4 8.0

a) Provide an estimate of the steady state number in queue. Be

sure and explain your reasoning thoroughly.

Examining the batch means, it appears that there may be an initial

transient. The first two values (2.1 and 5.6) are significantly lower

than the rest of the batch means. Thus it would seem reasonable to

“chop off” the first two batches and use only last ten values:

8

7.5

7.2

7

7.4

7.8

8.3

8.8

8.4

8

average= 7.84

b) Provide a 95% confidence interval on your estimate in part a)

assuming independent batch means.

average=

std dev=

8

7.5

7.2

7

7.4

7.8

8.3

8.8

8.4

8

7.84

0.56999

t/2,n-1 = t0.05/2,10-1 = t0.025,9 = ???

95% CI = 7.84 (t0.025,9)(0.56999) / 10

95% CI = 7.84 (2.26)(0.56999) / 10

c) Do you think the assumption of independence is justified?

Support your answer. Points will be awarded on the basis of the

strength of your argument.

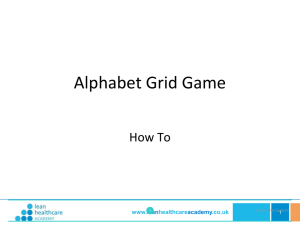

TIme Plot of Example data

10

9

8

7

6

5

4

3

2

1

0

1

2

3

4

5

6

7

8

9

10

Far from appearing random, the plot above would seem to indicate

that there exists positive correlation. A correlogram would verify

this.