Solution to Assignment 7

advertisement

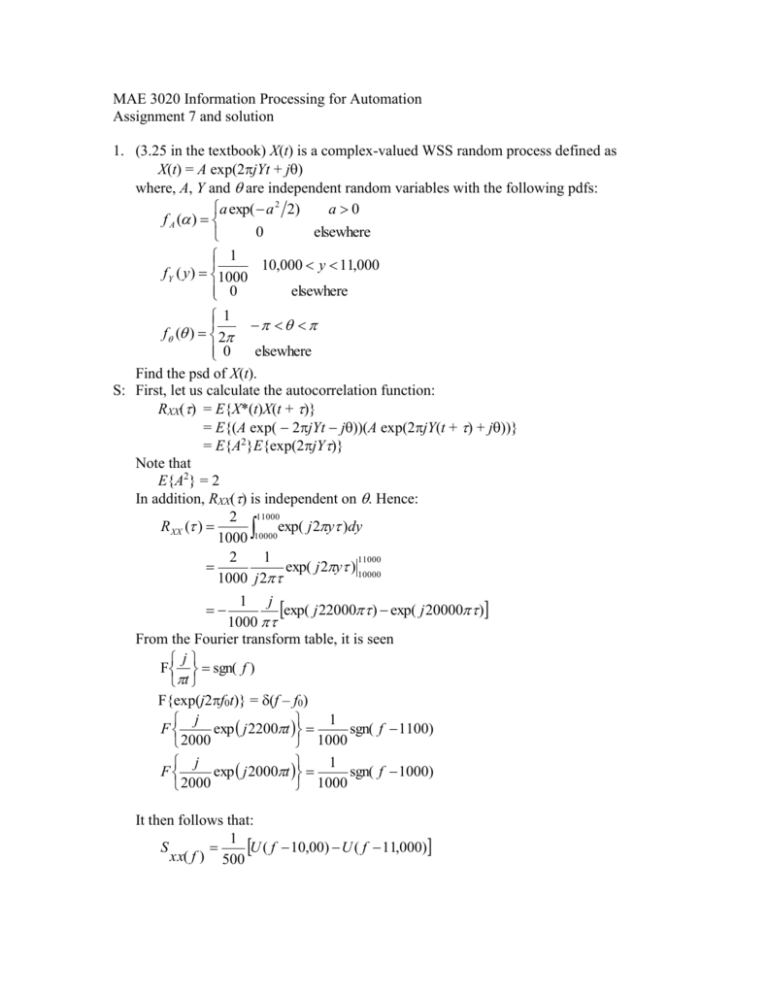

MAE 3020 Information Processing for Automation

Assignment 7 and solution

1. (3.25 in the textbook) X(t) is a complex-valued WSS random process defined as

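$$X(t) = A\exp\big(j(2\pi Y t + \Theta)\big)$$

where A, Y, and Θ are independent random variables with the following pdfs:

$$f_A(a) = \begin{cases} a\exp(-a^2/2), & a \ge 0 \\ 0, & \text{elsewhere} \end{cases}$$

$$f_Y(y) = \begin{cases} \dfrac{1}{1000}, & 10{,}000 \le y \le 11{,}000 \\ 0, & \text{elsewhere} \end{cases}$$

$$f_\Theta(\theta) = \begin{cases} \dfrac{1}{2\pi}, & -\pi \le \theta \le \pi \\ 0, & \text{elsewhere} \end{cases}$$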

Find the psd of X(t).

S: First, let us calculate the autocorrelation function:

$$\begin{aligned}
R_{XX}(\tau) &= E\{X^*(t)X(t+\tau)\} \\
&= E\{A\exp(-j(2\pi Y t + \Theta))\,A\exp(j(2\pi Y(t+\tau) + \Theta))\} \\
&= E\{A^2\}\,E\{\exp(j2\pi Y\tau)\}
\end{aligned}$$

Note that, for the given $f_A(a)$,

$$E\{A^2\} = \int_0^\infty a^3\exp(-a^2/2)\,da = 2$$

In addition, $R_{XX}(\tau)$ depends on neither $t$ nor $\Theta$, since both cancel in the product. Hence:

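$$\begin{aligned}
R_{XX}(\tau) &= \frac{2}{1000}\int_{10000}^{11000}\exp(j2\pi y\tau)\,dy \\
&= \frac{2}{1000}\left[\frac{\exp(j2\pi y\tau)}{j2\pi\tau}\right]_{10000}^{11000} \\
&= \frac{-j}{1000\pi\tau}\left[\exp(j22000\pi\tau) - \exp(j20000\pi\tau)\right]
\end{aligned}$$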
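As a numerical sanity check on this closed form, the Python sketch below estimates $R_{XX}(\tau)$ as an ensemble average of $X^*(t)X(t+\tau)$ over random draws of $A$, $Y$ and $\Theta$, and compares it with the expression above. The helper names, sample size, seed and the choice $t = 0.3$ are illustrative assumptions; $A$ is drawn by inverse-CDF sampling of $f_A(a) = a\exp(-a^2/2)$.

```python
import cmath
import math
import random


def sample_rayleigh(rng):
    # Inverse-CDF sampling of f_A(a) = a exp(-a^2/2), whose CDF is F(a) = 1 - exp(-a^2/2)
    return math.sqrt(-2.0 * math.log(1.0 - rng.random()))


def r_closed_form(tau):
    # R_XX(tau) = (-j / (1000 pi tau)) [exp(j 22000 pi tau) - exp(j 20000 pi tau)]
    if tau == 0:
        return 2.0 + 0j  # limit as tau -> 0 is E{A^2} = 2
    return (-1j / (1000 * math.pi * tau)) * (
        cmath.exp(1j * 22000 * math.pi * tau) - cmath.exp(1j * 20000 * math.pi * tau)
    )


def r_monte_carlo(tau, t=0.3, n=50_000, seed=1):
    # Ensemble average of X*(t) X(t + tau) over independent draws of (A, Y, Theta)
    rng = random.Random(seed)
    total = 0j
    for _ in range(n):
        a = sample_rayleigh(rng)
        y = rng.uniform(10_000, 11_000)
        theta = rng.uniform(-math.pi, math.pi)
        x_t = a * cmath.exp(1j * (2 * math.pi * y * t + theta))
        x_t_tau = a * cmath.exp(1j * (2 * math.pi * y * (t + tau) + theta))
        total += x_t.conjugate() * x_t_tau
    return total / n


for tau in (1e-4, 5e-4):
    print(tau, r_closed_form(tau), r_monte_carlo(tau))
```

Running `r_monte_carlo` with different values of `t` (same seed) returns the same estimate up to rounding, which is another way of seeing that $R_{XX}$ depends only on $\tau$.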

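From the Fourier transform table, it is seen that

$$\mathcal{F}\left\{\frac{1}{\pi t}\right\} = -j\,\mathrm{sgn}(f), \qquad \mathcal{F}\{\exp(j2\pi f_0 t)\} = \delta(f - f_0)$$

Since multiplying by $\exp(j2\pi f_0 t)$ convolves the spectrum with $\delta(f - f_0)$, i.e. shifts it by $f_0$:

$$\mathcal{F}\left\{\frac{-j}{1000\pi t}\exp(j22000\pi t)\right\} = -\frac{1}{1000}\,\mathrm{sgn}(f - 11{,}000)$$

$$\mathcal{F}\left\{\frac{-j}{1000\pi t}\exp(j20000\pi t)\right\} = -\frac{1}{1000}\,\mathrm{sgn}(f - 10{,}000)$$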

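It then follows that:

$$S_{XX}(f) = \frac{1}{1000}\left[\mathrm{sgn}(f - 10{,}000) - \mathrm{sgn}(f - 11{,}000)\right] = \frac{1}{500}\left[U(f - 10{,}000) - U(f - 11{,}000)\right]$$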
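To cross-check the transform pair, the following sketch numerically inverts $S_{XX}(f)$ with a midpoint rule and compares the result with the closed-form $R_{XX}(\tau) = \frac{-j}{1000\pi\tau}[\exp(j22000\pi\tau) - \exp(j20000\pi\tau)]$; at $\tau = 0$ it should return the total power $E\{A^2\} = 2$. The function names and the number of integration steps are illustrative assumptions.

```python
import cmath
import math


def s_xx(f):
    # S_XX(f) = (1/500)[U(f - 10000) - U(f - 11000)]
    return 1.0 / 500.0 if 10_000 <= f < 11_000 else 0.0


def r_from_psd(tau, steps=20_000):
    # Inverse Fourier transform R(tau) = integral of S_XX(f) exp(j 2 pi f tau) df,
    # evaluated with the midpoint rule over the band where S_XX is nonzero
    lo, hi = 10_000.0, 11_000.0
    df = (hi - lo) / steps
    total = 0j
    for i in range(steps):
        f = lo + (i + 0.5) * df
        total += s_xx(f) * cmath.exp(2j * math.pi * f * tau)
    return total * df


def r_closed_form(tau):
    # Closed-form autocorrelation; its tau -> 0 limit is 2
    if tau == 0:
        return 2.0 + 0j
    return (-1j / (1000 * math.pi * tau)) * (
        cmath.exp(1j * 22000 * math.pi * tau) - cmath.exp(1j * 20000 * math.pi * tau)
    )


print("total power:", r_from_psd(0.0).real)
for tau in (1e-4, 3e-4):
    print(tau, r_from_psd(tau), r_closed_form(tau))
```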

2. (3.35 in the textbook) Let Z(t) = x(t) + Y(t) where x(t) is a deterministic, periodic

power signal with a period T and Y(t) is a zero mean ergodic random process. Find

the autocorrelation function and also the psd function of Z(t) using time averages.

S: Z(t) = x(t) + Y(t)

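$$\begin{aligned}
R_{ZZ}(\tau) &= \lim_{T_1\to\infty}\frac{1}{T_1}\int_{-T_1/2}^{T_1/2}\left[x(t)x(t+\tau) + x(t)Y(t+\tau) + Y(t)x(t+\tau) + Y(t)Y(t+\tau)\right]dt \\
&= \frac{1}{T}\int_{-T/2}^{T/2}x(t)x(t+\tau)\,dt + R_{YY}(\tau)
\end{aligned}$$

Here the averaging interval is written $T_1$ to keep it distinct from the period $T$. Because $x(t)x(t+\tau)$ is periodic in $t$, its time average can be taken over one period, and the time average of $Y(t)Y(t+\tau)$ equals $R_{YY}(\tau)$ because $Y(t)$ is ergodic.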

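Note that the cross terms drop out because Y(t) has zero mean and is ergodic: the time averages of $x(t)Y(t+\tau)$ and $Y(t)x(t+\tau)$ converge to their expected values, which are zero since $E\{Y(t)\} = 0$. Periodicity of x(t) alone would not remove them (a constant x(t) with a nonzero-mean Y(t) leaves a nonzero cross term). Since x(t) is periodic, it can be written as follows: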

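$$x(t) = \sum_{k=0}^{\infty}\left[a_k\cos\frac{2\pi k t}{T} + b_k\sin\frac{2\pi k t}{T}\right], \qquad b_0 = 0$$

so the $k = 0$ term is the DC value $a_0$.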

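Hence,

$$x(t)x(t+\tau) = \sum_{j}\left[a_j\cos\frac{2\pi j t}{T} + b_j\sin\frac{2\pi j t}{T}\right]\sum_{k}\left[a_k\cos\frac{2\pi k(t+\tau)}{T} + b_k\sin\frac{2\pi k(t+\tau)}{T}\right]$$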

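Because of orthogonality, only the $j = k$ terms survive the time average, and the sine-cosine cross products cancel, so it can be shown that:

$$\frac{1}{T}\int_{-T/2}^{T/2}x(t)x(t+\tau)\,dt = a_0^2 + \sum_{k=1}^{\infty}\frac{a_k^2 + b_k^2}{2}\cos\frac{2\pi k\tau}{T}$$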
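The orthogonality step can be checked numerically. The sketch below builds a hypothetical periodic signal (the period, DC value `a0` and the coefficient dictionaries are made up for illustration), averages $x(t)x(t+\tau)$ over one period, and compares the result with $a_0^2 + \sum_{k\ge1}\frac{a_k^2+b_k^2}{2}\cos\frac{2\pi k\tau}{T}$.

```python
import math

# Hypothetical periodic signal: period T, DC value a0, and a few Fourier coefficients
T = 2.0
a0 = 0.3
a_coef = {1: 1.0, 3: 0.5}   # a_k (cosine terms)
b_coef = {2: 0.8, 3: -0.4}  # b_k (sine terms)


def x(t):
    # x(t) = a0 + sum_k [a_k cos(2 pi k t / T) + b_k sin(2 pi k t / T)]
    value = a0
    for k, ak in a_coef.items():
        value += ak * math.cos(2 * math.pi * k * t / T)
    for k, bk in b_coef.items():
        value += bk * math.sin(2 * math.pi * k * t / T)
    return value


def r_time_average(tau, n=4000):
    # (1/T) * integral over one period of x(t) x(t + tau) via the rectangle rule,
    # which is exact up to rounding for a trigonometric polynomial sampled over a full period
    dt = T / n
    return sum(x(i * dt) * x(i * dt + tau) for i in range(n)) * dt / T


def r_formula(tau):
    # a0^2 + sum_{k>=1} (a_k^2 + b_k^2)/2 * cos(2 pi k tau / T)
    ks = set(a_coef) | set(b_coef)
    return a0 ** 2 + sum(
        (a_coef.get(k, 0.0) ** 2 + b_coef.get(k, 0.0) ** 2) / 2
        * math.cos(2 * math.pi * k * tau / T)
        for k in ks
    )


for tau in (0.0, 0.37, 1.1):
    print(tau, r_time_average(tau), r_formula(tau))
```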

Therefore:

$$R_{ZZ}(\tau) = a_0^2 + \sum_{k=1}^{\infty}\frac{a_k^2 + b_k^2}{2}\cos\frac{2\pi k\tau}{T} + R_{YY}(\tau)$$

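Using the Fourier transform pair $\cos(2\pi f_0\tau) \leftrightarrow \frac12\left[\delta(f - f_0) + \delta(f + f_0)\right]$ from the table:

$$S_{ZZ}(f) = a_0^2\,\delta(f) + \sum_{k=1}^{\infty}\frac{a_k^2 + b_k^2}{4}\left[\delta\left(f - \frac{k}{T}\right) + \delta\left(f + \frac{k}{T}\right)\right] + S_{YY}(f)$$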

3. (3.39 in the textbook) A stationary zero-mean Gaussian random process X(t) has an

autocorrelation function:

$$R_{XX}(\tau) = 10\exp(-|\tau|)$$

Show that X(t) is ergodic in the mean and autocorrelation function.

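S: (i) The mean

$$E\{\langle X\rangle_T\} = E\left\{\frac{1}{T}\int_{-T/2}^{T/2}X(t)\,dt\right\} = \mu_X = 0$$

Because $\mu_X = 0$, $C_{XX}(\tau) = R_{XX}(\tau)$, and

$$\begin{aligned}
\mathrm{var}\{\langle X\rangle_T\} &= \frac{1}{T}\int_{-T}^{T}\left(1 - \frac{|\tau|}{T}\right)C_{XX}(\tau)\,d\tau \\
&= \frac{2}{T}\int_{0}^{T}\left(1 - \frac{\tau}{T}\right)10\exp(-\tau)\,d\tau \\
&= \frac{20}{T} - \frac{20}{T^2}\left[1 - \exp(-T)\right]
\end{aligned}$$

Since

$$\lim_{T\to\infty}\mathrm{var}\{\langle X\rangle_T\} = 0,$$

X(t) is ergodic in the mean.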
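The variance expression can be checked by evaluating $\frac{2}{T}\int_0^T(1-\tau/T)\,10e^{-\tau}\,d\tau$ numerically and comparing it with the closed form $\frac{20}{T} - \frac{20}{T^2}(1 - e^{-T})$ for a few window lengths. This is a minimal sketch; the function names and the step count are assumptions.

```python
import math


def var_closed_form(T):
    # 20/T - (20/T^2)(1 - exp(-T))
    return 20.0 / T - 20.0 / T ** 2 * (1.0 - math.exp(-T))


def var_numeric(T, steps=100_000):
    # (2/T) * integral_0^T (1 - tau/T) * 10 exp(-tau) dtau, midpoint rule
    d = T / steps
    total = 0.0
    for i in range(steps):
        tau = (i + 0.5) * d
        total += (1.0 - tau / T) * 10.0 * math.exp(-tau)
    return 2.0 / T * total * d


for T in (1.0, 10.0, 100.0, 1000.0):
    print(T, var_numeric(T), var_closed_form(T))
```

The printed variance shrinks roughly like $20/T$, which is the behaviour the ergodicity argument relies on.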

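(ii) The autocorrelation function

$$E\{\langle X(t)X(t+\tau)\rangle_T\} = E\left\{\frac{1}{T}\int_{-T/2}^{T/2}X(t)X(t+\tau)\,dt\right\} = \frac{1}{T}\int_{-T/2}^{T/2}R_{XX}(\tau)\,dt = R_{XX}(\tau)$$

so the time-averaged autocorrelation is unbiased. For a zero-mean Gaussian process, a sufficient condition for its variance to vanish as $T \to \infty$ is that $R_{XX}(\tau)$ be absolutely integrable, and here

$$\int_{-\infty}^{\infty}|R_{XX}(\tau)|\,d\tau = \int_{-\infty}^{0}10e^{\tau}\,d\tau + \int_{0}^{\infty}10e^{-\tau}\,d\tau = 10 + 10 = 20 < \infty$$

Therefore, it is ergodic in the autocorrelation function.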
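Ergodicity can also be illustrated (not proved) by simulation. The sketch below assumes a Gauss-Markov model: sampling a zero-mean Gaussian process with $R_{XX}(\tau) = 10e^{-|\tau|}$ every `dt` seconds gives the AR(1) recursion $X[i+1] = \rho X[i] + w[i]$ with $\rho = e^{-dt}$ and $\mathrm{var}(w) = 10(1-\rho^2)$. Time averages over one long realization should then approach $E\{X\} = 0$ and $R_{XX}(\tau)$. The step size, record length, seed and helper names are illustrative choices.

```python
import math
import random


def simulate_gauss_markov(n, dt, seed=7):
    # Exact sampling of a zero-mean Gaussian process with R(tau) = 10 exp(-|tau|):
    # X[i+1] = rho X[i] + w[i], rho = exp(-dt), var(w) = 10 (1 - rho^2)
    rng = random.Random(seed)
    rho = math.exp(-dt)
    sigma_w = math.sqrt(10.0 * (1.0 - rho ** 2))
    x = [rng.gauss(0.0, math.sqrt(10.0))]  # start in the stationary distribution N(0, 10)
    for _ in range(n - 1):
        x.append(rho * x[-1] + rng.gauss(0.0, sigma_w))
    return x


def time_avg_autocorr(x, lag):
    # Time average of x[i] x[i + lag] over a single realization
    m = len(x) - lag
    return sum(x[i] * x[i + lag] for i in range(m)) / m


dt = 0.05
x = simulate_gauss_markov(200_000, dt)  # one realization covering T = 10,000
print("time-average mean:", sum(x) / len(x))
for tau in (0.0, 0.5, 1.0, 2.0):
    lag = round(tau / dt)
    print(tau, time_avg_autocorr(x, lag), 10 * math.exp(-tau))
```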

4. (3.46 in the textbook) The probability density function of a random variable X is

shown in the figure below. (a) If X is quantized into four levels using a uniform

quantizing rule, find the MSE (Mean Square Error); (b) If X is quantized into four

levels using a minimum (MSE) non-uniform quantizer, find the quantizer end points

and output levels as well as the MSE.

[Figure: triangular pdf f_X(x) on -2 ≤ x ≤ 2 with peak value 1/2 at x = 0]

S: This problem deals with quantization. From the figure, it is seen that the probability density function is:

$$f_X(x) = \frac{1}{4}\left(2 - |x|\right), \qquad |x| \le 2$$

(a) Using a four-level uniform quantizer, we have:

-2 < x < -1: m_1 = (-2 - 1) / 2 = -1.5

-1 < x < 0: m_2 = (-1 + 0) / 2 = -0.5

0 < x < 1: m_3 = (0 + 1) / 2 = 0.5

1 < x < 2: m_4 = (1 + 2) / 2 = 1.5

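Hence:

$$\begin{aligned}
N_q = E\{(X - m)^2\} &= \sum_{i=1}^{4}\int_{x_{i-1}}^{x_i}(x - m_i)^2 f_X(x)\,dx \\
&= \frac{1}{4}\left[\int_{-2}^{-1}(x+1.5)^2(2+x)\,dx + \int_{-1}^{0}(x+0.5)^2(2+x)\,dx + \int_{0}^{1}(x-0.5)^2(2-x)\,dx + \int_{1}^{2}(x-1.5)^2(2-x)\,dx\right] \\
&= \frac{1}{12}
\end{aligned}$$

$$S_q = E\{m^2\} = \sum_{i=1}^{4}m_i^2\int_{x_{i-1}}^{x_i}f_X(x)\,dx = 2\left[(0.5)^2\cdot\frac{3}{8} + (1.5)^2\cdot\frac{1}{8}\right] = \frac{3}{4}$$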

Therefore, the mean square error (MSE) is $N_q = 1/12$, and the MSE normalized by the quantizer output power is:

$$\frac{N_q}{S_q} = \frac{1/12}{3/4} = \frac{1}{9}$$
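The uniform-quantizer numbers can be reproduced exactly with rational arithmetic. On each quantizer cell the integrands $(x - m_i)^2 f_X(x)$ and $f_X(x)$ are polynomials of degree at most 3, so Simpson's rule with one panel per cell is exact; the sketch below uses Python's `fractions.Fraction` to obtain $N_q$, $S_q$ and their ratio without rounding. The function names are illustrative.

```python
from fractions import Fraction as F


def pdf(x):
    # Triangular pdf f_X(x) = (2 - |x|)/4 on |x| <= 2
    return (2 - abs(x)) / 4 if abs(x) <= 2 else F(0)


def simpson(g, a, b):
    # One-panel Simpson's rule; exact for polynomials of degree <= 3
    return (b - a) / 6 * (g(a) + 4 * g((a + b) / 2) + g(b))


def quantizer_stats(edges, levels):
    cells = list(zip(edges[:-1], edges[1:], levels))
    # N_q = sum_i integral (x - m_i)^2 f_X(x) dx ; S_q = sum_i m_i^2 P(cell i)
    nq = sum(simpson(lambda x, m=m: (x - m) ** 2 * pdf(x), lo, hi) for lo, hi, m in cells)
    sq = sum(m ** 2 * simpson(pdf, lo, hi) for lo, hi, m in cells)
    return nq, sq


edges = [F(-2), F(-1), F(0), F(1), F(2)]
levels = [F(-3, 2), F(-1, 2), F(1, 2), F(3, 2)]
nq, sq = quantizer_stats(edges, levels)
print(nq, sq, nq / sq)
```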

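(b) For a minimum-MSE (Lloyd-Max) quantizer, the end points and output levels must satisfy two conditions: each end point lies midway between the adjacent output levels, $x_i = (m_i + m_{i+1})/2$, and each output level is the centroid of its interval,

$$m_i = \frac{\int_{x_{i-1}}^{x_i}x f_X(x)\,dx}{\int_{x_{i-1}}^{x_i}f_X(x)\,dx}$$

Because $f_X(x)$ is symmetric, the end points are $0, \pm a, \pm 2$ and the output levels are $\pm m_1, \pm m_2$. For the triangular pdf the centroid conditions give

$$m_1 = \frac{2a(3 - a)}{3(4 - a)}, \qquad m_2 = a + \frac{2 - a}{3} = \frac{2a + 2}{3}$$

and substituting these into $a = (m_1 + m_2)/2$ yields $a^2 - 6a + 4 = 0$, so $a = 3 - \sqrt{5} \approx 0.764$ (the other root lies outside [0, 2]). Hence the end points are -2, -0.764, 0, 0.764, 2, the output levels are $\pm m_1 = \pm(10 - 4\sqrt5)/3 \approx \pm 0.352$ and $\pm m_2 = \pm(8 - 2\sqrt5)/3 \approx \pm 1.176$, and

$$S_q = E\{m^2\} = \frac{40\sqrt5 - 84}{9} \approx 0.605, \qquad N_q = E\{X^2\} - S_q = \frac{2}{3} - S_q = \frac{90 - 40\sqrt5}{9} \approx 0.0619$$

so $N_q/S_q \approx 0.102$, smaller than the uniform quantizer's 1/12 and 1/9, as it must be for the optimum. The approximation $N_q \approx 2.2\,Q^{-1.96}$, which gives 0.1453 for Q = 4, assumes a unit-variance Gaussian input and Q >> 1, so it does not apply well here.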
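The minimum-MSE conditions (each end point midway between adjacent output levels, each output level at the centroid of its cell) can also be solved by simple fixed-point iteration, the Lloyd-Max algorithm. The sketch below assumes the symmetric structure (end points $0, \pm a, \pm 2$; levels $\pm m_1, \pm m_2$), uses closed-form moments of $f_X(x) = (2-x)/4$ on $[0, 2]$, and starts from the uniform end point $a = 1$. The function names and iteration count are illustrative assumptions.

```python
import math


def prob(p, q):
    # P(p < X < q) for 0 <= p <= q <= 2, using f_X(x) = (2 - x)/4 on [0, 2]
    return (2 * (q - p) - (q ** 2 - p ** 2) / 2) / 4


def moment1(p, q):
    # integral_p^q x f_X(x) dx
    return ((q ** 2 - p ** 2) - (q ** 3 - p ** 3) / 3) / 4


def moment2(p, q):
    # integral_p^q x^2 f_X(x) dx
    return (2 * (q ** 3 - p ** 3) / 3 - (q ** 4 - p ** 4) / 4) / 4


def lloyd_max_4_levels(iters=200):
    # Symmetric 4-level quantizer: end points 0, +-a, +-2 and levels +-m1, +-m2
    a = 1.0  # start from the uniform quantizer's end point
    for _ in range(iters):
        m1 = moment1(0.0, a) / prob(0.0, a)  # centroid of [0, a]
        m2 = moment1(a, 2.0) / prob(a, 2.0)  # centroid of [a, 2]
        a = (m1 + m2) / 2                    # end point midway between the levels
    m1 = moment1(0.0, a) / prob(0.0, a)
    m2 = moment1(a, 2.0) / prob(a, 2.0)
    cells = [(0.0, a, m1), (a, 2.0, m2)]
    # N_q = sum over cells of integral (x - m)^2 f_X(x) dx, doubled for x < 0
    nq = 2 * sum(moment2(p, q) - 2 * m * moment1(p, q) + m * m * prob(p, q) for p, q, m in cells)
    sq = 2 * sum(m * m * prob(p, q) for p, q, m in cells)
    return a, m1, m2, nq, sq


a, m1, m2, nq, sq = lloyd_max_4_levels()
print(f"a = {a:.4f}, m1 = {m1:.4f}, m2 = {m2:.4f}, Nq = {nq:.4f}, Nq/Sq = {nq / sq:.4f}")
print(f"closed form a = 3 - sqrt(5) = {3 - math.sqrt(5):.4f}")
```

For this pdf the iteration settles at $a = 3 - \sqrt5 \approx 0.764$, $m_1 \approx 0.352$, $m_2 \approx 1.176$ and $N_q \approx 0.0619$, below the uniform quantizer's 1/12.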

5. (4.6 in the textbook) The input-output relationship of a discrete-time linear time-invariant causal system is given by:

Y(n) = h(0)X(n) + h(1)X(n-1) + … + h(k)X(n-k)

The input sequence X(n) is stationary, zero mean, Gaussian with

$$E\{X(n)X(n+j)\} = \begin{cases} 1, & j = 0 \\ 0, & j \ne 0 \end{cases}$$

(a) Find the pdf of Y(n)

(b) Find RYY(n) and SYY(f).

S: (a) Since the X(n) are jointly Gaussian and Y(n) is a linear combination of them, Y(n) is Gaussian. Its mean is:

E{Y(n)} = E{h(0)X(n) + h(1)X(n-1) + … + h(k)X(n-k)}

= h(0)E{X(n)} + h(1)E{X(n-1)} + … + h(k)E{X(n-k)}

= 0

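and, because $E\{X(n-i)X(n-l)\} = 0$ for $i \ne l$, every cross term in $E\{Y^2(n)\}$ vanishes, so its variance is:

$$\sigma_Y^2 = E\{Y^2(n)\} = h^2(0)E\{X^2(n)\} + h^2(1)E\{X^2(n-1)\} + \dots + h^2(k)E\{X^2(n-k)\} = h^2(0) + h^2(1) + \dots + h^2(k)$$

Therefore

$$f_Y(y) = \frac{1}{\sqrt{2\pi\sigma_Y^2}}\exp\left(-\frac{y^2}{2\sigma_Y^2}\right)$$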
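A quick Monte Carlo check of part (a): the sketch below drives a hypothetical filter `h = [1.0, 0.5, -0.25]` (so k = 2 and $\sigma_Y^2 = 1.3125$) with unit-variance white Gaussian noise and compares the sample mean and mean square of Y(n) with 0 and $\sum_i h^2(i)$. The coefficients, record length and seed are assumptions made for illustration; `pdf_y` is a hypothetical helper that evaluates the zero-mean Gaussian density with that variance.

```python
import math
import random


def simulate_y(h, n, seed=3):
    rng = random.Random(seed)
    k = len(h) - 1
    x = [rng.gauss(0.0, 1.0) for _ in range(n + k)]  # X(n): zero mean, unit variance, white
    # Y(m) = h(0) X(m) + h(1) X(m-1) + ... + h(k) X(m-k); x is offset by k so every
    # output sample has k past inputs available
    return [sum(h[i] * x[m + k - i] for i in range(k + 1)) for m in range(n)]


def pdf_y(y, h):
    var_y = sum(c * c for c in h)  # sigma_Y^2 = h^2(0) + ... + h^2(k)
    return math.exp(-y * y / (2 * var_y)) / math.sqrt(2 * math.pi * var_y)


h = [1.0, 0.5, -0.25]  # hypothetical coefficients h(0), h(1), h(2)
y = simulate_y(h, 100_000)
mean = sum(y) / len(y)
var = sum(v * v for v in y) / len(y)
print(mean, var, sum(c * c for c in h))
```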

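(b) The autocorrelation is:

$$R_{YY}(n) = R_{XX}(n) * h(n) * h(-n) = h(n) * h(-n) = \sum_{\ell} h(n + \ell)\,h(\ell)$$

since $R_{XX}(n) = \delta(n)$. Because h(n) = 0 outside $0 \le n \le k$, this gives:

$$R_{YY}(n) = \begin{cases} \displaystyle\sum_{\ell=0}^{k-|n|} h(\ell)\,h(\ell + |n|), & |n| \le k \\ 0, & |n| > k \end{cases}$$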
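The finite-support autocorrelation can be compared with a time-average estimate from a single simulated record. The sketch below uses a hypothetical filter `h = [1.0, 0.5, -0.25]`, computes $R_{YY}(n) = \sum_{\ell=0}^{k-|n|}h(\ell)h(\ell+|n|)$, and compares it with time averages of $Y(m)Y(m+n)$ from one long output driven by unit-variance white Gaussian noise. The coefficients, record length, seed and helper names are illustrative.

```python
import random


def r_yy(h, n):
    # R_YY(n) = sum_{i=0}^{k-|n|} h(i) h(i + |n|) for |n| <= k, and 0 otherwise
    n = abs(n)
    k = len(h) - 1
    if n > k:
        return 0.0
    return sum(h[i] * h[i + n] for i in range(k - n + 1))


def simulate_y(h, n, seed=5):
    # Y(m) = sum_i h(i) X(m - i) with X white Gaussian, zero mean, unit variance
    rng = random.Random(seed)
    k = len(h) - 1
    x = [rng.gauss(0.0, 1.0) for _ in range(n + k)]
    return [sum(h[i] * x[m + k - i] for i in range(k + 1)) for m in range(n)]


def time_average_autocorr(y, lag):
    m = len(y) - lag
    return sum(y[i] * y[i + lag] for i in range(m)) / m


h = [1.0, 0.5, -0.25]  # hypothetical coefficients h(0), h(1), h(2)
y = simulate_y(h, 100_000)
for lag in range(4):
    print(lag, r_yy(h, lag), time_average_autocorr(y, lag))
```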

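In addition:

$$S_{YY}(f) = \mathcal{F}\{R_{YY}(n)\} = \sum_{n=-k}^{k}\sum_{\ell=0}^{k-|n|}h(\ell)\,h(\ell + |n|)\exp(-j2\pi nf) = |H(f)|^2, \qquad |f| < \tfrac{1}{2}$$

where $H(f) = \sum_{i=0}^{k}h(i)\exp(-j2\pi if)$ is the frequency response of the (real) filter.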
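Because $R_{XX}(n) = \delta(n)$, the double sum for $S_{YY}(f)$ should equal $|H(f)|^2$ with $H(f) = \sum_{i=0}^{k}h(i)e^{-j2\pi if}$. The sketch below checks this at a few frequencies for a hypothetical real filter `h = [1.0, 0.5, -0.25]`; the helper names are illustrative.

```python
import cmath
import math


def r_yy(h, n):
    # R_YY(n) = sum_{i=0}^{k-|n|} h(i) h(i + |n|) for |n| <= k, and 0 otherwise
    n = abs(n)
    k = len(h) - 1
    if n > k:
        return 0.0
    return sum(h[i] * h[i + n] for i in range(k - n + 1))


def s_yy_from_autocorr(h, f):
    # S_YY(f) = sum_{n=-k}^{k} R_YY(n) exp(-j 2 pi n f)
    k = len(h) - 1
    return sum(r_yy(h, n) * cmath.exp(-2j * math.pi * n * f) for n in range(-k, k + 1))


def freq_response(h, f):
    # H(f) = sum_i h(i) exp(-j 2 pi i f)
    return sum(c * cmath.exp(-2j * math.pi * i * f) for i, c in enumerate(h))


h = [1.0, 0.5, -0.25]  # hypothetical coefficients h(0), h(1), h(2)
for f in (0.0, 0.1, 0.25, 0.4):
    print(f, s_yy_from_autocorr(h, f).real, abs(freq_response(h, f)) ** 2)
```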