SECTION D Linear Independence


Chapter 3: Euclidean Space


By the end of this section you will be able to

understand what is meant by linear combination and linear independence

show that given vectors are linearly independent or dependent

prove properties about linear independence

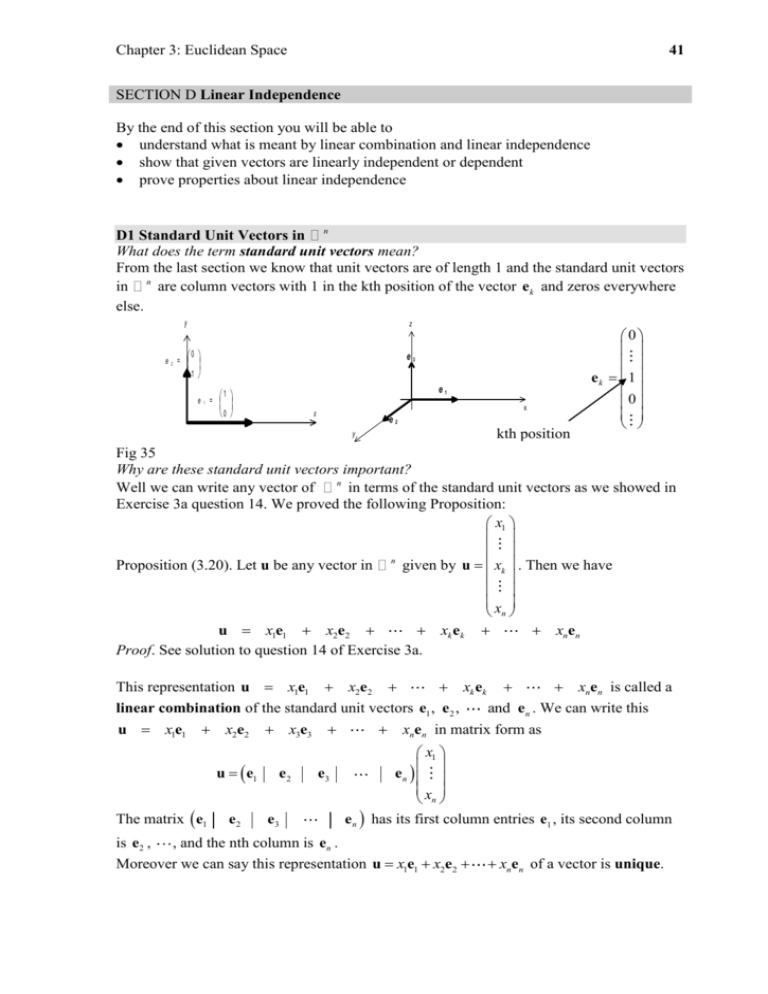

D1 Standard Unit Vectors in $\mathbb{R}^n$

What does the term standard unit vectors mean?

From the last section we know that unit vectors are of length 1. The standard unit vectors in $\mathbb{R}^n$ are the column vectors $\mathbf{e}_k$ with 1 in the $k$th position and zeros everywhere else.

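Fig 35: the standard unit vectors $\mathbf{e}_1 = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$ and $\mathbf{e}_2 = \begin{pmatrix} 0 \\ 1 \end{pmatrix}$ on the $x$ and $y$ axes; the standard unit vectors $\mathbf{e}_1$, $\mathbf{e}_2$ and $\mathbf{e}_3$ on the $x$, $y$ and $z$ axes; and the general $\mathbf{e}_k$, which has 1 in the $k$th position and 0 elsewhere.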

Why are these standard unit vectors important?

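Well, we can write any vector of $\mathbb{R}^n$ in terms of the standard unit vectors, as we showed in Exercise 3a question 14. We proved the following Proposition:

Proposition (3.20). Let $\mathbf{u}$ be any vector in $\mathbb{R}^n$ given by $\mathbf{u} = \begin{pmatrix} x_1 \\ \vdots \\ x_k \\ \vdots \\ x_n \end{pmatrix}$. Then we have

$$\mathbf{u} = x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_k\mathbf{e}_k + \cdots + x_n\mathbf{e}_n$$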

Proof. See solution to question 14 of Exercise 3a.

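This representation $\mathbf{u} = x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_k\mathbf{e}_k + \cdots + x_n\mathbf{e}_n$ is called a linear combination of the standard unit vectors $\mathbf{e}_1, \mathbf{e}_2, \ldots$ and $\mathbf{e}_n$. We can write this $\mathbf{u} = x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + x_3\mathbf{e}_3 + \cdots + x_n\mathbf{e}_n$ in matrix form as

$$\mathbf{u} = \begin{pmatrix} \mathbf{e}_1 & \mathbf{e}_2 & \mathbf{e}_3 & \cdots & \mathbf{e}_n \end{pmatrix} \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix}$$

The matrix $\begin{pmatrix} \mathbf{e}_1 & \mathbf{e}_2 & \mathbf{e}_3 & \cdots & \mathbf{e}_n \end{pmatrix}$ has the entries of $\mathbf{e}_1$ as its first column, its second column is $\mathbf{e}_2$, $\ldots$, and the $n$th column is $\mathbf{e}_n$.

Moreover we can say this representation $\mathbf{u} = x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_n\mathbf{e}_n$ of a vector is unique.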
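The representation in Proposition (3.20) can be checked numerically. The following is a minimal sketch in plain Python; the helper names `standard_unit_vector` and `linear_combination` and the sample vector are illustrative assumptions, not part of the text.

```python
def standard_unit_vector(k, n):
    """Return e_k in R^n as a list: 1 in the kth position (1-based), 0 elsewhere."""
    return [1 if i == k - 1 else 0 for i in range(n)]


def linear_combination(scalars, vectors):
    """Return scalars[0]*vectors[0] + ... + scalars[-1]*vectors[-1], entry by entry."""
    size = len(vectors[0])
    return [sum(c * v[i] for c, v in zip(scalars, vectors)) for i in range(size)]


# Illustrative vector (hypothetical values, chosen only for this sketch).
u = [4, -2, 7]
n = len(u)

# e_1, e_2, ..., e_n in R^n.
unit_vectors = [standard_unit_vector(k, n) for k in range(1, n + 1)]

# Using the entries of u as the scalars x_1, ..., x_n rebuilds u itself.
rebuilt = linear_combination(u, unit_vectors)
print(rebuilt == u)
```

The scalars are exactly the entries of the vector, which is what the uniqueness statement that follows makes precise.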


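Proposition (3.21). Let $\mathbf{u}$ be any vector in $\mathbb{R}^n$. Then the linear combination

$$\mathbf{u} = x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_k\mathbf{e}_k + \cdots + x_n\mathbf{e}_n$$

is unique.

What does this proposition mean?

It means that there is only one way of writing any vector $\mathbf{u}$ of $\mathbb{R}^n$ in terms of the standard unit vectors $\mathbf{e}_1, \mathbf{e}_2, \ldots$ and $\mathbf{e}_n$.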

Proof.

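Let the vector $\mathbf{u}$ be written as another linear combination:

$$\mathbf{u} = y_1\mathbf{e}_1 + y_2\mathbf{e}_2 + \cdots + y_k\mathbf{e}_k + \cdots + y_n\mathbf{e}_n$$

What do we need to show?

We are required to show that the scalars are equal: $y_1 = x_1$, $y_2 = x_2$, $y_3 = x_3$, $\ldots$ and $y_n = x_n$.

Equate the two linear combinations because both are equal to $\mathbf{u}$:

$$\begin{aligned}
x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_n\mathbf{e}_n &= y_1\mathbf{e}_1 + y_2\mathbf{e}_2 + \cdots + y_n\mathbf{e}_n \\
x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_n\mathbf{e}_n - y_1\mathbf{e}_1 - y_2\mathbf{e}_2 - \cdots - y_n\mathbf{e}_n &= \mathbf{O} \\
(x_1 - y_1)\mathbf{e}_1 + (x_2 - y_2)\mathbf{e}_2 + \cdots + (x_n - y_n)\mathbf{e}_n &= \mathbf{O} \qquad [\text{Factorising}]
\end{aligned}$$

Writing out $\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n$ and $\mathbf{O}$ as column vectors in the last line we have

$$(x_1 - y_1)\begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} + (x_2 - y_2)\begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix} + \cdots + (x_n - y_n)\begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \qquad [\text{Remember } \mathbf{e}_k \text{ has 1 in the } k\text{th position}]$$

We can write this in matrix form as

$$\begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix} \begin{pmatrix} x_1 - y_1 \\ x_2 - y_2 \\ \vdots \\ x_n - y_n \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \quad \text{implies} \quad \begin{pmatrix} x_1 - y_1 \\ x_2 - y_2 \\ \vdots \\ x_n - y_n \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \qquad [I \text{ is the identity matrix}]$$

Hence we have $x_1 - y_1 = 0$, $x_2 - y_2 = 0$, $x_3 - y_3 = 0$, $\ldots$ and $x_n - y_n = 0$, which gives our result: $y_1 = x_1$, $y_2 = x_2$, $y_3 = x_3$, $\ldots$ and $y_n = x_n$.

Therefore the given linear combination, $\mathbf{u} = x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_k\mathbf{e}_k + \cdots + x_n\mathbf{e}_n$, is unique. ■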

D2 Linear Independence

Example 12

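Determine the linear combination of the standard unit vectors in $\mathbb{R}^n$ which gives the zero vector. This means find the values of the scalars $x_1, x_2, x_3, \ldots$ and $x_n$ in the following:

$$x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_k\mathbf{e}_k + \cdots + x_n\mathbf{e}_n = \mathbf{O}$$

Solution

Substituting $\mathbf{e}_1, \mathbf{e}_2, \ldots$ and $\mathbf{e}_n$ we have

$$x_1\begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} + x_2\begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix} + \cdots + x_n\begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{pmatrix}$$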


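We can write this in matrix form as

$$\begin{pmatrix} 1 & & 0 \\ & \ddots & \\ 0 & & 1 \end{pmatrix} \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix} = \begin{pmatrix} 0 \\ \vdots \\ 0 \end{pmatrix} \qquad [\text{This is } I\mathbf{x} = \mathbf{O}]$$

In compact form we have $I\mathbf{x} = \mathbf{O}$ where $I$ is the identity matrix. $I\mathbf{x} = \mathbf{O}$ gives $\mathbf{x} = \mathbf{O}$.

From the zero vector $\mathbf{x} = \mathbf{O}$ we have $x_1 = 0$, $x_2 = 0$, $x_3 = 0$, $\ldots$ and $x_n = 0$.

We say that the standard unit vectors $\mathbf{e}_1, \mathbf{e}_2, \ldots$ and $\mathbf{e}_n$ are linearly independent, which means that none of the vectors $\mathbf{e}_k$ can be made by a linear combination of the others.

These standard unit vectors are not the only vectors in $\mathbb{R}^n$ which are linearly independent.

Definition (3.22). Generally we say vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ in $\mathbb{R}^n$ are linearly independent $\Leftrightarrow$ the only real scalars $k_1, k_2, k_3, \ldots$ and $k_n$ which satisfy

$$k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + k_3\mathbf{v}_3 + \cdots + k_n\mathbf{v}_n = \mathbf{O} \quad \text{are} \quad k_1 = k_2 = k_3 = \cdots = k_n = 0$$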

What does this mean?

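The only solution to the linear combination $k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + \cdots + k_n\mathbf{v}_n = \mathbf{O}$ is when all the scalars $k_1, k_2, k_3, \ldots$ and $k_n$ are equal to zero. In other words you cannot make any one of the vectors, $\mathbf{v}_j$ say, by a linear combination of the others.

We can write the linear combination $k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + k_3\mathbf{v}_3 + \cdots + k_n\mathbf{v}_n = \mathbf{O}$ in matrix form as

$$\begin{pmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \mathbf{v}_3 & \cdots & \mathbf{v}_n \end{pmatrix} \begin{pmatrix} k_1 \\ k_2 \\ \vdots \\ k_n \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{pmatrix}$$

The first column of the matrix $\begin{pmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \mathbf{v}_3 & \cdots & \mathbf{v}_n \end{pmatrix}$ is given by the entries in $\mathbf{v}_1$, the second column is given by the entries in $\mathbf{v}_2$ and the $n$th column is given by the entries in $\mathbf{v}_n$.

Recall from chapters 1 and 2 that a linear system $A\mathbf{x} = \mathbf{O}$ has the unique solution $\mathbf{x} = \mathbf{O}$ $\Leftrightarrow$ matrix $A$ is invertible or $\det(A) \neq 0$ [Not zero].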

How is this related to linear independence?

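Well, if the matrix $A = \begin{pmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \cdots & \mathbf{v}_n \end{pmatrix}$ then these vectors $\mathbf{v}_1, \mathbf{v}_2, \ldots$ and $\mathbf{v}_n$ are linearly independent $\Leftrightarrow$ $A\mathbf{x} = \mathbf{O}$ has the unique solution $\mathbf{x} = \mathbf{O}$. What does this mean?

It means we only need to check that the matrix $A = \begin{pmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \cdots & \mathbf{v}_n \end{pmatrix}$ is invertible, or $\det(A) \neq 0$ [not zero], for the vectors $\mathbf{v}_1, \mathbf{v}_2, \ldots$ and $\mathbf{v}_n$ to be linearly independent.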
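The determinant test just described can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names `det` and `are_independent` are hypothetical, the determinant is computed by Gaussian elimination with exact fractions (a swapped-in technique, not the method used in the text), and the test applies only to $n$ vectors in $\mathbb{R}^n$.

```python
from fractions import Fraction


def det(matrix):
    """Determinant of a square matrix by Gaussian elimination, using exact fractions."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot in this column: determinant is zero
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result  # a row swap changes the sign
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def are_independent(vectors):
    """n vectors in R^n are linearly independent exactly when det(A) != 0,
    where A has the given vectors as its columns."""
    n = len(vectors)
    if any(len(v) != n for v in vectors):
        raise ValueError("this test needs n vectors, each with n entries")
    a = [[vectors[j][i] for j in range(n)] for i in range(n)]
    return det(a) != 0


print(are_independent([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))  # standard unit vectors
print(are_independent([[1, 2], [2, 4]]))                   # second is twice the first
```

Exact fractions are used so that a determinant which is truly zero is not hidden by rounding.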

Example 13

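Show that $\mathbf{u} = \begin{pmatrix} -1 \\ 1 \end{pmatrix}$ and $\mathbf{v} = \begin{pmatrix} 2 \\ 3 \end{pmatrix}$ are linearly independent in $\mathbb{R}^2$ and plot them.

Solution

Using the above definition (3.22) and writing out the linear combination we have

$$k\mathbf{u} + c\mathbf{v} = \mathbf{O} \qquad [\text{Using } k \text{ and } c \text{ as scalars}]$$

Substituting the given vectors $\mathbf{u}$ and $\mathbf{v}$ into this $k\mathbf{u} + c\mathbf{v} = \mathbf{O}$ gives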


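$$k\begin{pmatrix} -1 \\ 1 \end{pmatrix} + c\begin{pmatrix} 2 \\ 3 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} \quad \text{or} \quad \begin{aligned} -k + 2c &= 0 \\ k + 3c &= 0 \end{aligned}$$

Let $A = \begin{pmatrix} \mathbf{u} & \mathbf{v} \end{pmatrix} = \begin{pmatrix} -1 & 2 \\ 1 & 3 \end{pmatrix}$ and $\mathbf{x} = \begin{pmatrix} k \\ c \end{pmatrix}$. We need to solve $A\mathbf{x} = \mathbf{O}$ where $\mathbf{O} = \begin{pmatrix} 0 \\ 0 \end{pmatrix}$.

Since $\det(A) = \det\begin{pmatrix} -1 & 2 \\ 1 & 3 \end{pmatrix} = (-1 \times 3) - (1 \times 2) = -5$ is non-zero, the only solution is $\mathbf{x} = \mathbf{O}$ [Because $A\mathbf{x} = \mathbf{O}$ and $\det(A) \neq 0$ then $\mathbf{x} = \mathbf{O}$], which means the values of the scalars are $k = 0$ and $c = 0$.

Hence the linear combination $k\mathbf{u} + c\mathbf{v} = \mathbf{O}$ yields $k = 0$ and $c = 0$. Therefore the given vectors $\mathbf{u}$ and $\mathbf{v}$ are linearly independent, because the only scalars which satisfy the equation are $k = 0$ and $c = 0$. Plotting the given vectors we have:

Fig 36: plot of the vectors $\mathbf{u} = \begin{pmatrix} -1 \\ 1 \end{pmatrix}$ and $\mathbf{v} = \begin{pmatrix} 2 \\ 3 \end{pmatrix}$.

This means that vector $\mathbf{u}$ is not a scalar multiple of vector $\mathbf{v}$ and vice-versa.

In $\mathbb{R}^2$, saying that the vectors $\mathbf{u}$ and $\mathbf{v}$ are linearly independent means that they are not scalar multiples of each other. Plotting linearly independent vectors:

Fig 37: linearly independent vectors $\mathbf{u}$ and $\mathbf{v}$.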

D3 Linear Dependence

What does linear dependence mean?

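Definition (3.23). Conversely, the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ in $\mathbb{R}^n$ are linearly dependent $\Leftrightarrow$ there are scalars $k_1, k_2, k_3, \ldots$ and $k_n$, not all zero, which satisfy

$$k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + k_3\mathbf{v}_3 + \cdots + k_n\mathbf{v}_n = \mathbf{O}$$

From chapters 1 and 2 we know that the linear system

$$A\mathbf{x} = \mathbf{O}$$

has an infinite number of solutions if $\det(A) = 0$. This means that if

$$A = \begin{pmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \cdots & \mathbf{v}_n \end{pmatrix}, \quad \mathbf{x} = \begin{pmatrix} k_1 \\ \vdots \\ k_n \end{pmatrix}$$

and $\det(A) = 0$ then these vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ are linearly dependent. Why?

Because $\det(A) = 0$ means the linear system $A\mathbf{x} = \mathbf{O}$ has an infinite number of solutions, so there must be non-zero solutions, that is $\mathbf{x} \neq \mathbf{O}$. Hence there are $k$'s, not all zero, which satisfy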


$$k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + k_3\mathbf{v}_3 + \cdots + k_n\mathbf{v}_n = \mathbf{O}$$

Example 14

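Show that $\mathbf{u} = \begin{pmatrix} -3 \\ 1 \end{pmatrix}$ and $\mathbf{v} = \begin{pmatrix} 1 \\ -1/3 \end{pmatrix}$ are linearly dependent in $\mathbb{R}^2$ and plot them.

Solution

Using the above definition (3.23) and writing the vectors $\mathbf{u}$ and $\mathbf{v}$ as a linear combination we have

$$k\mathbf{u} + c\mathbf{v} = \mathbf{O} \qquad [k \text{ and } c \text{ are scalars}]$$

Substituting the given vectors:

$$k\mathbf{u} + c\mathbf{v} = k\begin{pmatrix} -3 \\ 1 \end{pmatrix} + c\begin{pmatrix} 1 \\ -1/3 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix}$$

You can check that the determinant of $A = \begin{pmatrix} \mathbf{u} & \mathbf{v} \end{pmatrix}$ is equal to zero. However this does not give us the relationship between the two vectors $\mathbf{u}$ and $\mathbf{v}$. We can write out the augmented matrix $\left( A \mid \mathbf{O} \right)$ and carry out row operations to determine the relationship between $\mathbf{u}$ and $\mathbf{v}$. The augmented matrix for this is given by

$$\begin{matrix} R_1 \\ R_2 \end{matrix} \left( \begin{array}{cc|c} -3 & 1 & 0 \\ 1 & -1/3 & 0 \end{array} \right) \qquad [\text{Because } \left( \mathbf{u} \;\; \mathbf{v} \mid \mathbf{O} \right)]$$

where $R_1$ and $R_2$ represent rows. Carrying out the row operation $3R_2 + R_1$ gives

$$\begin{matrix} R_1 \\ 3R_2 + R_1 \end{matrix} \left( \begin{array}{cc|c} -3 & 1 & 0 \\ 0 & 0 & 0 \end{array} \right) \qquad [\text{the columns correspond to } k \text{ and } c]$$

From the top row we have $-3k + c = 0$ which implies $c = 3k$. Let $k = 1$, then

$$c = 3k = 3(1) = 3$$

Substituting our values $k = 1$ and $c = 3$ into $k\mathbf{u} + c\mathbf{v} = \mathbf{O}$ gives

$$\mathbf{u} + 3\mathbf{v} = \mathbf{O} \quad \text{or} \quad \mathbf{u} = -3\mathbf{v}$$

We have found non-zero scalars, $k = 1$ and $c = 3$, which satisfy $k\mathbf{u} + c\mathbf{v} = \mathbf{O}$. Therefore the given vectors $\mathbf{u}$ and $\mathbf{v}$ are linearly dependent and $\mathbf{u} = -3\mathbf{v}$.

Plotting the given vectors $\mathbf{u}$ and $\mathbf{v}$ we have:

Fig 38: plot of the vectors $\mathbf{u} = \begin{pmatrix} -3 \\ 1 \end{pmatrix}$ and $\mathbf{v} = \begin{pmatrix} 1 \\ -1/3 \end{pmatrix}$.

Note that $\mathbf{u} = -3\mathbf{v}$, which means that the vector $\mathbf{u}$ is a scalar multiple $(-3)$ of the vector $\mathbf{v}$.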
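The conclusion of Example 14 can be checked with exact arithmetic. The sketch below assumes the vectors are $\mathbf{u} = (-3, 1)^T$ and $\mathbf{v} = (1, -1/3)^T$ and the scalars are $k = 1$, $c = 3$; the variable names are illustrative only.

```python
from fractions import Fraction

# Assumed reading of Example 14: u = (-3, 1) and v = (1, -1/3).
u = [Fraction(-3), Fraction(1)]
v = [Fraction(1), Fraction(-1, 3)]

# Determinant of the 2 by 2 matrix whose columns are u and v.
det_uv = u[0] * v[1] - v[0] * u[1]

# The scalars found in the example.
k, c = 1, 3
combination = [k * ui + c * vi for ui, vi in zip(u, v)]

print(det_uv == 0)            # zero determinant, so the vectors are dependent
print(combination == [0, 0])  # k u + c v is the zero vector
```

Fractions are used rather than floats so that the entry $-1/3$ is represented exactly.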

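If vectors $\mathbf{u}$ and $\mathbf{v}$ in $\mathbb{R}^2$ are linearly dependent then we have

$$k\mathbf{u} + c\mathbf{v} = \mathbf{O} \quad \text{where } k \neq 0 \text{ or } c \neq 0$$

That is, at least one of the scalars is not zero. Suppose $k \neq 0$, then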


$$\begin{aligned} k\mathbf{u} &= -c\mathbf{v} \\ \mathbf{u} &= -\frac{c}{k}\mathbf{v} \qquad [\text{Dividing by } k] \end{aligned}$$

This means that the vector $\mathbf{u}$ is a scalar multiple of the other vector $\mathbf{v}$, which suggests that $\mathbf{u}$ is in the same (or opposite) direction as vector $\mathbf{v}$. Plotting these we have:

Fig 39: linearly dependent vectors $\mathbf{u}$ and $\mathbf{v}$.

Of course in the above Example 14 we could have let $k = 2$, $3$, $666$ etc. Any non-zero number will do! Generally it makes the arithmetic easier if we use $k = 1$.

Example 15

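Determine whether the following vectors $\mathbf{u} = \begin{pmatrix} -3 \\ 1 \\ 0 \end{pmatrix}$, $\mathbf{v} = \begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix}$ and $\mathbf{w} = \begin{pmatrix} 2 \\ 0 \\ 0 \end{pmatrix}$ in $\mathbb{R}^3$ are linearly dependent or independent.

Solution

Consider the following linear combination

$$k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} = \mathbf{O}$$

where the $k$'s are scalars.

Substituting the given vectors $\mathbf{u}$, $\mathbf{v}$ and $\mathbf{w}$ into this, $k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} = \mathbf{O}$, yields

$$k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} = k_1\begin{pmatrix} -3 \\ 1 \\ 0 \end{pmatrix} + k_2\begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix} + k_3\begin{pmatrix} 2 \\ 0 \\ 0 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}$$

Let $A = \begin{pmatrix} \mathbf{u} & \mathbf{v} & \mathbf{w} \end{pmatrix} = \begin{pmatrix} -3 & 0 & 2 \\ 1 & 1 & 0 \\ 0 & 1 & 0 \end{pmatrix}$ and $\mathbf{x} = \begin{pmatrix} k_1 \\ k_2 \\ k_3 \end{pmatrix}$. We need to solve $A\mathbf{x} = \mathbf{O}$:

$$\det(A) = \det\begin{pmatrix} -3 & 0 & 2 \\ 1 & 1 & 0 \\ 0 & 1 & 0 \end{pmatrix} = -1\det\begin{pmatrix} -3 & 2 \\ 1 & 0 \end{pmatrix} = -(0 - 2) = 2 \qquad [\text{Expanding along the bottom row}]$$

Because $\det(A) = 2$ is non-zero, $A\mathbf{x} = \mathbf{O}$ only has the solution $\mathbf{x} = \mathbf{O}$.

The solution $\mathbf{x} = \mathbf{O}$ means $k_1 = k_2 = k_3 = 0$, that is all the scalars are zero:

$$k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} = \mathbf{O} \quad \text{implies} \quad k_1 = k_2 = k_3 = 0$$

Hence the given vectors $\mathbf{u}$, $\mathbf{v}$ and $\mathbf{w}$ are linearly independent.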
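The cofactor expansion used in Example 15 can be sketched as follows. The matrix entries assume $\mathbf{u} = (-3, 1, 0)^T$, $\mathbf{v} = (0, 1, 1)^T$ and $\mathbf{w} = (2, 0, 0)^T$ as columns, and the helper names `det2` and `det3_along_row` are hypothetical.

```python
def det2(m):
    """Determinant of a 2 by 2 matrix given as a list of rows."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def det3_along_row(m, row):
    """Determinant of a 3 by 3 matrix by cofactor expansion along the given row (0-based)."""
    total = 0
    for col in range(3):
        # Minor: delete the chosen row and the current column.
        minor = [[m[r][c] for c in range(3) if c != col]
                 for r in range(3) if r != row]
        total += (-1) ** (row + col) * m[row][col] * det2(minor)
    return total


# Columns are the assumed vectors u, v and w of Example 15.
A = [[-3, 0, 2],
     [1, 1, 0],
     [0, 1, 0]]

bottom = det3_along_row(A, 2)  # expansion along the bottom row, as in the example
top = det3_along_row(A, 0)     # any other row gives the same value
print(bottom, top)
```

Expanding along the bottom row is the cheapest choice here because two of its three entries are zero.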

Example 16

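Determine whether the following vectors $\mathbf{u} = \begin{pmatrix} -3 \\ 1 \\ 0 \end{pmatrix}$, $\mathbf{v} = \begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix}$, $\mathbf{w} = \begin{pmatrix} 2 \\ 0 \\ 0 \end{pmatrix}$ and $\mathbf{x} = \begin{pmatrix} 1 \\ 2 \\ -3 \end{pmatrix}$ in $\mathbb{R}^3$ are linearly dependent or independent.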

Solution


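Consider the linear combination

$$k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} + k_4\mathbf{x} = \mathbf{O}$$

What do we need to find?

We need to determine whether all the scalars $k_1$, $k_2$, $k_3$ and $k_4$ are zero or not.

Substituting the given vectors into this linear combination:

$$k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} + k_4\mathbf{x} = k_1\begin{pmatrix} -3 \\ 1 \\ 0 \end{pmatrix} + k_2\begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix} + k_3\begin{pmatrix} 2 \\ 0 \\ 0 \end{pmatrix} + k_4\begin{pmatrix} 1 \\ 2 \\ -3 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}$$

The augmented matrix of this is given by

$$\begin{matrix} R_1 \\ R_2 \\ R_3 \end{matrix} \left( \begin{array}{cccc|c} -3 & 0 & 2 & 1 & 0 \\ 1 & 1 & 0 & 2 & 0 \\ 0 & 1 & 0 & -3 & 0 \end{array} \right) \qquad [\text{Using } \left( \mathbf{u} \;\; \mathbf{v} \;\; \mathbf{w} \;\; \mathbf{x} \mid \mathbf{O} \right)]$$

Carrying out the row operation $R_2 - R_3$ gives:

$$\begin{matrix} R_1 \\ R_2 - R_3 \\ R_3 \end{matrix} \left( \begin{array}{cccc|c} -3 & 0 & 2 & 1 & 0 \\ 1 & 0 & 0 & 5 & 0 \\ 0 & 1 & 0 & -3 & 0 \end{array} \right) \qquad [\text{the columns correspond to } k_1, k_2, k_3, k_4]$$

From the bottom row we have $k_2 - 3k_4 = 0$ which gives $k_2 = 3k_4$. Let $k_4 = 1$:

$$k_2 = 3k_4 = 3(1) = 3$$

From the middle row we have $k_1 + 5k_4 = 0$, which implies that $k_1 = -5k_4 = -5(1) = -5$.

The top row gives $-3k_1 + 2k_3 + k_4 = 0$. Substituting $k_1 = -5$ and $k_4 = 1$ into this:

$$-3(-5) + 2k_3 + 1 = 0 \quad \text{implies} \quad k_3 = -8$$

Our scalars are $k_1 = -5$, $k_2 = 3$, $k_3 = -8$ and $k_4 = 1$. Substituting these into the above linear combination $k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} + k_4\mathbf{x} = \mathbf{O}$ gives the relationship between the vectors:

$$-5\mathbf{u} + 3\mathbf{v} - 8\mathbf{w} + \mathbf{x} = \mathbf{O} \quad \text{or} \quad \mathbf{x} = 5\mathbf{u} - 3\mathbf{v} + 8\mathbf{w}$$

Since we have found non-zero scalars ($k$'s), the given vectors are linearly dependent.

Fig 40: plot of the vectors $\mathbf{u} = \begin{pmatrix} -3 \\ 1 \\ 0 \end{pmatrix}$, $\mathbf{v} = \begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix}$, $\mathbf{w} = \begin{pmatrix} 2 \\ 0 \\ 0 \end{pmatrix}$ and $\mathbf{x} = \begin{pmatrix} 1 \\ 2 \\ -3 \end{pmatrix}$, where we can write the vector $\mathbf{x} = 5\mathbf{u} - 3\mathbf{v} + 8\mathbf{w}$.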
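The relationship found in Example 16 can be verified directly. This sketch assumes the vectors $\mathbf{u} = (-3, 1, 0)^T$, $\mathbf{v} = (0, 1, 1)^T$, $\mathbf{w} = (2, 0, 0)^T$, $\mathbf{x} = (1, 2, -3)^T$ and the scalars $k_1 = -5$, $k_2 = 3$, $k_3 = -8$, $k_4 = 1$; the variable names are illustrative.

```python
# Assumed reading of the vectors in Example 16.
u = [-3, 1, 0]
v = [0, 1, 1]
w = [2, 0, 0]
x = [1, 2, -3]

# Scalars k1, k2, k3, k4 found by row operations.
scalars = [-5, 3, -8, 1]
vectors = [u, v, w, x]

# k1*u + k2*v + k3*w + k4*x, computed entry by entry.
combination = [sum(k * vec[i] for k, vec in zip(scalars, vectors))
               for i in range(3)]

# The same relationship rearranged: x = 5u - 3v + 8w.
rebuilt_x = [5 * a - 3 * b + 8 * c for a, b, c in zip(u, v, w)]

print(combination)
print(rebuilt_x == x)
```

A check like this catches sign slips in the row operations, since a single wrong sign makes the combination non-zero.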

In general, if vectors $\mathbf{u}$, $\mathbf{v}$ and $\mathbf{w}$ in $\mathbb{R}^3$ are linearly dependent then we have


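$$k_1\mathbf{u} + k_2\mathbf{v} + k_3\mathbf{w} = \mathbf{O} \quad \text{gives} \quad k_1 \neq 0 \text{ or } k_2 \neq 0 \text{ or } k_3 \neq 0$$

That is, at least one of the scalars is not zero. Suppose $k_1 \neq 0$, then

$$\begin{aligned} k_1\mathbf{u} &= -k_2\mathbf{v} - k_3\mathbf{w} \\ \mathbf{u} &= -\frac{k_2}{k_1}\mathbf{v} - \frac{k_3}{k_1}\mathbf{w} \qquad [\text{Dividing by } k_1] \end{aligned}$$

This means that the vector $\mathbf{u}$ in $\mathbb{R}^3$ is a linear combination of the other two vectors $\mathbf{v}$ and $\mathbf{w}$. Hence we can make vector $\mathbf{u}$ out of vectors $\mathbf{v}$ and $\mathbf{w}$.

In the above Example 16 we have $\mathbf{x} = 5\mathbf{u} - 3\mathbf{v} + 8\mathbf{w}$, which means we can make the vector $\mathbf{x}$ out of the vectors $\mathbf{u}$, $\mathbf{v}$ and $\mathbf{w}$.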

D4 Properties of Linear Dependence

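Proposition (3.24). Let $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ be vectors in $\mathbb{R}^n$. If one (or more) of these vectors, $\mathbf{v}_j$ say, is the zero vector then the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ are linearly dependent.

Proof.

Consider the linear combination

$$k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + k_3\mathbf{v}_3 + \cdots + k_j\mathbf{v}_j + \cdots + k_n\mathbf{v}_n = \mathbf{O} \qquad (*)$$

In (*) take $k_j \neq 0$ [Non-zero number] and all the other scalars equal to zero, that is

$$k_1 = k_2 = k_3 = \cdots = k_{j-1} = k_{j+1} = \cdots = k_n = 0$$

Since $\mathbf{v}_j = \mathbf{O}$ we have $k_j\mathbf{v}_j = \mathbf{O}$, so these scalars satisfy (*) and they are not all zero ($k_j \neq 0$).

By definition (3.23) we have that the vectors $\mathbf{v}_1, \mathbf{v}_2, \ldots$ and $\mathbf{v}_n$ are linearly dependent.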

■

What does Proposition (3.24) mean?

If amongst the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ one of these is the zero vector, then they are linearly dependent.

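Proposition (3.25). Let $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_m$ be distinct vectors in $\mathbb{R}^n$. If $n < m$, that is the value of $n$ in the $n$-space is less than the number, $m$, of vectors, then the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_m$ are linearly dependent.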

Proof.

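Consider the linear combination of the given vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_m$:

$$k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + \cdots + k_n\mathbf{v}_n + k_{n+1}\mathbf{v}_{n+1} + \cdots + k_m\mathbf{v}_m = \mathbf{O} \qquad (*)$$

The number of equations is $n$ because each vector belongs to the $n$-space $\mathbb{R}^n$, but the number of unknowns $k_1, k_2, k_3, \ldots, k_n, k_{n+1}, \ldots, k_m$ is $m$. Writing this out we have

$$\underbrace{k_1\begin{pmatrix} v_{11} \\ v_{12} \\ \vdots \\ v_{1n} \end{pmatrix} + k_2\begin{pmatrix} v_{21} \\ v_{22} \\ \vdots \\ v_{2n} \end{pmatrix} + \cdots + k_n\begin{pmatrix} v_{n1} \\ v_{n2} \\ \vdots \\ v_{nn} \end{pmatrix} + k_{n+1}\begin{pmatrix} v_{(n+1)1} \\ v_{(n+1)2} \\ \vdots \\ v_{(n+1)n} \end{pmatrix} + \cdots + k_m\begin{pmatrix} v_{m1} \\ v_{m2} \\ \vdots \\ v_{mn} \end{pmatrix}}_{m \text{ unknowns}} = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \qquad [n \text{ equations}]$$

By the following Proposition (1.24) case (II) of chapter 1:

In a linear system, if the number of equations is less than the number of unknowns then the system has an infinite number of solutions.

(3.23) If $k$'s which are not all zero satisfy $k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + \cdots + k_n\mathbf{v}_n = \mathbf{O}$ then the vectors $\mathbf{v}$'s are dependent.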


In our linear system the number of equations $n$ is less than the number of unknowns $m$. Therefore we have an infinite number of sets of $k$'s which satisfy (*), and this means there are solutions in which the $k$'s are not all zero. By Definition (3.23) the given vectors are dependent.

Example 17

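Determine whether the following vectors

$$\mathbf{u} = \begin{pmatrix} 2 \\ 3 \\ 7 \end{pmatrix}, \quad \mathbf{v} = \begin{pmatrix} 4 \\ 19 \\ 5 \end{pmatrix}, \quad \mathbf{w} = \begin{pmatrix} 2 \\ 6 \\ 3 \end{pmatrix} \quad \text{and} \quad \mathbf{x} = \begin{pmatrix} 2 \\ 2 \\ 5 \end{pmatrix}$$

in $\mathbb{R}^3$ are linearly dependent or independent.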

Solution

Since we have 4 vectors $\mathbf{u}$, $\mathbf{v}$, $\mathbf{w}$ and $\mathbf{x}$ in $\mathbb{R}^3$ and $4 > 3$, by the above Proposition (3.25) we conclude that the given vectors $\mathbf{u}$, $\mathbf{v}$, $\mathbf{w}$ and $\mathbf{x}$ are linearly dependent.

Normally we write the vectors as a collection in a set. A set is denoted by { } and is a

collection of objects. The objects are called elements or members of the set.

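We can write the set of vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ as

$$S = \{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots, \mathbf{v}_n\}$$

We use the symbol $S$ for a set.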

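Proposition (3.26). Let $S = \{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots, \mathbf{v}_n\}$ be $n$ vectors in the $n$-space $\mathbb{R}^n$. Let $A$ be the $n$ by $n$ matrix whose columns are given by the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$:

$$A = \begin{pmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \cdots & \mathbf{v}_n \end{pmatrix}$$

If the reduced row echelon form (rref) of $A$ contains a zero row then the set of vectors $S = \{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots, \mathbf{v}_n\}$ is linearly dependent.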

Proof.

See Exercise 3(d).
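The test in Proposition (3.26) can be sketched in plain Python. The function names `rref`, `columns_to_matrix` and `has_zero_row` are hypothetical, and the reduction below is a straightforward Gauss-Jordan elimination with exact fractions, offered as one possible way to compute the reduced row echelon form rather than a prescribed method.

```python
from fractions import Fraction


def rref(matrix):
    """Return the reduced row echelon form of a matrix given as a list of rows."""
    a = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(a), len(a[0])
    pivot_row = 0
    for col in range(cols):
        if pivot_row == rows:
            break
        # Look for a non-zero entry in this column at or below pivot_row.
        pivot = next((r for r in range(pivot_row, rows) if a[r][col] != 0), None)
        if pivot is None:
            continue
        a[pivot_row], a[pivot] = a[pivot], a[pivot_row]
        lead = a[pivot_row][col]
        a[pivot_row] = [x / lead for x in a[pivot_row]]  # make the leading entry 1
        for r in range(rows):
            if r != pivot_row and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[pivot_row])]
        pivot_row += 1
    return a


def columns_to_matrix(vectors):
    """Build the matrix whose columns are the given vectors."""
    return [list(row) for row in zip(*vectors)]


def has_zero_row(vectors):
    """True when rref(A) contains a zero row, where A has the vectors as columns."""
    return any(all(x == 0 for x in row) for row in rref(columns_to_matrix(vectors)))


print(has_zero_row([[1, 2], [2, 4]]))  # second vector is twice the first
print(has_zero_row([[1, 0], [0, 1]]))  # standard unit vectors in the plane
```

For $n$ vectors in $\mathbb{R}^n$ a zero row in the reduced form signals dependence, which is the situation the proposition covers.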

SUMMARY

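Let $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ be vectors in $\mathbb{R}^n$ and $k_1, k_2, k_3, \ldots$ and $k_n$ be scalars.

Consider the following linear combination

$$k_1\mathbf{v}_1 + k_2\mathbf{v}_2 + k_3\mathbf{v}_3 + \cdots + k_n\mathbf{v}_n = \mathbf{O} \qquad (*)$$

If the only solution to this (*) is $k_1 = k_2 = k_3 = \cdots = k_n = 0$ (all scalars are zero) then the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ are linearly independent.

If scalars $k_1, k_2, k_3, \ldots$ and $k_n$ which are not all zero satisfy (*) then the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ are linearly dependent.

If one or more of the vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_n$ is zero then they are linearly dependent.

If there are $m$ vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_m$ in $\mathbb{R}^n$ where $n < m$ then these vectors $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \ldots$ and $\mathbf{v}_m$ are linearly dependent.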