ProjectDescription

advertisement

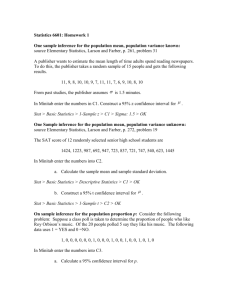

Exam project in STK4170 and STK9170 Bootstrapping and resampling, fall 2011
Mathematical Institute, University of Oslo

This project starts 5 December. Students must submit a written report by email to magne.aldrin@nr.no before 13 December at 16:00.

The project is divided into ten smaller problems. Problem 1e) counts 6 %, problems 2a) and 2b) count 12 % each, and the remaining seven problems count 10 % each in the evaluation of your project report.

The report should contain a short description of how each problem is solved, the numerical answers and any comments on the answers. All computer code should be documented in an appendix.

You may use R or other computer programs for the computations. If you use R, you can either use the bootstrap routines from the book or program from scratch. If you use a program other than R, every bootstrap routine must be programmed from scratch.

It is always wise to plot the data, but it is up to you whether you present data plots in the report, except where they are specifically asked for.

If you have problems with your computer code, it can be wise to use the R function browser(), which stops inside the code and gives you the opportunity to check the status of variables and to run single commands.

Use about 10000 bootstrap replicates when performing significance tests or constructing confidence intervals for problems that are not too computer-intensive. For some problems, for instance those using the double bootstrap, it may be necessary to use only a few hundred replicates in the inner or outer loops.

For most problems it is possible to use the bootstrap routines in the book, but for at least problems 1h) and 2a) you have to program everything yourself.

If for some problems you are unable to do the numerical computations but know the main ideas for how to solve the problem, please describe your solution in the main part of the report.

The report can be in English or Norwegian.
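As an illustration of what programming a bootstrap routine from scratch can look like, here is a minimal sketch in base R for a one-sample statistic. The function name boot_stat and the simulated vector x are hypothetical and not part of the course material, and the intervals use R's default quantile definition rather than any particular textbook rule.

```r
# Minimal from-scratch nonparametric bootstrap (base R only).
# boot_stat() and the simulated vector x are hypothetical illustrations.
boot_stat <- function(x, statistic, R = 10000) {
  n <- length(x)
  # Each replicate resamples n observations with replacement
  # and recomputes the statistic on the resample.
  replicate(R, statistic(x[sample.int(n, n, replace = TRUE)]))
}

set.seed(1)
x <- rexp(50)                     # stand-in data, not the exam data
t0 <- median(x)                   # estimate on the original sample
tstar <- boot_stat(x, median)     # bootstrap replicates of the median

alpha <- 0.05
q <- quantile(tstar, c(alpha / 2, 1 - alpha / 2), names = FALSE)
ci_percentile <- q                               # percentile interval
ci_basic <- c(2 * t0 - q[2], 2 * t0 - q[1])      # basic interval
```

Placing browser() as the first line of the function passed as statistic is one way to stop inside a replicate and inspect the resampled values.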
If you have any technical problems, you can send me an email or phone me at 22 85 26 58.

Magne Aldrin

Problem 1

The two data sets NO2 and PM10 are available at the exam web page. They both contain 50 hourly observations of air pollution measured during a two-year period at a road in Oslo. Both data sets contain a random sample of 50 observations from a much larger data set of many thousand observations, and you can ignore correlation in time. The two data sets are collected for different hours, so there is no direct correspondence between the observations in the two data sets. The NO2 data set can for instance be read into R by read.table("NO2.dat",header=T).

The first column of the NO2 data contains the (natural) logarithm of the NO2 concentration (denoted y in the data frame), whereas the first column of the PM10 data contains the logarithm of the PM10 concentration, that is, particles smaller than 10 micrometers. The next seven columns in both data sets are the logarithm of the number of cars, temperature 2 meters above the ground, wind speed, temperature difference between 25 meters and 2 meters above the ground, wind direction in degrees between 0 and 360, hour of day, and day number counted from the start of the original data period. In most problems below, the logarithm of NO2 or of PM10 will be treated as a response variable and the other variables as explanatory variables.

a) Consider the wind speed in the NO2 data. Calculate a 95 % confidence interval for the median wind speed, using the normal, basic, percentile and BCa bootstrap methods. Perform a significance test for the median m being equal to or below 1.5 (H0: m <= 1.5, H1: m > 1.5) by using the BCa confidence interval method.

b) Consider the logarithm of the hourly number of cars in the NO2 data. Test if its distribution is nonsymmetric, but this time without using a method based on confidence intervals. Calculate an adjusted p-value by using double bootstrap.
It is sufficient to use 199 bootstrap replicates in the inner and outer loops, or more if your computer is fast.

c) Calculate NO2 and PM10 by taking exp() of their logarithms. NO2 and PM10 have different levels. First, adjust NO2 by a multiplicative factor so that it gets the same mean as PM10. Then, test if PM10 and the adjusted NO2 have the same distribution.

d) Now, regress the logarithm of NO2 on all seven explanatory variables, using multiple linear regression, for instance with the R function glm(). We will focus on the regression coefficient for the logarithm of the number of cars, here called beta1. Calculate the standard 95 % confidence interval based on normal theory. Then perform a case-wise bootstrap, and construct 95 % confidence intervals for beta1 by the normal, basic, percentile and BCa methods.

e) Then perform a case-wise bootstrap, and construct a 95 % confidence interval for beta1 by the studentized method. Use only 499 or 999 bootstrap replicates here.

f) Then perform a model-based or semi-parametric bootstrap by resampling residuals, and construct 95 % confidence intervals for beta1 by the normal, basic and percentile methods. Use also the BCa method if you know how.

g) Now, also regress the logarithm of PM10 on all seven explanatory variables in the PM10 data, again using multiple linear regression. Again, we will focus on the regression coefficient for the logarithm of the number of cars, here called alpha1. In particular, we are interested in whether the number of cars has a different effect on PM10 than on NO2. Therefore, perform a case-wise bootstrap and construct a 95 % confidence interval for the difference beta1-alpha1 by the normal, basic, percentile and BCa methods. Finally, use the percentile confidence interval to find the p-value for the test H0: beta1 = alpha1, H1: beta1 not equal to alpha1.

h) Some of the explanatory variables, for instance temperature, are expected to have a non-linear relation to the logarithm of NO2.
However, with as few as 50 observations, a non-linear model may result in over-fitting. Consider the following three models of increasing complexity:

M1: All explanatory variables have linear effects, i.e. the linear model used above.
M2: Temperature at 2 meters, wind speed and wind direction have non-linear effects; the other four explanatory variables have linear effects.
M3: All explanatory variables have non-linear effects.

Models M2 and M3 can be specified as generalized additive models (GAMs) of the form y = s(x1) + b2 x2 + ε, where s(x1) is a smooth function of x1, with the functional form estimated from the data, whereas x2 is included as a linear term as in linear regression. M2 is such a combined linear/non-linear model, whereas M3 has only non-linear terms. GAM models can be fitted by the function gam() in the gam library in R. Each non-linear function then uses four degrees of freedom (four free parameters). Predictions can be performed by the predict.gam function as predict.gam(gamobj,newdata=test.data). It is also useful to look at the gam plots by first writing par(mfrow=c(3,3)) and then plot(gamobj).

Perform a 10-fold cross validation, and compare the root mean squared prediction error (called PE in the lecture notes) for the three models. Select the best model. Repeat once and compare the results.

Perform a permuted 10-fold cross validation, where the 10-fold cross validation is repeated 10 times. Select the best model.

Problem 2

Two exercises on time series. To be made during the weekend.

a) The data set TimeUnivariate is available at the exam web page, with 300 observations of a univariate time series from time t=1 to t=300. The data set can for instance be read into R by read.table("TimeUnivariate.dat",header=T).
Fit an autoregressive model to the data, using maximum likelihood, where the number of autoregressive parameters (p) is found by minimizing Akaike's Information Criterion (AIC). You can use the R function ar(), with method="mle" and a maximum of 12 autoregressive parameters. What is the optimal value of p? Find the corresponding residual root mean squared error (residual RMSE).

For the optimal value of p, estimate the RMSE you can expect for future one-step-ahead predictions. Use forward validation, predicting the observations from number 51 to 300. Comment on the difference from the RMSE you found above.

Use instead forward validation, again predicting the observations from number 51 to 300, to select the optimal order p. What is the optimal order then?

Finally, use forward validation to estimate the RMSE you can expect for future one-step-ahead predictions when the model is selected by minimizing the AIC and you also take into account the uncertainty introduced by model selection.

b) The data set TimeReg is available at the exam web page, with 300 bivariate time series observations from time t=1 to t=300. The data set can for instance be read into R by read.table("TimeReg.dat",header=T). Variable y is a response variable and variable x is an explanatory variable. This is a regression problem with an autocorrelated noise term. A plausible model is y_t = beta0 + beta1 x_t + n_t, where the noise process n_t follows an AR(1) model, i.e. n_t = φ n_{t-1} + ε_t. Our focus is on the regression parameter beta1.

Fit the model above to the data. You can use the R function arima() (corresponds to arima.mle() in Splus). Report the estimate of beta1 and its standard error. Check also if the residuals look Gaussian by the R function qqnorm().

Find an alternative estimate of the standard error of beta1 by a block bootstrap with fixed block length 10, using overlapping blocks and the circular variant of the bootstrap algorithm. Use 1000 bootstrap replicates.
Are you sure that you use overlapping blocks and a circular bootstrap? Explain why.

Now, use the stationary bootstrap to estimate the standard error of beta1, and vary the expected block length over the values 1, 2, 3, 4, 5, 10, 15, 20, 25 and 30. Use 1000 bootstrap replicates. Use the results to choose a suitable block length, and give your final estimate of the standard error of beta1.
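The difference between the two block schemes in Problem 2b) can be made concrete by looking at how the resampled time indices are generated. The following is a minimal sketch in base R under stated assumptions: the function names circular_block_index and stationary_index are hypothetical (they are not routines from the book), the data are simulated stand-ins, and the least-squares slope from lm() is used only as a quick stand-in for the arima() fit.

```r
# Index generators for two block bootstraps (base R only).
# Function names are hypothetical, not from any package.

# Circular bootstrap with overlapping blocks of fixed length l:
# any of the n time points may start a block (overlapping), and
# indices running past n wrap around to 1 (circular).
circular_block_index <- function(n, l) {
  n_blocks <- ceiling(n / l)
  starts <- sample.int(n, n_blocks, replace = TRUE)
  idx <- unlist(lapply(starts, function(s) s:(s + l - 1)))
  ((idx - 1) %% n + 1)[seq_len(n)]   # wrap around, trim to length n
}

# Stationary bootstrap: block lengths are geometric with mean l,
# again with wrap-around at the end of the series.
stationary_index <- function(n, l) {
  idx <- integer(n)
  idx[1] <- sample.int(n, 1)
  for (t in 2:n) {
    if (runif(1) < 1 / l) {
      idx[t] <- sample.int(n, 1)      # start a new block
    } else {
      idx[t] <- idx[t - 1] %% n + 1   # continue the block, circularly
    }
  }
  idx
}

# Usage on simulated stand-in data (not the exam data).
set.seed(1)
n <- 300
x <- rnorm(n)
y <- 1 + 2 * x + as.numeric(arima.sim(list(ar = 0.5), n = n))
d <- data.frame(y = y, x = x)

# Stand-in estimator: least-squares slope instead of the arima() fit.
slope <- function(dd) unname(coef(lm(y ~ x, data = dd))[2])

# Rows (y_t, x_t) are resampled jointly so the pairing is preserved.
b_star <- replicate(200, slope(d[circular_block_index(n, 10), ]))
se_block <- sd(b_star)
```

With expected block length 1, stationary_index() starts a new block at every step and so reduces to the ordinary bootstrap that ignores time dependence, which is a useful reference point when comparing standard errors across block lengths.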