Applied Statistical Models – Attachment

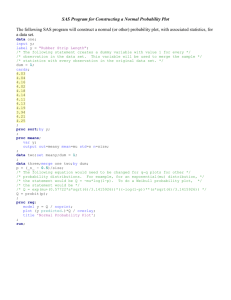

Applied Statistical Models – Appendix Part
SAS for Windows v6.12 – Introduction & Reference

Gunther Matthys
University Center for Statistics
Katholieke Universiteit Leuven

1 Introduction

1.1 The SAS-environment

Windows

When you start SAS, three windows are opened:
- the PROGRAM EDITOR, where you can type SAS-programs to create and to analyse datasets,
- the LOG-window, where you get information from the system about the execution of commands and programs,
- the OUTPUT-window, where you find the output of analyses, tests and other procedures.

In the upper left-hand corner you find the COMMAND LINE, where you can enter SAS-commands that influence or customise the SAS-session, e.g. to
- call modules and user interfaces (ASSIST, INSIGHT, …),
- manage windows (CLEAR, MANAGER, PGM, LOG, OUTPUT, …).

Working with SAS

Basically there are two ways to get SAS to do what you want:

1. With SAS-programs which you type in the PROGRAM EDITOR. A SAS-program consists of
- DATA-steps to create and edit datasets,
- PROC-steps to analyse datasets, perform tests and produce output.

Each statement (‘line’) in a SAS-program ends with a semicolon “;”. The program itself ends with “run;” and you can execute it with the <F8>-key or by clicking the icon with the little running man at the top. In the PROGRAM EDITOR you can recall previously executed programs with the <F4>-key. When a SAS-program is saved as a file, its name gets the extension “.sas”. In the following, SAS-programs that have to be typed in the PROGRAM EDITOR are indicated with the tag PGM.

2. By clicking and selecting in menu-oriented modules and interfaces. We will mainly use
- SAS/INSIGHT: exploratory data analysis,
- SAS/ASSIST: menu-oriented user interface.

1.2 Data-management in SAS

SAS-datasets are matrices: each column represents a variable and each row contains the values of one observation.

Libraries

Datasets are collected in libraries.
In fact a library-name (libname) is just a shorter name for a Windows File Manager directory in which datasets are located. By default SAS provides 2 libraries:
- WORK: default, temporary,
- SASUSER: permanent.

You can manage libraries and assign your own libnames to Windows-directories by clicking on the library button at the top. For instance, if you want to save SAS-datasets on floppy disk, you assign a libname to the directory “a:\” with the “New Library...” option.

Names of datasets are of the form
- “libname.dataset”, where libname refers to the library the dataset is located in,
- “dataset”, when the dataset is in the default library WORK (this is equivalent to “WORK.dataset”).

Names of datasets and libraries are at most 8 characters long. In Windows File Manager a SAS-dataset is a file with extension “.sd2” in the folder that is assigned to its library.

Creating SAS-datasets

If necessary you first create the library you want to put your SAS-dataset in. After that there are 2 easy ways to create datasets that SAS can handle.

1. By importing data from a text-file, an Excel-spreadsheet, etc. Call the Import Wizard with “File > Import”, make the appropriate selections and click “Next >” after each step.

2. With a SAS-program using DATA-steps. A DATA-step typically consists of the following statements:

PGM
    data libname.datasetname;
    input variablenames;
    cards;
    (data)
    ;

The “data”-line creates a dataset with the specified name in the specified library. The libname is optional; if you omit it, the dataset will be put in the WORK library.

The “input”-line determines the structure of the dataset by enumerating the names of the variables in the dataset. SAS works with 2 kinds of variables: character and numeric variables. Character variables are defined by a “$”-sign after their name.

“cards” indicates that the data follow in the DATA-step. The data must be given one line per observation and in the same order as the variable names.
You end the DATA-step with a semicolon “;” and execute it with <F8> or by clicking on the button with the running man. E.g.

PGM
    data sasuser.clouds;
    input rainfall type $;
    cards;
    1202.6 unseeded
    830.1 unseeded
    2745.6 seeded
    1697.1 seeded
    ;

You can also enter the data one after another, without starting a new line for each observation, by typing “@@” at the end of the input line. E.g.

PGM
    data sasuser.clouds;
    input rainfall type $ @@;
    cards;
    1202.6 unseeded 830.1 unseeded 372.4 unseeded
    2745.6 seeded 1697.1 seeded 1656.4 seeded
    ;

1.3 SAS/INSIGHT and SAS/ASSIST

SAS/INSIGHT

The module SAS/INSIGHT is a very useful tool to investigate the distribution of data, i.e. for exploratory data analysis. You can call it by typing “insight” in the command line and pressing Enter. Select the appropriate library and the dataset you want to explore and click “Open”. Now you see the dataset as a matrix.

Basic techniques to handle the data in INSIGHT involve selecting observations and variables by clicking on them, double-clicking to view their properties, and sorting the dataset using the data popup menu (with the arrow in the upper left-hand corner) and “Sort...”. Observations and variables can be deleted by selecting them and choosing Edit > Delete. If you just want certain observations to be excluded from calculations and/or not to appear in graphs (without deleting them), you can select them and click Edit > Observations > Exclude in Calculations and/or Edit > Observations > Hide in Graphs. You can transform variables with Edit > Variables and by selecting the appropriate transformation.

When changes have been made to the dataset (e.g. observations are deleted or a new variable is created) you can save the modified dataset with File > Save > Data.... Otherwise the original dataset remains unaltered after quitting INSIGHT.

SAS/ASSIST

SAS/ASSIST is a menu-oriented interface to perform various kinds of tests. This module is called by typing “assist” in the command line and pressing Enter.
In the following, whenever analyses have to be performed with submenus of SAS/ASSIST or SAS/INSIGHT, this will be indicated with the tags ASSIST and INSIGHT respectively.

2 Comparing two data groups

2.1 Methodology

Aim: compare two groups of data, i.e. do they have the same distribution?

Parametric techniques

Basic assumption: the relevant data are normally distributed; check for equal means.

2 distinguished cases:

Unpaired data
- check normality of both groups separately: normal density on histogram, Kolmogorov-Smirnov test, normal QQ-plot (if not normal: try a transformation of both data groups and check again)
- F-test for equal variances
- standard 2-sample t-test

Paired data (correlation; visual check with scatter plot)
- create difference-variable & check its normality: normal density on histogram, Kolmogorov-Smirnov test, normal QQ-plot (if not normal: try the difference of log-transformed data)
- one-sample t-test on difference variable (paired t-test)

Nonparametric techniques

Unpaired data
- 2-sample quantile plot with correlation coefficient
- Wilcoxon(-Mann-Whitney) rank sum test
- Terry-Hoeffding-Van der Waerden test

Paired data
- sign test
- Wilcoxon signed rank sum test

2.2 Exploratory Data Analysis

Sample distribution

In order to investigate the distribution of a sample and to check the assumption of normality one can use the following tools. All of them are available in SAS/INSIGHT.
- histogram (with normal density)
- boxplot
- empirical and normal distribution functions with Kolmogorov-Smirnov test
- normal quantile plot with correlation coefficient

Remark: when working with unpaired data it is necessary to specify a group variable in order to perform the analyses for each group separately!

INSIGHT
    #boxplot, histogram, moments and quantiles:
        Analyze > Distribution (Y)    (Y : Rainfall)
    #best fitting normal density on histogram:
        Curves > Parametric Density... > Normal - Sample Estimates/MLE
    #empirical and normal distribution functions with Kolmogorov-Smirnov test:
        Curves > Test for Distribution...
        > Normal
    #normal quantile plot:
        Graphs > QQ Plot... > Normal
    #correlation coefficient:
        Analyze > Multivariate (Y’s)    (Y : Rainfall, N_Rainfal)

Dependence

The dependence and/or similarity between paired datasets can be visualised with

INSIGHT
    #paired boxplot
        Analyze > Histogram/Bar Chart (Y)    (Y : February, March)
    #scatter plot with correlation coefficient
        Analyze > Scatter Plot (Y X)    (Y : March, X : February)
        Analyze > Multivariate (Y’s)    (Y : February March)

2.3 t-Tools

INSIGHT
    #one-sample t-test
        Analyze > Distribution (Y)
        Tables > Location Tests... > Student’s T Test
    #paired t-test
        Edit > Variables > Other... > Y-X    (Y : March, X : February, Name : Diff)
        Analyze > Distribution (Y)    (Y : Diff)
        Tables > Location Tests... > Student’s T Test

The Student’s t-test in SAS/INSIGHT gives a Student's t-statistic

$$T = \frac{\bar{Y} - \mu_0}{S/\sqrt{n}}.$$

Assuming that the null hypothesis (population mean = $\mu_0$) is true and the population is normally distributed, the t-statistic has a Student's t-distribution with $n-1$ degrees of freedom. The P-value is the probability of obtaining a Student’s t-statistic greater in absolute value than the absolute value of the observed statistic T.

ASSIST
    #paired t-test
        Data Analysis > Anova > T-tests... > Paired comparisons...
        (Active data set : Gariep, Paired variables : February March)
        Locals > Run
    #standard 2-sample t-test
        Data Analysis > Anova > T-tests... > Compare two group means...
        (Active data set : Clouds, Dependent : Rainfall, Classification : Type)
        Locals > Run

The standard 2-sample t-test in SAS/ASSIST returns the result of a 2-sided F-test for equal variances, and t-statistics with corresponding 2-sided P-values assuming either equal or unequal variances.
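The t-tools above can probably also be run as a SAS-program. The following is a minimal sketch, not part of the original text. It assumes that the datasets Clouds and Gariep are in the WORK library with the variable names used in the menus above; the dataset name GarDiff is a hypothetical name chosen for illustration. The paired test is written as a one-sample t-test on the difference variable, as in the INSIGHT steps.

```sas
/* Standard 2-sample t-test: Rainfall compared between the levels of Type. */
/* The output also contains a test for equality of the two variances.     */
proc ttest data=Clouds;
   class Type;
   var Rainfall;
run;

/* Paired t-test as a one-sample t-test on the difference variable. */
/* GarDiff is a hypothetical dataset name (at most 8 characters).   */
data GarDiff;
   set Gariep;
   Diff = March - February;
run;

/* T is the t-statistic for mean = 0, PRT its 2-sided P-value. */
proc means data=GarDiff n mean std t prt;
   var Diff;
run;
```

The difference is computed as March - February to match the Y-X transformation used in SAS/INSIGHT.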
2.4 Non-parametric alternatives

Unpaired data

Some non-parametric alternatives to the t-tools for unpaired data groups are:
- 2-sample quantile plot with correlation coefficient
- Wilcoxon(-Mann-Whitney) rank sum test
- Terry-Hoeffding-Van der Waerden test

In order to make a 2-sample quantile plot with correlation coefficient you first have to create a dataset with the ordered data; this only works when both data groups contain the same number of observations. After that you can make the quantile plot and its correlation coefficient in SAS/INSIGHT.

PGM
    /* one dataset per group; KEEP is applied before RENAME, */
    /* so it refers to the old variable name Rainfall        */
    data Seeded;
      set Clouds;
      rename Rainfall=SeRain;
      if Type="Seeded";
      keep Rainfall;
    data Unseeded;
      set Clouds;
      rename Rainfall=UnRain;
      if Type="Unseeded";
      keep Rainfall;
    /* order each group */
    proc sort data=Seeded; by SeRain;
    proc sort data=Unseeded; by UnRain;
    /* put the ordered values side by side */
    data Combine;
      merge Seeded Unseeded;
    run;

INSIGHT
    Analyze > Scatter Plot (Y X)    (Y : UnRain, X : SeRain)
    Analyze > Multivariate (Y’s)    (Y : SeRain UnRain)

ASSIST
    #Wilcoxon rank sum test
        Data Analysis > Anova > Nonparametric Anova...
        (Active data set : Clouds, Dependent : Rainfall, Classification : Type)
        > Analysis based upon : Wilcoxon scores
        Locals > Run
    #Terry-Hoeffding-Van der Waerden test
        Data Analysis > Anova > Nonparametric Anova...
        (Active data set : Clouds, Dependent : Rainfall, Classification : Type)
        > Analysis based upon : Van der Waerden scores
        Locals > Run

SAS uses normal approximations to calculate the 2-sided P-values of the Wilcoxon rank sum test and the Terry-Hoeffding-Van der Waerden test. This is done by subtracting from the test statistic its expected value under the null hypothesis and dividing the result by its standard deviation under the null hypothesis. For rather small sample sizes, however, it is more accurate to use tables for the Wilcoxon rank sum test.
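As a program-based counterpart to the two ASSIST analyses above, the following minimal sketch applies the NPAR1WAY procedure (used later in this text for the Bearings data) to the two cloud groups. It assumes that Clouds is in the WORK library with the variables Rainfall and Type.

```sas
/* Wilcoxon rank sum test and Van der Waerden test for two groups. */
/* WILCOXON and VW request analyses based on Wilcoxon scores and   */
/* Van der Waerden (normal) scores respectively.                   */
proc npar1way data=Clouds wilcoxon vw;
   class Type;
   var Rainfall;
run;
```

With only two levels of the classification variable, the output should include the normal approximation described above.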
Paired data

For paired data one can use the following non-parametric techniques in SAS/INSIGHT:
- sign test
- Wilcoxon signed rank sum test

These tests are performed on the difference variable in its distributional analysis window.

INSIGHT
    #sign test
        Tables > Location Tests... > Sign Test

The sign statistic is given by

$$M = \frac{n(+) - n(-)}{2},$$

where $n(+)$ is the number of observations with values greater than the hypothesised location $\mu_0$, and $n(-)$ is the number of observations with values less than $\mu_0$.

INSIGHT
    #Wilcoxon signed rank sum test
        Tables > Location Tests... > Signed Rank Test

The signed rank test assumes that the distribution is symmetric. The signed rank statistic is computed as

$$S = \sum_i R(i+) - \frac{n(t)\,\bigl(n(t)+1\bigr)}{4},$$

where $n(+)$ is the number of observations with values greater than $\mu_0$, $n(-)$ is the number of observations with values less than $\mu_0$, $n(t) = n(+) + n(-)$ is the number of $Y(i)$ values not equal to $\mu_0$, $R(i+)$ is the rank of $|Y(i) - \mu_0|$ after discarding $Y(i)$ values equal to $\mu_0$, and the sum is calculated for values of $Y(i) - \mu_0$ greater than 0. Average ranks are used for tied values. The P-value is the probability of obtaining (by chance alone) a signed rank statistic greater in absolute value than the absolute value of the observed statistic S.

3 One-way layout ANOVA

3.1 Testing the hypothesis of equal means

You get a visual impression of the data for the different groups with

INSIGHT
    #side-by-side boxplots
        Analyze > Box Plot/Mosaic Plot (Y)    (Y : Time, X : Type)

It is useful to check normality of the data in the different groups. This can be done as before with SAS/INSIGHT, specifying the appropriate group variable. When the assumption of normality holds (possibly for a transformation of the data) an ANOVA table with F-test can be used to test the hypothesis that all group means are equal. Otherwise non-parametric tests such as the Kruskal-Wallis test and the test of Van der Waerden can be used.

ASSIST
    #one-way ANOVA table with F-test
        Data Analysis > Anova > Analysis of variance...
        (Active data set : Bearings, Dependent : Time, Classification : Type)
        Locals > Run
    #Kruskal-Wallis test and test of Van der Waerden
        Data Analysis > Anova > Nonparametric Anova...
        (Active data set : Bearings, Dependent : Time, Classification : Type)
        > Analysis based upon : Wilcoxon scores
                                Van der Waerden scores
        Locals > Run

In SAS a $\chi^2_{k-1}$-distribution is used instead of an $F_{k-1,N-k}$-distribution to calculate the 2-sided P-value of the test of Van der Waerden. This is a good approximation when $N-k$ is big enough (i.e. when $k$ is small w.r.t. $N$), as then $(k-1)F_{k-1,N-k}$ is approximately $\chi^2_{k-1}$-distributed.

PGM
    /* one-way ANOVA table with F-test */
    proc anova data=Bearings;
      class Type;
      model Time=Type;
    run;
    /* Kruskal-Wallis test and test of Van der Waerden */
    proc npar1way data=Bearings wilcoxon vw;
      class Type;
      var Time;
    run;

3.2 Multiple comparison procedures

ASSIST
    #confidence intervals for pairwise differences
        Data Analysis > Anova > Analysis of variance...
        (Active data set : Bearings, Dependent : Time, Classification : Type)
        > Additional options > Output statistics... > Means... : Type
        > Options for means... > Comparison Tests :
              Fisher’s least-significant-difference test
              Tukey’s HSD test
              Bonferroni t test
              Scheffe’s multiple-comparison procedure
        > Specify comparison options... :
              Level of significance for comparison of means : 0.05
              Confidence intervals for differences between means
        Locals > Run
    #testing contrasts
        Data Analysis > Anova > Analysis of variance...
        (Active data set : Bearings, Dependent : Time, Classification : Type)
        > Additional options > Model hypotheses... > Contrast...
        > Select effect : Type
        > Specify number of contrasts : 1
        > Specify contrast label : 1_2
        > Supply contrast values : 1 -1 0 0 0
        Locals > Run

PGM
    /* confidence intervals for pairwise differences */
    proc anova data=Bearings;
      class Type;
      model Time=Type;
      means Type / t bon scheffe tukey cldiff alpha=.05;
    run;
    /* testing and estimating contrasts */
    proc glm data=Bearings;
      class Type;
      model Time=Type;
      contrast '1_2' Type 1 -1 0 0 0;
      estimate '1_2' Type 1 -1 0 0 0;
    run;

The "estimate"-statement provides the standard error needed to construct an individual Student’s t-confidence interval for the specified contrast.

4 Simple & multiple linear regression

4.1 Model fitting

SAS/INSIGHT is a very helpful tool to interactively fit simple as well as multiple linear regression models. The model statement or the data can be easily modified, which is especially useful to verify the influence of presumed outliers, or to include transformations of variables in the model. Moreover, all techniques to investigate distributions are available for the analysis of residuals.

Exploratory analysis

INSIGHT
    #pairwise scatterplot of the response and all covariates
        Analyze > Scatter Plot (Y X)    (Y : Y X1 X2 ..., X : Y X1 X2 ...)
    #correlation between the response and all covariates
        Analyze > Multivariate (Y’s)    (Y : Y X1 X2 ...)

Simple linear regression

For simple linear regression SAS/INSIGHT provides, among others, the following output:
- parameter estimates, with confidence intervals and significance t-tests
- $R^2$- and adjusted $R^2$-statistics
- F-test for $\beta_1 = 0$
- residuals and standardised residuals, which can be analysed in the usual way
- fitted values for given datapoints
- confidence bounds for individual means
- prediction bounds for individual predictions

INSIGHT
    #model fitting
        Analyze > Fit (Y X)    (Y : Y, X : X, Intercept)
        > Output > Tables : Model Equation
                            Summary of Fit
                            Analysis of Variance/Deviance
                            Parameter Estimates
                            95% C.I. (LR) For Parameters
        > Residual Plots : Residual by Predicted
        Apply

Individual confidence bounds for the means and prediction bounds for individual predictions are created with

    #individual confidence and prediction bounds
        Curves > Confidence Curves > Mean : 95%
                                   > Prediction : 95%

2 new variables have been added to the dataset: P_Y, the predicted or fitted values, and R_Y, the corresponding residuals. Standardised residuals, which get the name RS_Y and which should approximately have a standard normal distribution, are calculated with the following command. You can investigate these residuals and standardised residuals with the usual techniques.

    #standardized residuals
        Vars > Standardized Residual

A normal QQ-plot of the standardised residuals, and a scatterplot of the standardised residuals versus the fitted values, where about 95% of the residuals should lie between -2 and 2, are very informative about the quality of the regression model.

You can detect possible 'outliers' through their standardised residuals or through their Cook's distances, which indicate influential observations and which should be compared to the critical value of an $F_{p-1,N-p}$-distribution.

    #Cook's distances
        Vars > Cook's D

The influence of presumed outliers can be investigated by clicking on them in a graph or in the dataframe and selecting Edit > Observations > Exclude in Calculations.

When you want to apply a linear regression to a transformation of the response or the explanatory variable, you can add this transformed variable to the dataset with Edit > Variables and re-fit your regression model, including the transformed variable.

Multiple linear regression

The basic techniques to fit a multiple linear regression model are the same as for simple linear regression. However, especially the paired scatterplot and the adjusted $R^2$-statistic become more interesting for multiple covariates.
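As a reminder of why the adjusted statistic matters with several covariates, a standard definition is given here. It is not stated in the original text. $N$ is taken to be the number of observations and $p$ the number of estimated parameters including the intercept, which is an assumption about the notation used above.

$$R^2_{adj} = 1 - \frac{N-1}{N-p}\,\bigl(1 - R^2\bigr)$$

Adding a covariate never decreases $R^2$, whereas $R^2_{adj}$ decreases when the extra covariate reduces the residual sum of squares too little to compensate for the lost degree of freedom.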
After having fitted a multiple regression model, it is useful to construct a partial residual (or leverage) plot for each explanatory variable, which reflects the variation of the response variable that is induced by this explanatory variable only. This can be used as an indicator of the relative influence of each observation on the parameter estimates.

    #partial leverage plots
        Graphs > Partial Leverage

Two reference lines are displayed in each plot. For a given datapoint, its residual without the explanatory variable under consideration is the vertical distance between the point and the horizontal line; its residual with the explanatory variable is the vertical distance between the point and the fitted line.

4.2 Model selection

Regression analysis can also be performed with a SAS-procedure. Additionally, this offers the possibility to make automatic selections among multiple covariates, based on various criteria, in order to get a good regression model.

PGM
    /* regression analysis and model selection */
    proc reg data=dataset;
      model Y = X1 X2 ... / <options>;
    run;

Covariates are separated from each other with a blank. When you want to include transformations of variables (power transforms, for instance) in the “model”-statement, you first have to create them explicitly (e.g. in SAS/INSIGHT "XSQR = X*X"); then the new variables can be used in the regression procedure.

Some useful options that can be specified in the “model”-statement are:
- P: calculates predicted values from the input data and the estimated model. The printout includes the observation number, the actual and predicted values, and the residual. (If CLI, CLM, or R is specified, P is unnecessary.)
- NOINT: suppresses the intercept term that is otherwise included in the model.
- R: requests an analysis of the residuals.
  The printed output includes everything requested by the P option plus the standard errors of the prediction for the mean and of the residual, the studentised residual, and Cook's D statistic to measure the influence of each observation on the parameter estimates.
- CLI: requests the 95% upper- and lower-confidence limits for the prediction of the dependent variable for each observation. The confidence limits reflect variation in the error, as well as variation in the parameter estimates.
- CLM: prints the 95% upper- and lower-confidence limits for the expected value (mean) of the dependent variable for each observation. This is not a prediction interval because it takes into account only the variation in the parameter estimates, not the variation in the error term.
- SELECTION= : specifies the method used to select an optimal subset of covariates. This can be FORWARD (or F), BACKWARD (or B), STEPWISE, MAXR, MINR, RSQUARE, ADJRSQ, or CP.
- ADJRSQ: computes the adjusted $R^2$-statistic for each model selected. (Only available when SELECTION= RSQUARE, ADJRSQ, or CP.)
- CP: computes Mallows' $C_p$-statistic for each model selected. (Only available when SELECTION= RSQUARE, ADJRSQ, or CP.)

Furthermore, it is possible to test hypotheses about the regression parameters in a “test”-statement, to make plots of the results of the regression, and to save the output in a SAS-dataset.

PGM
    /* regression analysis, model selection, hypothesis testing, plots, and data output */
    proc reg data=dataset;
      model Y = X1 X2 ... / <options>;
      test equation <,..., equation>;
      plot variable.*variable. <...>;
      output out=newdataset predicted=pred residual=res student=stud;
    run;

The hypothesis-equations for the parameters are written with the names of the corresponding covariates. For instance, "test X1, X2 - X3 = 2;" tests whether the coefficient of X1 equals 0, and the difference between the coefficients of X2 and X3 is equal to 2.
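To make the template above concrete, here is a minimal sketch with the placeholders filled in. The dataset name mydata, the output dataset regout and the variables Y, X1, X2 and X3 are hypothetical names chosen for illustration only. Selection and testing are put in two separate procedure calls to keep each step simple.

```sas
/* Hypothetical dataset MYDATA with response Y and covariates X1, X2, X3. */

/* All-subsets selection; ADJRSQ and CP add the adjusted R-square */
/* and Mallows' Cp to the listing of each candidate model.        */
proc reg data=mydata;
   model Y = X1 X2 X3 / selection=rsquare adjrsq cp;
run;
quit;

/* Full model with residual analysis (R) and 95% limits (CLI, CLM),   */
/* the joint hypothesis from the text, and per-observation statistics */
/* saved in the hypothetical dataset REGOUT.                          */
proc reg data=mydata;
   model Y = X1 X2 X3 / r cli clm;
   test X1, X2 - X3 = 2;
   output out=regout predicted=pred residual=res student=stud;
run;
quit;
```

The "quit;" statement ends the procedure, which otherwise stays active and waits for further statements.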
In the “plot”-line you can use all variables that result from the “model”-statement, such as r., student., p., u95., u95m., etc. For instance, "plot r.*p. student.*p.;" plots the residuals and the studentised residuals versus the fitted values of the linear regression.

The “output”-statement creates an output dataset containing statistics calculated for each observation. For each statistic, specify the keyword, an equal sign, and a variable name for the statistic in the output data set. The following keywords are available: PREDICTED or P, RESIDUAL or R, L95M, U95M, L95, U95, STDP, STDR, STDI, STUDENT, COOKD, and PRESS.

5 Multivariate data analysis

5.1 Principal component analysis

Principal component analysis (PCA) is meant to find an optimal representation of a multivariate data cloud in a lower-dimensional subspace. To this end one creates uncorrelated new variables by taking linear combinations of the original ones, such that the projections of the data points upon the consecutive subspaces generated by these so-called principal components retain maximal spread (variance). For instance, the 2-dimensional representation that distinguishes best among the data is found by projecting upon the plane generated by the first 2 principal components. The interpretation of the principal components has to be inferred from the original variables' coefficients in the linear combinations that make up the principal components.

A PCA can be performed with the following SAS-procedure:

PGM
    /* principal component analysis */
    proc princomp data=dataset <options>;
      var X1 X2 ...;
    run;

Some options that can be specified are:
- cov: calculates the principal components from the variance-covariance matrix of the data instead of the correlation matrix, which is the default.
- out= newdataset: creates an output dataset that contains the principal component scores for all observations, together with the scores for the original variables.
  The principal components are given the variable names PRIN1, PRIN2, etc. This output dataset can be used e.g. in SAS/INSIGHT to plot the projection of the data cloud upon the first principal components.

5.2 Factor analysis

Factor analysis (FA) is aimed at finding underlying factors that explain contrasts and similarities between the original variables. This procedure leads to projections in lower-dimensional spaces that optimally reflect the configuration or spread of the original variables.

PGM
    /* factor analysis */
    proc factor data=dataset <options>;
      var X1 X2 ...;
    run;

Some possible options are:
- nfactors= : specifies the number of factors to be retained.
- scree: prints a scree plot of the eigenvalues.
- rotate= v: asks for a varimax rotation of the factors.
- out= newdataset: creates an output dataset that contains the factor scores for all observations, together with the scores for the original variables. The factor variables are given the names FACTOR1, FACTOR2, etc. This output dataset can be used e.g. in SAS/INSIGHT to plot the projection of the data cloud upon the first underlying factors.

A biplot gives an optimal 2-dimensional representation of the configuration of the variables, combined with the projections of the data points on the plane generated by the first 2 underlying factors. In order to create a biplot one first has to submit the following macro:

PGM
    /* This macro produces a correlation biplot of a general form.
       Last update : July 95.
       It is written by Magda Wauters (E-mail Magda.Vuylsteke@kuleuven.ac.be).
       Comments and information can be obtained on this E-mail address.
    */
    %macro biplot(
      target=cgmmwwc,   /*device for hardcopy plot */
      skore=scoreset,   /*dataset with observation scores */
      text=id,          /*label variable - must be a character variable */
      multlad=1,        /*scalingfactor for variable-arrows */
      multsco=1,        /*scalingfactor for observations */
      radius = 0,       /*min distance of visible points */
      print=0,          /*print=1 then print loadings and scores. */
      varlist=,         /* list of variables to be plotted by means of arrows */
      mean=0,           /*mean=1 then only the mean-score of the obs belonging to the
                          different categories of the text-variable will be plotted */
      x_as=FACTOR1,     /* factor for factoranalysis */
      y_as=FACTOR2      /* Other orthogonal axes are possible e.g. in can. analysis */
    );
    goptions hsize=15 cm vsize=15 cm gunit=pct ftext=zapfb htext=2;
    axis1 length=12 cm order=(-1,0,1)
          value = (tick=1 justify=c "-1:&multsco" tick=2 "0" tick=3 "+1:&multsco")
          label=("&x_as" f=zapfb h=2.5);
    axis2 length=12 cm order=(-1 to 1)
          value = (tick=1 justify=c "-1:&multsco" tick=2 "0" tick=3 "+1:&multsco")
          label=("&y_as" f=zapfb h=2.5);
    goptions targetdevice=&target;
    %annomac;

    * scaling to length = 1 of scores;
    proc means data=&skore uss noprint;
      var &x_as &y_as;
      output out=gemid uss=ussx ussy;
    data skore;
      if _n_ = 1 then set gemid;
      set &skore;
      x_as = &x_as / sqrt(ussx) * &multsco;
      y_as = &y_as / sqrt(ussy) * &multsco;
      text=&text;
    run;
    %if &mean %then %do;
      proc sort data=skore;
        by text;
      proc means data=skore noprint mean;
        by text;
        var x_as y_as;
        output out=gemid mean=x_as y_as;
      run;
      data skore;
        set gemid;
    %end;

    * Saving the loadings;
    proc corr data=&skore out=loadset noprint;
      var &x_as &y_as;
      with &varlist;
    data lading(keep=text x_as y_as);
      set ;
      if _type_ = 'CORR';
      x_as=&x_as * &multlad;
      y_as=&y_as * &multlad;
      text='+'||_name_;
    run;

    * concatenation of loadings and scores;
    data totaal;
      set skore lading;
      keep text x_as y_as;
      radius = x_as ** 2 + y_as ** 2 ;
      if sqrt(radius) ge &radius;
    data anno;
      set totaal;
      length function $ 8 style $ 8;
      retain xsys '2' ysys '2';
      if _n_=1 then
        do;
          hsys='2';
          %circle(0,0,1);
        end ;
      hsys='4';
      x=x_as; y=y_as;
      if substr(text,1,1)='+' then
        do;
          size=.8;
          iden='var';
          text=substr(text,2);
          style='zapfb ';
          function='label';
          output;
        end;
      else
        do;
          style='zapfbe';
          size=.5;
          function='label';
          output;
        end;
      if iden='var' then
        do;
          size=1;
          %line(x,y,0,0,*,1,1);
          output;
        end;
    symbol1 v=none;
    proc gplot data=totaal anno=anno;
      plot y_as*x_as/href=0 vref=0 haxis=axis1 vaxis=axis2;
    * printout of the coordinates ;
    %if &print %then %do;
      proc print data =totaal;
        var text x_as y_as;
      run;
    %end;
    footnote "Radius of the circle = 1";
    %mend biplot;
    options mlogic mprint;
    run;

After submitting the factor procedure and this biplot macro you can make a biplot with a SAS-program of the following form. After skore= you must give the name of the output dataset that contains the factor scores and that was created with out= in the factor procedure. text= specifies the variable that contains the labels of the observations (this must be a character variable), and varlist= lists the variables used in the FA.

PGM
    %biplot(
      target=cgmmwwc,     /*device for hardcopy plot */
      skore= newdataset,  /*dataset with observation scores */
      text= labelvar,     /*label variable - must be a character variable */
      multlad=1,          /*scalingfactor for variable-arrows */
      multsco=1,          /*scalingfactor for observations */
      radius = 0,         /*min distance of visible points */
      print=0,            /*print=1 then print loadings and scores. */
      varlist= X1 X2 ..., /* list of variables to be plotted by means of arrows */
      mean=0,             /*mean=1 then only the mean-score of the obs belonging to the
                            different categories of the text-variable will be plotted */
      x_as=FACTOR1,       /* factor for factoranalysis */
      y_as=FACTOR2        /* Other orthogonal axes are possible e.g. in can. analysis */
    );
    run;

6 Canonical discriminant analysis

The purpose of canonical discriminant analysis (CDA) is to find a projection of the data in a low-dimensional subspace which optimally distinguishes between different groups of multivariate data.
As such it is an application of PCA to a transformation of the data.

PGM
    /* canonical discriminant analysis */
    proc candisc data=dataset <options>;
      class classvar;
      var X1 X2 ...;
    run;

- class: specifies the variable that contains the group labels.
- var: lists the variables to be used in the CDA.

Some options:
- ncan= : specifies the number of canonical components to be retained.
- out= newdataset: creates an output dataset that contains the CDA scores for all observations, together with the scores for the original variables. The CDA scores are given the variable names CAN1, CAN2, etc. This output dataset can be used e.g. in SAS/INSIGHT to make a scatter plot of the data cloud in the canonical co-ordinate system.
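The following minimal sketch fills in the template above and plots the result without leaving the PROGRAM EDITOR. The dataset mydata, the group variable group, the covariates X1, X2, X3 and the output dataset canscore are hypothetical names chosen for illustration. The sketch assumes at least three groups, so that two canonical components exist.

```sas
/* Hypothetical dataset MYDATA: group labels in GROUP, measurements X1-X3. */
/* Retain two canonical components and save the scores CAN1 and CAN2.      */
proc candisc data=mydata ncan=2 out=canscore;
   class group;
   var X1 X2 X3;
run;

/* Scatter plot in the canonical co-ordinate system; each point is */
/* drawn with the first character of its GROUP value.              */
proc plot data=canscore;
   plot can2*can1=group;
run;
quit;
```

The same output dataset can be opened in SAS/INSIGHT for an interactive scatter plot, as described above.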