

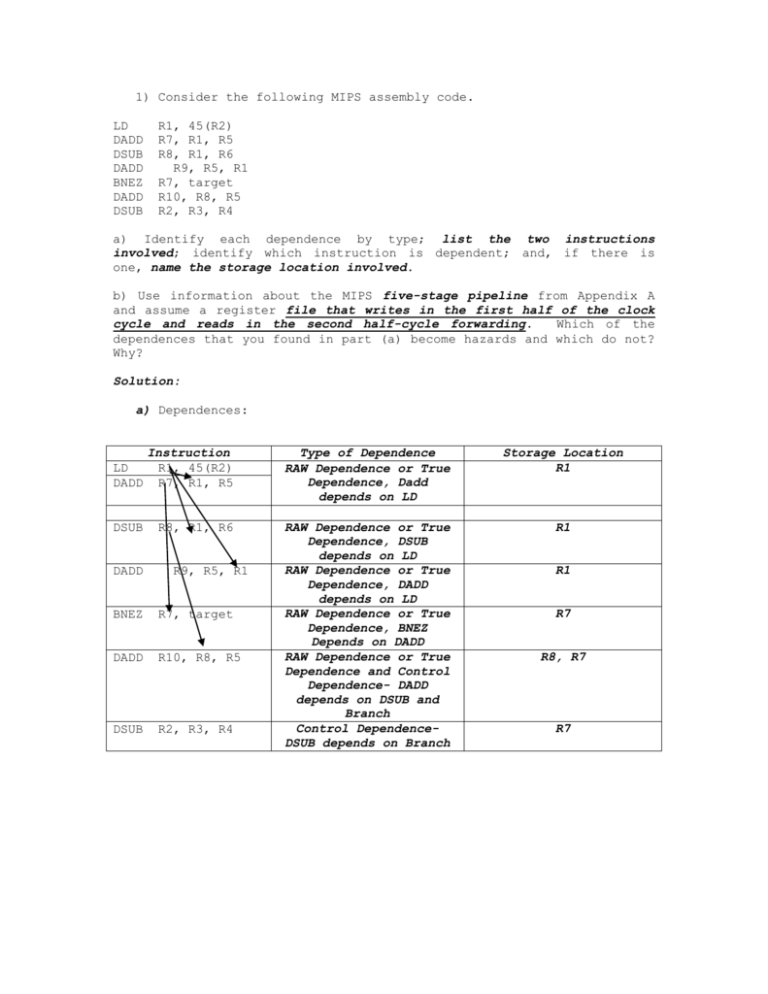

1) Consider the following MIPS assembly code.

        LD    R1, 45(R2)
        DADD  R7, R1, R5
        DSUB  R8, R1, R6
        DADD  R9, R5, R1
        BNEZ  R7, target
        DADD  R10, R8, R5
        DSUB  R2, R3, R4

a) Identify each dependence by type; list the two instructions involved; identify which instruction is dependent; and, if there is one, name the storage location involved.

b) Use information about the MIPS five-stage pipeline from Appendix A and assume a register file that writes in the first half of the clock cycle and reads in the second half-cycle ("forwarding" through the register file). Which of the dependences that you found in part (a) become hazards and which do not? Why?

Solution:

a) Dependences:

| Instruction | Type of dependence | Storage location |
|---|---|---|
| LD R1, 45(R2) | | |
| DADD R7, R1, R5 | RAW (true) dependence; DADD depends on LD | R1 |
| DSUB R8, R1, R6 | RAW (true) dependence; DSUB depends on LD | R1 |
| DADD R9, R5, R1 | RAW (true) dependence; DADD depends on LD | R1 |
| BNEZ R7, target | RAW (true) dependence; BNEZ depends on DADD | R7 |
| DADD R10, R8, R5 | RAW (true) dependence and control dependence; DADD depends on DSUB and on the branch | R8, R7 |
| DSUB R2, R3, R4 | Control dependence; DSUB depends on the branch | R7 |

b) Using the MIPS five-stage pipeline, we get the following schedule for our instructions. Assumption: the only way of forwarding is through the register file. S marks a stall cycle.

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LD R1, 45(R2) | IF | ID | EX | MEM | WB | | | | | | | | | |
| DADD R7, R1, R5 | | IF | S | S | ID | EX | MEM | WB | | | | | | |
| DSUB R8, R1, R6 | | | | | IF | ID | EX | MEM | WB | | | | | |
| DADD R9, R5, R1 | | | | | | IF | ID | EX | MEM | WB | | | | |
| BNEZ R7, target | | | | | | | IF | ID | EX | MEM | WB | | | |
| DADD R10, R8, R5 | | | | | | | | IF | S | ID | EX | MEM | WB | |
| DSUB R2, R3, R4 | | | | | | | | | | IF | ID | EX | MEM | WB |

Dependences that became hazards:

1) LD and DADD R7 (RAW dependence on R1): DADD cannot read R1 until LD writes it in cycle 5, which costs the 2 stall cycles shown in cycles 3 and 4.
2) BNEZ and DADD R10 (control dependence): this becomes a hazard because the branch must be checked and the address of the next instruction to execute must be computed before that instruction can proceed.

The remaining RAW dependences (DSUB and DADD R9 on R1, BNEZ on R7, DADD R10 on R8) do not become hazards: in each case the producing instruction has reached WB by the cycle in which the dependent instruction reads the register in ID. BNEZ reads R7 in cycle 8, the same cycle in which DADD writes it, which works because the register file writes in the first half of the cycle and reads in the second.
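The RAW entries in part (a) and the two data stall cycles in part (b) can be cross-checked mechanically. The following is a minimal Python sketch, not part of the original solution. The tuple encoding of the instructions and the function names `raw_dependences` and `data_stall_cycles` are illustrative assumptions. The stall model assumes that a consumer's ID cycle may not be earlier than its producer's WB cycle (register-file forwarding only), and it deliberately ignores the control stall after BNEZ.

```python
# Each instruction: (opcode, destination register or None, source registers).
# This encoding is a hypothetical one chosen for the sketch.
PROGRAM = [
    ("LD",   "R1",  ["R2"]),
    ("DADD", "R7",  ["R1", "R5"]),
    ("DSUB", "R8",  ["R1", "R6"]),
    ("DADD", "R9",  ["R5", "R1"]),
    ("BNEZ", None,  ["R7"]),
    ("DADD", "R10", ["R8", "R5"]),
    ("DSUB", "R2",  ["R3", "R4"]),
]


def raw_dependences(program):
    """Return (producer_index, consumer_index, register) for each RAW dependence."""
    deps = []
    last_writer = {}  # register -> index of the most recent instruction writing it
    for i, (_, dest, sources) in enumerate(program):
        for reg in sources:
            if reg in last_writer:
                deps.append((last_writer[reg], i, reg))
        if dest is not None:
            last_writer[dest] = i
    return deps


def data_stall_cycles(program):
    """Stall cycles per instruction caused by RAW dependences only.

    Assumes ID = IF + 1 and WB = ID + 3 in the five-stage pipeline, and that
    a register written in WB can be read in ID during the same cycle.
    """
    deps = raw_dependences(program)
    id_cycle = []
    stalls = {}
    for i in range(len(program)):
        earliest = 2 if i == 0 else id_cycle[-1] + 1
        needed = max(
            (id_cycle[p] + 3 for p, c, _ in deps if c == i),
            default=earliest,
        )
        id_cycle.append(max(earliest, needed))
        stalls[i] = id_cycle[i] - earliest
    return stalls
```

Under these assumptions, `raw_dependences(PROGRAM)` lists the five RAW dependences from the table, and `data_stall_cycles(PROGRAM)` reports two stall cycles for the first DADD and none for the other instructions.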
2) Construct a version of the table that we have in class for a 1/1 predictor, assuming the 1-bit predictors are initialized to NT, the correlation bit is initialized to T, and the value of d (leftmost column of the table) cycles through 0, 1, 2, 0, 1, 2. Also, note and count the number of instances of misprediction.

Solution: Consider the sequence in which d cycles through 0, 1, 2, and assume an NT value for B2 at the beginning. Each entry X/Y gives the prediction used when the last branch was not taken (X) or taken (Y). An asterisk marks a predictor that was updated after a misprediction.

| d | B1 prediction | B1 action | New B1 prediction | B2 prediction | B2 action | New B2 prediction |
|---|---|---|---|---|---|---|
| 0 | NT/NT | NT | NT/NT | NT/NT | NT | NT/NT |
| 1 | NT/NT | T | T/NT* | NT/NT | NT | NT/NT |
| 2 | T/NT | T | T/NT | NT/NT | T | NT/T* |
| 0 | T/NT | NT | T/NT | NT/T | NT | NT/T |
| 1 | T/NT | T | T/NT | NT/T | NT | NT/NT* |
| 2 | T/NT | T | T/NT | NT/NT | T | NT/T* |

Total mispredictions: 4

3) Increasing the size of a branch-prediction buffer means that it is less likely that two branches in a program will share the same predictor. A single predictor predicting a single branch instruction is generally more accurate than is the same predictor serving more than one branch instruction.

a) List a sequence of branch taken and not taken actions to show a simple example of 1-bit predictor sharing that reduces misprediction rate.

b) List a sequence of branch taken and not taken actions to show a simple example of 1-bit predictor sharing that increases misprediction rate.

c) Discuss why the sharing of branch predictors can be expected to increase mispredictions for the long instruction execution sequences of actual programs.

Solution:

a) Let's consider two branches B1 and B2, executed alternately, each alternating between TAKEN and NOT TAKEN. The next table shows the predictor state (P) before each branch, the branch action, and whether the prediction was correct. With a private 1-bit predictor, each alternating branch would be mispredicted every time in steady state. Because a single predictor is shared here, every second prediction after the first is correct, so steady-state prediction accuracy improves from 0% to 50%.

| P (before) | Branch | Action | Correct prediction? |
|---|---|---|---|
| NT | B1 | T | No |
| T | B2 | NT | No |
| NT | B1 | NT | Yes |
| NT | B2 | T | No |
| T | B1 | T | Yes |
| T | B2 | NT | No |
| NT | B1 | NT | Yes |
| NT | B2 | T | No |

b) For this part, let's consider two branches B1 and B2 where B1 is always TAKEN and B2 is always NOT TAKEN, executed alternately as in part (a). If each branch had its own 1-bit predictor, each would be predicted correctly after at most one initial misprediction. Because they share a predictor, the accuracy of our predictions is 0%. See the table below.

| P (before) | Branch | Action | Correct prediction? |
|---|---|---|---|
| NT | B1 | T | No |
| T | B2 | NT | No |
| NT | B1 | T | No |
| T | B2 | NT | No |
| NT | B1 | T | No |
| T | B2 | NT | No |
| NT | B1 | T | No |
| T | B2 | NT | No |

c) In general terms, if a predictor is shared by a set of branch instructions, then over the course of program execution the membership of that set is very likely to change. When a new branch enters the set or an old one leaves it, the branch history represented by the state of the predictor is unlikely to predict the behavior of the new set as well as it predicted the old one. The transient intervals that follow each change of set membership are therefore likely to reduce the long-term prediction accuracy of shared predictors.

4) Consider the following loop.

        bar: L.D    F2, 0(R1)
             MUL.D  F4, F2, F0
             L.D    F6, 0(R2)
             ADD.D  F6, F4, F6
             S.D    F6, 0(R2)
             ADDI   R1, R1, #8
             ADDI   R2, R2, #8
             ADDI   R3, R3, #-8
             BNEZ   R3, bar

a) Assume a single-issue pipeline. Show how the loop would look both unscheduled by the compiler and after compiler scheduling for both floating-point operation and branch delays, including any stall or idle clock cycles. What is the execution time per iteration of the result, unscheduled and scheduled? How much faster must the clock be for processor hardware alone to match the performance improvement achieved by the scheduling compiler? (Neglect the possible effects of a higher processor clock speed on memory system performance, such as an increase in the number of cycles necessary for memory system access.)
Solution:

Unscheduled:

| Instruction | Clock number |
|---|---|
| L.D F2, 0(R1) | 1 |
| Stall | 2 |
| MUL.D F4, F2, F0 | 3 |
| L.D F6, 0(R2) | 4 |
| Stall | 5 |
| Stall | 6 |
| ADD.D F6, F4, F6 | 7 |
| Stall | 8 |
| Stall | 9 |
| S.D F6, 0(R2) | 10 |
| ADDI R1, R1, #8 | 11 |
| ADDI R2, R2, #8 | 12 |
| ADDI R3, R3, #-8 | 13 |
| Stall | 14 |
| BNEZ R3, bar | 15 |
| Stall | 16 |

Total execution time in clock cycles: 16 cycles

Scheduled:

| Instruction | Clock number |
|---|---|
| L.D F2, 0(R1) | 1 |
| L.D F6, 0(R2) | 2 |
| MUL.D F4, F2, F0 | 3 |
| ADDI R1, R1, #8 | 4 |
| ADDI R3, R3, #-8 | 5 |
| ADDI R2, R2, #8 | 6 |
| ADD.D F6, F4, F6 | 7 |
| Stall | 8 |
| BNEZ R3, bar | 9 |
| S.D F6, -8(R2) | 10 |

Total execution time in clock cycles: 10 cycles

How much faster must the clock be to get this improvement using hardware alone? 16 cycles / 10 cycles = 1.6, so the clock must be 60% faster for the unscheduled code to match the performance of the scheduled code on the original hardware.

b) Assume a single-issue pipeline. Unroll the loop as many times as necessary to schedule it without any stalls, collapsing the loop overhead instructions. How many times must the loop be unrolled? Show the instruction schedule. What is the execution time per element of the result? What is the major contribution to the reduction in time per iteration?

Solution: For this problem, unrolling the loop twice (two copies of the loop body) is enough to schedule it without stalls. The loop can be unrolled further for better performance, but the goal here is to find the first unrolled schedule that has no stalls. Because each unrolled iteration processes two elements, the pointer and counter updates are doubled and the store offsets are adjusted for the earlier update of R2.

| Instruction | Clock cycle number |
|---|---|
| L.D F2, 0(R1) | 1 |
| L.D F8, 8(R1) | 2 |
| L.D F6, 0(R2) | 3 |
| L.D F12, 8(R2) | 4 |
| MUL.D F4, F2, F0 | 5 |
| MUL.D F10, F8, F0 | 6 |
| *ADDI R1, R1, #16 | 7 |
| *ADDI R3, R3, #-16 | 8 |
| ADD.D F6, F4, F6 | 9 |
| ADD.D F12, F10, F12 | 10 |
| *ADDI R2, R2, #16 | 11 |
| S.D F6, -16(R2) | 12 |
| *BNEZ R3, bar | 13 |
| S.D F12, -8(R2) | 14 |

In this exercise, we have produced 2 results in 14 cycles, which gives 7 clock cycles per element.
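The cycle totals above (16, 10, and 14) can be cross-checked with a small issue-cycle model. This is a hedged Python sketch rather than the course's method. The latency table `STALLS` is an assumption inferred from the stall pattern in the unscheduled listing (1 stall from a load to an FP operation, 3 between dependent FP operations, 2 from an FP operation to a store, 1 from an integer operation to a branch, and a 1-cycle branch delay). The instruction encoding and the function name `cycles_per_iteration` are hypothetical. The model reports total cycles only, so it may place an idle cycle differently from the hand schedule.

```python
# Stall cycles required between a producer and a dependent consumer.
# Assumed values, inferred from the stall pattern in the tables above.
STALLS = {
    ("load", "fp"): 1,
    ("fp", "fp"): 3,
    ("fp", "store"): 2,
    ("int", "branch"): 1,
}
BRANCH_DELAY = 1  # one delay slot after the branch


def cycles_per_iteration(loop):
    """loop: list of (kind, dest, sources). Returns total cycles for one pass."""
    last_write = {}  # register -> (issue cycle, kind) of its latest producer
    cycle = 0
    for kind, dest, sources in loop:
        issue = cycle + 1  # in-order, single issue
        for reg in sources:
            if reg in last_write:
                produced_at, producer_kind = last_write[reg]
                gap = STALLS.get((producer_kind, kind), 0)
                issue = max(issue, produced_at + gap + 1)
        if dest is not None:
            last_write[dest] = (issue, kind)
        cycle = issue
    # An unfilled delay slot costs one idle cycle after the branch.
    if loop[-1][0] == "branch":
        cycle += BRANCH_DELAY
    return cycle


UNSCHEDULED = [
    ("load", "F2", ["R1"]), ("fp", "F4", ["F2", "F0"]),
    ("load", "F6", ["R2"]), ("fp", "F6", ["F4", "F6"]),
    ("store", None, ["F6", "R2"]),
    ("int", "R1", ["R1"]), ("int", "R2", ["R2"]), ("int", "R3", ["R3"]),
    ("branch", None, ["R3"]),
]
SCHEDULED = [
    ("load", "F2", ["R1"]), ("load", "F6", ["R2"]),
    ("fp", "F4", ["F2", "F0"]),
    ("int", "R1", ["R1"]), ("int", "R3", ["R3"]), ("int", "R2", ["R2"]),
    ("fp", "F6", ["F4", "F6"]),
    ("branch", None, ["R3"]),
    ("store", None, ["F6", "R2"]),  # fills the branch delay slot
]
UNROLLED = [
    ("load", "F2", ["R1"]), ("load", "F8", ["R1"]),
    ("load", "F6", ["R2"]), ("load", "F12", ["R2"]),
    ("fp", "F4", ["F2", "F0"]), ("fp", "F10", ["F8", "F0"]),
    ("int", "R1", ["R1"]), ("int", "R3", ["R3"]),
    ("fp", "F6", ["F4", "F6"]), ("fp", "F12", ["F10", "F12"]),
    ("int", "R2", ["R2"]),
    ("store", None, ["F6", "R2"]),
    ("branch", None, ["R3"]),
    ("store", None, ["F12", "R2"]),  # fills the branch delay slot
]
```

With these assumed latencies, `cycles_per_iteration` returns 16 for `UNSCHEDULED`, 10 for `SCHEDULED`, and 14 for `UNROLLED`, which corresponds to 7 cycles per element for the unrolled loop.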
The major advantage in the unrolled case is that we have eliminated 4 instructions compared to not unrolling: the loop overhead instructions of one of the iterations (the instructions marked with * in the table above). Additionally, with unrolling the loop body is better suited to scheduling, which allows the stall cycle present in the scheduled original loop to be eliminated.
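Returning to Problems 2 and 3, the misprediction counts in the predictor tables can also be checked by simulation. The sketch below is a minimal Python model under stated assumptions, not the class's reference code. The branch behavior in `run_1_1_predictor` (B1 taken when d is nonzero; d set to 1 when it was 0; B2 taken when d is not 1) is an assumption taken from the usual textbook example, and it is consistent with the B1 and B2 action columns in Problem 2. The function names and the boolean encoding (True for taken) are illustrative.

```python
def run_1_1_predictor(d_values):
    """Count mispredictions of a 1/1 correlating predictor for B1 and B2.

    pred[name][0] is the 1-bit prediction used when the last branch was
    not taken, pred[name][1] the one used when it was taken.
    """
    pred = {"B1": [False, False], "B2": [False, False]}  # all NT initially
    last_taken = True  # correlation bit initialised to T
    misses = 0
    for d in d_values:
        b1_taken = d != 0          # assumed: B1 is taken when d != 0
        if d == 0:
            d = 1                  # assumed: fall-through path sets d to 1
        b2_taken = d != 1          # assumed: B2 is taken when d != 1
        for name, taken in (("B1", b1_taken), ("B2", b2_taken)):
            slot = int(last_taken)
            if pred[name][slot] != taken:
                misses += 1
                pred[name][slot] = taken  # 1-bit predictor flips on a miss
            last_taken = taken
    return misses


def count_correct(outcomes, initial=False):
    """Correct predictions of a single 1-bit predictor over a stream of outcomes."""
    state = initial  # NT initially
    correct = 0
    for taken in outcomes:
        if state == taken:
            correct += 1
        state = taken  # a 1-bit predictor always remembers the last outcome
    return correct


T, NT = True, False
# Problem 3a: B1 and B2 interleaved, each alternating T/NT.
SHARED_A = [T, NT, NT, T, T, NT, NT, T]
# Problem 3b: B1 always taken, B2 never taken, interleaved.
SHARED_B = [T, NT, T, NT, T, NT, T, NT]
```

Under these assumptions, `run_1_1_predictor([0, 1, 2, 0, 1, 2])` gives 4 mispredictions, `count_correct(SHARED_A)` gives 3 correct out of the 8 predictions shown for part (a), and `count_correct(SHARED_B)` gives 0 for part (b).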